ByteDance’s Doubao large-model team has recently developed a new sparse model architecture, UltraMem. The architecture addresses the high memory-access cost of Mixture-of-Experts (MoE) model inference, substantially improving inference speed and efficiency while lowering inference cost. At comparable model quality, UltraMem runs inference 2 to 6 times faster than MoE and can cut inference cost by up to 83%. This offers a new approach to efficient inference for large models and lays a solid foundation for building even larger models.

The ByteDance Doubao large-model team announced today that it has developed a new sparse model architecture, UltraMem. The architecture addresses the high memory-access cost of MoE (Mixture of Experts) model inference, achieving inference speeds 2 to 6 times higher than MoE and reducing inference cost by up to 83%. This progress opens a new path toward efficient inference for large models.

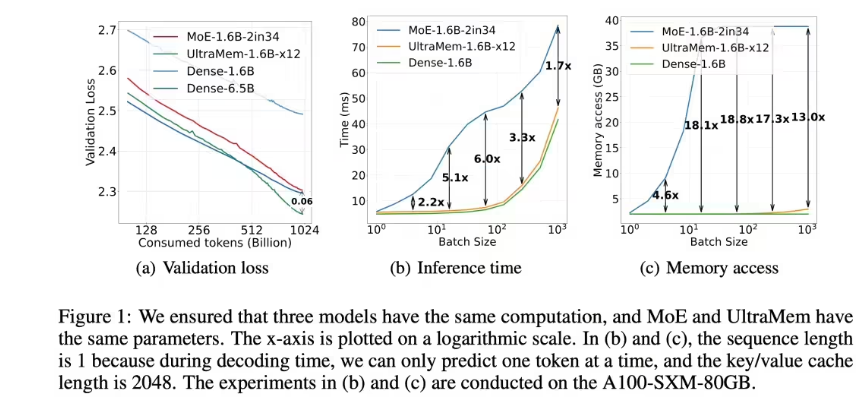

While maintaining model quality, the UltraMem architecture removes the memory-access bottleneck of MoE inference. Experiments show that with the same number of total and activated parameters, UltraMem not only outperforms MoE in model quality but also runs inference 2 to 6 times faster. Moreover, at common batch sizes, UltraMem's memory-access cost is nearly equal to that of a dense model with the same amount of computation, which significantly lowers inference cost.

The research team trained an UltraMem model with 20 million memory values. Experiments show that, with the same computing resources, this model achieves industry-leading inference speed and model performance. The result demonstrates UltraMem's favorable scaling properties and lays the technical groundwork for models with billions of values or experts.

As large models keep growing, inference cost and speed have become key constraints on their deployment. Although the MoE architecture decouples computation from parameter count, the heavy memory access it requires during inference increases latency. UltraMem addresses this problem and offers a new technical option for deploying large models at scale.
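Why MoE inference becomes memory-bound can be made concrete with a rough estimate. The sketch below is a back-of-the-envelope model, not UltraMem's or MoE's actual implementation: it assumes a hypothetical layer with 64 experts, top-2 routing, tokens that route independently and uniformly at random, and illustrative expert sizes, none of which come from the UltraMem work. It counts the expected number of distinct experts whose weights must be loaded in one decoding step and compares the resulting memory traffic with a dense layer of the same per-token compute.

```python
# Rough estimate of weight bytes read per decoding step in one MoE layer.
# All sizes below are hypothetical and chosen only for illustration;
# routing is assumed to be uniform and independent across tokens.

NUM_EXPERTS = 64                     # hypothetical expert count
TOP_K = 2                            # hypothetical experts activated per token
EXPERT_BYTES = 2 * 4096 * 1024 * 2   # hypothetical: two bf16 matrices of 4096 x 1024


def expected_distinct_experts(num_experts: int, top_k: int, batch_tokens: int) -> float:
    """Expected number of distinct experts touched by a batch, assuming each
    token independently picks top_k distinct experts uniformly at random."""
    # A given expert is selected by one token with probability top_k / num_experts.
    p_not_chosen = 1.0 - top_k / num_experts
    # Linearity of expectation: sum over experts of P(touched by at least one token).
    return num_experts * (1.0 - p_not_chosen ** batch_tokens)


def moe_bytes_per_step(batch_tokens: int) -> float:
    # Every expert touched by at least one token in the batch must be loaded once.
    return expected_distinct_experts(NUM_EXPERTS, TOP_K, batch_tokens) * EXPERT_BYTES


def dense_bytes_per_step() -> float:
    # A dense layer with the same per-token compute holds TOP_K experts' worth
    # of weights and is loaded once per step regardless of batch size.
    return float(TOP_K * EXPERT_BYTES)


if __name__ == "__main__":
    for batch in (1, 8, 32, 128, 512):
        touched = expected_distinct_experts(NUM_EXPERTS, TOP_K, batch)
        ratio = moe_bytes_per_step(batch) / dense_bytes_per_step()
        print(f"batch={batch:4d}  experts touched={touched:5.1f}  "
              f"MoE/dense memory traffic={ratio:5.1f}x")
```

Under these assumptions a single token reads only 2 experts, matching the dense baseline, but at a few hundred tokens per batch nearly all 64 experts are touched, so memory traffic approaches 32 times that of the compute-equivalent dense layer. This is the kind of gap the article says UltraMem closes; the sketch does not model UltraMem itself.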

The development of UltraMem marks significant progress in large-model inference technology, provides strong technical support for the broader application of large models, and suggests that the era of large models is about to arrive. Its performance and cost-effectiveness are expected to drive the adoption and development of large models in more fields.