Google recently launched three new models in its Gemini 2.0 series: the standard Gemini 2.0 Flash, the low-cost Gemini 2.0 Flash-Lite, and the experimental Gemini 2.0 Pro. Each targets a different trade-off between performance and cost for users and developers. This article describes the features, benchmark results, and pricing of the three models, and closes with a brief outlook on where Google's AI efforts may be heading.

With this release, Google's model family now covers three Gemini 2.0 tiers. Flash is the general-purpose model, Flash-Lite is the budget option, and Pro is an experimental model for the most demanding tasks, so developers can choose the balance of performance and cost that fits their workload.

Gemini 2.0 Flash was first released in December last year and is now generally available, with higher rate limits and improved performance. Gemini 2.0 Flash-Lite is a low-cost variant aimed at developers and is currently in public preview through the API.

Gemini 2.0 Pro is an experimental model designed for complex prompts and coding tasks. Its context window extends to 2 million tokens, twice that of the Flash version.

All three models accept images and audio as input, but for now they produce text output only. Google plans to add image, audio, and live video capabilities to the Flash and Pro models in the coming months.

According to Google's own benchmarks, Gemini 2.0 Pro outperforms its predecessors in almost every category. On math tasks, it scored 91.8% on the MATH benchmark and 65.2% on HiddenMath, well ahead of the Flash version.

On OpenAI's SimpleQA test, Gemini 2.0 Flash scored 29.9%, while the Pro model scored 44.3%. This indicates that Gemini 2.0 Pro answers factual questions more accurately.

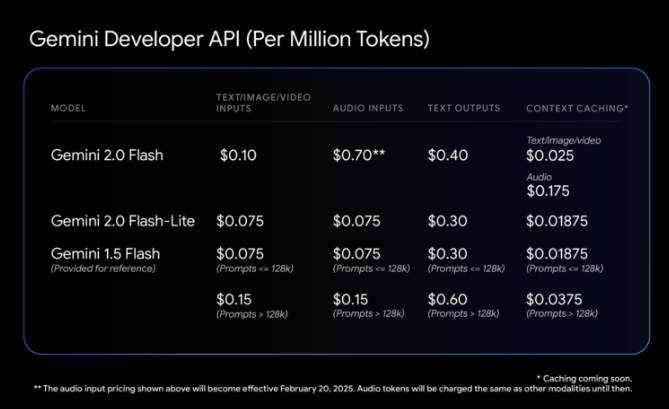

Google has also adjusted its API pricing, removing the previous price distinction between short-context and long-context queries. As a result, mixed workloads (text and images) may cost less than they did with Gemini 1.5 Flash, despite the performance improvements. Gemini 2.0 Flash is priced at US$0.10 per million input tokens and US$0.40 per million output tokens. The cheaper Gemini 2.0 Flash-Lite costs US$0.075 per million tokens for text, image, and video input and US$0.30 per million tokens for text output.
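To make the flat per-token pricing concrete, the following minimal sketch estimates the cost of a workload at the Flash-Lite rates quoted above. The function name `estimate_cost` and the example token counts are hypothetical and chosen only for illustration; the sketch also assumes the quoted prices apply per million tokens and ignores free tiers, audio input, and any other pricing details not covered in this article.

```python
# Flash-Lite prices as quoted in the article, in USD per million tokens.
# Assumption: the same flat rate applies regardless of prompt length.
INPUT_PRICE_PER_M = 0.075
OUTPUT_PRICE_PER_M = 0.30


def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float = INPUT_PRICE_PER_M,
                  output_price_per_m: float = OUTPUT_PRICE_PER_M) -> float:
    """Estimate the USD cost of a workload under flat per-token pricing."""
    input_cost = input_tokens / 1_000_000 * input_price_per_m
    output_cost = output_tokens / 1_000_000 * output_price_per_m
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical workload: 10 million input tokens, 2 million output tokens.
    cost = estimate_cost(10_000_000, 2_000_000)
    print(f"Estimated cost: ${cost:.2f}")
```

Because the short-context and long-context tiers are gone, a single pair of rates is enough. Passing different values for `input_price_per_m` and `output_price_per_m` allows the same comparison for another model.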

While Gemini 2.0 Flash is priced higher than the previous generation, the new Flash-Lite model offers better performance at the previous generation's price, filling the gap between price and performance.

In addition, Google has updated the Gemini app and made the Gemini 2.0 series fully available in it, aiming to give users a richer experience.

All models are available through Google AI Studio and Vertex AI, as well as in Google's premium chatbot, Gemini Advanced, on desktop and mobile devices.

Official blog: https://blog.google/technology/google-deepmind/gemini-model-updates-february-2025/

Key points:

Google has launched three new Gemini 2.0 models, Flash, Flash-Lite, and Pro, to meet different needs.

Gemini 2.0 Pro performed well in math and factual accuracy tests, with significantly higher scores than previous generations.

API pricing adjustments make mixed workloads more cost-competitive, while Flash-Lite fills a gap in the lineup with better performance at the previous generation's price.

In short, the Gemini 2.0 release shows Google continuing to iterate on its large language models while targeting a range of market needs. As multimodal features mature and pricing is refined, the Gemini series is likely to take on a more prominent position in the AI field.