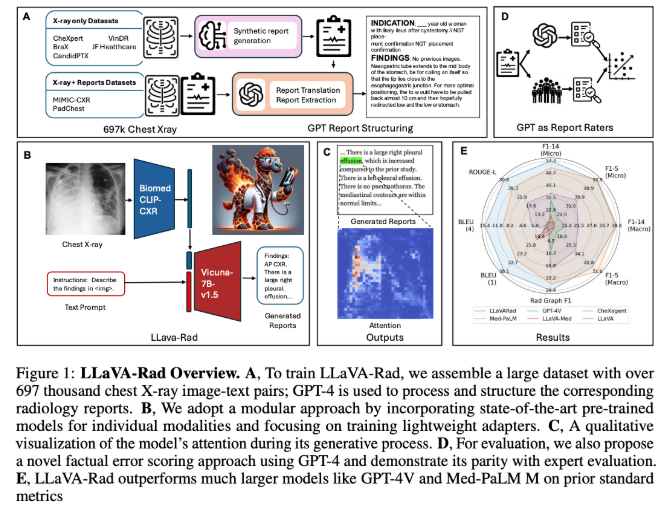

Microsoft Research and its collaborators recently released LLaVA-Rad, a small multimodal model designed to make clinical radiology report generation more efficient. The model reflects progress in medical image processing and opens up new possibilities for applying AI in radiology.

In biomedicine, research built on large foundation models has shown promise. Small multimodal models improve efficiency, but they still face challenges in balancing resource requirements against performance. LLaVA-Rad targets this gap: it uses modular training, focuses on chest X-ray images, and is accompanied by CheXprompt, a metric introduced to address the difficulty of evaluating generated reports.

The release of LLaVA-Rad advances the use of foundation models in clinical settings by offering an efficient, lightweight solution for radiology report generation.

Project address: https://github.com/microsoft/LLaVA-Med

Key points:

LLaVA-Rad is a small multimodal model from a Microsoft research team, focused on generating radiology reports.

The model was trained on 697,435 chest X-ray image-report pairs, with the goal of delivering strong performance at low computational cost.

CheXprompt is the accompanying automatic scoring metric, which helps address the problem of evaluating generated reports in clinical applications.

In short, the launch of LLaVA-Rad is a promising development for medical image processing.