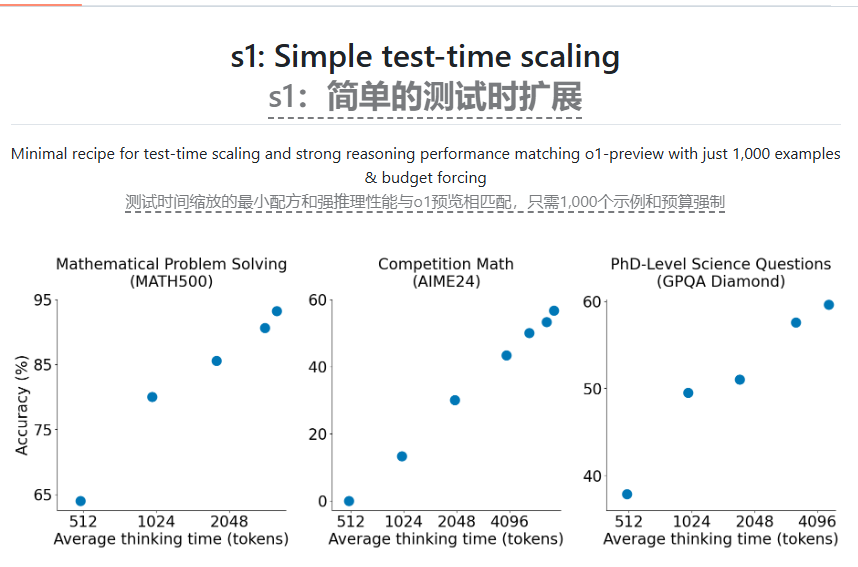

Researchers from Stanford University and the University of Washington recently trained an AI reasoning model called s1 for less than $50. On math and coding benchmarks, the model performs comparably to OpenAI's o1 and DeepSeek's R1. The result has raised new questions about the commoditization of AI models and has caused concern among large AI labs.

The team used distillation, a technique that transfers reasoning capabilities from one model to another. They started from an off-the-shelf base model and trained it on outputs from Google's Gemini 2.0 Flash Thinking Experimental model. The process was both inexpensive and fast.

Large AI labs, however, are uneasy about their models being replicated at low cost. Meta, Google and Microsoft plan to invest hundreds of billions of dollars over the next two years to train next-generation AI models and consolidate their market positions.

The s1 results suggest that strong reasoning performance can be achieved with a relatively small dataset and supervised fine-tuning, pointing to new directions for future AI research.

Paper: https://arxiv.org/pdf/2501.19393

Code: https://github.com/simplescaling/s1

Key points:

The s1 model cost less than US$50 to train, yet its performance is comparable to that of top reasoning models.

Using distillation, the research team extracted reasoning capabilities from existing models, and training was fast and efficient.

Large AI labs have expressed concern about low-cost replication of their models and plan to focus future investment on AI infrastructure.