OpenAI recently began showing a more detailed reasoning process for its latest reasoning model, o3-mini. The move is widely seen as a response to growing pressure from competitor DeepSeek-R1, and it marks an important shift in OpenAI's approach to model transparency.

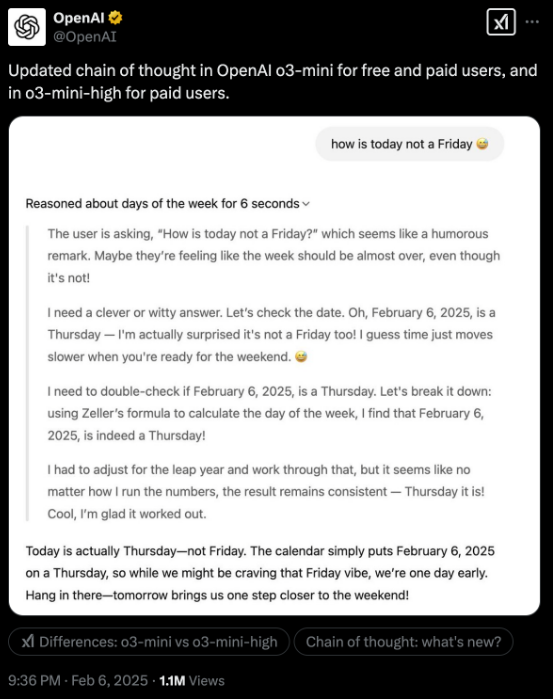

OpenAI previously treated the "chain of thought" (CoT) as a core competitive advantage and kept it hidden. However, now that open models such as DeepSeek-R1 expose their full reasoning traces, this closed strategy has become a weakness for OpenAI. Although the new version of o3-mini still does not reveal the raw reasoning tokens, it presents the reasoning process more clearly than before.

OpenAI is also working to catch up on performance and cost. o3-mini is priced at $4.40 per million output tokens, far below the $60 charged for the earlier o1 model and close to the $7 to $8 that U.S. providers charge for DeepSeek-R1. Meanwhile, o3-mini outperforms its predecessors on multiple reasoning benchmarks.

Hands-on tests suggest that o3-mini's more detailed reasoning display does make the model more practical. When working with unstructured data, users can follow the model's reasoning more easily and refine their prompts to get more accurate results.

OpenAI CEO Sam Altman recently admitted that the company has been "on the wrong side of history" in the open-source debate. With DeepSeek-R1 being adopted and improved by many organizations, any future changes to OpenAI's open-source strategy are worth watching.

OpenAI's moves toward greater transparency and lower costs show that it continues to innovate and compete in the AI field.