The "Daily New" fusion model recently launched by SenseTime marks a major advance in multimodal information processing. The model not only makes significant progress in jointly processing text, images, video, and other information types, but also achieves a qualitative leap in deep reasoning, pointing to a new direction for the industry.

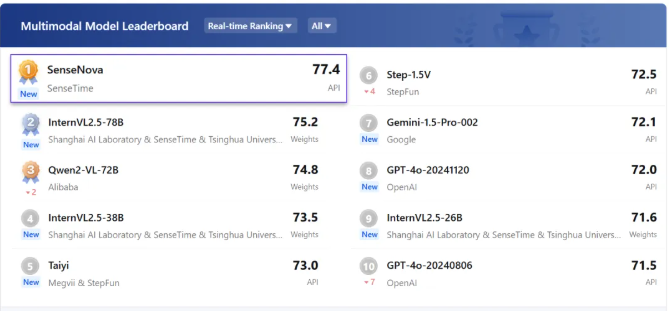

According to the "2024 Annual Report on Chinese Large Model Benchmark Evaluation" released by SuperCLUE, an authoritative domestic evaluation organization, SenseTime's "Daily New" fusion model tied for first place among domestic models with a score of 68.3, demonstrating its leading position among Chinese large models. The model also performed well in OpenCompass's multimodal evaluation, scoring significantly higher than GPT-4o, which further strengthens its competitiveness internationally.

The success of the "Daily New" fusion model stems from SenseTime's substantial breakthrough in native multimodal fusion training, which lets the model deeply integrate text, images, video, and other information and offers the industry a new approach. In SuperCLUE's evaluation, the model performed well in both liberal arts and science. In liberal arts it ranked first in the world with 81.8 points; in science it won a gold medal, with the calculation dimension ranking first in China at 78.2 points. Together these results demonstrate its strength across disciplines.

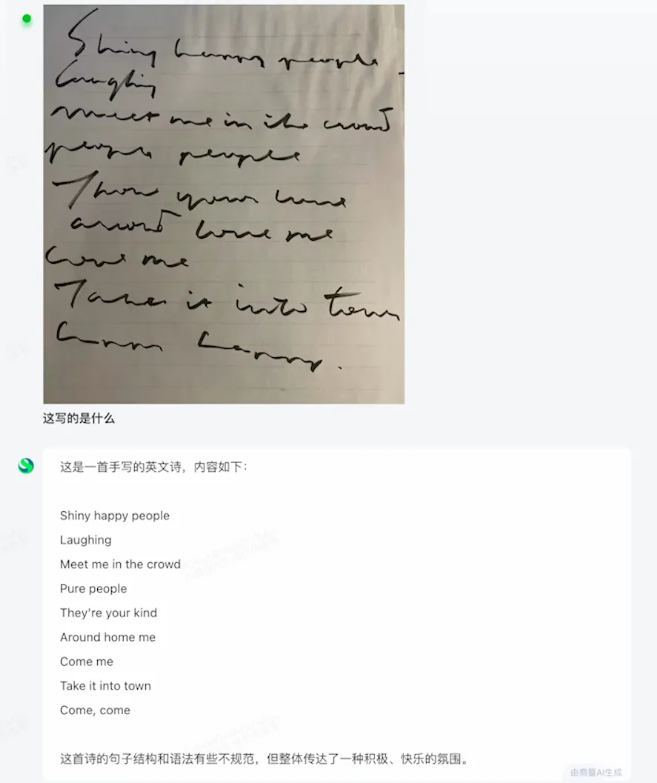

What is unique about the "Daily New" fusion model is its ability to process multiple kinds of complex information at once. Whether recognizing blurry text or quickly analyzing data charts, the model provides in-depth reasoning support. In practical applications it shows particular advantages in autonomous driving, video interaction, office work, education, finance, and industrial manufacturing, bringing revolutionary changes to these industries.

During development, SenseTime used a large amount of interleaved image-text data and built bridges between modalities through advanced synthesis techniques, strengthening the model's understanding of multimodal information. SenseTime also constructed a rich set of cross-modal tasks, which provides a solid foundation for training the "Daily New" fusion model. With this training method, the model can respond effectively to user needs across a variety of business scenarios, creating a virtuous cycle between real-world deployment and iteration of the base model.

Key points:

The "Daily New" fusion model launched by SenseTime delivers significant improvements in multimodal information processing and deep reasoning, and took first place on two authoritative evaluation lists.

The model performs strongly in both liberal arts and science, ranking first in the world in liberal arts and first in China in science, which demonstrates its strength across disciplines.

The "Daily New" fusion model suits many fields, such as autonomous driving, finance, and online education, where its strong multimodal processing capabilities are bringing revolutionary changes.