With the rapid development of artificial intelligence, large language models (LLMs) play an increasingly important role in modern applications. Whether in chatbots, code generators, or other natural language processing tasks, LLM capabilities have become a core driver. However, as models grow in scale and complexity, inference efficiency has become a prominent problem: when processing large-scale data and complex computations, latency and resource consumption are bottlenecks that urgently need to be addressed.

As a core component of LLMs, the attention mechanism directly affects inference efficiency. However, existing attention implementations such as FlashAttention and SparseAttention often struggle with diverse workloads, dynamic input patterns, and limited GPU resources. High latency, memory bottlenecks, and low resource utilization severely restrict the scalability and responsiveness of LLM inference. Developing an efficient and flexible solution has therefore become a focus of current research.

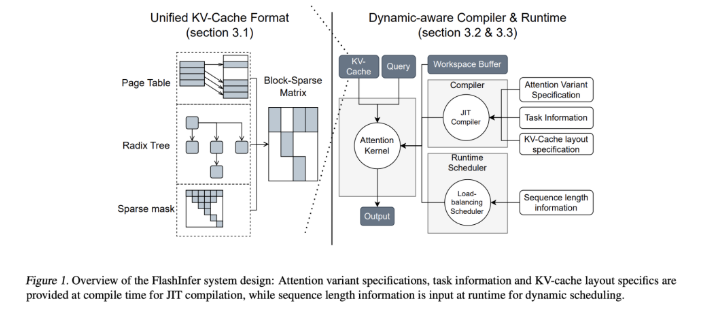

To address this challenge, researchers from the University of Washington, NVIDIA, Perplexity AI, and Carnegie Mellon University jointly developed FlashInfer, a library and kernel generator built specifically for LLM inference. It provides high-performance GPU kernels for a range of attention mechanisms, including FlashAttention, SparseAttention, and PagedAttention, as well as for sampling. FlashInfer's design emphasizes both flexibility and efficiency, targeting the key challenges of LLM inference serving and offering a practical solution for running large language models at scale.

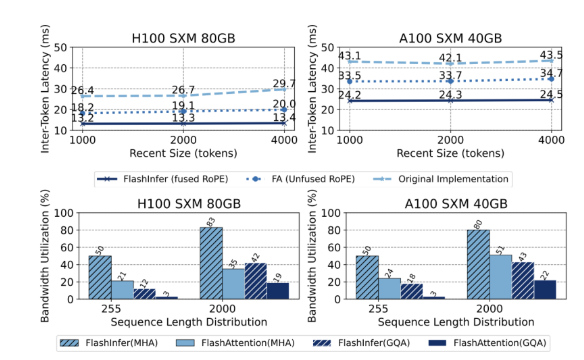

FlashInfer's core technical features include the following. First, it offers comprehensive attention kernel coverage, including prefill, decode, and append attention, and is compatible with various KV-cache formats, improving performance in both single-request and batched serving scenarios. Second, by combining grouped-query attention (GQA) with fused rotary position embedding (RoPE) attention, FlashInfer delivers large speedups for long-prompt decoding, reaching a 31x speedup over vLLM's PagedAttention implementation. Third, its dynamic load-balancing scheduler adapts to changes in the input, reducing GPU idle time and keeping resources efficiently used. Finally, its compatibility with CUDA Graphs makes it well suited to production environments.
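To make the GQA and paged KV-cache ideas above concrete, here is a minimal pure-Python sketch of single-token decode attention. It is an illustration under stated assumptions, not FlashInfer's API or kernel: the page layout (`pages[page][slot][kv_head]`), the `PAGE_SIZE` value, and the function name `gqa_decode_paged` are all hypothetical. It shows two things: several query heads reading the same KV head, which is what allows a GQA-aware kernel to reuse each loaded KV entry across a whole group, and a page table translating a token's logical position into a physical page and slot.

```python
import math
from typing import List

Vector = List[float]
# Hypothetical toy layout (not FlashInfer's): pages[page_id][slot][kv_head] -> vector
Pages = List[List[List[Vector]]]

PAGE_SIZE = 4  # tokens per page; an assumed value for illustration


def gqa_decode_paged(q: List[Vector], k_pages: Pages, v_pages: Pages,
                     page_table: List[int], seq_len: int) -> List[Vector]:
    """Decode-step attention for one request with grouped-query heads.

    q:          one query vector per query head for the newly generated token
    page_table: physical page ids holding this request's tokens, in logical order
    seq_len:    number of valid tokens cached for this request (must be >= 1)
    """
    num_qo_heads = len(q)
    num_kv_heads = len(k_pages[page_table[0]][0])
    if num_qo_heads % num_kv_heads != 0:
        raise ValueError("query heads must be a multiple of KV heads")
    group_size = num_qo_heads // num_kv_heads
    head_dim = len(q[0])
    scale = 1.0 / math.sqrt(head_dim)

    out: List[Vector] = []
    for h in range(num_qo_heads):
        kv_h = h // group_size  # all query heads in a group share this KV head
        scores: List[float] = []
        values: List[Vector] = []
        for t in range(seq_len):
            # Page-table lookup: logical position t -> (physical page, slot).
            page = page_table[t // PAGE_SIZE]
            slot = t % PAGE_SIZE
            k = k_pages[page][slot][kv_h]
            scores.append(scale * sum(a * b for a, b in zip(q[h], k)))
            values.append(v_pages[page][slot][kv_h])
        # Numerically stable softmax, then a weighted sum of the values.
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[d] for w, v in zip(weights, values)) / total
                    for d in range(head_dim)])
    return out
```

Because tokens are located through `page_table`, a request's cache can live in non-contiguous pages (for example pages `[1, 0]`) and still produce the same result as contiguous storage. A real GPU kernel would process all heads of a group together so each KV page is read from memory once; this loop-based version recomputes per head for clarity.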

In benchmarks, FlashInfer significantly reduces latency, especially for long-context inference and parallel generation. On an NVIDIA H100 GPU, it achieves a 13-17% speedup on parallel generation tasks. Its dynamic scheduler and optimized kernels substantially improve bandwidth and FLOP utilization, keeping the GPU efficiently occupied whether sequence lengths are uniform or highly uneven. These advantages make FlashInfer an important building block for LLM serving frameworks.
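The load-balancing idea behind handling uneven sequence lengths can be illustrated with a simple scheduler. The sketch below is a generic split-KV approach with greedy longest-first assignment, not FlashInfer's actual scheduling algorithm; the function names, chunk size, and worker count are hypothetical. It splits each request's KV range into bounded chunks so that one long request no longer dictates how long the whole batch takes.

```python
import heapq
from typing import Dict, List, Tuple

# (request_id, start_token, end_token) -- a hypothetical unit of attention work
WorkItem = Tuple[int, int, int]


def split_into_chunks(seq_lens: List[int], chunk_size: int) -> List[WorkItem]:
    """Split each request's KV range into chunks of at most chunk_size tokens."""
    items: List[WorkItem] = []
    for rid, n in enumerate(seq_lens):
        for start in range(0, n, chunk_size):
            items.append((rid, start, min(start + chunk_size, n)))
    return items


def assign_balanced(items: List[WorkItem],
                    num_workers: int) -> Dict[int, List[WorkItem]]:
    """Greedy longest-first assignment: each chunk goes to the least-loaded worker."""
    heap = [(0, w) for w in range(num_workers)]  # (current load in tokens, worker id)
    heapq.heapify(heap)
    assignment: Dict[int, List[WorkItem]] = {w: [] for w in range(num_workers)}
    for item in sorted(items, key=lambda it: it[2] - it[1], reverse=True):
        load, w = heapq.heappop(heap)
        assignment[w].append(item)
        heapq.heappush(heap, (load + item[2] - item[1], w))
    return assignment


def max_load(assignment: Dict[int, List[WorkItem]]) -> int:
    """Tokens processed by the busiest worker, which bounds the batch's latency."""
    return max(sum(end - start for _, start, end in work)
               for work in assignment.values())
```

With `seq_lens = [4096, 256, 256, 256]` and four workers, giving each request its own worker leaves the busiest worker with 4096 tokens, while splitting into 512-token chunks brings the maximum per-worker load down to 1280 tokens. The trade-off is that chunks of one request handled by different workers yield partial softmax results that must be merged afterwards, for example by rescaling each chunk's output with its log-sum-exp.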

As an open-source project, FlashInfer not only offers efficient solutions to LLM inference challenges but also invites further collaboration and innovation from the research community. Its flexible design and ease of integration allow it to adapt to evolving AI infrastructure needs and to keep pace with emerging challenges. With contributions from the open-source community, FlashInfer is likely to play a growing role in the development of AI technology.

Project repository: https://github.com/flashinfer-ai/flashinfer

Key points:

FlashInfer is a newly released library for large language model inference that can significantly improve efficiency.

This library supports multiple attention mechanisms, optimizes GPU resource utilization and reduces inference latency.

As an open-source project, FlashInfer welcomes contributions from researchers to advance innovation in AI infrastructure.