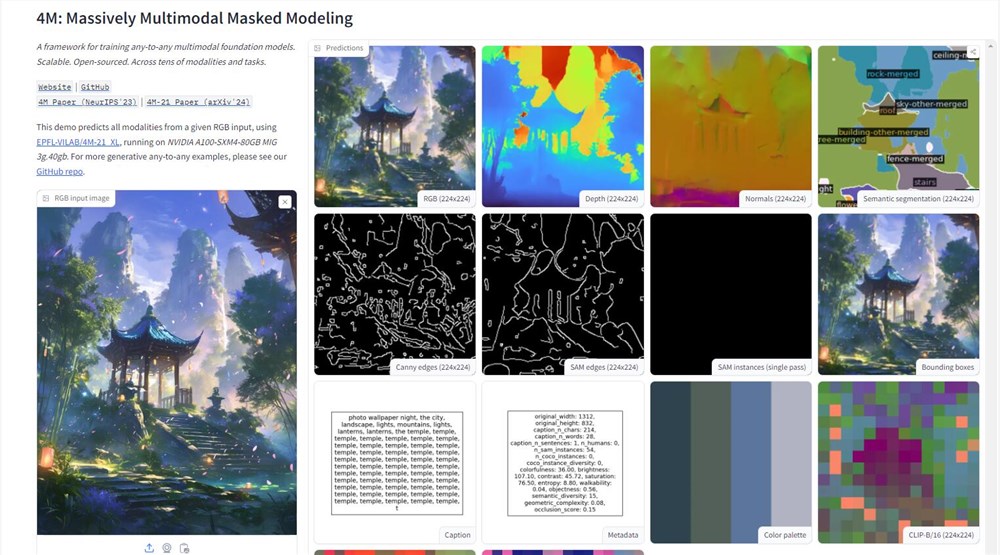

Apple has taken an important step in artificial intelligence by releasing a public demo of its 4M model on the Hugging Face platform. This multimodal AI model can process several types of data, including text, images and 3D scenes. After uploading a picture, users can obtain derived outputs such as depth maps and line drawings of it, which marks a notable advance in how Apple applies its AI technology.

The technical core of the 4M model is its training method, "Massively Multimodal Masked Modeling", the four Ms that give the model its name. This method lets the model handle multiple visual modalities at once by converting image, semantic and geometric information into a unified set of tokens, so that different modalities can be connected seamlessly. The design improves the versatility of the model and opens up new possibilities for future multimodal AI applications.

The move breaks with Apple's long-standing tradition of secrecy in R&D and puts its technical strength on display on an open source AI platform. By opening up the 4M model, Apple not only shows how advanced its AI technology is, but also extends an olive branch to the developer community, hoping to build a thriving ecosystem around 4M. It also hints at more intelligent applications within the Apple ecosystem, such as a smarter Siri and a more efficient Final Cut Pro.

However, the launch of the 4M model also raises challenges around data practices and AI ethics. 4M is a data-intensive model, so Apple will need to consider seriously how to protect user privacy while pushing the technology forward. Apple has long presented itself as a protector of user privacy, and it now needs to find a balance between technological innovation and user trust.

In terms of training, 4M randomly selects tokens: one subset of the tokens is used as the input and another subset as the target, which keeps the training objective scalable. Because both pictures and text are represented as discrete tokens, 4M can treat them in the same way, which greatly improves the flexibility and adaptability of the model.
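The random input/target split described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Apple's or EPFL's implementation: the function name `sample_input_and_target`, the `(modality, id)` token format, the fixed budgets and the uniform sampling are all hypothetical choices made for the example.

```python
import random


def sample_input_and_target(tokens, num_input, num_target, seed=None):
    """Randomly pick two disjoint subsets of a token sequence.

    One subset is shown to the model as input, the other is what the
    model is asked to predict. Positions are kept so the model could
    know where each token came from. (Hypothetical helper.)
    """
    if num_input + num_target > len(tokens):
        raise ValueError("budgets exceed the number of available tokens")
    rng = random.Random(seed)
    positions = list(range(len(tokens)))
    rng.shuffle(positions)
    input_pos = sorted(positions[:num_input])
    target_pos = sorted(positions[num_input:num_input + num_target])
    inputs = [(p, tokens[p]) for p in input_pos]
    targets = [(p, tokens[p]) for p in target_pos]
    return inputs, targets


# Made-up token ids: an image, its depth map and its caption,
# already converted into one sequence of (modality, id) tokens.
tokens = [
    ("rgb", 17), ("rgb", 402), ("rgb", 88),
    ("depth", 5), ("depth", 91),
    ("caption", 1203), ("caption", 77), ("caption", 9),
]
inputs, targets = sample_input_and_target(tokens, num_input=3, num_target=2, seed=0)
print("input tokens: ", inputs)
print("target tokens:", targets)
```

Because the input and target budgets are fixed regardless of how many modalities are present, the cost of a training step in this sketch does not grow as more modalities are added, which is the sense in which such an objective scales.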

The training data for the 4M model comes from CC12M (Conceptual 12M), a large open dataset of image-text pairs. Although the dataset is rich, its annotations are incomplete. To solve this problem, the researchers adopted a weakly supervised pseudo-labeling approach: they ran CLIP, MaskRCNN and other models over the dataset to generate predictions, then converted those predictions into tokens, laying a solid foundation for 4M's multimodal compatibility.
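The pseudo-labeling idea can be illustrated with a toy pipeline. Everything below is a stand-in chosen for the example: `fake_depth_model` and `fake_caption_model` are trivial functions rather than real networks such as CLIP, and `quantize` is a deliberately simple tokenizer, not the one used for 4M.

```python
def pseudo_label(image, labelers, tokenizers):
    """Run every labeler on the image and tokenize each prediction."""
    sample = {}
    for modality, labeler in labelers.items():
        prediction = labeler(image)
        sample[modality] = tokenizers[modality](prediction)
    return sample


def quantize(values, num_bins=4, max_value=255):
    """Toy tokenizer: map each value in [0, max_value] to a bin index."""
    return [min(int(v * num_bins / (max_value + 1)), num_bins - 1) for v in values]


def fake_depth_model(image):
    # Stand-in for a real depth network: inverts pixel brightness.
    return [255 - px for px in image]


def fake_caption_model(image):
    # Stand-in for a real captioning or tagging network.
    return "bright" if sum(image) / len(image) > 127 else "dark"


labelers = {"depth": fake_depth_model, "caption": fake_caption_model}
tokenizers = {
    "depth": quantize,
    "caption": lambda text: [ord(c) for c in text],  # character codes as tokens
}

image = [0, 64, 128, 255]  # a tiny grayscale "image" as a flat list
sample = pseudo_label(image, labelers, tokenizers)
print(sample)
```

The point of the sketch is the shape of the pipeline: each missing annotation is filled in by a model's prediction, and every prediction ends up as a list of discrete tokens that can be mixed with the tokens of other modalities.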

After extensive experimentation and testing, 4M has shown that it can perform multimodal tasks directly, without extensive task-specific pre-training or fine-tuning. It is like giving AI a multimodal Swiss Army knife that lets it handle a variety of challenges flexibly. The launch of 4M not only demonstrates Apple's technical strength in AI, but also points to a direction for the future development of AI applications.

Demo address: https://huggingface.co/spaces/EPFL-VILAB/4M