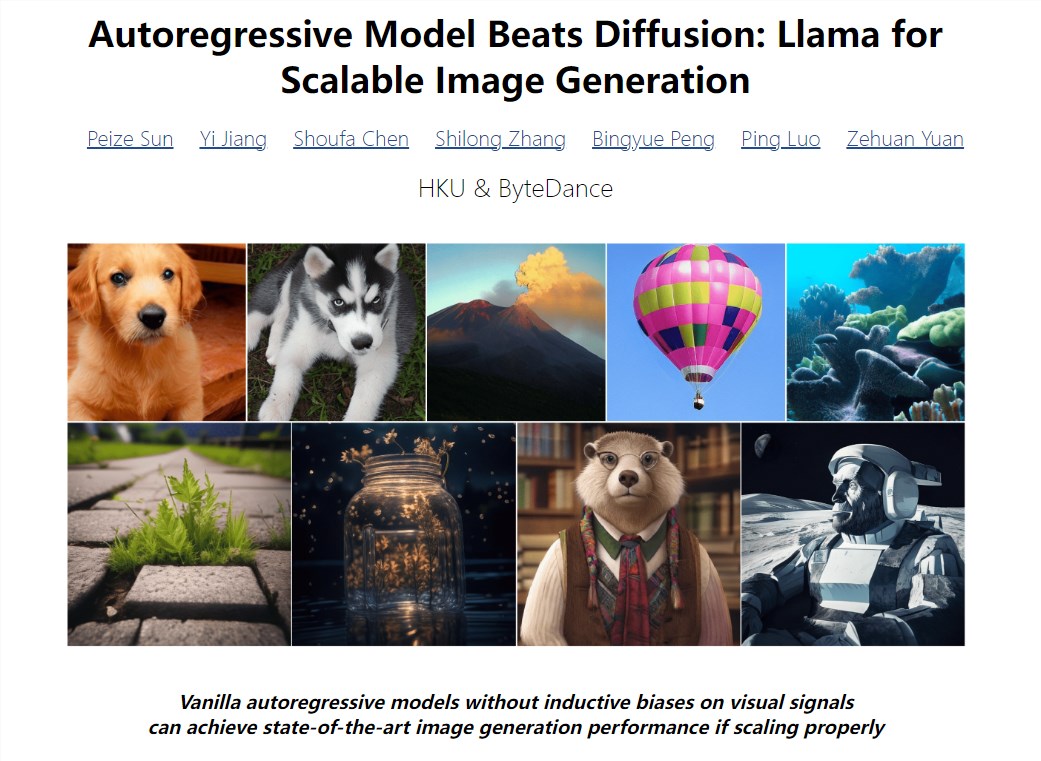

LlamaGen, an autoregressive image generation model jointly developed by the University of Hong Kong and ByteDance, is drawing wide attention in the field of image generation. Built on the Llama architecture, it challenges the dominance of diffusion models and has been received enthusiastically by the open source community, with nearly 900 stars on GitHub.

On the ImageNet benchmark, LlamaGen outperforms mainstream diffusion models such as LDM and DiT. This result comes from the research team's in-depth optimization of the autoregressive model architecture. With a retrained image tokenizer, LlamaGen also performs strongly on the ImageNet and COCO datasets, surpassing well-known tokenizer-based models such as VQGAN, ViT-VQGAN and MaskGIT.

LlamaGen's success rests on three core technical pillars: an advanced image compressor/quantizer, a scalable image generation model, and carefully filtered, high-quality training data. The research team adopted a CNN architecture similar to VQ-GAN to convert continuous images into discrete tokens. A two-stage training strategy then significantly improved the visual quality and resolution of the generated images.
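The step of converting continuous features into discrete tokens can be illustrated with a nearest-neighbor codebook lookup, the core operation in VQ-GAN-style quantizers. The following is a minimal sketch under stated assumptions, not LlamaGen's actual tokenizer: the function names, the toy codebook size (16 entries of dimension 4), and the random features standing in for encoder output are all hypothetical.

```python
import numpy as np

def quantize(features, codebook):
    """Return, for each feature vector, the index of its nearest codebook entry.

    features: array of shape (N, D); codebook: array of shape (K, D).
    """
    # Squared Euclidean distance, expanded as ||f||^2 - 2 f.c + ||c||^2.
    distances = (
        (features ** 2).sum(axis=1, keepdims=True)
        - 2.0 * features @ codebook.T
        + (codebook ** 2).sum(axis=1)
    )
    return distances.argmin(axis=1)

def dequantize(indices, codebook):
    """Look up the codebook vectors for a sequence of token indices."""
    return codebook[indices]

# Toy sizes chosen for illustration only (assumption, not from the source).
rng = np.random.default_rng(0)
codebook = rng.normal(size=(16, 4))
features = rng.normal(size=(8 * 8, 4))  # stand-in for an 8x8 encoder feature grid

tokens = quantize(features, codebook)   # 64 discrete token ids
recon = dequantize(tokens, codebook)    # quantized features fed to a decoder
```

The resulting sequence of integer token ids is what an autoregressive model such as a Llama-style transformer would be trained to predict, one token at a time.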

In the first stage of training, LlamaGen was trained on a 50M-image subset of LAION-COCO at a resolution of 256×256. The research team ensured the quality of the training data through strict filtering criteria, including a valid image URL, aesthetic score, and watermark score. In the second stage, the model was fine-tuned on 10 million internal images of high aesthetic quality, with the resolution raised to 512×512 to further improve generation quality.
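The filtering criteria described above can be sketched as a simple predicate over metadata records. This is an illustrative sketch only: the field names (`url`, `aesthetic_score`, `watermark_score`) and the thresholds are assumptions, since the article does not state the values the team used.

```python
from urllib.parse import urlparse

def has_valid_url(url):
    """Check only that the URL is syntactically an http(s) URL with a host."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)

def keep_record(record, min_aesthetic=5.0, max_watermark=0.5):
    """Keep a record if it passes all three filters.

    Thresholds are hypothetical placeholders, not values from the source.
    """
    return (
        has_valid_url(record.get("url", ""))
        and record.get("aesthetic_score", 0.0) >= min_aesthetic
        and record.get("watermark_score", 1.0) <= max_watermark
    )

# Made-up example records for illustration.
records = [
    {"url": "https://example.com/a.jpg", "aesthetic_score": 6.1, "watermark_score": 0.1},
    {"url": "https://example.com/b.jpg", "aesthetic_score": 4.2, "watermark_score": 0.1},
    {"url": "https://example.com/c.jpg", "aesthetic_score": 6.5, "watermark_score": 0.9},
    {"url": "not a url", "aesthetic_score": 7.0, "watermark_score": 0.0},
]

kept = [r for r in records if keep_record(r)]
```

In this toy run only the first record survives: the others fail on aesthetic score, watermark score, and URL validity respectively.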

The core advantages of LlamaGen are its strong image tokenizer and the scalability of the Llama architecture. In generation tests, LlamaGen was highly competitive on key metrics such as FID, IS, Precision and Recall. Compared with previous autoregressive models, LlamaGen performed well at every parameter scale, setting a new benchmark for the field of image generation.
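For readers unfamiliar with FID (Fréchet Inception Distance), its standard definition is given below. The notation is the conventional one and is not taken from the LlamaGen report: $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ are the mean and covariance of Inception-network features for real and generated images, respectively. Lower values indicate that the generated distribution is closer to the real one.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$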

Although LlamaGen has achieved remarkable results, the research team said the work is still at a stage comparable to Stable Diffusion v1. Future directions include support for higher resolutions, more aspect ratios, stronger controllability, and new areas such as video generation. These plans suggest that LlamaGen will continue to drive innovation in image generation across a broader range of applications.

LlamaGen is now available to try online through the LlamaGen space on Hugging Face. Its open source release also gives developers and researchers around the world a platform to participate and contribute, jointly advancing image generation technology. The project address and online demo address are https://top.aibase.com/tool/llamagen and https://huggingface.co/spaces/FoundationVision/LlamaGen, respectively.