Elon Musk, no stranger to stirring up the technology industry, has once again surprised the AI field. The tech mogul, often called the "real-life Iron Man", is spending an estimated $3 billion to $4 billion on 100,000 Nvidia H100 chips to train Grok-3, the next-generation AI model from his company xAI. The move underscores Musk's ambitions in AI and marks a major escalation of the current AI race.

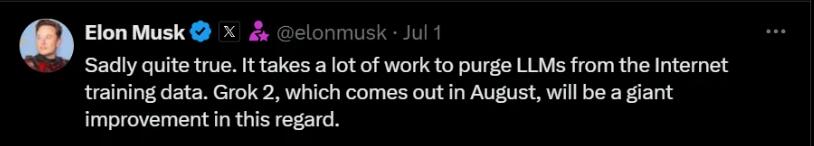

Musk posted two tweets to build momentum for the upcoming Grok releases. He announced that Grok-2 would launch in August and that Grok-3, trained on 100,000 H100 chips, would follow by the end of the year. These announcements take direct aim at OpenAI's GPT models and signal Musk's full-scale push into generative AI. Notably, training a single model on a GPU cluster of this size could raise the bar for how frontier AI models are trained.

Since the release of Grok-1.5, Musk has promoted his AI startup xAI tirelessly. Grok is Musk's flagship entry into generative AI, and it competes head-on with products from technology giants such as OpenAI, Google, and Meta. xAI has adopted an open-source strategy while also building its own supercomputing center, signaling its ambition to build a top-tier AI ecosystem. This broad strategy reflects Musk's long-term vision for the AI field.

On the technical side, xAI has released the Grok-1.5 language model and its first multimodal model, Grok-1.5 Vision (Grok-1.5V), since March of this year. According to xAI, Grok-1.5V is competitive with existing state-of-the-art multimodal models across several areas, including multidisciplinary reasoning, document understanding, scientific diagrams and charts, and tables. These advances laid the groundwork for the releases that followed.

In a public talk in May, Musk acknowledged that, as a young company, xAI still had a lot of catching up to do before it could compete with industry leaders such as Google DeepMind and OpenAI. In the meantime, xAI has been working quietly to improve model performance, hoping to pressure the incumbents through technical innovation. This underdog approach is consistent with Musk's entrepreneurial style.

Grok-2, due in August, is expected to bring major improvements in training data. Musk specifically said the new model should go a long way toward fixing the so-called "human centipede effect", in which models trained on other models' output end up producing near-identical responses. Solving this problem would make large language models more useful and their answers more varied, giving users a better experience.

More striking still, Musk revealed that Grok-3 will launch at the end of the year and will be trained on a cluster of 100,000 Nvidia H100 GPUs. A chip order worth an estimated $3 billion to $4 billion shows how heavily Musk is investing in AI, and suggests Grok-3 could deliver a major leap in performance. Musk called it "something special", which has whetted the appetite of both the industry and users.

With Grok-2 and Grok-3 on the way, expectations are high for a model trained on 100,000 H100s. Other tech giants such as Meta are also buying GPUs in large quantities, but this move clearly signals xAI's ambitions in AI. An investment of this scale could not only yield technical breakthroughs but also reshape the competitive landscape of the entire AI industry.

To keep its AI computing efficient and stable, Musk has also personally overseen the construction of xAI's data centers, which use liquid cooling. Liquid cooling offers high cooling efficiency, lower energy use and environmental impact, and stable operation, and it is increasingly favored for high-density AI data centers. This detail again reflects Musk's attention to technical detail and his determination to build top-tier AI infrastructure.