Official PyTorch implementation of OpenStreetView-5M: The Many Roads to Global Visual Geolocation.

First authors: Guillaume Astruc, Nicolas Dufour, Ioannis Siglidis

Second authors: Constantin Aronssohn, Nacim Bouia, Stephanie Fu, Romain Loiseau, Van Nguyen Nguyen, Charles Raude, Elliot Vincent, Lintao Xu, Hongyu Zhou

Last author: Loic Landrieu

Research institute: Imagine, LIGM, Ecole des Ponts, Univ Gustave Eiffel, CNRS, Marne-la-Vallée, France

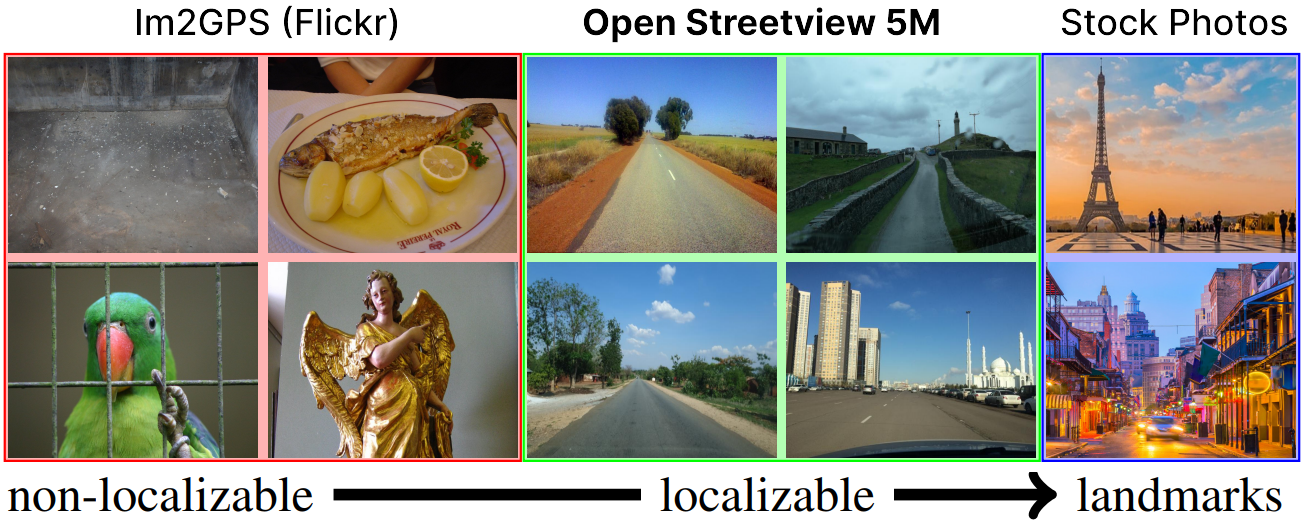

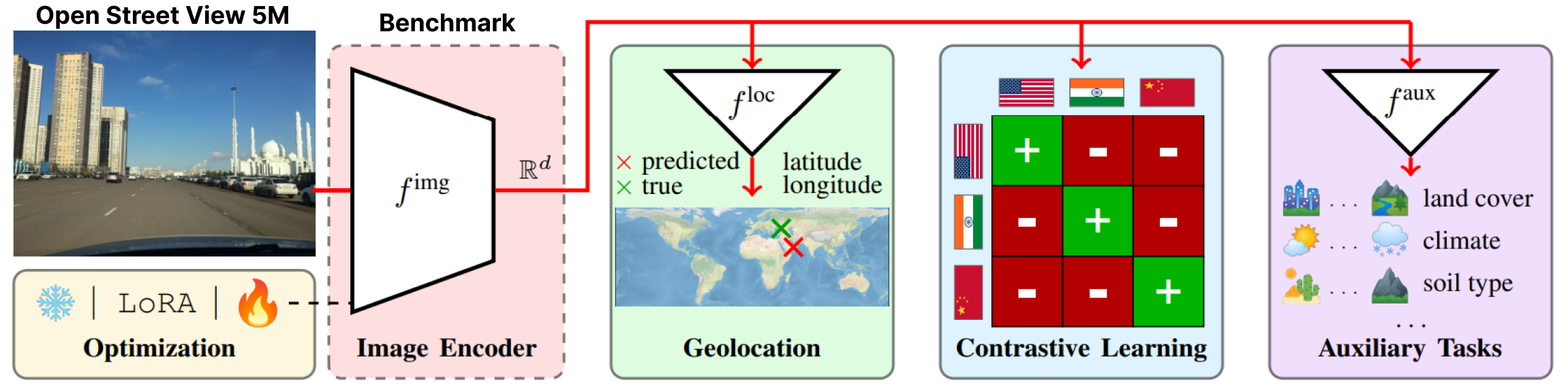

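OpenStreetView-5M is the first large-scale open benchmark for geolocating street view images.

To get a sense of how difficult the benchmark is, you can play our demo.

Our dataset was used in an extensive benchmark, and we provide the best model from it.

For more details and results, please check out our paper and project page.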

OpenStreetView-5M is hosted at huggingface/datasets/osv5m/osv5m. To download and extract it, run:

    python scripts/download-dataset.py

For different ways of importing the dataset, see DATASET.md.

Our best model on OSV-5M can also be found on Hugging Face.

    from PIL import Image
    from models.huggingface import Geolocalizer

    # Load the pretrained baseline from Hugging Face
    geolocalizer = Geolocalizer.from_pretrained('osv5m/baseline')
    img = Image.open('.media/examples/img1.jpeg')
    x = geolocalizer.transform(img).unsqueeze(0)  # transform the image using our dedicated transformer
    gps = geolocalizer(x)  # (B, 2) tensor of (lat, lon) in radians

To reproduce the results of the model on Hugging Face, run:

    python evaluation.py exp=eval_best_model dataset.global_batch_size=1024

To replicate all the experiments of our paper, we provide dedicated scripts in scripts/experiments.
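The `Geolocalizer` example above returns latitude and longitude in radians. As a minimal sketch of how such a prediction could be converted to degrees and compared to a ground-truth location, the snippet below uses the haversine great-circle distance. It assumes a spherical Earth of radius 6371 km; the helper names `rad_to_deg` and `haversine_km` and the sample coordinates are hypothetical, and this README does not state which distance the evaluation script actually uses.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; an assumption of this sketch


def rad_to_deg(lat_rad, lon_rad):
    """Convert a (lat, lon) pair from radians to degrees."""
    return math.degrees(lat_rad), math.degrees(lon_rad)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in radians."""
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    a = (
        math.sin(dlat / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    )
    # Clamp to 1.0 to guard against floating-point overshoot before asin.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


# Hypothetical prediction and ground truth, both as (lat, lon) in radians.
pred = (math.radians(48.85), math.radians(2.35))
truth = (math.radians(40.71), math.radians(-74.00))

print("prediction in degrees:", rad_to_deg(*pred))
print("error in km:", haversine_km(*pred, *truth))
```

With a PyTorch tensor, the same conversion could be applied row by row after calling `.tolist()` on the `(B, 2)` output.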

To install our conda environment, run:

    conda env create -f environment.yaml
    conda activate osv5m

To run most methods, you first need to precompute the QuadTrees (roughly 10 minutes):

    # You will need to run this code with other splitting/depth arguments if you want to use different quadtree arguments
    python scripts/preprocessing/preprocess.py data_dir=datasets do_split=1000

Use the configs/exp folder to select the experiment you want. Feel free to explore it. All evaluated models from the paper have a dedicated config file.
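For readers unfamiliar with the quadtree precomputation step, here is a generic sketch of a quadtree built over (lat, lon) points. It is not the repository's preprocessing code: the `Cell` class, `build_quadtree`, `leaves`, and the parameters `max_points` and `max_depth` are hypothetical names, and the assumption that a cell is split once it holds more than a fixed number of points, up to a maximum depth, is mine rather than something this README specifies.

```python
from dataclasses import dataclass, field


@dataclass
class Cell:
    # Bounding box in degrees: latitude and longitude ranges of the cell.
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float
    depth: int = 0
    points: list = field(default_factory=list)
    children: list = field(default_factory=list)


def build_quadtree(points, max_points, max_depth):
    """Split cells holding more than max_points until max_depth is reached."""
    root = Cell(-90.0, 90.0, -180.0, 180.0, points=list(points))
    stack = [root]
    while stack:
        cell = stack.pop()
        if len(cell.points) <= max_points or cell.depth >= max_depth:
            continue  # small enough or too deep: keep as a leaf
        lat_mid = (cell.lat_min + cell.lat_max) / 2
        lon_mid = (cell.lon_min + cell.lon_max) / 2
        d = cell.depth + 1
        quads = [
            Cell(cell.lat_min, lat_mid, cell.lon_min, lon_mid, d),
            Cell(cell.lat_min, lat_mid, lon_mid, cell.lon_max, d),
            Cell(lat_mid, cell.lat_max, cell.lon_min, lon_mid, d),
            Cell(lat_mid, cell.lat_max, lon_mid, cell.lon_max, d),
        ]
        # Route each point to the quadrant that contains it.
        for lat, lon in cell.points:
            idx = 2 * int(lat >= lat_mid) + int(lon >= lon_mid)
            quads[idx].points.append((lat, lon))
        cell.points = []
        cell.children = quads
        stack.extend(quads)
    return root


def leaves(cell):
    """Return all leaf cells below (and including) the given cell."""
    if not cell.children:
        return [cell]
    return [leaf for child in cell.children for leaf in leaves(child)]


# Hypothetical sample coordinates in degrees.
sample = [(48.85, 2.35), (40.71, -74.00), (-33.87, 151.21)]
tree = build_quadtree(sample, max_points=1, max_depth=8)
print("number of leaf cells:", len(leaves(tree)))
```

In a sketch like this, each leaf cell can serve as one class for a classification-based geolocation model, with denser regions receiving finer cells.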

    # Using more workers in the dataloader
    computer.num_workers=20

    # Change number of devices available
    computer.devices=1

    # Change batch_size distributed to all devices
    dataset.global_batch_size=2

    # Changing mode train or eval, default is train
    mode=eval

    # All these parameters and more can be changed from the config file!
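The dotted `key=value` arguments above read like command-line overrides applied on top of a nested config file. This README does not name the configuration library, so the following is only a standard-library sketch of how such overrides could be merged into a nested dictionary. The function names `parse_value` and `apply_overrides` and the default values in `defaults` are hypothetical.

```python
import ast
import copy


def parse_value(text):
    """Interpret numbers and other literals; fall back to the raw string."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError):
        return text


def apply_overrides(config, overrides):
    """Return a copy of config with dotted key=value overrides applied."""
    merged = copy.deepcopy(config)
    for item in overrides:
        key, _, raw = item.partition("=")
        *parents, leaf = key.split(".")
        node = merged
        for name in parents:
            # Create intermediate sections that do not exist yet.
            node = node.setdefault(name, {})
        node[leaf] = parse_value(raw)
    return merged


# Hypothetical defaults standing in for a config file.
defaults = {
    "mode": "train",
    "computer": {"devices": 1, "num_workers": 4},
    "dataset": {"global_batch_size": 256},
}

result = apply_overrides(
    defaults,
    ["computer.num_workers=20", "dataset.global_batch_size=2", "mode=eval"],
)
print(result)
```

Copying the defaults before merging keeps the original dictionary reusable across several runs with different overrides.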

    # train best model
    python train.py exp=best_model computer.devices=1 computer.num_workers=16 dataset.global_batch_size=2

    @article{osv5m,
        title = {{OpenStreetView-5M}: {T}he Many Roads to Global Visual Geolocation},
        author = {Astruc, Guillaume and Dufour, Nicolas and Siglidis, Ioannis
          and Aronssohn, Constantin and Bouia, Nacim and Fu, Stephanie and Loiseau, Romain
          and Nguyen, Van Nguyen and Raude, Charles and Vincent, Elliot and Xu, Lintao
          and Zhou, Hongyu and Landrieu, Loic},
        journal = {CVPR},
        year = {2024},
    }