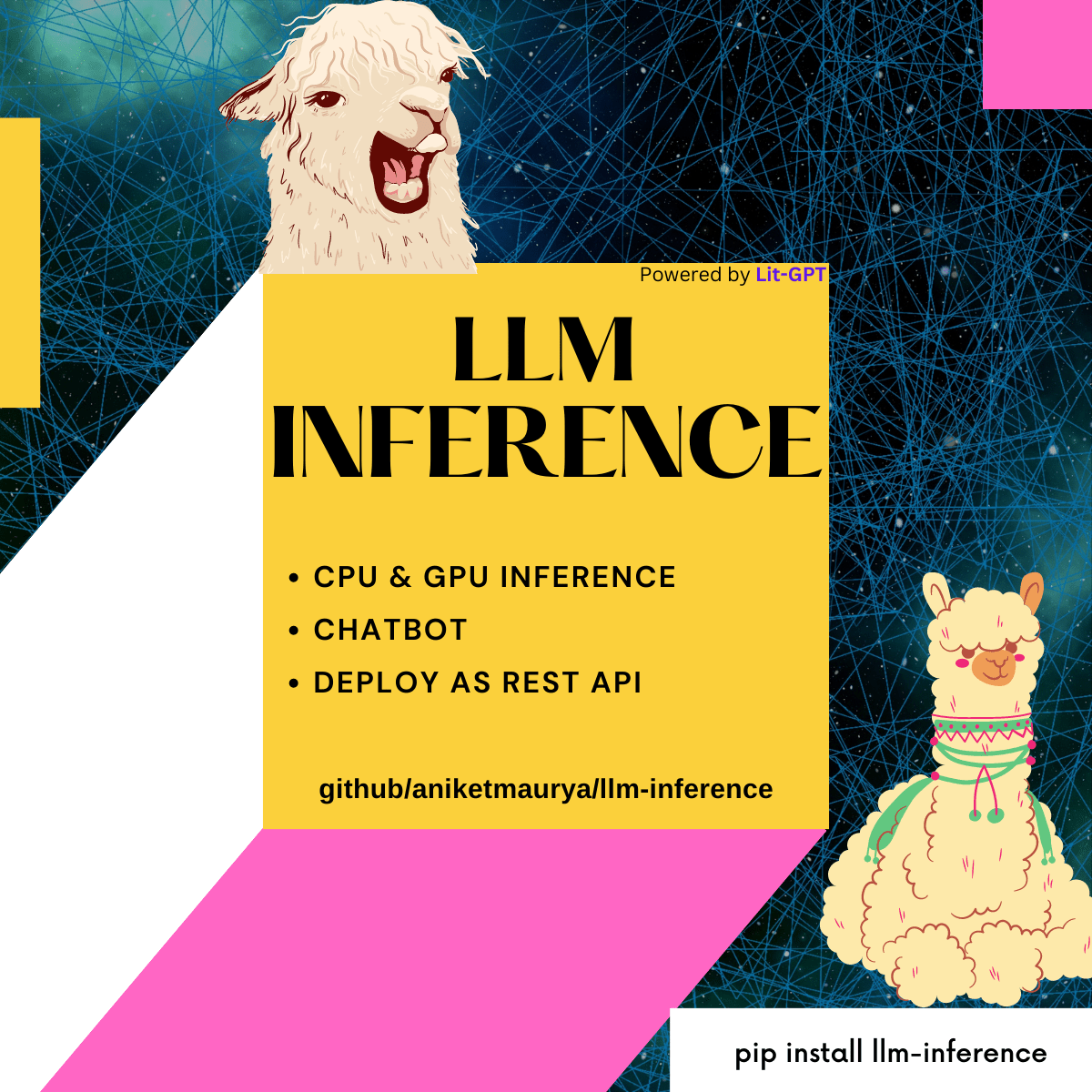

llm inference

v0.0.6

Inference API for LLMs like LLaMA and Falcon, powered by Lit-GPT from Lightning AI

pip install llm-inference

pip install git+https://github.com/aniketmaurya/llm-inference.git@main

# You need to manually install [Lit-GPT](https://github.com/Lightning-AI/lit-gpt) and set up the model weights to use this project.

pip install lit_gpt@git+https://github.com/aniketmaurya/install-lit-gpt.git@install

from llm_inference import LLMInference, prepare_weights

path = prepare_weights("EleutherAI/pythia-70m")

model = LLMInference(checkpoint_dir=path)

print(model("New York is located in"))
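The example above calls the model object directly with a prompt. As a minimal sketch of running several prompts the same way, the helper below accepts any callable that maps a prompt string to a completion string. `complete_all` and `fake_model` are hypothetical names introduced here for illustration. That an `LLMInference` instance returns a plain string is an assumption based on the `print` call above, not something this README confirms.

```python
from typing import Callable, Dict, Iterable


def complete_all(generate: Callable[[str], str], prompts: Iterable[str]) -> Dict[str, str]:
    """Run each prompt through `generate` and collect the completions by prompt."""
    return {prompt: generate(prompt) for prompt in prompts}


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in so the sketch runs without downloading weights.
    # In real use, pass the LLMInference instance instead (assumed to be callable).
    return prompt + " ..."


if __name__ == "__main__":
    results = complete_all(fake_model, ["New York is located in", "Paris is located in"])
    for prompt, completion in results.items():
        print(f"{prompt!r} -> {completion!r}")
```

Keeping the helper independent of the model class means it can be exercised with a stub first and pointed at a real checkpoint later.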

from llm_chain import LitGPTConversationChain, LitGPTLLM

from llm_inference import prepare_weights

path = str(prepare_weights("meta-llama/Llama-2-7b-chat-hf"))

llm = LitGPTLLM(checkpoint_dir=path, quantize="bnb.nf4")  # 7GB GPU memory

# Note: llama2_prompt_template is not defined in this snippet; define or import it before this line.

bot = LitGPTConversationChain.from_llm(llm=llm, prompt=llama2_prompt_template)

print(bot.send("hi, what is the capital of France?"))

1. Download weights

from llm_inference import prepare_weights

path = prepare_weights("meta-llama/Llama-2-7b-chat-hf")

2. Launch the Gradio app

python examples/chatbot/gradio_demo.py
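The chatbot example earlier passes a `llama2_prompt_template` without showing what it contains. For orientation, here is a rough sketch of the single-turn prompt layout that Llama 2 chat models were trained on, with `[INST]` and `<<SYS>>` markers. The function `build_llama2_prompt` and the default system prompt are hypothetical and written for this illustration. They are not the template shipped with `llm_chain`, which may differ.

```python
# Illustrative only: a hand-written Llama 2 chat prompt builder.
# This is NOT the llama2_prompt_template used by llm_chain.

DEFAULT_SYSTEM_PROMPT = "You are a helpful assistant."  # hypothetical default


def build_llama2_prompt(user_message: str, system_prompt: str = DEFAULT_SYSTEM_PROMPT) -> str:
    """Wrap a single user turn and a system prompt in the Llama 2 chat markers."""
    return (
        f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n"
        f"{user_message.strip()} [/INST]"
    )


if __name__ == "__main__":
    print(build_llama2_prompt("hi, what is the capital of France?"))
```

The beginning-of-sequence token is left out on purpose, since tokenizers usually add it themselves.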