This repository contains the implementation of the paper "Contrastive Learning for Prompt-based Few-shot Language Learners" by Yiren Jian, Chongyang Gao, and Soroush Vosoughi, accepted to NAACL 2022.

If you find this repository useful for your research, please consider citing the paper.

@inproceedings { jian-etal-2022-contrastive ,

title = " Contrastive Learning for Prompt-based Few-shot Language Learners " ,

author = " Jian, Yiren and

Gao, Chongyang and

Vosoughi, Soroush " ,

booktitle = " Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies " ,

month = jul,

year = " 2022 " ,

address = " Seattle, United States " ,

publisher = " Association for Computational Linguistics " ,

url = " https://aclanthology.org/2022.naacl-main.408 " ,

pages = " 5577--5587 " ,

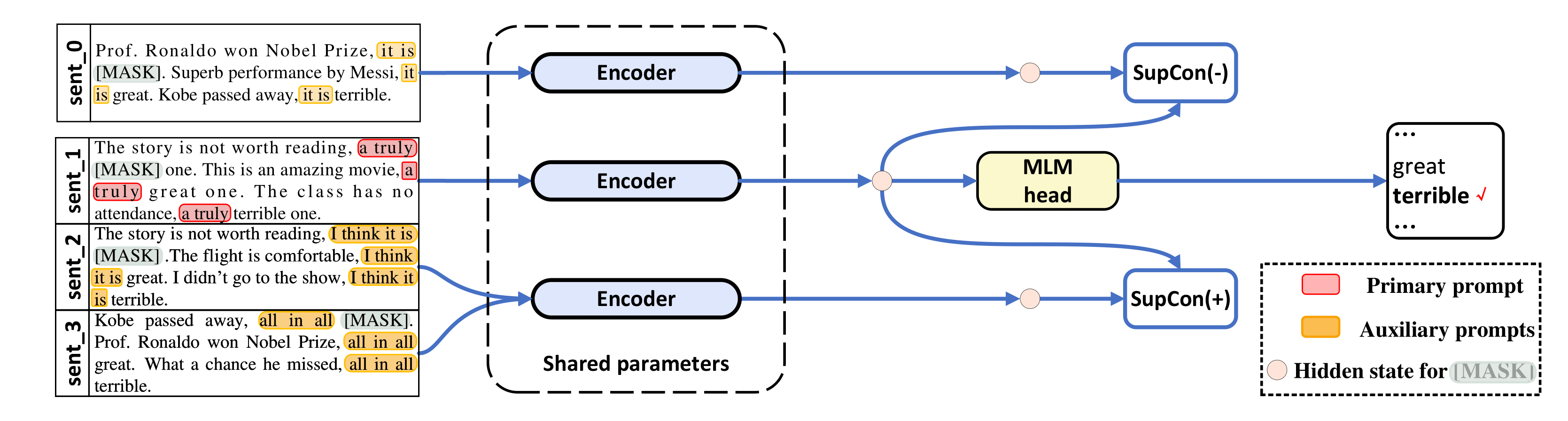

abstract = "The impressive performance of GPT-3 using natural language prompts and in-context learning has inspired work on better fine-tuning of moderately-sized models under this paradigm. Following this line of work, we present a contrastive learning framework that clusters inputs from the same class for better generality of models trained with only limited examples. Specifically, we propose a supervised contrastive framework that clusters inputs from the same class under different augmented {``}views{''} and repel the ones from different classes. We create different {``}views{''} of an example by appending it with different language prompts and contextual demonstrations. Combining a contrastive loss with the standard masked language modeling (MLM) loss in prompt-based few-shot learners, the experimental results show that our method can improve over the state-of-the-art methods in a diverse set of 15 language tasks. Our framework makes minimal assumptions on the task or the base model, and can be applied to many recent methods with little modification.",

}我们的代码大量借鉴自 LM-BFF 和 SupCon ( /src/losses.py )。
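For readers unfamiliar with the contrastive term mentioned in the abstract, the following is a minimal pure-Python sketch of a supervised contrastive loss in the style of SupCon. It is not the code in /src/losses.py: the function name `supcon_loss`, the list-based inputs, and the default temperature of 0.07 are assumptions made for illustration only.

```python
import math

def supcon_loss(features, labels, temperature=0.07):
    """Supervised contrastive loss over a small batch (illustrative sketch).

    features: list of equal-length float lists, one vector per input view.
    labels:   list of class labels, same length as features.
    """
    def normalize(v):
        norm = math.sqrt(sum(x * x for x in v)) or 1.0
        return [x / norm for x in v]

    z = [normalize(v) for v in features]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        # Positives: other examples that share the anchor's label.
        positives = [p for p in range(n) if p != i and labels[p] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        # Temperature-scaled cosine similarity to every other example.
        sims = {a: sum(x * y for x, y in zip(z[i], z[a])) / temperature
                for a in range(n) if a != i}
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(sims.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0
```

The loss is small when same-class vectors sit close together and different-class vectors are far apart, which is the clustering behavior the abstract describes.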

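This repository has been tested with Ubuntu 18.04.5 LTS, Python 3.7, PyTorch 1.6.0, and CUDA 10.1. You will need one 48 GB GPU for experiments with RoBERTa-base and four 48 GB GPUs for experiments with RoBERTa-large. We ran our experiments on Nvidia RTX-A6000 and RTX-8000 GPUs, but 40 GB Nvidia A100s should also work.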

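We use the pre-processed datasets (SST-2, SST-5, MR, CR, MPQA, Subj, TREC, CoLA, MNLI, SNLI, QNLI, RTE, MRPC, QQP) from LM-BFF. LM-BFF provides helpful scripts for downloading and preparing the datasets. Simply run the commands below.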

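    cd data
    bash download_dataset.sh

Then use the following command to generate the 16-shot datasets used in our study.

    python tools/generate_k_shot_data.py

The primary prompts (templates) for each task are pre-defined in run_experiments.sh. The auxiliary templates used to generate multiple views of an input for contrastive learning can be found in /auto_template/$TASK.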
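As a rough illustration of what a 16-shot split looks like, the sketch below draws k examples per class with a seeded shuffle. It is not the logic of tools/generate_k_shot_data.py: the function `sample_k_shot`, the `(text, label)` input format, and the assumption that k counts examples per class are all hypothetical.

```python
import random
from collections import defaultdict

def sample_k_shot(examples, k, seed):
    """Pick k examples per label using a seeded shuffle (illustrative sketch).

    examples: list of (text, label) pairs.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for text, label in examples:
        by_label[label].append((text, label))
    subset = []
    for label in sorted(by_label):
        pool = by_label[label][:]   # copy so the input is left untouched
        rng.shuffle(pool)
        subset.extend(pool[:k])
    return subset
```

Calling it with the same seed returns the same subset, so each seed corresponds to one reproducible train-test split.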

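Assuming you have one GPU in your system, we show an example of running fine-tuning on SST-5 (random templates and random demonstrations for the "augmented views" of inputs).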

    for seed in 13 21 42 87 100   # random seeds for different train-test splits
    do
        for bs in 40   # batch size
        do
            for lr in 1e-5   # learning rate for MLM loss
            do
                for supcon_lr in 1e-5   # learning rate for SupCon loss
                do
                    # Variables are passed to the script as environment assignments.
                    TAG=exp \
                    TYPE=prompt-demo \
                    TASK=sst-5 \
                    BS=$bs \
                    LR=$lr \
                    SupCon_LR=$supcon_lr \
                    SEED=$seed \
                    MODEL=roberta-base \
                    bash run_experiment.sh
                done
            done
        done
    done

    rm -rf result/

Our framework also applies to prompt-based methods without demonstrations, i.e., TYPE=prompt (in this case, we only randomly sample templates to generate the "augmented views"). The results are saved in log.
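To make the idea of "augmented views" more concrete, here is a small sketch of how one input could be turned into different views by sampling a template and, optionally, one demonstration per class. Everything in it is an assumption for illustration: the `TEMPLATES` strings, the `<mask>` placeholder, and the `make_view` helper are hypothetical and do not reproduce the repository's template files or data pipeline.

```python
import random

# Hypothetical templates; the repository's real ones live under /auto_template/$TASK.
TEMPLATES = [
    "{sentence} It was {label}.",
    "{sentence} A {label} one.",
    "{sentence} All in all {label}.",
]

def make_view(sentence, demos, rng, use_demos=True):
    """Build one view of an input (illustrative sketch).

    demos: dict mapping a label word to a list of example sentences.
    use_demos=False mimics the template-only setting (TYPE=prompt).
    """
    template = rng.choice(TEMPLATES)
    # The query keeps a mask token where the label word is to be predicted.
    parts = [template.format(sentence=sentence, label="<mask>")]
    if use_demos:
        # One randomly chosen demonstration per class, filled with its label word.
        for label_word in sorted(demos):
            demo_sentence = rng.choice(demos[label_word])
            parts.append(template.format(sentence=demo_sentence, label=label_word))
    return " ".join(parts)
```

Calling `make_view` twice on the same sentence with a shared `random.Random` instance can yield two differently worded inputs of the same class, which is what a contrastive loss would then pull together.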

Using RoBERTa-large as the base model requires 4 GPUs, each with 48 GB of memory. You first need to edit line 20 of src/models.py to def __init__(self, hidden_size=1024).
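The value 1024 matches the hidden dimension of RoBERTa-large, whereas RoBERTa-base uses 768. As a hedged sketch of how the manual edit could be avoided, a lookup like the one below could supply the size from the model name. The `HIDDEN_SIZE` table and `hidden_size_for` helper are hypothetical and are not part of src/models.py.

```python
# Hidden sizes of the public RoBERTa checkpoints.
HIDDEN_SIZE = {"roberta-base": 768, "roberta-large": 1024}

def hidden_size_for(model_name):
    """Return the encoder hidden size for a supported model name (sketch)."""
    try:
        return HIDDEN_SIZE[model_name]
    except KeyError:
        raise ValueError(f"unknown model: {model_name!r}") from None
```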

    for seed in 13 21 42 87 100   # random seeds for different train-test splits
    do
        for bs in 10   # batch size per GPU; the total batch size is then 40
        do
            for lr in 1e-5   # learning rate for MLM loss
            do
                for supcon_lr in 1e-5   # learning rate for SupCon loss
                do
                    # Variables are passed to the script as environment assignments.
                    TAG=exp \
                    TYPE=prompt-demo \
                    TASK=sst-5 \
                    BS=$bs \
                    LR=$lr \
                    SupCon_LR=$supcon_lr \
                    SEED=$seed \
                    MODEL=roberta-large \
                    bash run_experiment.sh
                done
            done
        done
    done

    rm -rf result/

To gather the results, run:

    python tools/gather_result.py --condition "{'tag': 'exp', 'task_name': 'sst-5', 'few_shot_type': 'prompt-demo'}"

It collects the results from log and computes the mean and standard deviation over the 5 train-test splits.
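The aggregation itself is simple. The sketch below shows the kind of computation involved, assuming one score per seed; it is not the code of tools/gather_result.py, and the use of the population standard deviation (NumPy's default convention) is an assumption, since the script's exact choice is not stated here.

```python
import statistics

def summarize(scores):
    """Return (mean, standard deviation) of per-seed scores (illustrative sketch)."""
    mean = statistics.mean(scores)
    # Population standard deviation; swap in statistics.stdev for the sample version.
    std = statistics.pstdev(scores)
    return mean, std
```

Passing the five per-seed scores for a task returns the two numbers that would be reported for it.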

If you have any questions, please contact the authors.

Thanks to LM-BFF and SupCon for their preliminary implementations.