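mist over green hills

shattered plates on the grass

cosmic love and attention

a time traveler in the crowd

life during the plague

meditative peace in a sunlit forest

a man painting a completely red image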

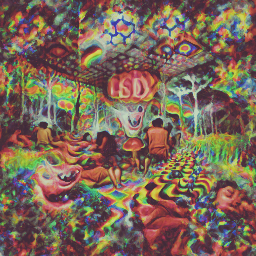

a psychedelic experience on LSD

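Simple command line tool for text to image generation using OpenAI's CLIP and Siren. Credit goes to Ryan Murdock for the discovery of this technique (and for coming up with the great name)!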
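Siren refers to the sinusoidal representation network of Sitzmann et al. (cited at the end of this README): an MLP with sine activations that maps a pixel coordinate (x, y) to a color, so the whole image is stored in the network's weights. The pure-Python sketch below shows only such a forward pass. It assumes the initialisation from the SIREN paper, a frequency factor of 30 (matching the `theta_initial`/`theta_hidden` defaults listed in the flags), PyTorch-style bias ranges and a `(v + 1) / 2` mapping to [0, 1]. The function names are hypothetical; deep-daze itself is built on PyTorch and trains these weights so that CLIP rates the rendered image as matching the text, which is not shown here.

```python
import math
import random


def sine_layer(in_dim, out_dim, w0, is_first, rng):
    """Return (weights, biases) for one layer with SIREN-style initialisation."""
    # First layer: U(-1/in_dim, 1/in_dim); later layers: U(-sqrt(6/in_dim)/w0, sqrt(6/in_dim)/w0)
    w_bound = 1.0 / in_dim if is_first else math.sqrt(6.0 / in_dim) / w0
    # Assumption: biases use the PyTorch nn.Linear default range U(-1/sqrt(in_dim), 1/sqrt(in_dim))
    b_bound = 1.0 / math.sqrt(in_dim)
    weights = [[rng.uniform(-w_bound, w_bound) for _ in range(in_dim)] for _ in range(out_dim)]
    biases = [rng.uniform(-b_bound, b_bound) for _ in range(out_dim)]
    return weights, biases


def linear(x, weights, biases):
    """Affine map W x + b on plain Python lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def make_siren(num_layers=16, hidden=32, theta_initial=30.0, theta_hidden=30.0, seed=0):
    """Toy SIREN: num_layers sine layers on (x, y), followed by a linear RGB head."""
    rng = random.Random(seed)
    layers = [(sine_layer(2, hidden, theta_initial, True, rng), theta_initial)]
    for _ in range(num_layers - 1):
        layers.append((sine_layer(hidden, hidden, theta_hidden, False, rng), theta_hidden))
    head = sine_layer(hidden, 3, theta_hidden, False, rng)  # output layer: no sine applied
    return layers, head


def render(net, size):
    """Evaluate the network at every pixel of a size x size grid over [-1, 1]^2."""
    layers, head = net
    image = []
    for i in range(size):
        row = []
        for j in range(size):
            h = [-1 + 2 * j / (size - 1), -1 + 2 * i / (size - 1)]  # normalised (x, y)
            for (weights, biases), w0 in layers:
                h = [math.sin(w0 * z) for z in linear(h, weights, biases)]  # sin(w0 * (W h + b))
            rgb = linear(h, *head)
            # Assumption: map raw outputs to [0, 1] via (v + 1) / 2, then clamp
            row.append(tuple(min(1.0, max(0.0, (v + 1) / 2)) for v in rgb))
        image.append(row)
    return image


image = render(make_siren(num_layers=16), size=8)
print(len(image), len(image[0]), image[0][0])
```

Adding layers (as `--deeper` or `num_layers` do) simply stacks more sine layers between the coordinate input and the RGB head.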

Original notebook

New simplified notebook

This will require that you have an Nvidia GPU or AMD GPU.

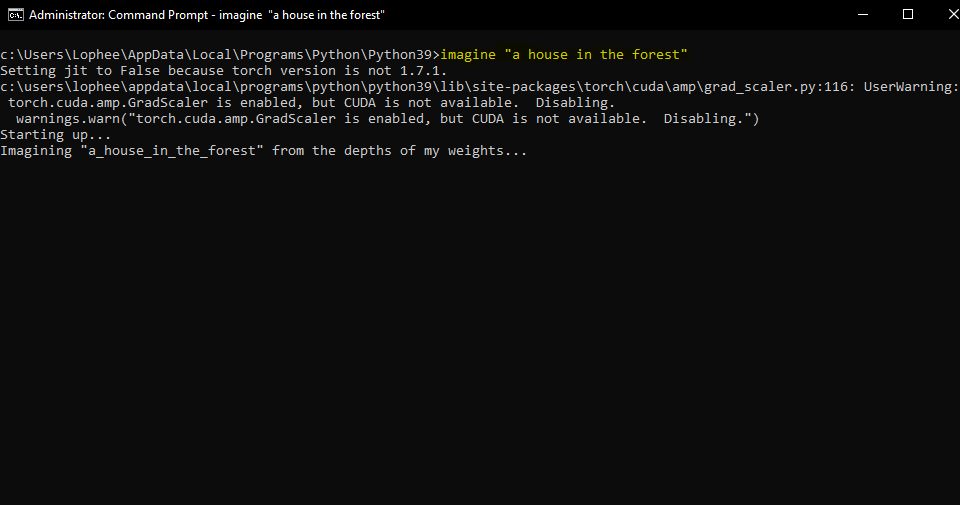

```bash
$ pip install deep-daze
```

On Windows, presuming Python is installed:

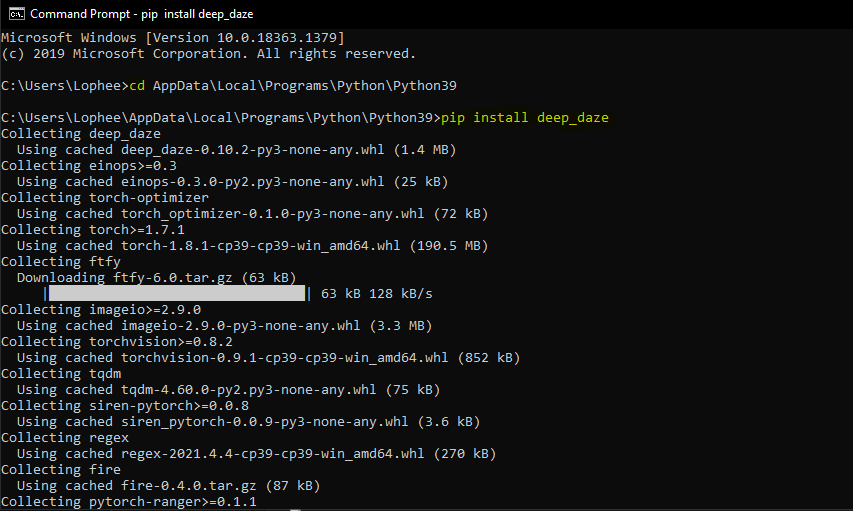

```bash
pip install deep-daze
```

To generate an image:

```bash
$ imagine "a house in the forest"
```

For Windows:

```bash
imagine "a house in the forest"
```

That's it.

If you have enough memory, you can get better quality by adding a `--deeper` flag:

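```bash
$ imagine "shattered plates on the ground" --deeper
```

In true deep learning fashion, more layers will yield better results. The default is 16 layers, but this can be increased to 32 depending on your resources.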

```bash
$ imagine "stranger in strange lands" --num-layers 32
```

Full CLI usage:

NAME

imagine

SYNOPSIS

imagine TEXT <flags>

POSITIONAL ARGUMENTS

TEXT

(required) A phrase less than 77 tokens which you would like to visualize.

FLAGS

--img=IMAGE_PATH

Default: None

Path to a png/jpg image or PIL image to optimize on.

--encoding=ENCODING

Default: None

User-created custom CLIP encoding. If used, replaces any text or image that was used.

--create_story=CREATE_STORY

Default: False

Creates a story by optimizing each epoch on a new sliding-window of the input words. If this is enabled, much longer texts than 77 tokens can be used. Requires save_progress to visualize the transitions of the story.

--story_start_words=STORY_START_WORDS

Default: 5

Only used if create_story is True. How many words to optimize on for the first epoch.

--story_words_per_epoch=STORY_WORDS_PER_EPOCH

Default: 5

Only used if create_story is True. How many words to add to the optimization goal per epoch after the first one.

--story_separator=STORY_SEPARATOR

Default: None

Only used if create_story is True. Defines a separator like ' . ' that splits the text into groups for each epoch. The separator needs to be in the text, otherwise it will be ignored.

--lower_bound_cutout=LOWER_BOUND_CUTOUT

Default: 0.1

Lower bound of the sampling of the size of the random cut-out of the SIREN image per batch. Should be smaller than 0.8.

--upper_bound_cutout=UPPER_BOUND_CUTOUT

Default: 1.0

Upper bound of the sampling of the size of the random cut-out of the SIREN image per batch. Should probably stay at 1.0.

--saturate_bound=SATURATE_BOUND

Default: False

If True, the LOWER_BOUND_CUTOUT is linearly increased to 0.75 during training.

--learning_rate=LEARNING_RATE

Default: 1e-05

The learning rate of the neural net.

--num_layers=NUM_LAYERS

Default: 16

The number of hidden layers to use in the Siren neural net.

--batch_size=BATCH_SIZE

Default: 4

The number of generated images to pass into Siren before calculating loss. Decreasing this can lower memory and accuracy.

--gradient_accumulate_every=GRADIENT_ACCUMULATE_EVERY

Default: 4

Calculate a weighted loss of n samples for each iteration. Increasing this can help increase accuracy with lower batch sizes.

--epochs=EPOCHS

Default: 20

The number of epochs to run.

--iterations=ITERATIONS

Default: 1050

The number of times to calculate and backpropagate loss in a given epoch.

--save_every=SAVE_EVERY

Default: 100

Generate an image every time the iteration count is a multiple of this number.

--image_width=IMAGE_WIDTH

Default: 512

The desired resolution of the image.

--deeper=DEEPER

Default: False

Uses a Siren neural net with 32 hidden layers.

--overwrite=OVERWRITE

Default: False

Whether or not to overwrite existing generated images of the same name.

--save_progress=SAVE_PROGRESS

Default: False

Whether or not to save images generated before training Siren is complete.

--seed=SEED

Type: Optional[]

Default: None

A seed to be used for deterministic runs.

--open_folder=OPEN_FOLDER

Default: True

Whether or not to open a folder showing your generated images.

--save_date_time=SAVE_DATE_TIME

Default: False

Save files with a timestamp prepended, e.g. `%y%m%d-%H%M%S-my_phrase_here`.

--start_image_path=START_IMAGE_PATH

Default: None

The generator is first trained on a starting image before being steered towards the textual input.

--start_image_train_iters=START_IMAGE_TRAIN_ITERS

Default: 50

The number of steps for the initial training on the starting image.

--theta_initial=THETA_INITIAL

Default: 30.0

Hyperparameter describing the frequency of the color space. Only applies to the first layer of the network.

--theta_hidden=THETA_HIDDEN

Default: 30.0

Hyperparameter describing the frequency of the color space. Only applies to the hidden layers of the network.

--save_gif=SAVE_GIF

Default: False

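Whether or not to save a GIF animation of the generation procedure. Only works if save_progress is set to True.

This technique, first devised and shared by Mario Klingemann, allows you to prime the generator network with a starting image before steering it towards the text.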

Simply specify the path to the image you wish to use, and optionally the number of initial training steps.

```bash
$ imagine 'a clear night sky filled with stars' --start_image_path ./cloudy-night-sky.jpg
```

Primed starting image:

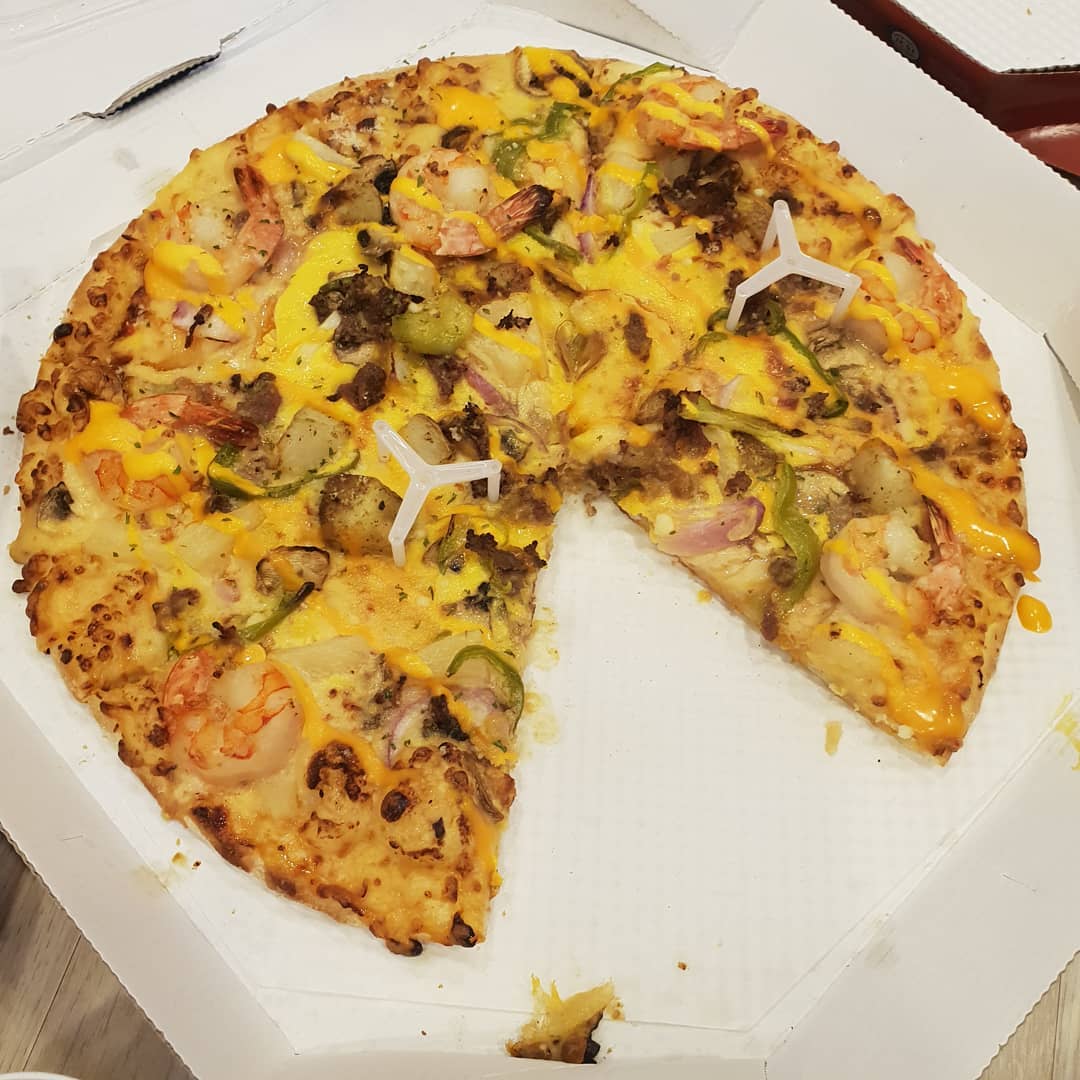

Then trained with the prompt `A pizza with green pepper.`
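Conceptually, priming is a two-phase schedule: the generator is first fit to the starting image for `start_image_train_iters` steps, and only then optimized toward the prompt. The toy sketch below illustrates just that schedule. Its stand-ins are assumptions for illustration: the "generator" is a plain list of pixel values, and the text objective is a squared error toward a fixed target rather than deep-daze's CLIP loss. The function `primed_optimization` is hypothetical.

```python
def sq_error_grad(pixels, target):
    """Gradient of the summed squared error sum((p - t)^2) with respect to each pixel."""
    return [2 * (p - t) for p, t in zip(pixels, target)]


def primed_optimization(start_image, text_target, start_image_train_iters=50,
                        iterations=200, lr=0.1):
    """Toy two-phase run: fit start_image first, then steer toward text_target.

    Hypothetical stand-ins: the 'generator' is just a list of pixel values, and the
    text objective is squared error toward text_target instead of a CLIP loss.
    Returns the pixel values after every step.
    """
    pixels = [0.5] * len(start_image)  # arbitrary untrained state
    history = []
    for step in range(start_image_train_iters + iterations):
        # Phase 1 (priming) targets the starting image; phase 2 targets the "text"
        target = start_image if step < start_image_train_iters else text_target
        grad = sq_error_grad(pixels, target)
        pixels = [p - lr * g for p, g in zip(pixels, grad)]
        history.append(pixels)
    return history


start = [0.1, 0.2, 0.8, 0.9]  # hypothetical starting-image pixels
goal = [1.0, 0.0, 0.0, 1.0]   # hypothetical stand-in for the text objective
history = primed_optimization(start, goal)
primed, final = history[49], history[-1]
```

In this convex toy the final result ignores the starting point; the point of priming in a real CLIP-guided run is that the second phase begins from the primed image instead of from random weights.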

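We can also feed in an image as an optimization goal, instead of only priming the generator network. Deepdaze will then render its own interpretation of that image: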

```bash
$ imagine --img samples/Autumn_1875_Frederic_Edwin_Church.jpg
```

Original image:

The network's interpretation:

Original image:

The network's interpretation:

Text and image can also be combined as the optimization goal:

```bash
$ imagine "A psychedelic experience." --img samples/hot-dog.jpg
```

The network's interpretation:

The regular mode for texts only allows 77 tokens. If you want to visualize a full story/paragraph/song/poem, set `create_story` to `True`.
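One way to read the `story_start_words`, `story_words_per_epoch` and `story_separator` descriptions in the flag list is as a sliding window over the words that advances each epoch. The sketch below is that reading, not deep-daze's code: the function `story_windows` is hypothetical, and `max_words` is an assumed word-count stand-in for CLIP's 77-token limit (real tokens and words do not map one to one).

```python
def story_windows(text, story_start_words=5, story_words_per_epoch=5,
                  story_separator=None, max_words=20):
    """Return the prompt for each epoch of a hypothetical story-mode run.

    max_words is an assumed stand-in for CLIP's 77-token limit (words, not tokens).
    """
    if story_separator is not None and story_separator in text:
        # One group per epoch, split on the separator
        groups = [part.split() for part in text.split(story_separator) if part.strip()]
    else:
        # No usable separator: first epoch gets story_start_words words,
        # each later epoch adds story_words_per_epoch more
        words = text.split()
        groups = [words[:story_start_words]]
        for i in range(story_start_words, len(words), story_words_per_epoch):
            groups.append(words[i:i + story_words_per_epoch])
    window, prompts = [], []
    for group in groups:
        # Slide the window: append the new words, keep only the most recent max_words
        window = (window + group)[-max_words:]
        prompts.append(" ".join(window))
    return prompts


poem = "Whose woods these are I think I know. His house is in the village though;"
for epoch, prompt in enumerate(story_windows(poem, max_words=8)):
    print(epoch, prompt)
```

With `max_words=8`, the three epochs see "Whose woods these are I", then "these are I think I know. His house", then "know. His house is in the village though;".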

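Given the poem "Stopping by Woods on a Snowy Evening" by Robert Frost: "Whose woods these are I think I know. His house is in the village though; He will not see me stopping here To watch his woods fill up with snow. My little horse must think it queer To stop without a farmhouse near Between the woods and frozen lake The darkest evening of the year. He gives his harness bells a shake To ask if there is some mistake. The only other sound's the sweep Of easy wind and downy flake. The woods are lovely, dark and deep, But I have promises to keep, And miles to go before I sleep, And miles to go before I sleep."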

We get:

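Invoke `deep_daze.Imagine` in Python:

```python
from deep_daze import Imagine

imagine = Imagine(
    text='cosmic love and attention',
    num_layers=24,
)

imagine()
```

To save progress every fourth iteration, set `save_every=4` and `save_progress=True`. Images are saved in the format insert_text_here.00001.png, insert_text_here.00002.png, ..., one every `save_every` iterations.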

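```python
imagine = Imagine(
    text=text,
    save_every=4,
    save_progress=True,
)
```

To prepend the current timestamp to each image, also set `save_date_time=True`. This creates files with both the timestamp and the sequence number.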

For example: 210129-043928_328751_insert_text_here.00001.png, 210129-043928_512351_insert_text_here.00002.png, ...

```python
imagine = Imagine(
    text=text,
    save_every=4,
    save_progress=True,
    save_date_time=True,
)
```

If you have at least 16 GiB of VRAM available, you should be able to run these settings with some wiggle room:

```python
imagine = Imagine(
    text=text,
    num_layers=42,
    batch_size=64,
    gradient_accumulate_every=1,
)
```

A more moderate configuration:

```python
imagine = Imagine(
    text=text,
    num_layers=24,
    batch_size=16,
    gradient_accumulate_every=2,
)
```

If you are desperate to run this on a card with less than 8 GiB of VRAM, you can lower the `image_width`:

```python
imagine = Imagine(
    text=text,
    image_width=256,
    num_layers=16,
    batch_size=1,
    gradient_accumulate_every=16,  # Increase gradient_accumulate_every to correct for loss in low batch sizes
)
```

These experiments were conducted with an RTX 2060 Super and a 3700X Ryzen 5. We first list the parameters (bs = batch size), then the memory usage and, in some cases, the training iterations per second:

For an image resolution of 512:

For an image resolution of 256:

@NotNANtoN recommends a batch size of 32 with 44 layers and training for 1-8 epochs.
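The memory settings above trade `batch_size` against `gradient_accumulate_every`. Assuming deep-daze follows the usual gradient-accumulation pattern (summing scaled gradients from several small batches before one optimizer step), each update is based on about `batch_size × gradient_accumulate_every` samples: 64 × 1 = 64, 16 × 2 = 32 and 1 × 16 = 16 for the three configurations shown. The pure-Python sketch below, on a hypothetical one-parameter least-squares problem, shows why averaging the gradients of n equal-sized micro-batches reproduces the gradient of one large batch. It is not deep-daze's training loop.

```python
def grad_mse(w, batch):
    """Gradient of mean((w * x - y)^2) with respect to w over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w, data, batch_size, gradient_accumulate_every):
    """Combine micro-batch gradients as a single optimizer step would see them."""
    total = 0.0
    for k in range(gradient_accumulate_every):
        micro = data[k * batch_size:(k + 1) * batch_size]
        # Scale each micro-batch gradient so the sum is the average over micro-batches
        total += grad_mse(w, micro) / gradient_accumulate_every
    return total


data = [(x, 3.0 * x + 1.0) for x in range(16)]  # hypothetical samples
w = 0.5
full = grad_mse(w, data)  # one batch of 16 samples
accum = accumulated_grad(w, data, batch_size=1, gradient_accumulate_every=16)
print(full, accum)  # equal up to floating-point rounding
```

The equality relies on equal-sized micro-batches that together cover the same samples as the large batch; memory use is governed by the micro-batch, which is why a small `batch_size` with a larger `gradient_accumulate_every` fits on smaller cards.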

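This is just a teaser. We will be able to generate images, sound, anything at will, with natural language. The Holodeck is about to become real in our lifetimes.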

Please join the replication efforts for DALL-E for PyTorch or Mesh TensorFlow if you are interested in furthering this technology.

See also Big Sleep: CLIP combined with the generator from BigGAN.

```bibtex
@misc{unpublished2021clip,
    title  = {CLIP: Connecting Text and Images},
    author = {Alec Radford and Ilya Sutskever and Jong Wook Kim and Gretchen Krueger and Sandhini Agarwal},
    year   = {2021}
}

@misc{sitzmann2020implicit,
    title         = {Implicit Neural Representations with Periodic Activation Functions},
    author        = {Vincent Sitzmann and Julien N. P. Martel and Alexander W. Bergman and David B. Lindell and Gordon Wetzstein},
    year          = {2020},
    eprint        = {2006.09661},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}
```