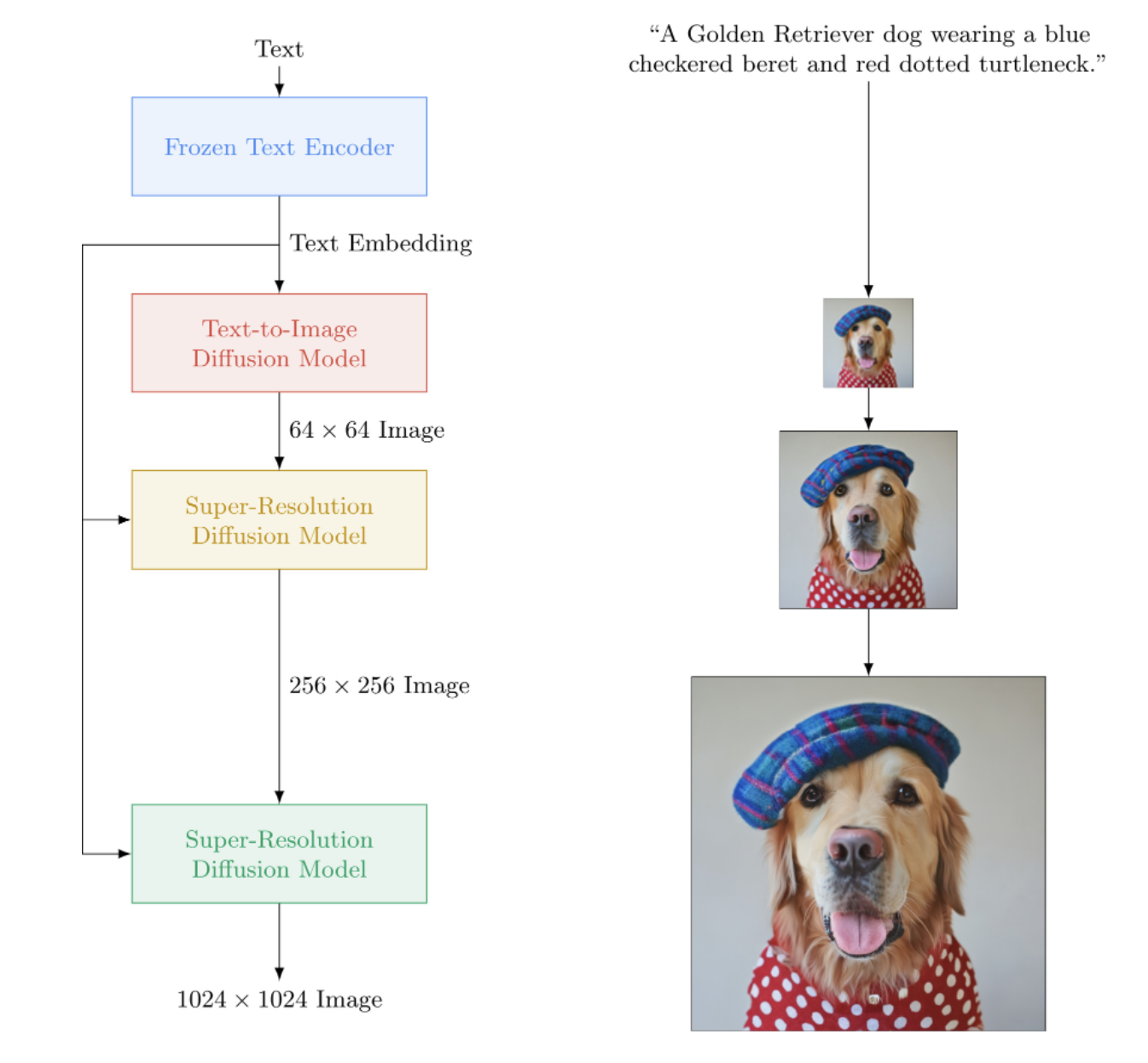

Implementation of Imagen, Google's text-to-image neural network that beats DALL-E2, in Pytorch. It is the new SOTA for text-to-image synthesis.

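Architecturally, it is actually much simpler than DALL-E2. It consists of a cascading DDPM conditioned on text embeddings from a large pretrained T5 model (attention network). It also contains dynamic clipping for improved classifier free guidance, noise level conditioning, and a memory efficient unet design.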
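To illustrate the dynamic clipping idea (called dynamic thresholding in the Imagen paper), here is a minimal sketch in plain Python on a flat list of predicted pixel values. The function name `dynamic_threshold`, the nearest-rank percentile, and the default of 0.9 are assumptions made for illustration; this is not the repository's implementation.

```python
import math

def dynamic_threshold(x0_pred, percentile = 0.9):
    """Clamp predicted pixels to [-s, s] and divide by s, where s is a
    percentile of the absolute values, floored at 1 (hypothetical helper)."""
    magnitudes = sorted(abs(v) for v in x0_pred)
    # nearest-rank percentile of the absolute pixel values
    rank = max(math.ceil(percentile * len(magnitudes)) - 1, 0)
    # never shrink values that already lie within [-1, 1]
    s = max(magnitudes[rank], 1.)
    return [max(-s, min(s, v)) / s for v in x0_pred]

# an over-saturated prediction gets pulled back into [-1, 1]
print(dynamic_threshold([0.5, -1.0, 2.0, -4.0], percentile = 0.75)) # [0.25, -0.5, 1.0, -1.0]
```

With a high guidance scale the predicted image tends to leave the valid pixel range, and rescaling by a per-sample percentile keeps it in range without hard-saturating every pixel.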

It appears neither CLIP nor a prior network is needed after all. And so research continues.

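AI Coffee Break with Letitia | Assembly AI | Yannic Kilcher

Please join the LAION community if you are interested in helping out with the replication.

StabilityAI for the generous sponsorship, as well as my other sponsors out there

Huggingface for their amazing transformers library. The text encoder portion is pretty much taken care of because of them

Jonathan Ho for bringing about a revolution in generative artificial intelligence through his seminal paper

Sylvain and Zachary for the Accelerate library, which this repository uses for distributed training

Alex for einops, an indispensable tool for tensor manipulation

Jorge Gomes for helping out with the T5 loading code and advice on the correct T5 version

Katherine Crowson, for her beautiful code, which helped me understand the continuous time version of gaussian diffusion

Marunine and Netruk44, for reviewing code, sharing experimental results, and help with debugging

Marunine for providing a potential solution for a color shifting issue in the memory efficient u-nets. Thanks to Jacob for sharing experimental comparisons between the base and memory-efficient unets

Marunine for finding numerous bugs, resolving an issue with correct resizing, and for sharing his experimental configurations and results

MalumaDev for proposing the use of a pixel shuffle upsampler to fix checkerboard artifacts

Valentin for pointing out insufficient skip connections in the unet, as well as the specific method of attention conditioning in the base-unet in the appendix

BIGJUN for catching a big bug with continuous time gaussian diffusion noise level conditioning at inference time

Bingbing for identifying a bug with sampling and the order of normalizing and noising with the low resolution conditioning image

Kay for contributing one line command training of Imagen!

Hadrien Reynaud for testing out text-to-video on a medical dataset, sharing his results, and identifying issues!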

```bash
$ pip install imagen-pytorch
```

```python
import torch
from imagen_pytorch import Unet, Imagen

# unet for imagen

unet1 = Unet(
    dim = 32,
    cond_dim = 512,
    dim_mults = (1, 2, 4, 8),
    num_resnet_blocks = 3,
    layer_attns = (False, True, True, True),
    layer_cross_attns = (False, True, True, True)
)

unet2 = Unet(
    dim = 32,
    cond_dim = 512,
    dim_mults = (1, 2, 4, 8),
    num_resnet_blocks = (2, 4, 8, 8),
    layer_attns = (False, False, False, True),
    layer_cross_attns = (False, False, False, True)
)

# imagen, which contains the unets above (base unet and super resoluting ones)

imagen = Imagen(
    unets = (unet1, unet2),
    image_sizes = (64, 256),
    timesteps = 1000,
    cond_drop_prob = 0.1
).cuda()

# mock images (get a lot of this) and text encodings from large T5

text_embeds = torch.randn(4, 256, 768).cuda()
images = torch.randn(4, 3, 256, 256).cuda()

# feed images into imagen, training each unet in the cascade

for i in (1, 2):
    loss = imagen(images, text_embeds = text_embeds, unet_number = i)
    loss.backward()

# do the above for many many many many steps
# now you can sample an image based on the text embeddings from the cascading ddpm

images = imagen.sample(texts = [
    'a whale breaching from afar',
    'young girl blowing out candles on her birthday cake',
    'fireworks with blue and green sparkles'
], cond_scale = 3.)

images.shape # (3, 3, 256, 256)
```

For simpler training, you can directly pass in text strings instead of precomputing text encodings. (Although for scaling purposes, you will definitely want to precompute the text embeddings + mask)

The number of text captions must match the batch size of the images if you go this route.

```python
# mock images and text (get a lot of this)

texts = [
    'a child screaming at finding a worm within a half-eaten apple',
    'lizard running across the desert on two feet',
    'waking up to a psychedelic landscape',
    'seashells sparkling in the shallow waters'
]

images = torch.randn(4, 3, 256, 256).cuda()

# feed images into imagen, training each unet in the cascade

for i in (1, 2):
    loss = imagen(images, texts = texts, unet_number = i)
    loss.backward()
```

With the ImagenTrainer wrapper class, the exponential moving averages for all of the U-nets in the cascading DDPM will be automatically taken care of when calling update
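As a rough illustration of what an exponential moving average of weights means, here is a minimal sketch in plain Python. The function name `ema_update` and the decay value `beta` are assumptions for illustration; the trainer's actual EMA logic and defaults are not shown here.

```python
def ema_update(ema_weights, online_weights, beta = 0.99):
    """Return beta * ema + (1 - beta) * online, elementwise (hypothetical helper)."""
    return [beta * e + (1. - beta) * w for e, w in zip(ema_weights, online_weights)]

# toy "weights": the online model sits at 1.0 while the EMA copy starts at 0.0
ema = [0., 0.]
online = [1., 1.]

for step in range(3):
    ema = ema_update(ema, online, beta = 0.5)

print(ema) # [0.875, 0.875]
```

The averaged copy lags behind the online weights and smooths out step-to-step noise, which is why EMA weights are commonly used for sampling.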

```python
import torch
from imagen_pytorch import Unet, Imagen, ImagenTrainer

# unet for imagen

unet1 = Unet(
    dim = 32,
    cond_dim = 512,
    dim_mults = (1, 2, 4, 8),
    num_resnet_blocks = 3,
    layer_attns = (False, True, True, True),
)

unet2 = Unet(
    dim = 32,
    cond_dim = 512,
    dim_mults = (1, 2, 4, 8),
    num_resnet_blocks = (2, 4, 8, 8),
    layer_attns = (False, False, False, True),
    layer_cross_attns = (False, False, False, True)
)

# imagen, which contains the unets above (base unet and super resoluting ones)

imagen = Imagen(
    unets = (unet1, unet2),
    text_encoder_name = 't5-large',
    image_sizes = (64, 256),
    timesteps = 1000,
    cond_drop_prob = 0.1
).cuda()

# wrap imagen with the trainer class

trainer = ImagenTrainer(imagen)

# mock images (get a lot of this) and text encodings from large T5

text_embeds = torch.randn(64, 256, 1024).cuda()
images = torch.randn(64, 3, 256, 256).cuda()

# feed images into imagen, training each unet in the cascade

loss = trainer(
    images,
    text_embeds = text_embeds,
    unet_number = 1,            # training on unet number 1 in this example, but you will have to also save checkpoints and then reload and continue training on unet number 2
    max_batch_size = 4          # auto divide the batch of 64 up into batch size of 4 and accumulate gradients, so it all fits in memory
)

trainer.update(unet_number = 1)

# do the above for many many many many steps
# now you can sample an image based on the text embeddings from the cascading ddpm

images = trainer.sample(texts = [
    'a puppy looking anxiously at a giant donut on the table',
    'the milky way galaxy in the style of monet'
], cond_scale = 3.)

images.shape # (2, 3, 256, 256)
```

You can also train Imagen without text (unconditional image generation) as follows

```python
import torch
from imagen_pytorch import Unet, Imagen, SRUnet256, ImagenTrainer

# unets for unconditional imagen

unet1 = Unet(
    dim = 32,
    dim_mults = (1, 2, 4),
    num_resnet_blocks = 3,
    layer_attns = (False, True, True),
    layer_cross_attns = False,
    use_linear_attn = True
)

unet2 = SRUnet256(
    dim = 32,
    dim_mults = (1, 2, 4),
    num_resnet_blocks = (2, 4, 8),
    layer_attns = (False, False, True),
    layer_cross_attns = False
)

# imagen, which contains the unets above (base unet and super resoluting ones)

imagen = Imagen(
    condition_on_text = False,   # this must be set to False for unconditional Imagen
    unets = (unet1, unet2),
    image_sizes = (64, 128),
    timesteps = 1000
)

trainer = ImagenTrainer(imagen).cuda()

# now get a ton of images and feed it through the Imagen trainer

training_images = torch.randn(4, 3, 256, 256).cuda()

# train each unet separately
# in this example, only training on unet number 1

loss = trainer(training_images, unet_number = 1)
trainer.update(unet_number = 1)

# do the above for many many many many steps
# now you can sample images unconditionally from the cascading unet(s)

images = trainer.sample(batch_size = 16) # (16, 3, 128, 128)
```

Or train only the super-resoluting unets

```python
import torch
from imagen_pytorch import Unet, NullUnet, Imagen

# unet for imagen

unet1 = NullUnet()  # add a placeholder "null" unet for the base unet

unet2 = Unet(
    dim = 32,
    cond_dim = 512,
    dim_mults = (1, 2, 4, 8),
    num_resnet_blocks = (2, 4, 8, 8),
    layer_attns = (False, False, False, True),
    layer_cross_attns = (False, False, False, True)
)

# imagen, which contains the unets above (base unet and super resoluting ones)

imagen = Imagen(
    unets = (unet1, unet2),
    image_sizes = (64, 256),
    timesteps = 250,
    cond_drop_prob = 0.1
).cuda()

# mock images (get a lot of this) and text encodings from large T5

text_embeds = torch.randn(4, 256, 768).cuda()
images = torch.randn(4, 3, 256, 256).cuda()

# feed images into imagen, training each unet in the cascade

loss = imagen(images, text_embeds = text_embeds, unet_number = 2)
loss.backward()

# do the above for many many many many steps
# now you can sample an image based on the text embeddings as well as low resolution images

lowres_images = torch.randn(3, 3, 64, 64).cuda()  # starting un-resoluted images

images = imagen.sample(
    texts = [
        'a whale breaching from afar',
        'young girl blowing out candles on her birthday cake',
        'fireworks with blue and green sparkles'
    ],
    start_at_unet_number = 2,              # start at unet number 2
    start_image_or_video = lowres_images,  # pass in low resolution images to be resoluted
    cond_scale = 3.)

images.shape # (3, 3, 256, 256)
```

At any time you can save and load the trainer and all associated state with the save and load methods. It is recommended you use these methods instead of manually saving with a state_dict call, as there is some device memory management being done underneath the hood within the trainer.

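For example:

```python
trainer.save('./path/to/checkpoint.pt')

trainer.load('./path/to/checkpoint.pt')

trainer.steps # (2,) step number for each of the unets, in this case 2
```

You can also rely on the ImagenTrainer to automatically train off DataLoader instances. You simply have to craft your DataLoader to return either images (for the unconditional case), or ('images', 'text_embeds') for text-guided generation.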

For example, unconditional training:

```python
from imagen_pytorch import Unet, Imagen, ImagenTrainer
from imagen_pytorch.data import Dataset

# unets for unconditional imagen

unet = Unet(
    dim = 32,
    dim_mults = (1, 2, 4, 8),
    num_resnet_blocks = 1,
    layer_attns = (False, False, False, True),
    layer_cross_attns = False
)

# imagen, which contains the unet above

imagen = Imagen(
    condition_on_text = False,  # this must be set to False for unconditional Imagen
    unets = unet,
    image_sizes = 128,
    timesteps = 1000
)

trainer = ImagenTrainer(
    imagen = imagen,
    split_valid_from_train = True # whether to split the validation dataset from the training
).cuda()

# instantiate your dataloader, which returns the necessary inputs to the DDPM as tuple in the order of images, text embeddings, then text masks. in this case, only images is returned as it is unconditional training

dataset = Dataset('/path/to/training/images', image_size = 128)

trainer.add_train_dataset(dataset, batch_size = 16)

# working training loop

for i in range(200000):
    loss = trainer.train_step(unet_number = 1, max_batch_size = 4)
    print(f'loss: {loss}')

    if not (i % 50):
        valid_loss = trainer.valid_step(unet_number = 1, max_batch_size = 4)
        print(f'valid loss: {valid_loss}')

    if not (i % 100) and trainer.is_main: # is_main makes sure this can run in distributed
        images = trainer.sample(batch_size = 1, return_pil_images = True) # returns List[Image]
        images[0].save(f'./sample-{i // 100}.png')
```

Thanks to Accelerate, you can do multi GPU training easily with two steps.

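First you need to invoke accelerate config in the same directory as your training script (say it is named train.py)

```bash
$ accelerate config
```

Next, instead of calling python train.py like you would for single GPU, you would use the accelerate CLI as so

```bash
$ accelerate launch train.py
```

That's it!

Imagen can also be used via CLI directly.

For example:

```bash
$ imagen config
```

or

```bash
$ imagen config --path ./configs/config.json
```

In the config you are able to change settings for the trainer, dataset and the imagen config.

The Imagen config parameters can be found here

The Elucidated Imagen config parameters can be found here

The Imagen Trainer config parameters can be found here

For the dataset parameters, all dataloader parameters can be used.

This command allows you to train or resume training your model

For example:

```bash
$ imagen train
```

or

```bash
$ imagen train --unet 2 --epoches 10
```

You can pass the following arguments to the training command.

- --config specify the config file to use for training [default: ./imagen_config.json]
- --unet the index of the unet to train [default: 1]
- --epoches how many epochs to train for [default: 50]

Be aware that when sampling, your checkpoint should have trained all unets to get a usable result.

For example:

```bash
$ imagen sample --model ./path/to/model/checkpoint.pt "a squirrel raiding the birdfeeder"
# image is saved to ./a_squirrel_raiding_the_birdfeeder.png
```

You can pass the following arguments to the sample command.

- --model specify the model file to use for sampling
- --cond_scale conditioning scale (classifier free guidance) in the decoder
- --load_ema load the EMA version of the unets if available

In order to use a saved checkpoint with this feature, you must either instantiate your Imagen instance using the config classes, ImagenConfig and ElucidatedImagenConfig, or create a checkpoint via the CLI directly

For proper training, you'll likely want to set up config-driven training anyways.

For example: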

```python
import torch
from imagen_pytorch import ImagenConfig, ElucidatedImagenConfig, ImagenTrainer

# in this example, using elucidated imagen

imagen = ElucidatedImagenConfig(
    unets = [
        dict(dim = 32, dim_mults = (1, 2, 4, 8)),
        dict(dim = 32, dim_mults = (1, 2, 4, 8))
    ],
    image_sizes = (64, 128),
    cond_drop_prob = 0.5,
    num_sample_steps = 32
).create()

trainer = ImagenTrainer(imagen)

# do your training ...

# then save it

trainer.save('./checkpoint.pt')

# you should see a message informing you that ./checkpoint.pt is commandable from the terminal
```

It really should be that simple

You can also pass this checkpoint file around, and anyone can continue finetuning on their own data

```python
from imagen_pytorch import load_imagen_from_checkpoint, ImagenTrainer

imagen = load_imagen_from_checkpoint('./checkpoint.pt')

trainer = ImagenTrainer(imagen)

# continue training / fine-tuning
```

Inpainting follows the formulation laid out by the recent Repaint paper. Simply pass in inpaint_images and inpaint_masks to the sample function on either Imagen or ElucidatedImagen
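The core blending step of the RePaint formulation can be sketched in plain Python as below. At each denoising step the known region is taken from a noised copy of the reference image and the rest from the model's own sample. The function name `repaint_blend` is hypothetical, and the mask polarity here (True means "keep the known pixel", as in the RePaint paper) is an assumption; check the library source for how `inpaint_masks` is interpreted.

```python
def repaint_blend(known_noised, generated, keep_mask):
    """Take the known (noised) pixel where keep_mask is True, else the generated one.
    Equivalent to m * known + (1 - m) * generated for a binary mask m."""
    return [k if m else g for k, g, m in zip(known_noised, generated, keep_mask)]

known_noised = [1., 2., 3., 4.]   # reference image, noised to the current step
generated    = [9., 8., 7., 6.]   # model sample at the current step
keep_mask    = [True, False, True, False]

print(repaint_blend(known_noised, generated, keep_mask)) # [1.0, 8.0, 3.0, 6.0]
```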

```python
inpaint_images = torch.randn(4, 3, 512, 512).cuda()      # (batch, channels, height, width)
inpaint_masks = torch.ones((4, 512, 512)).bool().cuda()  # (batch, height, width)

inpainted_images = trainer.sample(texts = [
    'a whale breaching from afar',
    'young girl blowing out candles on her birthday cake',
    'fireworks with blue and green sparkles',
    'dust motes swirling in the morning sunshine on the windowsill'
], inpaint_images = inpaint_images, inpaint_masks = inpaint_masks, cond_scale = 5.)

inpainted_images # (4, 3, 512, 512)
```

For video, similarly pass in your videos to the inpaint_videos keyword on .sample. The inpainting mask can either be the same across all frames (batch, height, width) or different per frame (batch, frames, height, width)

```python
inpaint_videos = torch.randn(4, 3, 8, 512, 512).cuda()      # (batch, channels, frames, height, width)
inpaint_masks = torch.ones((4, 8, 512, 512)).bool().cuda()  # (batch, frames, height, width)

inpainted_videos = trainer.sample(texts = [
    'a whale breaching from afar',
    'young girl blowing out candles on her birthday cake',
    'fireworks with blue and green sparkles',
    'dust motes swirling in the morning sunshine on the windowsill'
], inpaint_videos = inpaint_videos, inpaint_masks = inpaint_masks, cond_scale = 5.)

inpainted_videos # (4, 3, 8, 512, 512)
```

Tero Karras of StyleGAN fame has written a new paper with results that have been corroborated by a number of independent researchers as well as on my own machine. I have decided to create a version of Imagen, ElucidatedImagen, so that one can use the new elucidated DDPM for text-guided cascading generation.

Simply import ElucidatedImagen, and then instantiate the instance as you did before. The hyperparameters are different from the usual ones for discrete and continuous time gaussian diffusion, and can be individualized for each unet in the cascade.
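To make hyperparameters such as sigma_min, sigma_max, rho, P_mean and P_std more concrete, here is a minimal sketch in plain Python of how they enter the formulas of the Karras et al. paper: sampling noise levels are a rho-warped interpolation from sigma_max down to sigma_min, and training noise levels are drawn from a log-normal distribution. The function names are hypothetical and this illustrates the paper's formulas, not the ElucidatedImagen code.

```python
import math
import random

def karras_sigmas(num_sample_steps, sigma_min = 0.002, sigma_max = 80., rho = 7.):
    """Noise levels sigma_0 > ... > sigma_{N-1}, interpolated in sigma^(1/rho) space.
    Requires num_sample_steps >= 2."""
    inv_rho = 1. / rho
    hi, lo = sigma_max ** inv_rho, sigma_min ** inv_rho
    return [
        (hi + i / (num_sample_steps - 1) * (lo - hi)) ** rho
        for i in range(num_sample_steps)
    ]

def sample_training_sigma(rng, P_mean = -1.2, P_std = 1.2):
    """Draw a training noise level with ln(sigma) ~ Normal(P_mean, P_std^2)."""
    return math.exp(P_mean + P_std * rng.gauss(0., 1.))

sigmas = karras_sigmas(32)
print(sigmas[0], sigmas[-1])   # starts near sigma_max, ends near sigma_min

rng = random.Random(0)
print(sample_training_sigma(rng))
```

A larger rho concentrates more of the sampling steps at low noise levels, which is what "controls the sampling schedule" refers to in the configuration comments.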

For example:

```python
from imagen_pytorch import ElucidatedImagen

# instantiate your unets ...

imagen = ElucidatedImagen(
    unets = (unet1, unet2),
    image_sizes = (64, 128),
    cond_drop_prob = 0.1,
    num_sample_steps = (64, 32), # number of sample steps - 64 for base unet, 32 for upsampler (just an example, have no clue what the optimal values are)
    sigma_min = 0.002,           # min noise level
    sigma_max = (80, 160),       # max noise level, @crowsonkb recommends double the max noise level for upsampler
    sigma_data = 0.5,            # standard deviation of data distribution
    rho = 7,                     # controls the sampling schedule
    P_mean = -1.2,               # mean of log-normal distribution from which noise is drawn for training
    P_std = 1.2,                 # standard deviation of log-normal distribution from which noise is drawn for training
    S_churn = 80,                # parameters for stochastic sampling - depends on dataset, Table 5 in paper
    S_tmin = 0.05,
    S_tmax = 50,
    S_noise = 1.003,
).cuda()

# rest is the same as above
```

This repository will also start accumulating new research around text guided video synthesis. For starters it will adopt the 3d unet architecture described by Jonathan Ho in Video Diffusion Models

Update: verified working by Hadrien Reynaud!

For example:

```python
import torch
from imagen_pytorch import Unet3D, ElucidatedImagen, ImagenTrainer

unet1 = Unet3D(dim = 64, dim_mults = (1, 2, 4, 8)).cuda()

unet2 = Unet3D(dim = 64, dim_mults = (1, 2, 4, 8)).cuda()

# elucidated imagen, which contains the unets above (base unet and super resoluting ones)

imagen = ElucidatedImagen(
    unets = (unet1, unet2),
    image_sizes = (16, 32),
    random_crop_sizes = (None, 16),
    temporal_downsample_factor = (2, 1), # in this example, the first unet would receive the video temporally downsampled by 2x
    num_sample_steps = 10,
    cond_drop_prob = 0.1,
    sigma_min = 0.002,      # min noise level
    sigma_max = (80, 160),  # max noise level, double the max noise level for upsampler
    sigma_data = 0.5,       # standard deviation of data distribution
    rho = 7,                # controls the sampling schedule
    P_mean = -1.2,          # mean of log-normal distribution from which noise is drawn for training
    P_std = 1.2,            # standard deviation of log-normal distribution from which noise is drawn for training
    S_churn = 80,           # parameters for stochastic sampling - depends on dataset, Table 5 in paper
    S_tmin = 0.05,
    S_tmax = 50,
    S_noise = 1.003,
).cuda()

# mock videos (get a lot of this) and text encodings from large T5

texts = [
    'a whale breaching from afar',
    'young girl blowing out candles on her birthday cake',
    'fireworks with blue and green sparkles',
    'dust motes swirling in the morning sunshine on the windowsill'
]

videos = torch.randn(4, 3, 10, 32, 32).cuda() # (batch, channels, time / video frames, height, width)

# feed images into imagen, training each unet in the cascade
# for this example, only training unet 1

trainer = ImagenTrainer(imagen)

# you can also ignore time when training on video initially, shown to improve results in video-ddpm paper. eventually will make the 3d unet trainable with either images or video. research shows it is essential (with current data regimes) to train first on text-to-image. probably won't be true in another decade. all big data becomes small data

trainer(videos, texts = texts, unet_number = 1, ignore_time = False)
trainer.update(unet_number = 1)

videos = trainer.sample(texts = texts, video_frames = 20) # extrapolating to 20 frames from training on 10 frames

videos.shape # (4, 3, 20, 32, 32)
```

You can also train on text-image pairs first. The Unet3D will automatically convert them to single-frame videos and learn without the temporal components (by automatically setting ignore_time = True), whether those are 1d convolutions or causal attention across time.

This is the current approach taken by all the big artificial intelligence labs (Brain, MetaAI, Bytedance)

Imagen uses an algorithm called Classifier Free Guidance. When sampling, you apply a scale greater than 1.0 to the conditioning (text in this case).

Researcher Netruk44 has reported 5-10 to be optimal, with anything greater than 10 breaking.
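The usual classifier free guidance rule can be sketched in plain Python as follows: the model is evaluated once with the text condition and once without it, and the final prediction is pushed away from the unconditional one by the conditioning scale. The function name `guided_prediction` is hypothetical and the lists stand in for model outputs; this is a sketch of the general technique, not the library's code.

```python
def guided_prediction(cond_pred, uncond_pred, cond_scale):
    """uncond + cond_scale * (cond - uncond), elementwise.
    cond_scale = 1 returns the conditional prediction unchanged."""
    return [u + cond_scale * (c - u) for c, u in zip(cond_pred, uncond_pred)]

cond_pred   = [1., 2.]  # prediction given the text
uncond_pred = [0., 1.]  # prediction with the text dropped

print(guided_prediction(cond_pred, uncond_pred, cond_scale = 1.)) # [1.0, 2.0]
print(guided_prediction(cond_pred, uncond_pred, cond_scale = 3.)) # [3.0, 4.0]
```

The unconditional prediction is available because the condition is randomly dropped during training (the cond_drop_prob setting in the examples above). Scales above 1.0 extrapolate beyond the conditional prediction, which strengthens text adherence but can oversaturate the output if pushed too far.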

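```python
trainer.sample(texts = [
    'a cloud in the shape of a roman gladiator'
], cond_scale = 5.) # <-- cond_scale is the conditioning scale, needs to be greater than 1.0 to be better than average
```

Not yet, but one will likely be trained and open sourced within the year, if not sooner. If you would like to participate, you can join the community of artificial neural network trainers at Laion (the discord link is in the Readme above) and start collaborating.

All the more reason why you should start training your own model, starting today! The last thing we need is this technology being in the hands of an elite few. Hopefully this repository reduces the work to just finding the necessary compute, and augmenting with your own curated dataset.

Anything! It is MIT licensed. In other words, you can freely copy / paste for your own research, remixed for whatever modality you can think of. Go train amazing models for profit, for science, or simply to satiate your own personal pleasure at witnessing something divine unravel in front of you.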

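Echocardiogram synthesis [Code]

SOTA Hi-C contact matrix synthesis [Code]

Floor plan generation

Ultra High Resolution Histopathology Slides

Synthetic Laparoscopic Images

Designing Metamaterials

Audio Diffusion by Flavio Schneider

Mini Imagen by Ryan O. | AssemblyAI writeup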

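use huggingface transformers for T5-small text embeddings

add dynamic thresholding

add dynamic thresholding to the DALLE2 and video diffusion repositories as well

allow for one to set T5-large (and perhaps a small factory method to take in any huggingface transformer)

add the lowres noise level with the pseudocode in the appendix, and figure out what the sweep they do at inference time is

port over some training code from DALLE2

need to be able to use a different noise schedule per unet (cosine was used for base, but linear for SR)

just make one master-configurable unet

complete resnet block (biggan inspired? but with groupnorm) - complete self attention

complete conditioning embedding block (and make it completely configurable, whether it be attention, film etc)

consider using the perceiver-resampler from https://github.com/lucidrains/flamingo-pytorch in place of attention pooling

add attention pooling option, in addition to cross attention and film

add optional cosine decay schedule with warmup, for each unet, to the trainer

switch to continuous timesteps instead of discretized, as it seems that is what they used for all stages - first figure out the linear noise schedule case from the variational ddpm paper https://openreview.net/forum?id=2LdBqxc1Yv

figure out log(snr) for the alpha cosine noise schedule.

suppress the transformers warning because only the T5 encoder is used

allow setting for using linear attention on layers where full attention cannot be used

force unets in the continuous time case to use non-fouriered conditions (just pass the log(snr) through an MLP with optional layernorms), as that is what I have working locally

removed learned variance

add p2 loss weighting for continuous time

make sure cascading ddpm can be trained without text condition, and make sure both continuous and discrete time gaussian diffusion work

use primer's depthwise convs on the qkv projections in linear attention (or use token shifting before projections) - also use the new dropout proposed by bayesformer, as it seems to work well with linear attention

explore skip layer excitation in the unet decoder

accelerate integration

build out CLI tool and one-line generation of image

knock out any issues that arose from accelerate

add inpainting ability using the resampler from the repaint paper https://arxiv.org/abs/2201.09865

build a simple checkpointing system, backed by a folder

add skip connection from the outputs of all upsample blocks, used in the unet squared paper and some previous unet works

add fsspec, recommended by Romain @rom1504, for cloud / local file system agnostic persistence of checkpoints

test out persistence in gcs with https://github.com/fsspec/gcsfs

extend to video generation, using axial time attention as in Ho's video ddpm paper

allow elucidated imagen to generalize to any shape

allow for imagen to generalize to any shape

add dynamic positional bias for the best type of length extrapolation across video time

move video frames to the sample function, as we will be attempting time extrapolation

attention bias to null key / values should be a learned scalar of head dimension

add self-conditioning from the bit diffusion paper, already coded up at ddpm-pytorch

add v-parameterization (https://arxiv.org/abs/2202.00512) from the imagen video paper, the only thing new

incorporate all learnings from make-a-video (https://makeavideo.studio/)

build out CLI tool for training, resuming training from a config file

allow for temporal interpolation at specific stages

make sure temporal interpolation works with inpainting

make sure one can customize all interpolation modes (some researchers are finding better results with trilinear)

imagen-video : allow for conditioning on preceding (and possibly future) frames of videos. ignore time should not be allowed in that scenario

make sure to automatically take care of temporal down/upsampling for conditioning video frames, but allow for an option to turn it off

make sure inpainting works with video

make sure the inpainting mask for video can be customized per frame

add flash attention

reread cogvideo and figure out how frame rate conditioning could be used

bring in attention expertise for the self attention layers in unet3d

consider bringing in NUWA's 3d convolutional attention

consider transformer-xl memories in the temporal attention blocks

consider the perceiver approach to attending to past time

frame dropouts during attention for achieving both a regularizing effect as well as shortened training time

investigate Frank Wood's claims https://github.com/lucidrains/flexible-diffusion-modeling-videos-pytorch and either add the hierarchical sampling technique, or let people know about its deficiencies

offer challenging moving mnist (with distractor objects) as a one-line trainable baseline for researchers to branch off of for text to video

preencoding of text to memmapped embeddings

be able to create dataloader iterators based on the old epoch style, also configure shuffling etc

be able to also pass in arguments (instead of requiring forward to be all keyword args on the model)

bring in reversible blocks from revnets for the 3d unet, to lessen the memory burden

add ability to only train the super-resolution network

read dpm-solver and see if it is applicable to continuous time gaussian diffusion

allow for conditioning video frames with arbitrary absolute times (calculate RPE during temporal attention)

accommodate dreambooth fine tuning

add textual inversion

cleanup self conditioning to be extracted at imagen instantiation

make sure the eventual dreambooth works with imagen-video

add framerate conditioning for video diffusion

make sure one can simultaneously condition on video frames as a prompt, as well as on some conditioning image across all frames

test and add the distillation technique from consistency models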

@inproceedings { Saharia2022PhotorealisticTD ,

title = { Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding } ,

author = { Chitwan Saharia and William Chan and Saurabh Saxena and Lala Li and Jay Whang and Emily L. Denton and Seyed Kamyar Seyed Ghasemipour and Burcu Karagol Ayan and Seyedeh Sara Mahdavi and Raphael Gontijo Lopes and Tim Salimans and Jonathan Ho and David Fleet and Mohammad Norouzi } ,

year = { 2022 }

}

@article { Alayrac2022Flamingo ,

title = { Flamingo: a Visual Language Model for Few-Shot Learning } ,

author = { Jean-Baptiste Alayrac et al } ,

year = { 2022 }

}

@inproceedings { Sankararaman2022BayesFormerTW ,

title = { BayesFormer: Transformer with Uncertainty Estimation } ,

author = { Karthik Abinav Sankararaman and Sinong Wang and Han Fang } ,

year = { 2022 }

}

@article { So2021PrimerSF ,

title = { Primer: Searching for Efficient Transformers for Language Modeling } ,

author = { David R. So and Wojciech Ma{\'n}ke and Hanxiao Liu and Zihang Dai and Noam M. Shazeer and Quoc V. Le } ,

journal = { ArXiv } ,

year = { 2021 } ,

volume = { abs/2109.08668 }

}

@misc { cao2020global ,

title = { Global Context Networks } ,

author = { Yue Cao and Jiarui Xu and Stephen Lin and Fangyun Wei and Han Hu } ,

year = { 2020 } ,

eprint = { 2012.13375 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CV }

}

@article { Karras2022ElucidatingTD ,

title = { Elucidating the Design Space of Diffusion-Based Generative Models } ,

author = { Tero Karras and Miika Aittala and Timo Aila and Samuli Laine } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2206.00364 }

}

@inproceedings { NEURIPS2020_4c5bcfec ,

author = { Ho, Jonathan and Jain, Ajay and Abbeel, Pieter } ,

booktitle = { Advances in Neural Information Processing Systems } ,

editor = { H. Larochelle and M. Ranzato and R. Hadsell and M.F. Balcan and H. Lin } ,

pages = { 6840--6851 } ,

publisher = { Curran Associates, Inc. } ,

title = { Denoising Diffusion Probabilistic Models } ,

url = { https://proceedings.neurips.cc/paper/2020/file/4c5bcfec8584af0d967f1ab10179ca4b-Paper.pdf } ,

volume = { 33 } ,

year = { 2020 }

}

@article { Lugmayr2022RePaintIU ,

title = { RePaint: Inpainting using Denoising Diffusion Probabilistic Models } ,

author = { Andreas Lugmayr and Martin Danelljan and Andr{\'e}s Romero and Fisher Yu and Radu Timofte and Luc Van Gool } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2201.09865 }

}

@misc { ho2022video ,

title = { Video Diffusion Models } ,

author = { Jonathan Ho and Tim Salimans and Alexey Gritsenko and William Chan and Mohammad Norouzi and David J. Fleet } ,

year = { 2022 } ,

eprint = { 2204.03458 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CV }

}

@inproceedings { rogozhnikov2022einops ,

title = { Einops: Clear and Reliable Tensor Manipulations with Einstein-like Notation } ,

author = { Alex Rogozhnikov } ,

booktitle = { International Conference on Learning Representations } ,

year = { 2022 } ,

url = { https://openreview.net/forum?id=oapKSVM2bcj }

}

@misc { chen2022analog ,

title = { Analog Bits: Generating Discrete Data using Diffusion Models with Self-Conditioning } ,

author = { Ting Chen and Ruixiang Zhang and Geoffrey Hinton } ,

year = { 2022 } ,

eprint = { 2208.04202 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CV }

}

@misc { Singer2022 ,

author = { Uriel Singer } ,

url = { https://makeavideo.studio/Make-A-Video.pdf }

}

@article { Sunkara2022NoMS ,

title = { No More Strided Convolutions or Pooling: A New CNN Building Block for Low-Resolution Images and Small Objects } ,

author = { Raja Sunkara and Tie Luo } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2208.03641 }

}

@article { Salimans2022ProgressiveDF ,

title = { Progressive Distillation for Fast Sampling of Diffusion Models } ,

author = { Tim Salimans and Jonathan Ho } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2202.00512 }

}

@article { Ho2022ImagenVH ,

title = { Imagen Video: High Definition Video Generation with Diffusion Models } ,

author = { Jonathan Ho and William Chan and Chitwan Saharia and Jay Whang and Ruiqi Gao and Alexey A. Gritsenko and Diederik P. Kingma and Ben Poole and Mohammad Norouzi and David J. Fleet and Tim Salimans } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2210.02303 }

}

@misc { gilmer2023intriguing ,

title = { Intriguing Properties of Transformer Training Instabilities } ,

author = { Justin Gilmer and Andrea Schioppa and Jeremy Cohen } ,

year = { 2023 } ,

status = { to be published - one attention stabilization technique is circulating within Google Brain, being used by multiple teams }

}

@inproceedings { Hang2023EfficientDT ,

title = { Efficient Diffusion Training via Min-SNR Weighting Strategy } ,

author = { Tiankai Hang and Shuyang Gu and Chen Li and Jianmin Bao and Dong Chen and Han Hu and Xin Geng and Baining Guo } ,

year = { 2023 }

}

@article { Zhang2021TokenST ,

title = { Token Shift Transformer for Video Classification } ,

author = { Hao Zhang and Y. Hao and Chong-Wah Ngo } ,

journal = { Proceedings of the 29th ACM International Conference on Multimedia } ,

year = { 2021 }

}

@inproceedings { anonymous2022normformer ,

title = { NormFormer: Improved Transformer Pretraining with Extra Normalization } ,

author = { Anonymous } ,

booktitle = { Submitted to The Tenth International Conference on Learning Representations } ,

year = { 2022 } ,

url = { https://openreview.net/forum?id=GMYWzWztDx5 } ,

note = { under review }

}

@inproceedings { Sadat2024EliminatingOA ,

title = { Eliminating Oversaturation and Artifacts of High Guidance Scales in Diffusion Models } ,

author = { Seyedmorteza Sadat and Otmar Hilliges and Romann M. Weber } ,

year = { 2024 } ,

url = { https://api.semanticscholar.org/CorpusID:273098845 }

}