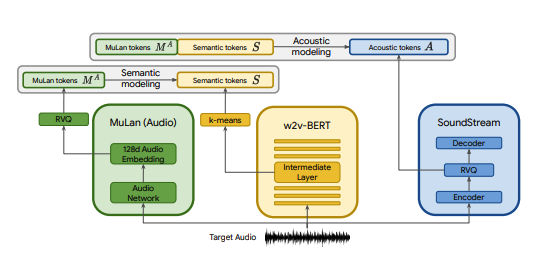

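PyTorch implementation of MusicLM, a SOTA text-to-music model published by Google, with a few modifications. We use CLAP as a replacement for MuLan, Encodec as a replacement for SoundStream, and MERT as a replacement for w2v-BERT.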

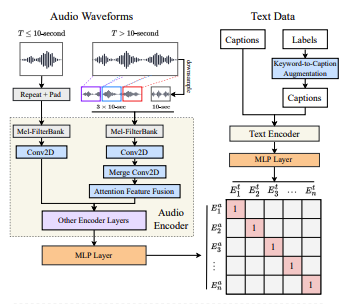

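CLAP is a joint audio-text model trained on LAION-Audio-630K. Like MuLan, it consists of an audio tower and a text tower that project their respective media onto a shared latent space (512 dimensions in CLAP vs. 128 dimensions in MuLan).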
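To make the "shared latent space" idea concrete, here is a minimal sketch in plain Python. It is not the CLAP API: it assumes both towers have already produced fixed-size vectors, and it L2-normalizes them so that a dot product equals cosine similarity. The toy 4-dimensional vectors stand in for real 512-dimensional CLAP embeddings, and `normalize` and `cosine_similarity` are hypothetical helpers.

```python
import math


def normalize(vec):
    """Scale a vector to unit L2 norm (hypothetical helper, not part of CLAP)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cosine_similarity(a, b):
    """Dot product of the two unit-norm vectors, i.e. their cosine similarity."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


# Toy embeddings standing in for CLAP's 512-dim outputs (values are made up).
text_embed = [0.9, 0.1, 0.0, 0.4]         # text tower output, e.g. "upbeat jazz piano"
jazz_audio_embed = [0.8, 0.2, 0.1, 0.5]   # audio tower output for a jazz clip
rain_audio_embed = [-0.1, 0.9, 0.7, 0.0]  # audio tower output for a rain recording

print(cosine_similarity(text_embed, jazz_audio_embed))  # higher: matching pair
print(cosine_similarity(text_embed, rain_audio_embed))  # lower: mismatched pair
```

Because both modalities land in the same space, a text prompt can be compared directly against any audio clip, which is what lets CLAP stand in for MuLan as the conditioning signal.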

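MuLan was trained on 50 million text-music pairs. Unfortunately, I don't have the data to replicate this, so I rely on CLAP's pretrained checkpoints to come close. CLAP was trained on a total of 2.6 million text-audio pairs from LAION-630k (~633k text-audio pairs) and AudioSet (2 million samples with captions generated by a keyword-to-caption model). Although this is only a fraction of the data used to train MuLan, we have successfully used CLAP to generate diverse music samples, which you can listen to here (keep in mind that these are very early results). If CLAP's latent space turns out not to be expressive enough for music generation, we can train CLAP on music or substitute @lucidrains' MuLan implementation once that model has been trained.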

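SoundStream and Encodec are both neural audio codecs that encode any waveform into a sequence of acoustic tokens, which can then be decoded back into a waveform resembling the original. These intermediate tokens can then be modeled as a seq2seq task. Encodec was released by Facebook and its pretrained checkpoints are publicly available, which is not the case for SoundStream.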
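As a rough illustration of what "a sequence of acoustic tokens" looks like in practice, the sketch below computes the token grid for a clip. It assumes the publicly released 24 kHz Encodec model, which downsamples by a factor of 320 (75 frames per second) and uses codebooks of 1024 entries (10 bits per token); the number of codebooks depends on the chosen bandwidth, e.g. 8 codebooks at 6 kbps. These numbers come from the Encodec release rather than from this repository's configs, and the helper names are hypothetical.

```python
import math

SAMPLE_RATE = 24_000   # Encodec 24 kHz model (assumption for this sketch)
HOP_LENGTH = 320       # encoder downsampling: 24_000 / 320 = 75 frames per second
CODEBOOK_SIZE = 1024   # entries per codebook, i.e. 10 bits per token


def acoustic_token_grid(duration_s, num_quantizers):
    """Return (num_quantizers, num_frames) for a clip of duration_s seconds."""
    num_frames = math.ceil(duration_s * SAMPLE_RATE / HOP_LENGTH)
    return num_quantizers, num_frames


def bitrate_bps(num_quantizers):
    """Bits per second carried by the token stream."""
    frames_per_second = SAMPLE_RATE / HOP_LENGTH
    bits_per_token = math.log2(CODEBOOK_SIZE)
    return frames_per_second * num_quantizers * bits_per_token


q, t = acoustic_token_grid(duration_s=4, num_quantizers=8)
print(q, t, q * t)     # 8 codebooks x 300 frames = 2400 tokens
print(bitrate_bps(8))  # 6000.0, i.e. the 6 kbps setting
```

Halving the number of codebooks halves both the token count and the bitrate, which is the quality/length trade-off that the number of quantizers controls.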

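The goal of this project is to replicate the results of MusicLM as quickly as possible, without necessarily sticking to the architecture in the paper. For those looking for a more faithful implementation, check out musiclm-pytorch.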

We also seek to gain a better understanding of CLAP's latent space.

Join us on Discord if you'd like to get involved!

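```shell
conda env create -f environment.yaml
conda activate open-musiclm
```

A "model config" contains information about the model architecture, such as the number of layers, the number of quantizers, and the target audio length for each stage. It is used to instantiate the model during training and inference.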

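A "training config" contains the hyperparameters used to train the model. It is used to instantiate the trainer classes during training.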

See the `./configs` directory for example configs.
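The split between the two kinds of config can be sketched as follows. This is a hypothetical illustration: the field names (`num_layers`, `num_quantizers`, `target_audio_seconds`, `lr`, `batch_size`) and the per-stage nesting are made up for the example and do not reflect the actual schema of the files in `./configs`; check those files for the real keys.

```python
import json
from dataclasses import dataclass


# Hypothetical schemas; the real configs in ./configs use their own keys.
@dataclass
class StageModelConfig:
    num_layers: int
    num_quantizers: int
    target_audio_seconds: float


@dataclass
class StageTrainingConfig:
    lr: float
    batch_size: int


def load_stage_configs(model_json, training_json, stage):
    """Parse both JSON documents and return (model_cfg, training_cfg) for one stage."""
    model_cfg = StageModelConfig(**json.loads(model_json)[stage])
    training_cfg = StageTrainingConfig(**json.loads(training_json)[stage])
    return model_cfg, training_cfg


model_json = '{"coarse": {"num_layers": 6, "num_quantizers": 3, "target_audio_seconds": 10.0}}'
training_json = '{"coarse": {"lr": 3e-4, "batch_size": 4}}'

model_cfg, training_cfg = load_stage_configs(model_json, training_json, "coarse")
print(model_cfg.num_layers, training_cfg.batch_size)
```

Keeping architecture and hyperparameters in separate files is what lets the inference scripts take only `--model_config`, while the training scripts take both `--model_config` and `--training_config`.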

The first step is to train the residual vector quantizer (RVQ) that maps continuous CLAP embeddings to a sequence of discrete tokens.
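For intuition, here is a minimal sketch of residual vector quantization in plain Python, assuming fixed toy codebooks (in training the codebooks are learned, which this sketch omits). Each quantizer picks the codeword nearest to the residual left by the previous quantizers, so one continuous embedding becomes one integer token per quantizer. The function names are illustrative, not this repository's API.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def rvq_encode(vec, codebooks):
    """Return one token index per codebook for a continuous vector."""
    residual = list(vec)
    tokens = []
    for codebook in codebooks:
        # Pick the codeword closest to whatever is still unexplained.
        idx = min(range(len(codebook)), key=lambda i: squared_distance(residual, codebook[i]))
        tokens.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return tokens


def rvq_decode(tokens, codebooks):
    """Sum the selected codewords to approximate the original vector."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(tokens, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Two toy quantizers with 3 codewords each; the second refines the first.
codebooks = [
    [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]],
    [[0.2, 0.0], [0.0, 0.2], [0.0, -0.2]],
]
embedding = [1.1, 0.25]  # stands in for a continuous CLAP embedding

tokens = rvq_encode(embedding, codebooks)
print(tokens)                         # [0, 1]
print(rvq_decode(tokens, codebooks))  # [1.0, 0.2]
```

Adding quantizers shrinks the reconstruction error step by step, which is why the number of quantizers in the model config directly trades token count against fidelity.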

```shell
# --results_folder:  where to save results and checkpoints
# --model_config:    path to the model config
# --training_config: path to the training config
python ./scripts/train_clap_rvq.py \
    --results_folder ./results/clap_rvq \
    --model_config ./configs/model/musiclm_small.json \
    --training_config ./configs/training/train_musiclm_fma.json
```

Next, we learn a K-means layer that we use to quantize MERT embeddings into semantic tokens.

```shell
# --results_folder: where to save results and checkpoints
python ./scripts/train_hubert_kmeans.py \
    --results_folder ./results/hubert_kmeans \
    --model_config ./configs/model/musiclm_small.json \
    --training_config ./configs/training/train_musiclm_fma.json
```

Once we have a working K-means and RVQ, we can train the semantic, coarse, and fine stages. These stages can be trained concurrently.
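The stages can presumably run in parallel because each one is trained with teacher forcing on tokens produced by the frozen tokenizers (CLAP + RVQ, MERT + K-means, Encodec), so no stage needs another stage's predictions during training. The sketch below shows how one clip's precomputed tokens could be split into per-stage (conditioning, target) pairs, following the MusicLM/AudioLM idea that the coarse stage predicts the first few Encodec codebooks and the fine stage the rest. The split point `NUM_COARSE_QUANTIZERS = 3`, the exact conditioning per stage, and all names are assumptions for illustration, not values read from this repository.

```python
NUM_COARSE_QUANTIZERS = 3  # assumption for this sketch, not read from ./configs


def stage_examples(clap_tokens, semantic_tokens, acoustic_tokens):
    """Build (conditioning, target) pairs for each stage from one clip.

    acoustic_tokens is a list of per-codebook token lists: [num_q][num_frames].
    """
    coarse = acoustic_tokens[:NUM_COARSE_QUANTIZERS]
    fine = acoustic_tokens[NUM_COARSE_QUANTIZERS:]
    return {
        # Semantic stage: CLAP tokens -> semantic tokens.
        "semantic": ((clap_tokens,), semantic_tokens),
        # Coarse stage: CLAP + semantic tokens -> first codebooks.
        "coarse": ((clap_tokens, semantic_tokens), coarse),
        # Fine stage: CLAP + coarse codebooks -> remaining codebooks.
        "fine": ((clap_tokens, coarse), fine),
    }


example = stage_examples(
    clap_tokens=[11, 42],          # toy RVQ-quantized CLAP tokens
    semantic_tokens=[3, 3, 7, 1],  # toy K-means ids
    acoustic_tokens=[[q * 10 + t for t in range(4)] for q in range(8)],  # 8 codebooks x 4 frames
)
for stage, (conditioning, target) in example.items():
    print(stage, len(conditioning), len(target))
```

Every target here is derived from the same ground-truth tokens rather than from another stage's output, so the three training loops are independent.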

```shell
# --results_folder: where to save results and checkpoints
# --rvq_path:       path to the previously trained RVQ checkpoint
# --kmeans_path:    path to the previously trained K-means checkpoint

python ./scripts/train_semantic_stage.py \
    --results_folder ./results/semantic \
    --model_config ./configs/model/musiclm_small.json \
    --training_config ./configs/training/train_musiclm_fma.json \
    --rvq_path PATH_TO_RVQ_CHECKPOINT \
    --kmeans_path PATH_TO_KMEANS_CHECKPOINT

python ./scripts/train_coarse_stage.py \
    --results_folder ./results/coarse \
    --model_config ./configs/model/musiclm_small.json \
    --training_config ./configs/training/train_musiclm_fma.json \
    --rvq_path PATH_TO_RVQ_CHECKPOINT \
    --kmeans_path PATH_TO_KMEANS_CHECKPOINT

python ./scripts/train_fine_stage.py \
    --results_folder ./results/fine \
    --model_config ./configs/model/musiclm_small.json \
    --training_config ./configs/training/train_musiclm_fma.json \
    --rvq_path PATH_TO_RVQ_CHECKPOINT \
    --kmeans_path PATH_TO_KMEANS_CHECKPOINT
```

In the example above, we use CLAP, Hubert, and Encodec to generate CLAP, semantic, and acoustic tokens on the fly during training. However, these models take up GPU memory, and recomputing the tokens is inefficient if we make multiple runs on the same data. Instead, we can compute these tokens ahead of time and iterate over them during training.
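The compute-once, reuse-many-times idea can be sketched as a simple on-disk cache. This is a hypothetical illustration, not `preprocess_data.py`: `tokenize_clip` stands in for the expensive CLAP/Hubert/Encodec forward passes, and the one-JSON-file-per-clip layout is an assumption chosen for readability.

```python
import json
import os
import tempfile


def tokenize_clip(clip_id):
    """Hypothetical stand-in for running CLAP, Hubert and Encodec on a GPU."""
    tokenize_clip.calls += 1
    return {"clap": [clip_id % 7], "semantic": [clip_id, clip_id + 1], "acoustic": [[clip_id, clip_id]]}


tokenize_clip.calls = 0  # counts how often the expensive models would run


def preprocess(clip_ids, cache_dir):
    """Tokenize each clip once and write the result to cache_dir."""
    for clip_id in clip_ids:
        path = os.path.join(cache_dir, f"{clip_id}.json")
        if os.path.exists(path):
            continue  # already tokenized by an earlier run
        with open(path, "w") as f:
            json.dump(tokenize_clip(clip_id), f)


def iterate_preprocessed(clip_ids, cache_dir):
    """Training side: read cached tokens, no tokenizer models needed."""
    for clip_id in clip_ids:
        with open(os.path.join(cache_dir, f"{clip_id}.json")) as f:
            yield json.load(f)


with tempfile.TemporaryDirectory() as cache_dir:
    clips = [1, 2, 3]
    preprocess(clips, cache_dir)
    preprocess(clips, cache_dir)  # a second run finds everything cached
    for epoch in range(3):        # every epoch reads from the cache
        batches = list(iterate_preprocessed(clips, cache_dir))

print(tokenize_clip.calls)  # 3: each clip was tokenized exactly once
```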

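To use preprocessed data, fill in the `data_preprocessor_cfg` field in the config and set `use_preprocessed_data` to `True` in the trainer configs (see `train_fma_preprocess.json` for an example). Then run the following command to preprocess the dataset, followed by your training script.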

```shell
# --rvq_path:    path to the previously trained RVQ checkpoint
# --kmeans_path: path to the previously trained K-means checkpoint
python ./scripts/preprocess_data.py \
    --model_config ./configs/model/musiclm_small.json \
    --training_config ./configs/training/train_fma_preprocess.json \
    --rvq_path PATH_TO_RVQ_CHECKPOINT \
    --kmeans_path PATH_TO_KMEANS_CHECKPOINT
```

Generate multiple samples and use CLAP to select the best ones:

```shell
# --num_samples:     number of samples to generate
# --num_top_matches: number of top matches to return
# --semantic_path, --coarse_path, --fine_path, --rvq_path, --kmeans_path:
#                    checkpoints of the previously trained stages, RVQ and K-means
python scripts/infer_top_match.py \
    "your text prompt" \
    --num_samples 4 \
    --num_top_matches 1 \
    --semantic_path PATH_TO_SEMANTIC_CHECKPOINT \
    --coarse_path PATH_TO_COARSE_CHECKPOINT \
    --fine_path PATH_TO_FINE_CHECKPOINT \
    --rvq_path PATH_TO_RVQ_CHECKPOINT \
    --kmeans_path PATH_TO_KMEANS_CHECKPOINT \
    --model_config ./configs/model/musiclm_small.json \
    --duration 4
```

Generate samples for a set of test prompts:

```shell
# --semantic_path, --coarse_path, --fine_path, --rvq_path, --kmeans_path:
#                    checkpoints of the previously trained stages, RVQ and K-means
python scripts/infer.py \
    --semantic_path PATH_TO_SEMANTIC_CHECKPOINT \
    --coarse_path PATH_TO_COARSE_CHECKPOINT \
    --fine_path PATH_TO_FINE_CHECKPOINT \
    --rvq_path PATH_TO_RVQ_CHECKPOINT \
    --kmeans_path PATH_TO_KMEANS_CHECKPOINT \
    --model_config ./configs/model/musiclm_small.json \
    --duration 4
```

You can use the `--return_coarse_wave` flag to skip the fine stage and reconstruct audio from the coarse tokens alone.
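The selection step in `infer_top_match.py` amounts to scoring each generated sample against the prompt in CLAP's shared space and keeping the best ones. Below is a minimal sketch, assuming the text and audio embeddings have already been computed (toy vectors here) and that samples are ranked by cosine similarity; `top_matches` is a hypothetical name and the script's actual scoring code may differ.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_matches(text_embed, sample_embeds, num_top_matches):
    """Return indices of the samples most similar to the prompt, best first."""
    scores = [cosine_similarity(text_embed, e) for e in sample_embeds]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:num_top_matches]


text_embed = [1.0, 0.0, 0.5]  # toy embedding of the text prompt
sample_embeds = [             # num_samples = 4 generated clips, embedded by the audio tower
    [0.0, 1.0, 0.0],
    [0.9, 0.1, 0.4],
    [0.5, 0.5, 0.5],
    [-1.0, 0.0, 0.0],
]
print(top_matches(text_embed, sample_embeds, num_top_matches=1))  # [1]
```

Generating several candidates and reranking them trades extra inference time for a better chance that at least one sample matches the prompt.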

You can download experimental checkpoints for the `musiclm_large_small_context` model here. To fine-tune the model, call the training scripts with the `--fine_tune_from` flag.

```bibtex
@inproceedings{Agostinelli2023MusicLMGM,
    title   = {MusicLM: Generating Music From Text},
    author  = {Andrea Agostinelli and Timo I. Denk and Zal{\'a}n Borsos and Jesse Engel and Mauro Verzetti and Antoine Caillon and Qingqing Huang and Aren Jansen and Adam Roberts and Marco Tagliasacchi and Matthew Sharifi and Neil Zeghidour and C. Frank},
    year    = {2023}
}

@article{wu2022large,
    title   = {Large-scale Contrastive Language-Audio Pretraining with Feature Fusion and Keyword-to-Caption Augmentation},
    author  = {Wu, Yusong and Chen, Ke and Zhang, Tianyu and Hui, Yuchen and Berg-Kirkpatrick, Taylor and Dubnov, Shlomo},
    journal = {arXiv preprint arXiv:2211.06687},
    year    = {2022}
}

@article{defossez2022highfi,
    title   = {High Fidelity Neural Audio Compression},
    author  = {Défossez, Alexandre and Copet, Jade and Synnaeve, Gabriel and Adi, Yossi},
    journal = {arXiv preprint arXiv:2210.13438},
    year    = {2022}
}

@misc{li2023mert,
    title         = {MERT: Acoustic Music Understanding Model with Large-Scale Self-supervised Training},
    author        = {Yizhi Li and Ruibin Yuan and Ge Zhang and Yinghao Ma and Xingran Chen and Hanzhi Yin and Chenghua Lin and Anton Ragni and Emmanouil Benetos and Norbert Gyenge and Roger Dannenberg and Ruibo Liu and Wenhu Chen and Gus Xia and Yemin Shi and Wenhao Huang and Yike Guo and Jie Fu},
    year          = {2023},
    eprint        = {2306.00107},
    archivePrefix = {arXiv},
    primaryClass  = {cs.SD}
}
```