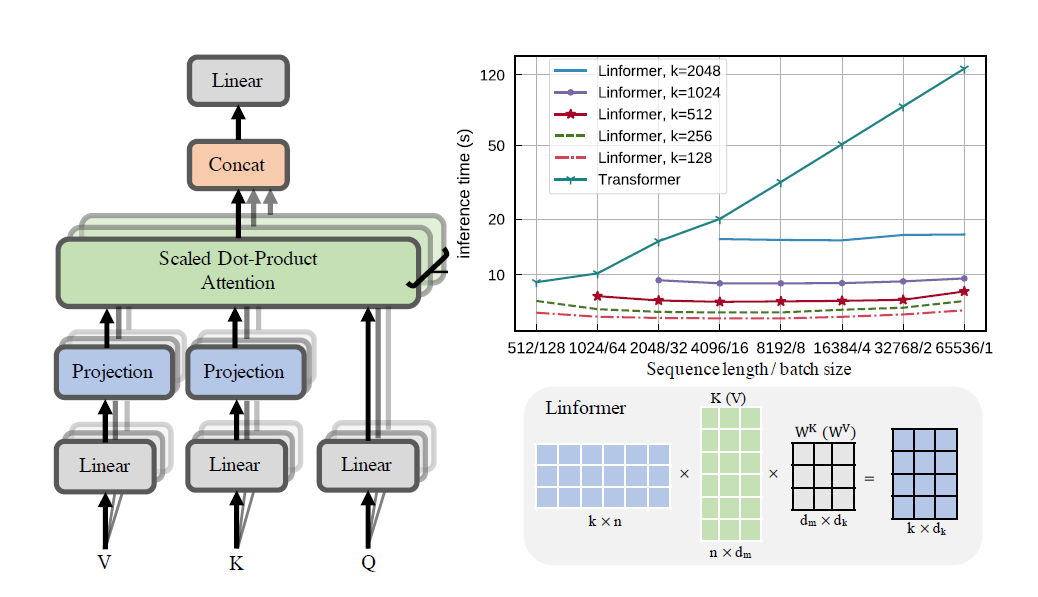

Linformer 论文的实际实现。这是仅具有 n 线性复杂度的关注,允许在现代硬件上关注非常长的序列长度(1mil+)。

这个仓库是一个 Attention Is All You Need 风格的转换器,配有编码器和解码器模块。这里的新颖之处在于,现在可以使注意力头呈线性。看看下面如何使用它。

这正在 wikitext-2 上进行验证。目前,它的性能与其他稀疏注意力机制(如 Sinkhorn Transformer)相同,但仍需找到最佳超参数。

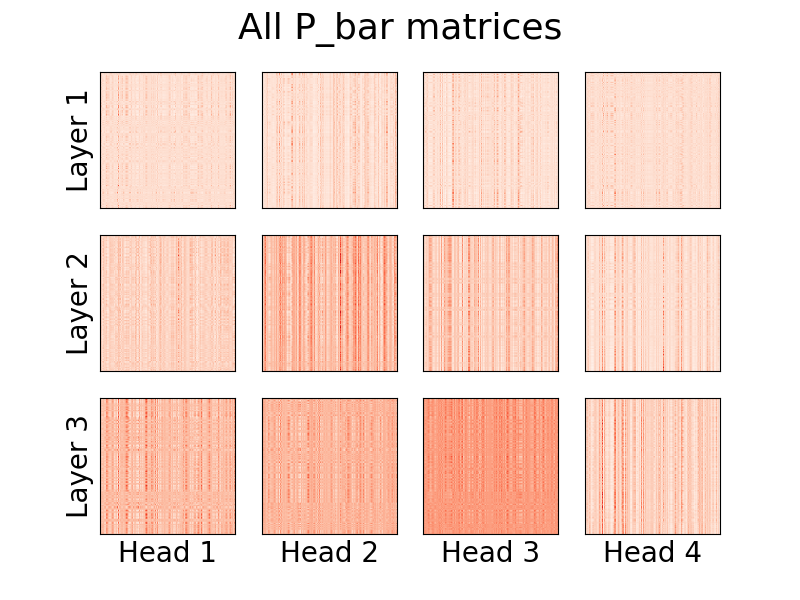

头部的可视化也是可能的。要查看更多信息,请查看下面的可视化部分。

我不是这篇论文的作者。

123 万个代币

pip install linformer-pytorch

或者,

git clone https://github.com/tatp22/linformer-pytorch.git

cd linformer-pytorch

Linformer 语言模型

from linformer_pytorch import LinformerLM

import torch

model = LinformerLM (

num_tokens = 10000 , # Number of tokens in the LM

input_size = 512 , # Dimension 1 of the input

channels = 64 , # Dimension 2 of the input

dim_d = None , # Overwrites the inner dim of the attention heads. If None, sticks with the recommended channels // nhead, as in the "Attention is all you need" paper

dim_k = 128 , # The second dimension of the P_bar matrix from the paper

dim_ff = 128 , # Dimension in the feed forward network

dropout_ff = 0.15 , # Dropout for feed forward network

nhead = 4 , # Number of attention heads

depth = 2 , # How many times to run the model

dropout = 0.1 , # How much dropout to apply to P_bar after softmax

activation = "gelu" , # What activation to use. Currently, only gelu and relu supported, and only on ff network.

use_pos_emb = True , # Whether or not to use positional embeddings

checkpoint_level = "C0" , # What checkpoint level to use. For more information, see below.

parameter_sharing = "layerwise" , # What level of parameter sharing to use. For more information, see below.

k_reduce_by_layer = 0 , # Going down `depth`, how much to reduce `dim_k` by, for the `E` and `F` matrices. Will have a minimum value of 1.

full_attention = False , # Use full attention instead, for O(n^2) time and space complexity. Included here just for comparison

include_ff = True , # Whether or not to include the Feed Forward layer

w_o_intermediate_dim = None , # If not None, have 2 w_o matrices, such that instead of `dim*nead,channels`, you have `dim*nhead,w_o_int`, and `w_o_int,channels`

emb_dim = 128 , # If you want the embedding dimension to be different than the channels for the Linformer

causal = False , # If you want this to be a causal Linformer, where the upper right of the P_bar matrix is masked out.

method = "learnable" , # The method of how to perform the projection. Supported methods are 'convolution', 'learnable', and 'no_params'

ff_intermediate = None , # See the section below for more information

). cuda ()

x = torch . randint ( 1 , 10000 ,( 1 , 512 )). cuda ()

y = model ( x )

print ( y ) # (1, 512, 10000) Linformer 自注意力, MHAttention和FeedForward的堆栈

from linformer_pytorch import Linformer

import torch

model = Linformer (

input_size = 262144 , # Dimension 1 of the input

channels = 64 , # Dimension 2 of the input

dim_d = None , # Overwrites the inner dim of the attention heads. If None, sticks with the recommended channels // nhead, as in the "Attention is all you need" paper

dim_k = 128 , # The second dimension of the P_bar matrix from the paper

dim_ff = 128 , # Dimension in the feed forward network

dropout_ff = 0.15 , # Dropout for feed forward network

nhead = 4 , # Number of attention heads

depth = 2 , # How many times to run the model

dropout = 0.1 , # How much dropout to apply to P_bar after softmax

activation = "gelu" , # What activation to use. Currently, only gelu and relu supported, and only on ff network.

checkpoint_level = "C0" , # What checkpoint level to use. For more information, see below.

parameter_sharing = "layerwise" , # What level of parameter sharing to use. For more information, see below.

k_reduce_by_layer = 0 , # Going down `depth`, how much to reduce `dim_k` by, for the `E` and `F` matrices. Will have a minimum value of 1.

full_attention = False , # Use full attention instead, for O(n^2) time and space complexity. Included here just for comparison

include_ff = True , # Whether or not to include the Feed Forward layer

w_o_intermediate_dim = None , # If not None, have 2 w_o matrices, such that instead of `dim*nead,channels`, you have `dim*nhead,w_o_int`, and `w_o_int,channels`

). cuda ()

x = torch . randn ( 1 , 262144 , 64 ). cuda ()

y = model ( x )

print ( y ) # (1, 262144, 64)Linformer 多头注意力

from linformer_pytorch import MHAttention

import torch

model = MHAttention (

input_size = 512 , # Dimension 1 of the input

channels = 64 , # Dimension 2 of the input

dim = 8 , # Dim of each attn head

dim_k = 128 , # What to sample the input length down to

nhead = 8 , # Number of heads

dropout = 0 , # Dropout for each of the heads

activation = "gelu" , # Activation after attention has been concat'd

checkpoint_level = "C2" , # If C2, checkpoint each of the heads

parameter_sharing = "layerwise" , # What level of parameter sharing to do

E_proj , F_proj , # The E and F projection matrices

full_attention = False , # Use full attention instead

w_o_intermediate_dim = None , # If not None, have 2 w_o matrices, such that instead of `dim*nead,channels`, you have `dim*nhead,w_o_int`, and `w_o_int,channels`

)

x = torch . randn ( 1 , 512 , 64 )

y = model ( x )

print ( y ) # (1, 512, 64)线性注意力头,论文的新颖之处

from linformer_pytorch import LinearAttentionHead

import torch

model = LinearAttentionHead (

dim = 64 , # Dim 2 of the input

dropout = 0.1 , # Dropout of the P matrix

E_proj , F_proj , # The E and F layers

full_attention = False , # Use Full Attention instead

)

x = torch . randn ( 1 , 512 , 64 )

y = model ( x , x , x )

print ( y ) # (1, 512, 64)编码器/解码器模块。

注意:对于因果序列,可以在LinformerLM中设置causal=True标志来屏蔽(n,k)注意力矩阵的右上角。

import torch

from linformer_pytorch import LinformerLM

encoder = LinformerLM (

num_tokens = 10000 ,

input_size = 512 ,

channels = 16 ,

dim_k = 16 ,

dim_ff = 32 ,

nhead = 4 ,

depth = 3 ,

activation = "relu" ,

k_reduce_by_layer = 1 ,

return_emb = True ,

)

decoder = LinformerLM (

num_tokens = 10000 ,

input_size = 512 ,

channels = 16 ,

dim_k = 16 ,

dim_ff = 32 ,

nhead = 4 ,

depth = 3 ,

activation = "relu" ,

decoder_mode = True ,

)

x = torch . randint ( 1 , 10000 ,( 1 , 512 ))

y = torch . randint ( 1 , 10000 ,( 1 , 512 ))

x_mask = torch . ones_like ( x ). bool ()

y_mask = torch . ones_like ( y ). bool ()

enc_output = encoder ( x , input_mask = x_mask )

print ( enc_output . shape ) # (1, 512, 128)

dec_output = decoder ( y , embeddings = enc_output , input_mask = y_mask , embeddings_mask = x_mask )

print ( dec_output . shape ) # (1, 512, 10000)获取E和F矩阵的简单方法可以通过调用get_EF函数来完成。例如,对于n为1000且k为100 :

from linfromer_pytorch import get_EF

import torch

E = get_EF ( 1000 , 100 )通过method标志,可以设置 linformer 执行下采样的方法。目前支持三种方式:

learnable :这种下采样方法创建了一个可学习的n,k nn.Linear模块。convolution :此下采样方法创建 1d 卷积,其步幅长度和内核大小n/k 。no_params :这将创建一个固定的n,k矩阵,其值来自 N(0,1/k)将来我可能会加入池化或其他东西。但就目前而言,这些都是存在的选择。

作为进一步引入内存节省的尝试,引入了检查点级别的概念。当前的三个检查点级别是C0 、 C1和C2 。当提升检查点级别时,人们会牺牲速度来节省内存。也就是说,检查点级别C0最快,但占用 GPU 上的空间最多,而C2最慢,但占用 GPU 上的空间最少。每个检查点级别的详细信息如下:

C0 :无检查点。模型运行的同时将所有注意力头和 ff 层保留在 GPU 内存中。C1 :检查每个 MultiHead 注意力以及每个 ff 层。这样,增加depth对记忆的影响应该是最小的。C2 :除了C1级别的优化外,还对每个 MultiHead Attention 层中的每个头进行检查点。这样,增加nhead对内存的影响应该较小。然而,将头部与torch.cat连接在一起仍然会占用大量内存,这有望在未来得到优化。性能细节仍然未知,但对于想要尝试的用户来说,该选项是存在的。

论文中引入内存节省的另一个尝试是引入投影之间的参数共享。论文第 4 节提到了这一点;特别是,作者讨论了 4 种不同类型的参数共享,并且所有类型都已在此存储库中实现。第一个选项占用最多的内存,每个选项都会减少必要的内存需求。

none :这不是参数共享。对于每个头和每一层,为每层的每个头计算一个新的E和一个新的F矩阵。headwise :每层都有唯一的E和F矩阵。该层中的所有头共享该矩阵。kv :每层都有唯一的投影矩阵P ,并且每层E = F = P 。所有头共享该投影矩阵P 。layerwise :有一个投影矩阵P ,每一层的每个头都使用E = F = P 。正如本文开始的,这意味着对于 12 层、12 头的网络,将分别有288 、 24 、 12和1不同的投影矩阵。

请注意,使用k_reduce_by_layer选项时, layerwise选项将无效,因为它将使用第一层的k维度。因此,如果k_reduce_by_layer值大于0 ,则很可能不使用layerwise共享选项。

另外,请注意,根据作者的说法,在图 3 中,这种参数共享实际上并没有对最终结果产生太大影响。因此,最好坚持对所有内容进行layerwise共享,但用户也可以尝试一下。

Linformer 当前实现的一个小问题是序列长度必须与模型的input_size标志匹配。 Padder 填充输入大小,以便张量可以馈送到网络中。一个例子:

from linformer_pytorch import Linformer , Padder

import torch

model = Linformer (

input_size = 512 ,

channels = 16 ,

dim_d = 32 ,

dim_k = 16 ,

dim_ff = 32 ,

nhead = 6 ,

depth = 3 ,

checkpoint_level = "C1" ,

)

model = Padder ( model )

x = torch . randn ( 1 , 500 , 16 ) # This does not match the input size!

y = model ( x )

print ( y ) # (1, 500, 16)

从版本0.8.0开始,现在可以可视化 linformer 的注意力头了!要查看实际情况,只需导入Visualizer类,然后运行plot_all_heads()函数即可查看每个级别的所有注意力头的图片,大小为 (n,k)。确保在前向传递中指定visualize=True ,因为这会保存P_bar矩阵,以便Visualizer类可以正确可视化头部。

下面可以找到该代码的工作示例,并且可以在./examples/example_vis.py中找到相同的代码:

import torch

from linformer_pytorch import Linformer , Visualizer

model = Linformer (

input_size = 512 ,

channels = 16 ,

dim_k = 128 ,

dim_ff = 32 ,

nhead = 4 ,

depth = 3 ,

activation = "relu" ,

checkpoint_level = "C0" ,

parameter_sharing = "layerwise" ,

k_reduce_by_layer = 1 ,

)

# One can load the model weights here

x = torch . randn ( 1 , 512 , 16 ) # What input you want to visualize

y = model ( x , visualize = True )

vis = Visualizer ( model )

vis . plot_all_heads ( title = "All P_bar matrices" , # Change the title if you'd like

show = True , # Show the picture

save_file = "./heads.png" , # If not None, save the picture to a file

figsize = ( 8 , 6 ), # How big the figure should be

n_limit = None # If not None, limit how much from the `n` dimension to show

)这些头的含义的详细解释可以在 #15 中找到。

与 Reformer 类似,我将尝试制作一个编码器/解码器模块,以便简化训练。这就像 2 个LinformerLM类一样。每个参数都可以单独调整,编码器的所有超参数都具有enc_前缀,解码器以类似的方式具有dec_前缀。到目前为止,实现的是:

import torch

from linformer_pytorch import LinformerEncDec

encdec = LinformerEncDec (

enc_num_tokens = 10000 ,

enc_input_size = 512 ,

enc_channels = 16 ,

dec_num_tokens = 10000 ,

dec_input_size = 512 ,

dec_channels = 16 ,

)

x = torch . randint ( 1 , 10000 ,( 1 , 512 ))

y = torch . randint ( 1 , 10000 ,( 1 , 512 ))

output = encdec ( x , y )我计划有一种方法为此生成文本序列。

ff_intermediate调整现在,中间层的模型维度可以不同。此更改适用于 ff 模块,并且仅适用于编码器。现在,如果标志ff_intermediate不是 None,则层将如下所示:

channels -> ff_dim -> ff_intermediate (For layer 1)

ff_intermediate -> ff_dim -> ff_intermediate (For layers 2 to depth-1)

ff_intermediate -> ff_dim -> channels (For layer depth)

相对于

channels -> ff_dim -> channels (For all layers)

input_size和dim_k进行编辑。apex这样的库应该可以使用它,但是,在实践中,它还没有经过测试。input_size 、 k= dim_k和 d= dim_d 。 LinformerEncDec课程这是我第一次从论文中复制结果,所以有些事情可能是错误的。如果您发现问题,请提出问题,我将尝试解决它。

感谢 lucidrains,他们的其他稀疏注意力存储库帮助我设计了这个 Linformer Repo。

@misc { wang2020linformer ,

title = { Linformer: Self-Attention with Linear Complexity } ,

author = { Sinong Wang and Belinda Z. Li and Madian Khabsa and Han Fang and Hao Ma } ,

year = { 2020 } ,

eprint = { 2006.04768 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.LG }

} @inproceedings { vaswani2017attention ,

title = { Attention is all you need } ,

author = { Vaswani, Ashish and Shazeer, Noam and Parmar, Niki and Uszkoreit, Jakob and Jones, Llion and Gomez, Aidan N and Kaiser, {L}ukasz and Polosukhin, Illia } ,

booktitle = { Advances in neural information processing systems } ,

pages = { 5998--6008 } ,

year = { 2017 }

}“专心听……”