flash attention jax

0.3.1

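An implementation of Flash Attention in Jax. It will likely not be as performant as the official CUDA version, given the lack of fine-grained memory management. It exists for educational purposes, and to see how clever the XLA compiler is (or is not).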
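To make the idea concrete, here is a minimal sketch of the chunked, online-softmax computation that Flash Attention builds on. It is not this library's implementation: the names `plain_attention_ref` and `chunked_attention`, the `chunk_size` parameter, the single-head `(seq, dim)` layout, and the requirement that the key length be divisible by the chunk size are all assumptions made for illustration.

```python
import jax
import jax.numpy as jnp
from jax import lax, random


def plain_attention_ref(q, k, v):
    # Reference version: materializes the full (seq_q, seq_k) score matrix.
    scale = q.shape[-1] ** -0.5
    scores = (q @ k.T) * scale
    return jax.nn.softmax(scores, axis=-1) @ v


def chunked_attention(q, k, v, chunk_size=64):
    # Hypothetical helper. Assumes k.shape[0] is divisible by chunk_size.
    seq_q = q.shape[0]
    seq_k, dim = k.shape
    scale = dim ** -0.5
    k_chunks = k.reshape(seq_k // chunk_size, chunk_size, dim)
    v_chunks = v.reshape(seq_k // chunk_size, chunk_size, v.shape[-1])

    def step(carry, kv):
        acc, row_sum, row_max = carry
        k_c, v_c = kv
        scores = (q @ k_c.T) * scale                  # (seq_q, chunk_size)
        new_max = jnp.maximum(row_max, scores.max(axis=-1))
        correction = jnp.exp(row_max - new_max)       # rescales earlier chunks
        p = jnp.exp(scores - new_max[:, None])        # unnormalized weights
        acc = acc * correction[:, None] + p @ v_c
        row_sum = row_sum * correction + p.sum(axis=-1)
        return (acc, row_sum, new_max), None

    init = (
        jnp.zeros((seq_q, v.shape[-1]), dtype=q.dtype),   # weighted value sum
        jnp.zeros((seq_q,), dtype=q.dtype),               # softmax denominator
        jnp.full((seq_q,), -jnp.inf, dtype=q.dtype),      # running row maximum
    )
    (acc, row_sum, _), _ = lax.scan(step, init, (k_chunks, v_chunks))
    return acc / row_sum[:, None]


q_key, k_key, v_key = random.split(random.PRNGKey(0), 3)
q = random.normal(q_key, (32, 16))
k = random.normal(k_key, (256, 16))
v = random.normal(v_key, (256, 16))

max_diff = jnp.abs(plain_attention_ref(q, k, v) - chunked_attention(q, k, v)).max()
```

The scan only ever holds a `(seq_q, chunk_size)` block of scores, while the running maximum and running sum keep the result equal to the full softmax up to floating-point error. The algorithm in the paper additionally tiles the queries and recomputes blocks in the backward pass, which this sketch leaves out.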

$ pip install flash-attention-jax

from jax import random
from flash_attention_jax import flash_attention

rng_key = random.PRNGKey(42)
q_key, k_key, v_key, mask_key = random.split(rng_key, 4)  # separate keys so q, k, v differ

q = random.normal(q_key, (1, 2, 131072, 512))  # (batch, heads, seq, dim)
k = random.normal(k_key, (1, 2, 131072, 512))
v = random.normal(v_key, (1, 2, 131072, 512))
mask = random.randint(mask_key, (1, 131072), 0, 2)  # (batch, seq)

out, _ = flash_attention(q, k, v, mask)

out.shape  # (1, 2, 131072, 512) - (batch, heads, seq, dim)

Quick sanity check

from flash_attention_jax import plain_attention, flash_attention, value_and_grad_difference

diff, (dq_diff, dk_diff, dv_diff) = value_and_grad_difference(
    plain_attention,
    flash_attention,
    seed=42
)

print('shows differences between normal and flash attention for output, dq, dk, dv')
print(f'o: {diff}')      # < 1e-4
print(f'dq: {dq_diff}')  # < 1e-6
print(f'dk: {dk_diff}')  # < 1e-6
print(f'dv: {dv_diff}')  # < 1e-6

Autoregressive Flash Attention - GPT-like decoder attention
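For reference, "autoregressive" here means that each position may attend only to itself and to earlier positions. The following is a minimal, non-tiled sketch of that behavior, assuming the usual `1/sqrt(dim)` scaling and a single `(seq, dim)` head. The function name `plain_causal_attention` is hypothetical and is not part of this library.

```python
import jax
import jax.numpy as jnp
from jax import random


def plain_causal_attention(q, k, v):
    # q, k, v: (seq, dim). Query i may only attend to keys j <= i.
    seq_len, dim = q.shape
    scores = (q @ k.T) * dim ** -0.5
    causal_mask = jnp.tril(jnp.ones((seq_len, seq_len), dtype=bool))
    # Masked-out (future) positions get the most negative finite value,
    # so they receive zero weight after the softmax.
    scores = jnp.where(causal_mask, scores, jnp.finfo(scores.dtype).min)
    return jax.nn.softmax(scores, axis=-1) @ v


q_key, k_key, v_key = random.split(random.PRNGKey(0), 3)
q = random.normal(q_key, (8, 4))
k = random.normal(k_key, (8, 4))
v = random.normal(v_key, (8, 4))

out = plain_causal_attention(q, k, v)
```

Because the first position can only see itself, the first output row equals `v[0]`, which makes a convenient spot check. This version materializes the full `(seq, seq)` matrix, so it is only practical for short sequences.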

from jax import random
from flash_attention_jax import causal_flash_attention

rng_key = random.PRNGKey(42)
q_key, k_key, v_key = random.split(rng_key, 3)  # separate keys so q, k, v differ

q = random.normal(q_key, (131072, 512))
k = random.normal(k_key, (131072, 512))
v = random.normal(v_key, (131072, 512))

out, _ = causal_flash_attention(q, k, v)

out.shape  # (131072, 512)

leading dimensions for causal flash attention variant

figure out issue with jit and static argnums

comment with references to paper algorithms and explanations

make sure it can work with one-headed key / values, as in PaLM
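The last item refers to sharing a single key/value head across all query heads, as PaLM does (often called multi-query attention). A minimal, non-tiled sketch of what that computation looks like, assuming a `(heads, seq, dim)` query layout and `(seq, dim)` keys and values; `multi_query_attention` is a hypothetical name, and nothing here implies the library currently supports this.

```python
import jax
import jax.numpy as jnp
from jax import random


def multi_query_attention(q, k, v):
    # q: (heads, seq, dim); k, v: (seq, dim), shared by every query head.
    scale = q.shape[-1] ** -0.5
    scores = jnp.einsum('hid,jd->hij', q, k) * scale
    attn = jax.nn.softmax(scores, axis=-1)
    return jnp.einsum('hij,jd->hid', attn, v)


q_key, k_key, v_key = random.split(random.PRNGKey(0), 3)
q = random.normal(q_key, (2, 16, 8))
k = random.normal(k_key, (16, 8))
v = random.normal(v_key, (16, 8))

out = multi_query_attention(q, k, v)  # shape (2, 16, 8)
```

The einsum leaves out the head axis on `k` and `v`, so the shared keys and values are never copied per head.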

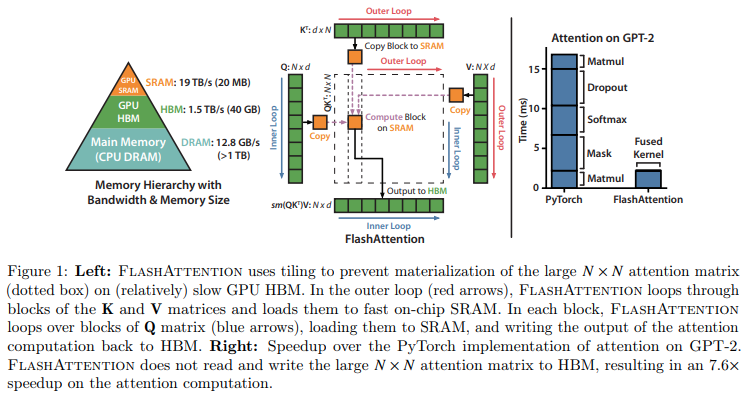

@article{Dao2022FlashAttentionFA,
    title   = {FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness},
    author  = {Tri Dao and Daniel Y. Fu and Stefano Ermon and Atri Rudra and Christopher R{\'e}},
    journal = {ArXiv},
    year    = {2022},
    volume  = {abs/2205.14135}
}

@article{Rabe2021SelfattentionDN,
    title   = {Self-attention Does Not Need $O(n^2)$ Memory},
    author  = {Markus N. Rabe and Charles Staats},
    journal = {ArXiv},
    year    = {2021},
    volume  = {abs/2112.05682}
}