plip

1.0.0

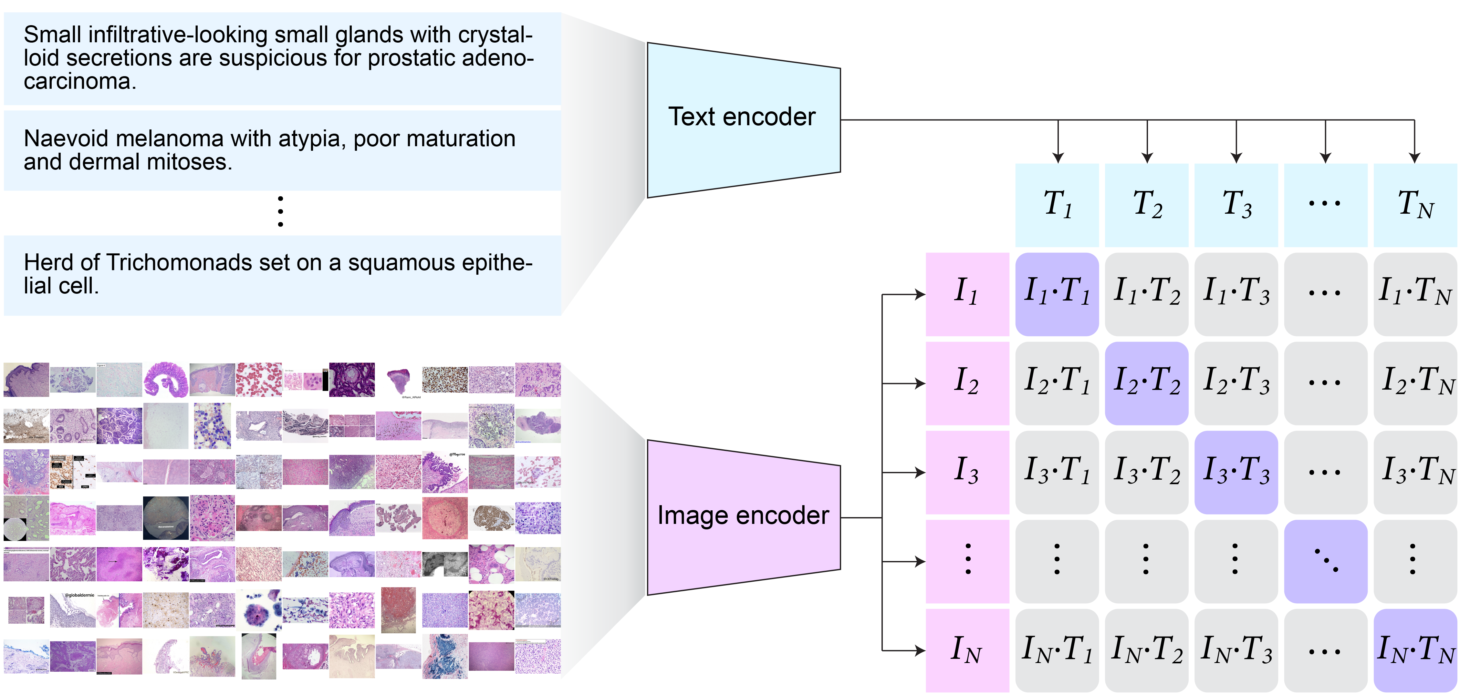

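Pathology Language and Image Pre-Training (PLIP) is the first vision and language foundation model for pathology AI. PLIP is a large-scale pre-trained model that can be used to extract visual and language features from pathology images and text descriptions. The model is a fine-tuned version of the original CLIP model.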

from plip.plip import PLIP
import numpy as np

plip = PLIP('vinid/plip')

# `images` and `texts` are not defined in this snippet; they are assumed to be
# a list of pathology images and a list of text descriptions

# we create image embeddings and text embeddings
image_embeddings = plip.encode_images(images, batch_size=32)
text_embeddings = plip.encode_text(texts, batch_size=32)

# we normalize the embeddings to unit norm (so that we can use dot product instead of cosine similarity to do comparisons)
image_embeddings = image_embeddings / np.linalg.norm(image_embeddings, ord=2, axis=-1, keepdims=True)

text_embeddings = text_embeddings / np.linalg.norm(text_embeddings, ord=2, axis=-1, keepdims=True)

from PIL import Image

from transformers import CLIPProcessor, CLIPModel

model = CLIPModel.from_pretrained("vinid/plip")
processor = CLIPProcessor.from_pretrained("vinid/plip")

image = Image.open("images/image1.jpg")

inputs = processor(text=["a photo of label 1", "a photo of label 2"],
                   images=image, return_tensors="pt", padding=True)

outputs = model(**inputs)
logits_per_image = outputs.logits_per_image  # this is the image-text similarity score
probs = logits_per_image.softmax(dim=1)
print(probs)

image.resize((224, 224))  # returns a resized copy; `image` itself is unchanged

If you use PLIP in your research, please cite the following paper:

@article{huang2023visual,
  title={A visual--language foundation model for pathology image analysis using medical Twitter},
  author={Huang, Zhi and Bianchi, Federico and Yuksekgonul, Mert and Montine, Thomas J and Zou, James},
  journal={Nature Medicine},
  pages={1--10},
  year={2023},
  publisher={Nature Publishing Group US New York}

}

The internal API is copied from FashionCLIP.
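
Both usage snippets rest on the same arithmetic: once embeddings are scaled to unit norm, a dot product equals cosine similarity, and a softmax over those similarities yields per-text probabilities for each image. The following is a minimal NumPy sketch of that arithmetic only. It uses small made-up vectors in place of real PLIP embeddings, and the helper names `l2_normalize` and `softmax`, the toy values, and the `logit_scale` of 100 are illustrative assumptions, not part of the PLIP package.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit L2 norm."""
    return x / np.linalg.norm(x, ord=2, axis=-1, keepdims=True)


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


# Toy stand-ins for model outputs: 2 "images" and 3 "texts" in 4 dimensions.
image_embeddings = np.array([[1.0, 2.0, 0.0, 1.0],
                             [0.0, 1.0, 3.0, 0.5]])
text_embeddings = np.array([[2.0, 4.0, 0.0, 2.0],     # parallel to image 0
                            [0.0, 0.5, 1.5, 0.25],    # parallel to image 1
                            [1.0, 0.0, 0.0, -1.0]])   # orthogonal to image 0

image_unit = l2_normalize(image_embeddings)
text_unit = l2_normalize(text_embeddings)

# For unit vectors, the dot product is the cosine similarity.
similarity = image_unit @ text_unit.T  # shape: (n_images, n_texts)

# Index of the most similar text for each image.
best_text = similarity.argmax(axis=1)

# Assumed scale factor; CLIP-style models learn this value during training.
logit_scale = 100.0
probs = softmax(logit_scale * similarity, axis=1)

print(similarity.round(3))
print(best_text)
print(probs.round(3))
```

Because the rows are unit vectors, a single matrix product compares every image against every text at once, which is why the normalization step is worth doing up front.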