nGPT pytorch

0.2.7

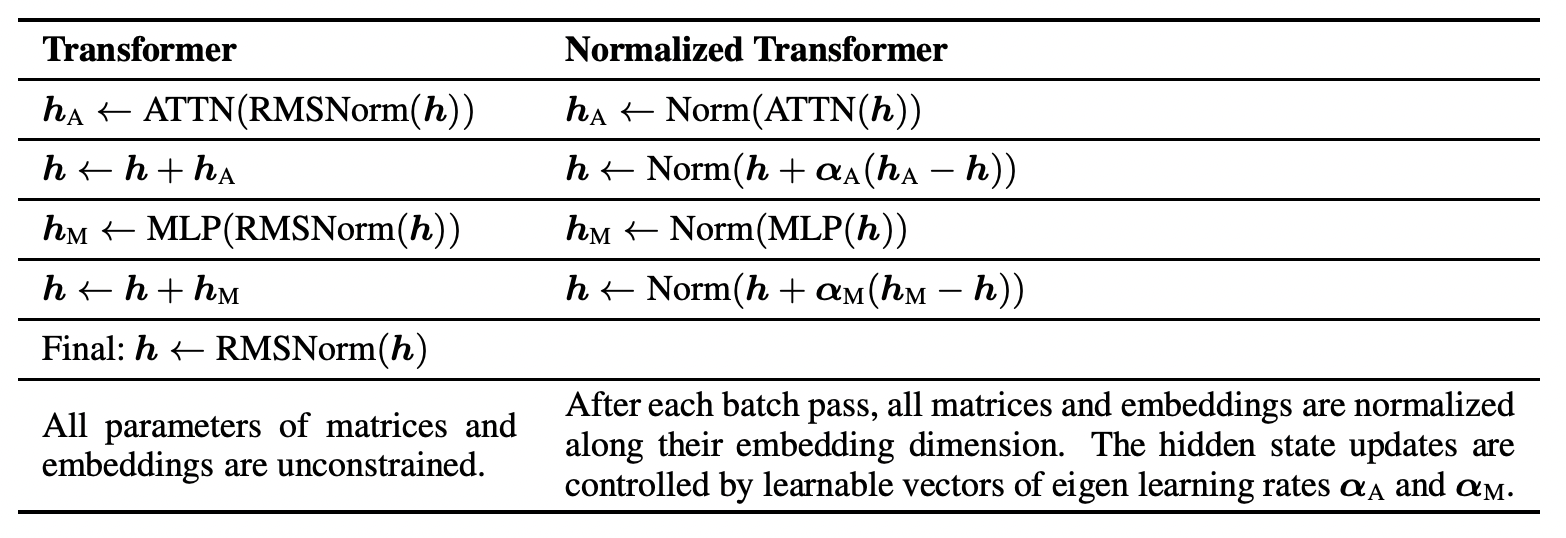

快速实施 nGPT,完全在超球面上学习,来自 NvidiaAI。问题是他们是否隐藏了表达能力的损失,但我会真诚地接受这一点。

这种类型的网络也应该在持续学习和可塑性丧失的背景下进行研究

对视觉变压器的适应就在这里

$ pip install nGPT-pytorch import torch

from nGPT_pytorch import nGPT

model = nGPT (

num_tokens = 256 ,

dim = 512 ,

depth = 4 ,

attn_norm_qk = True

)

x = torch . randint ( 0 , 256 , ( 2 , 2048 ))

loss = model ( x , return_loss = True )

loss . backward ()

logits = model ( x ) # (2, 2048, 256) 恩威克8

$ python train.py @inproceedings { Loshchilov2024nGPTNT ,

title = { nGPT: Normalized Transformer with Representation Learning on the Hypersphere } ,

author = { Ilya Loshchilov and Cheng-Ping Hsieh and Simeng Sun and Boris Ginsburg } ,

year = { 2024 } ,

url = { https://api.semanticscholar.org/CorpusID:273026160 }

} @article { Luo2017CosineNU ,

title = { Cosine Normalization: Using Cosine Similarity Instead of Dot Product in Neural Networks } ,

author = { Chunjie Luo and Jianfeng Zhan and Lei Wang and Qiang Yang } ,

journal = { ArXiv } ,

year = { 2017 } ,

volume = { abs/1702.05870 } ,

url = { https://api.semanticscholar.org/CorpusID:1505432 }

} @inproceedings { Zhou2024ValueRL ,

title = { Value Residual Learning For Alleviating Attention Concentration In Transformers } ,

author = { Zhanchao Zhou and Tianyi Wu and Zhiyun Jiang and Zhenzhong Lan } ,

year = { 2024 } ,

url = { https://api.semanticscholar.org/CorpusID:273532030 }

}