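VQAScore lets researchers automatically evaluate text-to-image/video/3D models with one line of Python code!

[VQAScore Page] [VQAScore Demo] [GenAI-Bench Page] [GenAI-Bench Demo] [CLIP-FlanT5 Model Zoo]

VQAScore: Evaluating Text-to-Visual Generation with Image-to-Text Generation (ECCV 2024) [Paper] [HF]

Zhiqiu Lin, Deepak Pathak, Baiqi Li, Jiayao Li, Xide Xia, Graham Neubig, Pengchuan Zhang, Deva Ramanan

GenAI-Bench: Evaluating and Improving Compositional Text-to-Visual Generation (CVPR 2024, Best Short Paper @ SynData Workshop) [Paper] [HF]

Baiqi Li*, Zhiqiu Lin*, Deepak Pathak, Jiayao Li, Yixin Fei, Kewen Wu, Tiffany Ling, Xide Xia*, Pengchuan Zhang*, Graham Neubig*, Deva Ramanan* (*co-first and co-senior authors)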

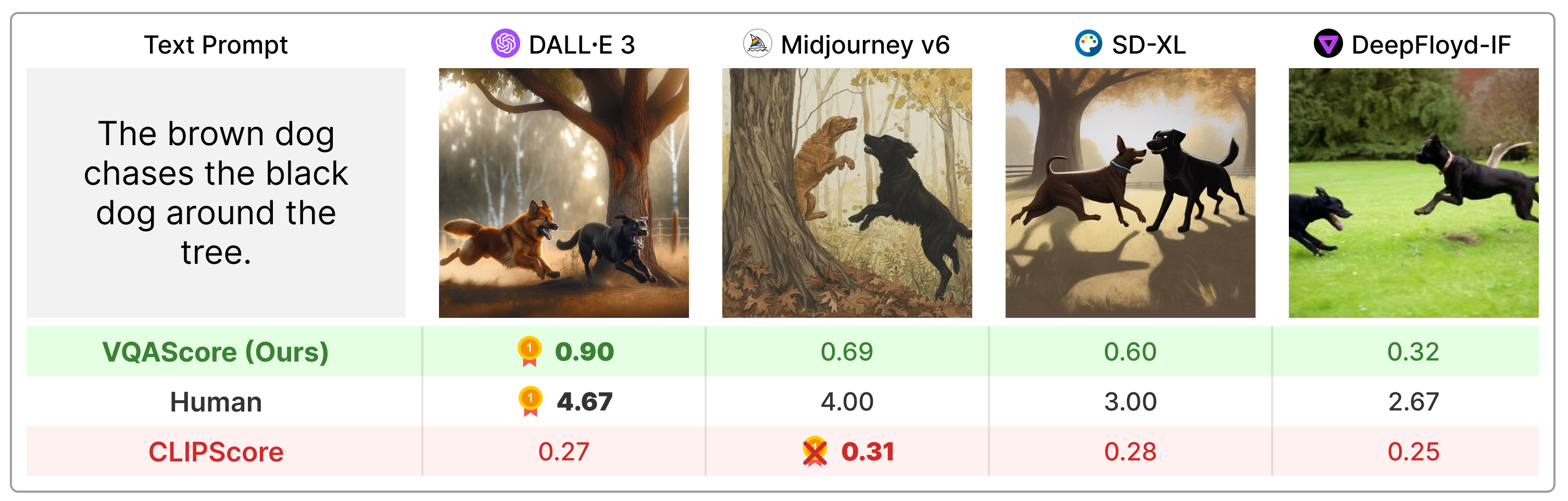

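VQAScore significantly outperforms previous metrics such as CLIPScore and PickScore on compositional text prompts, and it is much simpler than prior art (e.g., ImageReward, HPSv2, TIFA, Davidsonian, VPEval, VIEScore) that relies on human feedback or proprietary models such as ChatGPT and GPT-4Vision.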

Install the package via:

```bash
git clone https://github.com/linzhiqiu/t2v_metrics
cd t2v_metrics

conda create -n t2v python=3.10 -y
conda activate t2v
conda install pip -y

pip install torch torchvision torchaudio
pip install git+https://github.com/openai/CLIP.git
pip install -e .  # local pip install
```

Alternatively, you can install it via `pip install t2v-metrics`.
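After installing, you may want a quick check that the packages are visible in the active `t2v` environment. The following is a minimal sketch that uses only the standard library; the module names `torch`, `torchvision`, `clip` (installed by the OpenAI CLIP repository) and `t2v_metrics` are assumed from the install commands above, and the helper `check_modules` is a hypothetical name. It only checks that each module can be found; it does not verify versions or GPU availability.

```python
import importlib.util
from typing import Dict, Iterable


def check_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to whether Python can locate it (without importing it)."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # Module names assumed from the install commands above.
    status = check_modules(["torch", "torchvision", "clip", "t2v_metrics"])
    for name, found in status.items():
        print(f"{name:12s} {'ok' if found else 'MISSING'}")
```

If `t2v_metrics` shows as missing, make sure the `t2v` conda environment is active in the shell where you run Python.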

Now, the following Python code is all you need to compute the VQAScore for image-text alignment (higher scores indicate greater similarity):

```python
import t2v_metrics

clip_flant5_score = t2v_metrics.VQAScore(model='clip-flant5-xxl')  # our recommended scoring model

### For a single (image, text) pair
image = "images/0.png"  # an image path in string format
text = "someone talks on the phone angrily while another person sits happily"
score = clip_flant5_score(images=[image], texts=[text])

### Alternatively, if you want to calculate the pairwise similarity scores
### between M images and N texts, run the following to return an M x N score tensor.
images = ["images/0.png", "images/1.png"]
texts = ["someone talks on the phone angrily while another person sits happily",
         "someone talks on the phone happily while another person sits angrily"]
scores = clip_flant5_score(images=images, texts=texts)  # scores[i][j] is the score between image i and text j
```

`clip-flant5-xxl` and `llava-v1.5-13b` are the larger VQAScore models; if your GPU memory is limited, consider smaller ones such as `clip-flant5-xl` and `llava-v1.5-7b`. You can change the cache folder that stores all model checkpoints (default: `./hf_cache/`) via `HF_CACHE_DIR`.

For large batches of M images x N texts, you can speed things up with the `batch_forward()` function:

```python
import t2v_metrics

clip_flant5_score = t2v_metrics.VQAScore(model='clip-flant5-xxl')

# The number of images and texts must be the same in every dictionary.
# E.g., the example below shows how to evaluate 4 generated images per text.
dataset = [
    {'images': ["images/0/DALLE3.png", "images/0/Midjourney.jpg", "images/0/SDXL.jpg", "images/0/DeepFloyd.jpg"],
     'texts': ["The brown dog chases the black dog around the tree."]},
    {'images': ["images/1/DALLE3.png", "images/1/Midjourney.jpg", "images/1/SDXL.jpg", "images/1/DeepFloyd.jpg"],
     'texts': ["Two cats sit at the window, the blue one intently watching the rain, the red one curled up asleep."]},
    # ...
]
scores = clip_flant5_score.batch_forward(dataset=dataset, batch_size=16)  # (n_sample, 4, 1) tensor
```

We currently support running VQAScore with CLIP-FlanT5, LLaVA-1.5, and InstructBLIP. For ablation, we also include CLIPScore, BLIPv2Score, PickScore, HPSv2Score, and ImageReward:

```python
import t2v_metrics

llava_score = t2v_metrics.VQAScore(model='llava-v1.5-13b')
instructblip_score = t2v_metrics.VQAScore(model='instructblip-flant5-xxl')
clip_score = t2v_metrics.CLIPScore(model='openai:ViT-L-14-336')
blip_itm_score = t2v_metrics.ITMScore(model='blip2-itm')
pick_score = t2v_metrics.CLIPScore(model='pickscore-v1')
hpsv2_score = t2v_metrics.CLIPScore(model='hpsv2')
image_reward_score = t2v_metrics.ITMScore(model='image-reward-v1')
```

You can check all supported models by running the following commands:

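```python
import t2v_metrics

print("VQAScore models:")
t2v_metrics.list_all_vqascore_models()

print("ITMScore models:")
t2v_metrics.list_all_itmscore_models()

print("CLIPScore models:")
t2v_metrics.list_all_clipscore_models()
```

The question and answer slightly affect the final score, as shown in the Appendix of our paper. We provide a simple default template for each model and, for the sake of reproducibility, do not recommend changing it. However, the question and answer can easily be modified. For example, CLIP-FlanT5 and LLaVA-1.5 use the following template, which can be found in t2v_metrics/models/vqascore_models/clip_t5_model.py: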

```python
# {} will be replaced by the caption
default_question_template = 'Does this figure show "{}"? Please answer yes or no.'
default_answer_template = 'Yes'
```

You can customize the templates by passing the `question_template` and `answer_template` parameters to the `forward()` or `batch_forward()` functions:

```python
# Use a different question for VQAScore
scores = clip_flant5_score(images=images,
                           texts=texts,
                           question_template='Is this figure showing "{}"? Please answer yes or no.',
                           answer_template='Yes')
```

You can also compute P(caption | image) (VisualGPTScore) instead of P(answer | image, question):

```python
scores = clip_flant5_score(images=images,
                           texts=texts,
                           question_template="",  # no question
                           answer_template="{}")  # this computes P(caption | image)
```

Our eval.py lets you easily run 10 image/vision/3D alignment benchmarks (e.g., Winoground/TIFA160/SeeTrue/StanfordT23D/T2VScore):

```bash
python eval.py --model clip-flant5-xxl  # for VQAScore
python eval.py --model openai:ViT-L-14  # for CLIPScore

# You can optionally specify the question/answer template, for example:
python eval.py --model clip-flant5-xxl --question "Is the figure showing '{}'?" --answer "Yes"
```

Our genai_image_eval.py and genai_video_eval.py can reproduce the GenAI-Bench results. In addition, genai_image_ranking.py can reproduce the GenAI-Rank results:

```bash
# GenAI-Bench
python genai_image_eval.py --model clip-flant5-xxl
python genai_video_eval.py --model clip-flant5-xxl

# GenAI-Rank
python genai_image_ranking.py --model clip-flant5-xxl --gen_model DALLE_3
python genai_image_ranking.py --model clip-flant5-xxl --gen_model SDXL_Base
```

We implemented VQAScore with GPT-4o, which achieves new state-of-the-art performance. See t2v_metrics/gpt4_eval.py for an example. Here is how to use it in Python:

```python
import t2v_metrics

openai_key = "YOUR_OPENAI_KEY"  # replace with your OpenAI key
# We find top_logprobs=20 to be sufficient for most (image, text) samples.
# Consider increasing this number if you get errors (the API cost will not increase).
score_func = t2v_metrics.get_score_model(model="gpt-4o", device="cuda", openai_key=openai_key, top_logprobs=20)
```

You can easily implement your own scoring metric. For example, if you have a VQA model that you believe is more effective, you can add it to the t2v_metrics/models/vqascore_models directory. For guidance, refer to our LLaVA-1.5 and InstructBLIP implementations as starting points.
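At its core, a VQAScore-style metric fills the caption into a question template, asks the VQA model for the probability of the answer template (`'Yes'` by default), and repeats this for every (image, text) pair to form an M x N matrix. The sketch below illustrates only that computation under stated assumptions: `CustomVQAScore`, the `answer_logprob` callable, and the `toy_logprob` stand-in model are hypothetical names, not the interface used in t2v_metrics/models/vqascore_models. When contributing a model, follow the repository's existing LLaVA-1.5 and InstructBLIP implementations instead.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical interface (not part of t2v_metrics): returns
# log P(answer | image, question) as computed by your own VQA model.
AnswerLogProb = Callable[[str, str, str], float]


class CustomVQAScore:
    """Sketch of a VQAScore-style metric wrapped around an arbitrary VQA model."""

    def __init__(
        self,
        answer_logprob: AnswerLogProb,
        question_template: str = 'Does this figure show "{}"? Please answer yes or no.',
        answer_template: str = "Yes",
    ) -> None:
        self.answer_logprob = answer_logprob
        self.question_template = question_template
        self.answer_template = answer_template

    def score(self, image: str, text: str) -> float:
        # "{}" in either template is replaced by the caption.
        question = self.question_template.format(text)
        answer = self.answer_template.format(text)
        # Turn the model's log-probability into a probability in [0, 1].
        return math.exp(self.answer_logprob(image, question, answer))

    def __call__(self, images: Sequence[str], texts: Sequence[str]) -> List[List[float]]:
        # M x N matrix: result[i][j] scores image i against text j.
        return [[self.score(image, text) for text in texts] for image in images]


def toy_logprob(image: str, question: str, answer: str) -> float:
    """Toy stand-in for a real VQA model (hypothetical, for illustration only).

    The file name encodes the image content; the "model" assigns probability
    0.9 to the answer if that word appears in the question, else 0.1.
    The answer string itself is ignored here.
    """
    content = image.rsplit(".", 1)[0]
    return math.log(0.9 if content in question else 0.1)


if __name__ == "__main__":
    metric = CustomVQAScore(toy_logprob)
    scores = metric(["cat.png", "dog.png"], ["a cat on a sofa", "a dog on a sofa"])
    print(scores)  # approximately [[0.9, 0.1], [0.1, 0.9]]
```

Passing `question_template=""` and `answer_template="{}"` to this sketch would score P(caption | image) in the spirit of VisualGPTScore, provided your `answer_logprob` scores full answer strings rather than a single "Yes" token.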

To generate text (captioning or VQA tasks) with CLIP-FlanT5, use the following code:

```python
import t2v_metrics

clip_flant5_score = t2v_metrics.VQAScore(model='clip-flant5-xxl')

images = ["images/0.png", "images/0.png"]  # a list of images
prompts = ["Please describe this image: ",
           "Does the image show 'someone talks on the phone angrily while another person sits happily'?"]  # corresponding prompts
clip_flant5_score.model.generate(images=images, prompts=prompts)
```

If you find this repository useful for your research, please cite the following:

```bibtex
@article{lin2024evaluating,
  title={Evaluating Text-to-Visual Generation with Image-to-Text Generation},
  author={Lin, Zhiqiu and Pathak, Deepak and Li, Baiqi and Li, Jiayao and Xia, Xide and Neubig, Graham and Zhang, Pengchuan and Ramanan, Deva},
  journal={arXiv preprint arXiv:2404.01291},
  year={2024}
}
```

This repository is inspired by the Perceptual Metric (LPIPS) repository by Richard Zhang for automatic evaluation of image quality.