官方 chatgpt 部落格文章

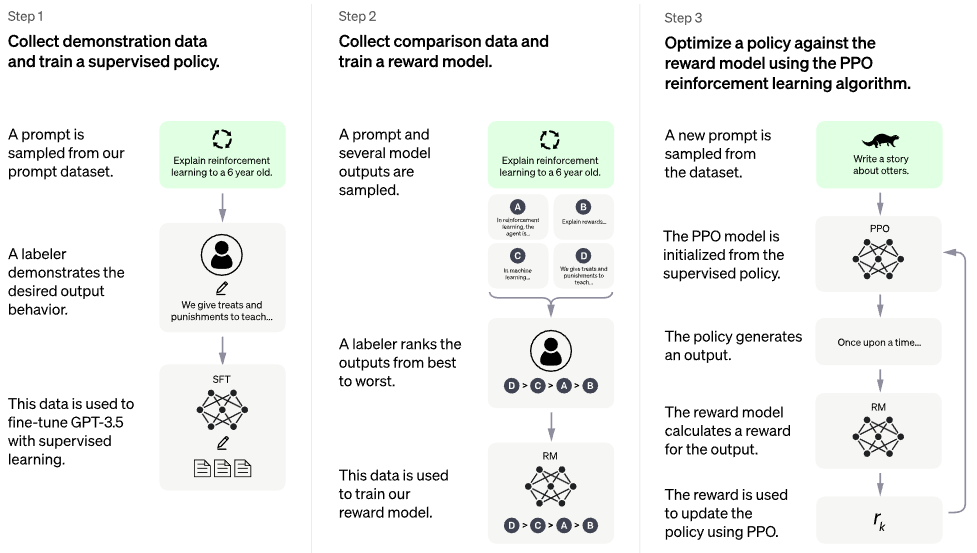

在 PaLM 架構之上實現 RLHF(具有人類回饋的強化學習)。也許我也會加入檢索功能,就像復古一樣

如果您有興趣公開複製 ChatGPT 等內容,請考慮加入 Laion

潛在的後繼者:直接偏好最佳化 - 此儲存庫中的所有程式碼都變成〜二進位交叉熵損失,< 5 loc。獎勵模型和 PPO 就這麼多了

沒有經過訓練的模型。這只是船和總體地圖。我們仍然需要數百萬美元的計算+數據才能航行到高維度參數空間中的正確點。即便如此,你也需要專業的水手(例如因穩定擴散而聞名的羅賓·羅姆巴赫)來實際引導船隻度過動蕩的時期,到達這一點。

在 ChatGPT 發布之前的幾個月裡,CarperAI 一直致力於大型語言模型的 RLHF 框架的發展。

Yannic Kilcher 也在致力於開源實現

與 Letitia 一起喝咖啡 | AI代碼商場 |代碼商場第 2 部分

Stability.ai 慷慨贊助前沿人工智慧研究

? Hugging Face 和 CarperAI 撰寫了部落格文章《說明人類回饋的強化學習》(RLHF),前者也提供了他們的加速庫

@kisseternity 和 @taynoel84 用於程式碼審查和查找錯誤

Enrico 從 Pytorch 2.0 整合 Flash Attention

$ pip install palm-rlhf-pytorch首先訓練PaLM ,就像其他自回歸變壓器一樣

import torch

from palm_rlhf_pytorch import PaLM

palm = PaLM (

num_tokens = 20000 ,

dim = 512 ,

depth = 12 ,

flash_attn = True # https://arxiv.org/abs/2205.14135

). cuda ()

seq = torch . randint ( 0 , 20000 , ( 1 , 2048 )). cuda ()

loss = palm ( seq , return_loss = True )

loss . backward ()

# after much training, you can now generate sequences

generated = palm . generate ( 2048 ) # (1, 2048)然後利用精心策劃的人類回饋來訓練您的獎勵模型。在最初的論文中,他們無法在不過度擬合的情況下從預訓練的 Transformer 中獲得獎勵模型進行微調,但我無論如何都給出了使用LoRA進行微調的選項,因為它仍然是開放研究。

import torch

from palm_rlhf_pytorch import PaLM , RewardModel

palm = PaLM (

num_tokens = 20000 ,

dim = 512 ,

depth = 12 ,

causal = False

)

reward_model = RewardModel (

palm ,

num_binned_output = 5 # say rating from 1 to 5

). cuda ()

# mock data

seq = torch . randint ( 0 , 20000 , ( 1 , 1024 )). cuda ()

prompt_mask = torch . zeros ( 1 , 1024 ). bool (). cuda () # which part of the sequence is prompt, which part is response

labels = torch . randint ( 0 , 5 , ( 1 ,)). cuda ()

# train

loss = reward_model ( seq , prompt_mask = prompt_mask , labels = labels )

loss . backward ()

# after much training

reward = reward_model ( seq , prompt_mask = prompt_mask )然後你將把你的變壓器和獎勵模型傳遞給RLHFTrainer

import torch

from palm_rlhf_pytorch import PaLM , RewardModel , RLHFTrainer

# load your pretrained palm

palm = PaLM (

num_tokens = 20000 ,

dim = 512 ,

depth = 12

). cuda ()

palm . load ( './path/to/pretrained/palm.pt' )

# load your pretrained reward model

reward_model = RewardModel (

palm ,

num_binned_output = 5

). cuda ()

reward_model . load ( './path/to/pretrained/reward_model.pt' )

# ready your list of prompts for reinforcement learning

prompts = torch . randint ( 0 , 256 , ( 50000 , 512 )). cuda () # 50k prompts

# pass it all to the trainer and train

trainer = RLHFTrainer (

palm = palm ,

reward_model = reward_model ,

prompt_token_ids = prompts

)

trainer . train ( num_episodes = 50000 )

# then, if it succeeded...

# generate say 10 samples and use the reward model to return the best one

answer = trainer . generate ( 2048 , prompt = prompts [ 0 ], num_samples = 10 ) # (<= 2048,) 克隆基礎變壓器與獨立的勞拉評論家

也允許基於非 LoRA 的微調

重做規範化以能夠擁有屏蔽版本,不確定是否有人會使用每個代幣獎勵/值,但實施的良好實踐

配備最好的關注

加入 Hugging Face 加速並測試 wandb 檢測

假設 RL 領域仍在取得進展,請搜尋文獻以找出 PPO 的最新 SOTA。

使用預先訓練的情緒網絡作為獎勵模型來測試系統

將 PPO 中的記憶體寫入記憶體映射的 numpy 文件

使用可變長度的提示進行採樣,即使不需要,因為瓶頸是人類回饋

允許僅在演員或評論家中微調倒數第二 N 層,假設經過預訓練

鑑於 Letitia 的視頻,結合了 Sparrow 的一些學習點

使用 django + htmx 的簡單 Web 介面,用於收集人類回饋

考慮 RLAIF

@article { Stiennon2020LearningTS ,

title = { Learning to summarize from human feedback } ,

author = { Nisan Stiennon and Long Ouyang and Jeff Wu and Daniel M. Ziegler and Ryan J. Lowe and Chelsea Voss and Alec Radford and Dario Amodei and Paul Christiano } ,

journal = { ArXiv } ,

year = { 2020 } ,

volume = { abs/2009.01325 }

} @inproceedings { Chowdhery2022PaLMSL ,

title = { PaLM: Scaling Language Modeling with Pathways } ,

author = {Aakanksha Chowdhery and Sharan Narang and Jacob Devlin and Maarten Bosma and Gaurav Mishra and Adam Roberts and Paul Barham and Hyung Won Chung and Charles Sutton and Sebastian Gehrmann and Parker Schuh and Kensen Shi and Sasha Tsvyashchenko and Joshua Maynez and Abhishek Rao and Parker Barnes and Yi Tay and Noam M. Shazeer and Vinodkumar Prabhakaran and Emily Reif and Nan Du and Benton C. Hutchinson and Reiner Pope and James Bradbury and Jacob Austin and Michael Isard and Guy Gur-Ari and Pengcheng Yin and Toju Duke and Anselm Levskaya and Sanjay Ghemawat and Sunipa Dev and Henryk Michalewski and Xavier Garc{'i}a and Vedant Misra and Kevin Robinson and Liam Fedus and Denny Zhou and Daphne Ippolito and David Luan and Hyeontaek Lim and Barret Zoph and Alexander Spiridonov and Ryan Sepassi and David Dohan and Shivani Agrawal and Mark Omernick and Andrew M. Dai and Thanumalayan Sankaranarayana Pillai and Marie Pellat and Aitor Lewkowycz and Erica Oliveira Moreira and Rewon Child and Oleksandr Polozov and Katherine Lee and Zongwei Zhou and Xuezhi Wang and Brennan Saeta and Mark Diaz and Orhan Firat and Michele Catasta and Jason Wei and Kathleen S. Meier-Hellstern and Douglas Eck and Jeff Dean and Slav Petrov and Noah Fiedel},

year = { 2022 }

} @article { Hu2021LoRALA ,

title = { LoRA: Low-Rank Adaptation of Large Language Models } ,

author = { Edward J. Hu and Yelong Shen and Phillip Wallis and Zeyuan Allen-Zhu and Yuanzhi Li and Shean Wang and Weizhu Chen } ,

journal = { ArXiv } ,

year = { 2021 } ,

volume = { abs/2106.09685 }

} @inproceedings { Sun2022ALT ,

title = { A Length-Extrapolatable Transformer } ,

author = { Yutao Sun and Li Dong and Barun Patra and Shuming Ma and Shaohan Huang and Alon Benhaim and Vishrav Chaudhary and Xia Song and Furu Wei } ,

year = { 2022 }

} @misc { gilmer2023intriguing

title = { Intriguing Properties of Transformer Training Instabilities } ,

author = { Justin Gilmer, Andrea Schioppa, and Jeremy Cohen } ,

year = { 2023 } ,

status = { to be published - one attention stabilization technique is circulating within Google Brain, being used by multiple teams }

} @inproceedings { dao2022flashattention ,

title = { Flash{A}ttention: Fast and Memory-Efficient Exact Attention with {IO}-Awareness } ,

author = { Dao, Tri and Fu, Daniel Y. and Ermon, Stefano and Rudra, Atri and R{'e}, Christopher } ,

booktitle = { Advances in Neural Information Processing Systems } ,

year = { 2022 }

} @misc { Rubin2024 ,

author = { Ohad Rubin } ,

url = { https://medium.com/ @ ohadrubin/exploring-weight-decay-in-layer-normalization-challenges-and-a-reparameterization-solution-ad4d12c24950 }

} @inproceedings { Yuan2024FreePR ,

title = { Free Process Rewards without Process Labels } ,

author = { Lifan Yuan and Wendi Li and Huayu Chen and Ganqu Cui and Ning Ding and Kaiyan Zhang and Bowen Zhou and Zhiyuan Liu and Hao Peng } ,

year = { 2024 } ,

url = { https://api.semanticscholar.org/CorpusID:274445748 }

}