point transformer pytorch

0.1.5

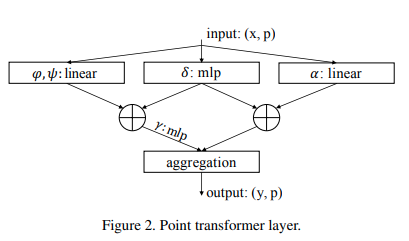

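Implementation of the Point Transformer self-attention layer, in Pytorch. The simple circuit above seemed to have allowed their group to outperform all previous methods in point cloud classification and segmentation.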
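For reference, this is the vector self-attention that the layer in the figure computes, as given in the paper. $\mathcal{X}(i)$ is the set of points that point $i$ attends to. In the paper, this set is the $k$ nearest neighbors of $\mathbf{p}_i$.

$$
\mathbf{y}_i = \sum_{\mathbf{x}_j \in \mathcal{X}(i)} \rho\big(\gamma(\varphi(\mathbf{x}_i) - \psi(\mathbf{x}_j) + \delta)\big) \odot \big(\alpha(\mathbf{x}_j) + \delta\big), \qquad \delta = \theta(\mathbf{p}_i - \mathbf{p}_j)
$$

Here $\varphi$, $\psi$ and $\alpha$ are pointwise feature projections. $\gamma$ is an MLP that produces one attention weight per feature channel, and $\rho$ is a softmax over the neighbors $j$. $\theta$ is an MLP that encodes the relative position. Judging only from the argument names, `pos_mlp_hidden_dim` likely sets the hidden width of $\theta$ and `attn_mlp_hidden_mult` the hidden-width multiplier of $\gamma$. This mapping is an assumption and is not stated here.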

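```bash
$ pip install point-transformer-pytorch
```

```python
import torch
from point_transformer_pytorch import PointTransformerLayer

attn = PointTransformerLayer(
    dim = 128,
    pos_mlp_hidden_dim = 64,
    attn_mlp_hidden_mult = 4
)

feats = torch.randn(1, 16, 128)    # (batch, num points, dim)
pos = torch.randn(1, 16, 3)        # (batch, num points, xyz)
mask = torch.ones(1, 16).bool()    # (batch, num points)

attn(feats, pos, mask = mask) # (1, 16, 128)
```

This type of vector attention is much more expensive than traditional (scalar dot-product) attention. It computes an attention weight for every feature channel of every pair of points, rather than a single scalar per pair. In the paper, they use k-nearest neighbors on the points to exclude attention to faraway points. You can do the same with a single extra setting.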
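The sketch below makes the cost difference concrete. It is a minimal, self-contained version of vector attention in plain PyTorch with an optional k-nearest-neighbor restriction, and it follows the formula from the paper. It is a hypothetical re-implementation for illustration only. The class `VectorAttentionSketch` and its arguments are our own names, not the code inside `point_transformer_pytorch`, and the sketch omits masking.

```python
import torch
from torch import nn


class VectorAttentionSketch(nn.Module):
    """Hypothetical, simplified vector self-attention (not the library's code).

    y_i = sum_j softmax_j(gamma(q_i - k_j + delta_ij)) * (v_j + delta_ij),
    delta_ij = theta(p_i - p_j). If num_neighbors is set, j ranges only over
    the num_neighbors nearest points of i (the point itself included).
    """

    def __init__(self, dim, pos_hidden=64, attn_mult=4, num_neighbors=None):
        super().__init__()
        self.to_qkv = nn.Linear(dim, dim * 3, bias=False)  # phi, psi, alpha
        # theta: MLP on relative coordinates
        self.pos_mlp = nn.Sequential(
            nn.Linear(3, pos_hidden), nn.ReLU(), nn.Linear(pos_hidden, dim)
        )
        # gamma: MLP producing one attention weight per feature channel
        self.attn_mlp = nn.Sequential(
            nn.Linear(dim, dim * attn_mult), nn.ReLU(), nn.Linear(dim * attn_mult, dim)
        )
        self.num_neighbors = num_neighbors

    def forward(self, x, pos):
        # x: (b, n, d) features, pos: (b, n, 3) coordinates
        b = x.shape[0]
        q, k, v = self.to_qkv(x).chunk(3, dim=-1)

        if self.num_neighbors is None:
            # dense: every point attends to every point, giving (b, n, n, d) tensors
            k_j, v_j, pos_j = k[:, None], v[:, None], pos[:, None]
        else:
            # keep only the nearest points, giving (b, n, num_neighbors, d) tensors
            dist = torch.cdist(pos, pos)  # (b, n, n) pairwise distances
            idx = dist.topk(self.num_neighbors, dim=-1, largest=False).indices
            batch = torch.arange(b, device=x.device)[:, None, None]
            k_j, v_j, pos_j = k[batch, idx], v[batch, idx], pos[batch, idx]

        delta = self.pos_mlp(pos[:, :, None] - pos_j)      # theta(p_i - p_j)
        sim = self.attn_mlp(q[:, :, None] - k_j + delta)   # gamma(...)
        attn = sim.softmax(dim=-2)                          # rho: normalize over j
        return (attn * (v_j + delta)).sum(dim=-2)           # (b, n, d)


torch.manual_seed(0)

# dense: 16 points, as in the first example
dense = VectorAttentionSketch(dim=128)
print(dense(torch.randn(1, 16, 128), torch.randn(1, 16, 3)).shape)  # torch.Size([1, 16, 128])

# k-nearest neighbors: 2048 points, each attending to its 16 nearest
knn = VectorAttentionSketch(dim=128, num_neighbors=16)
out = knn(torch.randn(1, 2048, 128), torch.randn(1, 2048, 3))
print(out.shape)  # torch.Size([1, 2048, 128])
```

The dense branch materializes tensors of shape (b, n, n, d). For n = 2048 and d = 128, each such float32 tensor holds 2048 × 2048 × 128 values, about 2 GiB, and the hidden layer of γ is `attn_mult` times larger. With 16 neighbors, the same tensors shrink to (b, n, 16, d), about 16 MiB each. With the library itself, this restriction is the `num_neighbors` argument.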

```python
import torch
from point_transformer_pytorch import PointTransformerLayer

attn = PointTransformerLayer(
    dim = 128,
    pos_mlp_hidden_dim = 64,
    attn_mlp_hidden_mult = 4,
    num_neighbors = 16          # only the 16 nearest neighbors will be attended to for each point
)

feats = torch.randn(1, 2048, 128)
pos = torch.randn(1, 2048, 3)
mask = torch.ones(1, 2048).bool()

attn(feats, pos, mask = mask) # (1, 2048, 128)
```

```bibtex
@misc{zhao2020point,
    title         = {Point Transformer},
    author        = {Hengshuang Zhao and Li Jiang and Jiaya Jia and Philip Torr and Vladlen Koltun},
    year          = {2020},
    eprint        = {2012.09164},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}
```