Implementation of AudioLM, a language modeling approach to audio generation from Google Research, in PyTorch

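It also extends the work for conditioning with classifier-free guidance using T5. This allows one to do text-to-audio or TTS, which is not offered in the paper. Yes, this means VALL-E can be trained from this repository. It is essentially the same.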
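As a rough illustration of what classifier-free guidance does at sampling time, the sketch below mixes conditional and unconditional logits with a guidance scale. The function name `guided_logits` and the parameter `cond_scale` are illustrative assumptions, not necessarily this repository's API; the mixing formula is the standard one from the classifier-free guidance literature.

```python
def guided_logits(cond_logits, uncond_logits, cond_scale=3.0):
    """Standard classifier-free guidance mix: uncond + scale * (cond - uncond)."""
    return [u + cond_scale * (c - u) for c, u in zip(cond_logits, uncond_logits)]

# toy logits over a 3-token vocabulary (made-up numbers)
cond = [2.0, 0.5, -1.0]     # logits computed with the text condition
uncond = [1.0, 0.5, 0.0]    # logits computed with the condition dropped

# a scale of 1 recovers the conditional logits; larger scales push further
# in the direction the condition suggests
print(guided_logits(cond, uncond, cond_scale=1.0))
print(guided_logits(cond, uncond, cond_scale=3.0))
```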

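Please join if you are interested in replicating this work in the open

This repository now also contains an MIT-licensed version of SoundStream. It is also compatible with EnCodec, which is also MIT-licensed at the time of writing.

Update: AudioLM was essentially used to 'solve' music generation in the new MusicLM

In the future, this movie clip would no longer make any sense. You would just prompt an AI instead.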

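Stability.ai for the generous sponsorship to work on and open source cutting edge artificial intelligence research

Hugging Face for their amazing accelerate and transformers libraries

MetaAI for Fairseq and the liberal license

@eonglints and Joseph for offering their professional advice and expertise as well as pull requests!

@djqualia, @yigityu, @inspirit, and @BlackFox1197 for helping with the debugging of soundstream

Allen and LWprogramming for reviewing the code and submitting bug fixes!

Ilya for finding an issue with multi-scale discriminator downsampling and for soundstream trainer improvements

Andrey for identifying a missing loss in soundstream and guiding me through the proper mel spectrogram hyperparameters

Alejandro and Ilya for sharing their results with training soundstream, and for working through a few issues with the local attention positional embeddings

LWprogramming for adding Encodec compatibility!

LWprogramming for finding an issue with handling of the EOS token when sampling from the FineTransformer!

@YoungloLee for identifying a big bug in the 1d causal convolution for soundstream, where the padding did not account for strides!

Hayden for pointing out some discrepancies in the multi-scale discriminator for Soundstream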

$ pip install audiolm-pytorch

There are two options for the neural codec. If you want to use the pretrained 24kHz Encodec, just create an Encodec object as follows:

from audiolm_pytorch import EncodecWrapper

encodec = EncodecWrapper()

# Now you can use the encodec variable in the same way you'd use the soundstream variables below.

Otherwise, to stay more true to the original paper, you can use SoundStream. First, SoundStream needs to be trained on a large corpus of audio data

import torch
from audiolm_pytorch import SoundStream, SoundStreamTrainer

soundstream = SoundStream(
    codebook_size = 4096,
    rq_num_quantizers = 8,
    rq_groups = 2,                        # this paper proposes using multi-headed residual vector quantization - https://arxiv.org/abs/2305.02765
    use_lookup_free_quantizer = True,     # whether to use residual lookup free quantization - there are now reports of successful usage of this unpublished technique
    use_finite_scalar_quantizer = False,  # whether to use residual finite scalar quantization
    attn_window_size = 128,               # local attention receptive field at bottleneck
    attn_depth = 2                        # 2 local attention transformer blocks - the soundstream folks were not experts with attention, so i took the liberty to add some. encodec went with lstms, but attention should be better
)

trainer = SoundStreamTrainer(
    soundstream,
    folder = '/path/to/audio/files',
    batch_size = 4,
    grad_accum_every = 8,         # effective batch size of 32
    data_max_length_seconds = 2,  # train on 2 second audio
    num_train_steps = 1_000_000
).cuda()

trainer.train()

# after a lot of training, you can test the autoencoding as so

soundstream.eval()  # your soundstream must be in eval mode, to avoid having the residual dropout of the residual VQ necessary for training

audio = torch.randn(10080).cuda()
recons = soundstream(audio, return_recons_only = True)  # (1, 10080) - 1 channel

Then, your trained SoundStream can be used as a generic tokenizer for audio
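Conceptually, a residual-quantized codec turns each frame into one codebook index per quantizer. The toy sketch below illustrates that idea on a single scalar. It is not SoundStream's implementation (real codebooks hold learned vectors, not hand-picked numbers), and `nearest_index`, `rvq_encode` and `rvq_decode` are hypothetical names chosen for illustration.

```python
def nearest_index(value, codebook):
    """Index of the codebook entry closest to value."""
    return min(range(len(codebook)), key=lambda i: abs(codebook[i] - value))

def rvq_encode(value, codebooks):
    """Quantize with each codebook in turn, passing the residual to the next."""
    codes, residual = [], value
    for codebook in codebooks:
        idx = nearest_index(residual, codebook)
        codes.append(idx)
        residual -= codebook[idx]
    return codes

def rvq_decode(codes, codebooks):
    """Reconstruct by summing the selected entry of every codebook."""
    return sum(codebook[idx] for idx, codebook in zip(codes, codebooks))

# three toy scalar codebooks, from coarse to fine (made-up values)
codebooks = [
    [-1.0, 0.0, 1.0],
    [-0.5, 0.0, 0.5],
    [-0.25, 0.0, 0.25],
]

codes = rvq_encode(0.8, codebooks)    # one index per quantizer level
approx = rvq_decode(codes, codebooks)
print(codes, approx)
```

With the library itself, the equivalent round trip looks like this: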

audio = torch.randn(1, 512 * 320)

codes = soundstream.tokenize(audio)

# you can now train anything with the codebook ids

recon_audio_from_codes = soundstream.decode_from_codebook_indices(codes)

# sanity check

assert torch.allclose(
    recon_audio_from_codes,
    soundstream(audio, return_recons_only = True)
)

You can also use soundstreams that are specific to AudioLM and MusicLM by importing AudioLMSoundStream and MusicLMSoundStream respectively

from audiolm_pytorch import AudioLMSoundStream, MusicLMSoundStream

soundstream = AudioLMSoundStream(...)  # say you want the hyperparameters as in Audio LM paper

# rest is the same as above

As of version 0.17.0, you can now call a class method on SoundStream to load from checkpoint files, without having to remember your configuration.

from audiolm_pytorch import SoundStream

soundstream = SoundStream.init_and_load_from('./path/to/checkpoint.pt')

To use Weights & Biases tracking, first set use_wandb_tracking = True on the SoundStreamTrainer, then do the following

trainer = SoundStreamTrainer (

soundstream ,

...,

use_wandb_tracking = True

)

# wrap .train() with contextmanager, specifying project and run name

with trainer . wandb_tracker ( project = 'soundstream' , run = 'baseline' ):

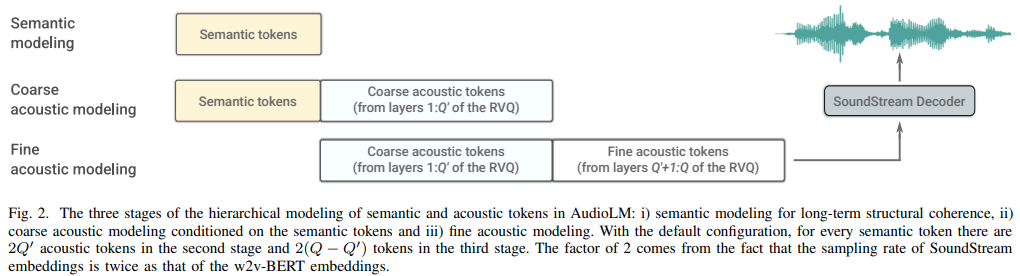

trainer . train ()然後需要訓練三個獨立的變壓器( SemanticTransformer 、 CoarseTransformer 、 FineTransformer )
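As a rough mental model of how the stages divide the work: the semantic stage models HuBERT-derived tokens, the coarse stage predicts the first few codec quantizer levels given the semantic tokens, and the fine stage predicts the remaining levels given the coarse ones. The sketch below splits the codec's quantizer levels accordingly. It assumes the coarse and fine counts sum to the codec's total, which matches the example values in this README (3 + 5 = 8); the helper `split_quantizers` is hypothetical and not part of the library.

```python
def split_quantizers(num_quantizers, num_coarse):
    """Return (coarse_levels, fine_levels) as lists of quantizer indices."""
    if not 0 < num_coarse < num_quantizers:
        raise ValueError("num_coarse must be between 1 and num_quantizers - 1")
    levels = list(range(num_quantizers))
    return levels[:num_coarse], levels[num_coarse:]

# values taken from the examples in this README
coarse_levels, fine_levels = split_quantizers(num_quantizers=8, num_coarse=3)
print(coarse_levels)  # levels handled by the coarse stage
print(fine_levels)    # levels handled by the fine stage
```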

ex. SemanticTransformer

import torch
from audiolm_pytorch import HubertWithKmeans, SemanticTransformer, SemanticTransformerTrainer

# hubert checkpoints can be downloaded at
# https://github.com/facebookresearch/fairseq/tree/main/examples/hubert

wav2vec = HubertWithKmeans(
    checkpoint_path = './hubert/hubert_base_ls960.pt',
    kmeans_path = './hubert/hubert_base_ls960_L9_km500.bin'
)

semantic_transformer = SemanticTransformer(
    num_semantic_tokens = wav2vec.codebook_size,
    dim = 1024,
    depth = 6,
    flash_attn = True
).cuda()

trainer = SemanticTransformerTrainer(
    transformer = semantic_transformer,
    wav2vec = wav2vec,
    folder = '/path/to/audio/files',
    batch_size = 1,
    data_max_length = 320 * 32,
    num_train_steps = 1
)

trainer.train()

ex. CoarseTransformer

import torch
from audiolm_pytorch import HubertWithKmeans, SoundStream, CoarseTransformer, CoarseTransformerTrainer

wav2vec = HubertWithKmeans(
    checkpoint_path = './hubert/hubert_base_ls960.pt',
    kmeans_path = './hubert/hubert_base_ls960_L9_km500.bin'
)

soundstream = SoundStream.init_and_load_from('/path/to/trained/soundstream.pt')

coarse_transformer = CoarseTransformer(
    num_semantic_tokens = wav2vec.codebook_size,
    codebook_size = 1024,
    num_coarse_quantizers = 3,
    dim = 512,
    depth = 6,
    flash_attn = True
)

trainer = CoarseTransformerTrainer(
    transformer = coarse_transformer,
    codec = soundstream,
    wav2vec = wav2vec,
    folder = '/path/to/audio/files',
    batch_size = 1,
    data_max_length = 320 * 32,
    num_train_steps = 1_000_000
)

trainer.train()

ex. FineTransformer

import torch
from audiolm_pytorch import SoundStream, FineTransformer, FineTransformerTrainer

soundstream = SoundStream.init_and_load_from('/path/to/trained/soundstream.pt')

fine_transformer = FineTransformer(
    num_coarse_quantizers = 3,
    num_fine_quantizers = 5,
    codebook_size = 1024,
    dim = 512,
    depth = 6,
    flash_attn = True
)

trainer = FineTransformerTrainer(
    transformer = fine_transformer,
    codec = soundstream,
    folder = '/path/to/audio/files',
    batch_size = 1,
    data_max_length = 320 * 32,
    num_train_steps = 1_000_000
)

trainer.train()

All together now

from audiolm_pytorch import AudioLM

audiolm = AudioLM(
    wav2vec = wav2vec,
    codec = soundstream,
    semantic_transformer = semantic_transformer,
    coarse_transformer = coarse_transformer,
    fine_transformer = fine_transformer
)

generated_wav = audiolm(batch_size = 1)

# or with priming

generated_wav_with_prime = audiolm(prime_wave = torch.randn(1, 320 * 8))

# or with text condition, if given

generated_wav_with_text_condition = audiolm(text = ['chirping of birds and the distant echos of bells'])

Update: Looks like this will work, given 'VALL-E'

ex. Semantic Transformer

import torch
from audiolm_pytorch import HubertWithKmeans, SemanticTransformer, SemanticTransformerTrainer

wav2vec = HubertWithKmeans(
    checkpoint_path = './hubert/hubert_base_ls960.pt',
    kmeans_path = './hubert/hubert_base_ls960_L9_km500.bin'
)

semantic_transformer = SemanticTransformer(
    num_semantic_tokens = 500,
    dim = 1024,
    depth = 6,
    has_condition = True,             # this will have to be set to True
    cond_as_self_attn_prefix = True   # whether to condition as prefix to self attention, instead of cross attention, as was done in 'VALL-E' paper
).cuda()

# mock text audio dataset (as an example)

# you will have to extend your own from `Dataset`, and return an audio tensor as well as a string (the audio description) in any order (the framework will autodetect and route it into the transformer)

from torch.utils.data import Dataset

class MockTextAudioDataset(Dataset):
    def __init__(self, length = 100, audio_length = 320 * 32):
        super().__init__()
        self.audio_length = audio_length
        self.len = length

    def __len__(self):
        return self.len

    def __getitem__(self, idx):
        mock_audio = torch.randn(self.audio_length)
        mock_caption = 'audio caption'
        return mock_caption, mock_audio

dataset = MockTextAudioDataset()

# instantiate semantic transformer trainer and train

trainer = SemanticTransformerTrainer(
    transformer = semantic_transformer,
    wav2vec = wav2vec,
    dataset = dataset,
    batch_size = 4,
    grad_accum_every = 8,
    data_max_length = 320 * 32,
    num_train_steps = 1_000_000
)

trainer.train()

# after much training above

sample = trainer.generate(text = ['sound of rain drops on the rooftops'], batch_size = 1, max_length = 2)  # (1, < 128) - may terminate early if it detects [eos]

Because all the trainer classes use the Hugging Face Accelerator, you can easily do multi-GPU training with the accelerate command, as follows

At the project root

$ accelerate config

Then, in the same directory

$ accelerate launch train.py

complete CoarseTransformer

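use fairseq vq-wav2vec for embeddings

add conditioning

add classifier free guidance

add unique consecutive

incorporate ability to use hubert intermediate features as semantic tokens, recommended by eonglints

accommodate variable-length audio, bring in eos token

make sure unique consecutive works with the coarse transformer

pretty print all discriminator losses to the log

when generating semantic tokens, handle that the last logits may not necessarily be the last in the sequence, given unique consecutive processing

complete sampling code for both the coarse and fine transformers, which will be tricky

make sure full inference with or without prompting works on the AudioLM class

complete full training code for soundstream, taking care of discriminator training

add efficient gradient penalty for the soundstream discriminators

wire up sample hz from sound dataset -> transformers, and do proper resampling during training - think about whether to allow the dataset to have sound files of varying sample hz or enforce the same sample hz

full transformer training code for all three transformers

refactor so the semantic transformer has a wrapper that handles unique consecutives as well as wav to hubert or vq-wav2vec

simply do not self-attend to the eos token on the prompting side (semantic for coarse transformer, coarse for fine transformer)

add structured dropout from forgetful causal masking, far better than traditional dropouts

figure out how to suppress logging in fairseq

assert that all three transformers passed into audiolm are compatible

allow for specialized relative positional embeddings in the fine transformer based on absolute matching positions of quantizers between coarse and fine

allow for grouped residual vq in soundstream (use GroupedResidualVQ from the vector-quantize-pytorch lib), from hifi-codec

add flash attention with NoPE

accept prime wave in AudioLM as a path to an audio file, and auto resample for semantic vs acoustic

add key / value caching to all transformers, speeding up inference

design a hierarchical coarse and fine transformer

investigate speculative decoding, first test in x-transformers, then port over if applicable

redo the positional embeddings in the presence of groups in residual vq

test with speech synthesis for starters

cli tool, something like audiolm generate <wav.file | text>, that saves the generated wav file to a local directory

return a list of waves in the case of variable-length audio

just take care of the edge case in coarse transformer text-conditioned training, where the raw wave is resampled at different frequencies. autodetermine how to route based on length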
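Several items above mention "unique consecutive" handling and eos tokens. A minimal pure-Python sketch of both ideas follows, assuming "unique consecutive" means collapsing runs of repeated semantic token ids (as `torch.unique_consecutive` does for tensors) and that a generated sequence is cut at the first eos id. The helper names and the eos id of 500 are illustrative assumptions, not taken from the library.

```python
from itertools import groupby

def unique_consecutive(tokens):
    """Collapse each run of identical adjacent tokens into a single token."""
    return [token for token, _ in groupby(tokens)]

def truncate_at_eos(tokens, eos_id):
    """Keep tokens up to, but not including, the first eos_id (if present)."""
    if eos_id in tokens:
        return list(tokens[:tokens.index(eos_id)])
    return list(tokens)

EOS_ID = 500  # hypothetical id reserved for end-of-sequence

semantic_ids = [7, 7, 7, 3, 3, 9, 7, 7]
collapsed = unique_consecutive(semantic_ids)
print(collapsed)

generated = [7, 3, EOS_ID, 9, 9]
print(truncate_at_eos(generated, EOS_ID))
```

Because collapsing changes the sequence length per sample, the position of the last real token differs across a batch, which is the situation the eos-related items above have to account for.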

@inproceedings { Borsos2022AudioLMAL ,

title = { AudioLM: a Language Modeling Approach to Audio Generation } ,

author = { Zal{\'a}n Borsos and Rapha{\"e}l Marinier and Damien Vincent and Eugene Kharitonov and Olivier Pietquin and Matthew Sharifi and Olivier Teboul and David Grangier and Marco Tagliasacchi and Neil Zeghidour } ,

year = { 2022 }

}

@misc { https://doi.org/10.48550/arxiv.2107.03312 ,

title = { SoundStream: An End-to-End Neural Audio Codec } ,

author = { Zeghidour, Neil and Luebs, Alejandro and Omran, Ahmed and Skoglund, Jan and Tagliasacchi, Marco } ,

publisher = { arXiv } ,

url = { https://arxiv.org/abs/2107.03312 } ,

year = { 2021 }

}

@misc { shazeer2020glu ,

title = { GLU Variants Improve Transformer } ,

author = { Noam Shazeer } ,

year = { 2020 } ,

url = { https://arxiv.org/abs/2002.05202 }

}

@article { Shazeer2019FastTD ,

title = { Fast Transformer Decoding: One Write-Head is All You Need } ,

author = { Noam M. Shazeer } ,

journal = { ArXiv } ,

year = { 2019 } ,

volume = { abs/1911.02150 }

}

@article { Ho2022ClassifierFreeDG ,

title = { Classifier-Free Diffusion Guidance } ,

author = { Jonathan Ho } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2207.12598 }

}

@misc { crowson2022 ,

author = { Katherine Crowson } ,

url = { https://twitter.com/rivershavewings }

}

@misc { ding2021cogview ,

title = { CogView: Mastering Text-to-Image Generation via Transformers } ,

author = { Ming Ding and Zhuoyi Yang and Wenyi Hong and Wendi Zheng and Chang Zhou and Da Yin and Junyang Lin and Xu Zou and Zhou Shao and Hongxia Yang and Jie Tang } ,

year = { 2021 } ,

eprint = { 2105.13290 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CV }

}

@article { Liu2022FCMFC ,

title = { FCM: Forgetful Causal Masking Makes Causal Language Models Better Zero-Shot Learners } ,

author = { Hao Liu and Xinyang Geng and Lisa Lee and Igor Mordatch and Sergey Levine and Sharan Narang and P. Abbeel } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2210.13432 }

}

@inproceedings { anonymous2022normformer ,

title = { NormFormer: Improved Transformer Pretraining with Extra Normalization } ,

author = { Anonymous } ,

booktitle = { Submitted to The Tenth International Conference on Learning Representations } ,

year = { 2022 } ,

url = { https://openreview.net/forum?id=GMYWzWztDx5 } ,

note = { under review }

}

@misc { liu2021swin ,

title = { Swin Transformer V2: Scaling Up Capacity and Resolution } ,

author = { Ze Liu and Han Hu and Yutong Lin and Zhuliang Yao and Zhenda Xie and Yixuan Wei and Jia Ning and Yue Cao and Zheng Zhang and Li Dong and Furu Wei and Baining Guo } ,

year = { 2021 } ,

eprint = { 2111.09883 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CV }

}

@article { Li2021LocalViTBL ,

title = { LocalViT: Bringing Locality to Vision Transformers } ,

author = { Yawei Li and K. Zhang and Jie Cao and Radu Timofte and Luc Van Gool } ,

journal = { ArXiv } ,

year = { 2021 } ,

volume = { abs/2104.05707 }

}

@article { Defossez2022HighFN ,

title = { High Fidelity Neural Audio Compression } ,

author = { Alexandre D{\'e}fossez and Jade Copet and Gabriel Synnaeve and Yossi Adi } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2210.13438 }

}

@article { Hu2017SqueezeandExcitationN ,

title = { Squeeze-and-Excitation Networks } ,

author = { Jie Hu and Li Shen and Gang Sun } ,

journal = { 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition } ,

year = { 2017 } ,

pages = { 7132-7141 }

}

@inproceedings { Yang2023HiFiCodecGV ,

title = { HiFi-Codec: Group-residual Vector quantization for High Fidelity Audio Codec } ,

author = { Dongchao Yang and Songxiang Liu and Rongjie Huang and Jinchuan Tian and Chao Weng and Yuexian Zou } ,

year = { 2023 }

}

@article { Kazemnejad2023TheIO ,

title = { The Impact of Positional Encoding on Length Generalization in Transformers } ,

author = { Amirhossein Kazemnejad and Inkit Padhi and Karthikeyan Natesan Ramamurthy and Payel Das and Siva Reddy } ,

journal = { ArXiv } ,

year = { 2023 } ,

volume = { abs/2305.19466 }

}

@inproceedings { dao2022flashattention ,

title = { Flash{A}ttention: Fast and Memory-Efficient Exact Attention with {IO}-Awareness } ,

author = { Dao, Tri and Fu, Daniel Y. and Ermon, Stefano and Rudra, Atri and R{\'e}, Christopher } ,

booktitle = { Advances in Neural Information Processing Systems } ,

year = { 2022 }

}

@misc { yu2023language ,

title = { Language Model Beats Diffusion -- Tokenizer is Key to Visual Generation } ,

author = { Lijun Yu and José Lezama and Nitesh B. Gundavarapu and Luca Versari and Kihyuk Sohn and David Minnen and Yong Cheng and Agrim Gupta and Xiuye Gu and Alexander G. Hauptmann and Boqing Gong and Ming-Hsuan Yang and Irfan Essa and David A. Ross and Lu Jiang } ,

year = { 2023 } ,

eprint = { 2310.05737 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CV }

}

@inproceedings { Katsch2023GateLoopFD ,

title = { GateLoop: Fully Data-Controlled Linear Recurrence for Sequence Modeling } ,

author = { Tobias Katsch } ,

year = { 2023 } ,

url = { https://api.semanticscholar.org/CorpusID:265018962 }

}

@article { Fifty2024Restructuring ,

title = { Restructuring Vector Quantization with the Rotation Trick } ,

author = { Christopher Fifty and Ronald G. Junkins and Dennis Duan and Aniketh Iyengar and Jerry W. Liu and Ehsan Amid and Sebastian Thrun and Christopher Ré } ,

journal = { ArXiv } ,

year = { 2024 } ,

volume = { abs/2410.06424 } ,

url = { https://api.semanticscholar.org/CorpusID:273229218 }

}

@inproceedings { Zhou2024ValueRL ,

title = { Value Residual Learning For Alleviating Attention Concentration In Transformers } ,

author = { Zhanchao Zhou and Tianyi Wu and Zhiyun Jiang and Zhenzhong Lan } ,

year = { 2024 } ,

url = { https://api.semanticscholar.org/CorpusID:273532030 }

}