egnn pytorch

0.2.8

** 在有屏蔽的情況下,發現鄰居選擇有錯誤。如果您在 0.1.12 之前執行過任何具有屏蔽的實驗,請重新執行它們。 **

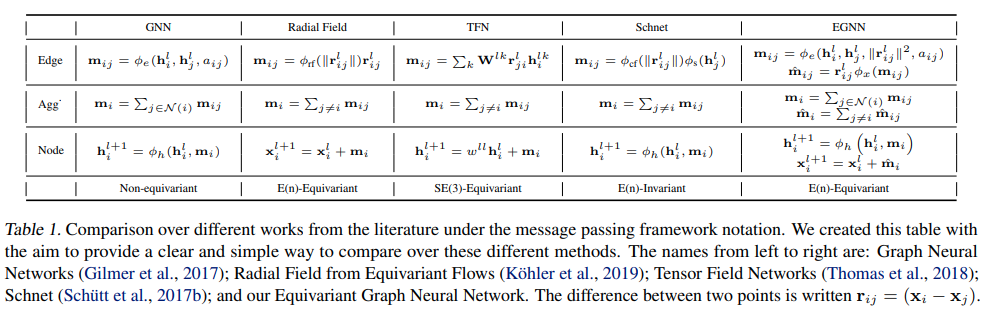

在 Pytorch 實現 E(n)-等變圖神經網路。最終可能用於 Alphafold2 複製。該技術追求簡單的不變特徵,最終在準確性和性能方面擊敗了所有先前的方法(包括 SE3 Transformer 和 Lie Conv)。動力系統模型、分子活性預測任務等中的SOTA

$ pip install egnn-pytorch import torch

from egnn_pytorch import EGNN

layer1 = EGNN ( dim = 512 )

layer2 = EGNN ( dim = 512 )

feats = torch . randn ( 1 , 16 , 512 )

coors = torch . randn ( 1 , 16 , 3 )

feats , coors = layer1 ( feats , coors )

feats , coors = layer2 ( feats , coors ) # (1, 16, 512), (1, 16, 3)有邊

import torch

from egnn_pytorch import EGNN

layer1 = EGNN ( dim = 512 , edge_dim = 4 )

layer2 = EGNN ( dim = 512 , edge_dim = 4 )

feats = torch . randn ( 1 , 16 , 512 )

coors = torch . randn ( 1 , 16 , 3 )

edges = torch . randn ( 1 , 16 , 16 , 4 )

feats , coors = layer1 ( feats , coors , edges )

feats , coors = layer2 ( feats , coors , edges ) # (1, 16, 512), (1, 16, 3)完整的 EGNN 網絡

import torch

from egnn_pytorch import EGNN_Network

net = EGNN_Network (

num_tokens = 21 ,

num_positions = 1024 , # unless what you are passing in is an unordered set, set this to the maximum sequence length

dim = 32 ,

depth = 3 ,

num_nearest_neighbors = 8 ,

coor_weights_clamp_value = 2. # absolute clamped value for the coordinate weights, needed if you increase the num neareest neighbors

)

feats = torch . randint ( 0 , 21 , ( 1 , 1024 )) # (1, 1024)

coors = torch . randn ( 1 , 1024 , 3 ) # (1, 1024, 3)

mask = torch . ones_like ( feats ). bool () # (1, 1024)

feats_out , coors_out = net ( feats , coors , mask = mask ) # (1, 1024, 32), (1, 1024, 3)只關注稀疏鄰居,以鄰接矩陣的形式提供給網路。

import torch

from egnn_pytorch import EGNN_Network

net = EGNN_Network (

num_tokens = 21 ,

dim = 32 ,

depth = 3 ,

only_sparse_neighbors = True

)

feats = torch . randint ( 0 , 21 , ( 1 , 1024 ))

coors = torch . randn ( 1 , 1024 , 3 )

mask = torch . ones_like ( feats ). bool ()

# naive adjacency matrix

# assuming the sequence is connected as a chain, with at most 2 neighbors - (1024, 1024)

i = torch . arange ( 1024 )

adj_mat = ( i [:, None ] >= ( i [ None , :] - 1 )) & ( i [:, None ] <= ( i [ None , :] + 1 ))

feats_out , coors_out = net ( feats , coors , mask = mask , adj_mat = adj_mat ) # (1, 1024, 32), (1, 1024, 3)您還可以讓網路自動確定 N 階鄰居,並傳入鄰接嵌入(取決於順序)以用作邊,並帶有兩個額外的關鍵字參數

import torch

from egnn_pytorch import EGNN_Network

net = EGNN_Network (

num_tokens = 21 ,

dim = 32 ,

depth = 3 ,

num_adj_degrees = 3 , # fetch up to 3rd degree neighbors

adj_dim = 8 , # pass an adjacency degree embedding to the EGNN layer, to be used in the edge MLP

only_sparse_neighbors = True

)

feats = torch . randint ( 0 , 21 , ( 1 , 1024 ))

coors = torch . randn ( 1 , 1024 , 3 )

mask = torch . ones_like ( feats ). bool ()

# naive adjacency matrix

# assuming the sequence is connected as a chain, with at most 2 neighbors - (1024, 1024)

i = torch . arange ( 1024 )

adj_mat = ( i [:, None ] >= ( i [ None , :] - 1 )) & ( i [:, None ] <= ( i [ None , :] + 1 ))

feats_out , coors_out = net ( feats , coors , mask = mask , adj_mat = adj_mat ) # (1, 1024, 32), (1, 1024, 3) 如果需要傳入連續的邊

import torch

from egnn_pytorch import EGNN_Network

net = EGNN_Network (

num_tokens = 21 ,

dim = 32 ,

depth = 3 ,

edge_dim = 4 ,

num_nearest_neighbors = 3

)

feats = torch . randint ( 0 , 21 , ( 1 , 1024 ))

coors = torch . randn ( 1 , 1024 , 3 )

mask = torch . ones_like ( feats ). bool ()

continuous_edges = torch . randn ( 1 , 1024 , 1024 , 4 )

# naive adjacency matrix

# assuming the sequence is connected as a chain, with at most 2 neighbors - (1024, 1024)

i = torch . arange ( 1024 )

adj_mat = ( i [:, None ] >= ( i [ None , :] - 1 )) & ( i [:, None ] <= ( i [ None , :] + 1 ))

feats_out , coors_out = net ( feats , coors , edges = continuous_edges , mask = mask , adj_mat = adj_mat ) # (1, 1024, 32), (1, 1024, 3) 當鄰居數量較多時,EGNN 的初始架構會出現不穩定的情況。值得慶幸的是,似乎有兩種解決方案可以在很大程度上緩解這種情況。

import torch

from egnn_pytorch import EGNN_Network

net = EGNN_Network (

num_tokens = 21 ,

dim = 32 ,

depth = 3 ,

num_nearest_neighbors = 32 ,

norm_coors = True , # normalize the relative coordinates

coor_weights_clamp_value = 2. # absolute clamped value for the coordinate weights, needed if you increase the num neareest neighbors

)

feats = torch . randint ( 0 , 21 , ( 1 , 1024 )) # (1, 1024)

coors = torch . randn ( 1 , 1024 , 3 ) # (1, 1024, 3)

mask = torch . ones_like ( feats ). bool () # (1, 1024)

feats_out , coors_out = net ( feats , coors , mask = mask ) # (1, 1024, 32), (1, 1024, 3) import torch

from egnn_pytorch import EGNN

model = EGNN (

dim = dim , # input dimension

edge_dim = 0 , # dimension of the edges, if exists, should be > 0

m_dim = 16 , # hidden model dimension

fourier_features = 0 , # number of fourier features for encoding of relative distance - defaults to none as in paper

num_nearest_neighbors = 0 , # cap the number of neighbors doing message passing by relative distance

dropout = 0.0 , # dropout

norm_feats = False , # whether to layernorm the features

norm_coors = False , # whether to normalize the coordinates, using a strategy from the SE(3) Transformers paper

update_feats = True , # whether to update features - you can build a layer that only updates one or the other

update_coors = True , # whether ot update coordinates

only_sparse_neighbors = False , # using this would only allow message passing along adjacent neighbors, using the adjacency matrix passed in

valid_radius = float ( 'inf' ), # the valid radius each node considers for message passing

m_pool_method = 'sum' , # whether to mean or sum pool for output node representation

soft_edges = False , # extra GLU on the edges, purportedly helps stabilize the network in updated version of the paper

coor_weights_clamp_value = None # clamping of the coordinate updates, again, for stabilization purposes

)要運行蛋白質主幹去噪範例,首先安裝sidechainnet

$ pip install sidechainnet然後

$ python denoise_sparse.py確保本機安裝了 pytorch 幾何

$ python setup.py test @misc { satorras2021en ,

title = { E(n) Equivariant Graph Neural Networks } ,

author = { Victor Garcia Satorras and Emiel Hoogeboom and Max Welling } ,

year = { 2021 } ,

eprint = { 2102.09844 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.LG }

}