pi zero pytorch

0.1.5

Implementation of π₀, the robotic foundation model architecture proposed by Physical Intelligence

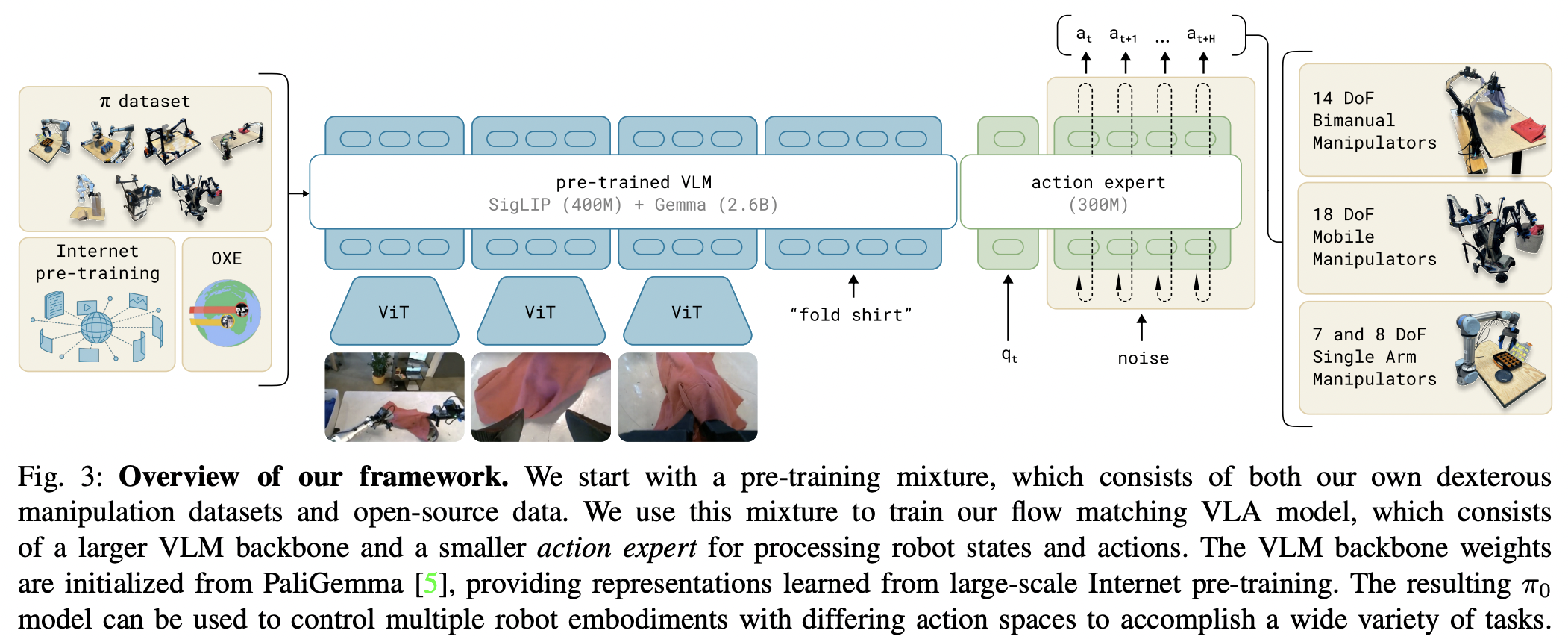

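In summary, this work is a simplified Transfusion (Zhou et al.) with influence from Stable Diffusion 3 (Esser et al.): mainly the adoption of flow matching instead of diffusion for policy generation, and the separation of parameters (joint attention from mmDIT). It builds on top of a pretrained vision-language model, PaliGemma 2B.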
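To make the flow matching idea concrete, here is a minimal sketch in plain PyTorch. It is not this repository's implementation: the function names `flow_matching_pair` and `euler_sample` are hypothetical, the convention (noise at time 0, actions at time 1, straight-line interpolation) is an assumption, and an oracle velocity field that already knows the target actions stands in for a trained network.

```python
import torch

def flow_matching_pair(actions, noise, times):
    """Return the interpolated sample and the velocity target (assumed convention)."""
    t = times.view(-1, 1, 1)                   # broadcast over (trajectory, action dim)
    noised = (1. - t) * noise + t * actions    # point on the straight line noise -> actions
    target_velocity = actions - noise          # constant velocity along that line
    return noised, target_velocity

@torch.no_grad()
def euler_sample(velocity_fn, noise, steps = 10):
    """Integrate dx/dt = velocity_fn(x, t) from t = 0 to t = 1 with Euler steps."""
    x = noise
    dt = 1. / steps
    for i in range(steps):
        t = torch.full((x.shape[0],), i * dt)
        x = x + dt * velocity_fn(x, t)
    return x

torch.manual_seed(0)

actions = torch.randn(2, 32, 6)    # (batch, trajectory length, action dim)
noise = torch.randn_like(actions)
times = torch.rand(2)

# training side: a network would be regressed onto `target`, e.g. with an MSE loss
noised, target = flow_matching_pair(actions, noise, times)

# sampling side: oracle velocity pointing at the known actions (stand-in for a trained model)
def oracle_velocity(x, t):
    return (actions - x) / (1. - t.view(-1, 1, 1))

sampled = euler_sample(oracle_velocity, noise, steps = 10)
```

With the oracle field, the Euler integration lands on the target actions. A trained network would only approximate this, which is why the number of integration steps matters in practice.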

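Thanks to Einops for the amazing `pack` and `unpack`, used extensively here for managing the various token sets

Thanks to Flex Attention for allowing an easy mixture of autoregressive and bidirectional attention

Thanks to @Wonder1905 for the code review and identifying issues

You? Maybe a PhD student who wants to contribute to the latest SOTA architecture for behavioral cloning?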
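As an illustration of mixing autoregressive and bidirectional attention, the sketch below builds a mask rule that is causal everywhere but lets a trailing block of tokens attend to each other freely. The exact rule and the name `prefix_causal_suffix_bidirectional` are assumptions made for this example, not necessarily what this repository uses. The inner function follows the `(batch, head, query index, key index)` signature that Flex Attention expects for a `mask_mod`. It is evaluated here on plain Python integers so the resulting pattern is easy to inspect.

```python
def prefix_causal_suffix_bidirectional(prefix_length):
    """Build a mask rule: causal everywhere, bidirectional within the suffix."""
    def mask_mod(batch_index, head_index, query_index, key_index):
        causal = query_index >= key_index
        both_in_suffix = (query_index >= prefix_length) & (key_index >= prefix_length)
        return causal | both_in_suffix
    return mask_mod

seq_len, prefix_length = 6, 3
mask_mod = prefix_causal_suffix_bidirectional(prefix_length)

# materialize the dense mask: rows are queries, columns are keys
mask = [[bool(mask_mod(0, 0, q, k)) for k in range(seq_len)] for q in range(seq_len)]

for row in mask:
    print(''.join('x' if allowed else '.' for allowed in row))

# x.....
# xx....
# xxx...
# xxxxxx
# xxxxxx
# xxxxxx
```

The first three rows are the causal prefix. The last three rows are the suffix, which sees the whole prefix and all of itself. Because the rule only uses comparisons with `&` and `|`, the same function also works elementwise on index tensors.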

    $ pip install pi-zero-pytorch

    import torch
    from pi_zero_pytorch import π0

    model = π0(
        dim = 512,
        dim_action_input = 6,
        dim_joint_state = 12,
        num_tokens = 20_000
    )

    vision = torch.randn(1, 1024, 512)
    commands = torch.randint(0, 20_000, (1, 1024))
    joint_state = torch.randn(1, 12)
    actions = torch.randn(1, 32, 6)

    loss, _ = model(vision, commands, joint_state, actions)
    loss.backward()

    # after much training

    sampled_actions = model(vision, commands, joint_state, trajectory_length = 32) # (1, 32, 6)

At the project root, run

    $ pip install '.[test]' # or `uv pip install '.[test]'`

Then add your tests to `tests/test_pi_zero.py` and run

    $ pytest tests/

That's it

    @misc{Black2024,
        author  = {Kevin Black, Noah Brown, Danny Driess, Adnan Esmail, Michael Equi, Chelsea Finn, Niccolo Fusai, Lachy Groom, Karol Hausman, Brian Ichter, Szymon Jakubczak, Tim Jones, Liyiming Ke, Sergey Levine, Adrian Li-Bell, Mohith Mothukuri, Suraj Nair, Karl Pertsch, Lucy Xiaoyang Shi, James Tanner, Quan Vuong, Anna Walling, Haohuan Wang, Ury Zhilinsky},
        url     = {https://www.physicalintelligence.company/download/pi0.pdf}
    }

    @inproceedings{Zhou2024ValueRL,
        title   = {Value Residual Learning For Alleviating Attention Concentration In Transformers},
        author  = {Zhanchao Zhou and Tianyi Wu and Zhiyun Jiang and Zhenzhong Lan},
        year    = {2024},
        url     = {https://api.semanticscholar.org/CorpusID:273532030}
    }

    @inproceedings{Darcet2023VisionTN,
        title   = {Vision Transformers Need Registers},
        author  = {Timoth{\'e}e Darcet and Maxime Oquab and Julien Mairal and Piotr Bojanowski},
        year    = {2023},
        url     = {https://api.semanticscholar.org/CorpusID:263134283}
    }

    @article{Li2024ImmiscibleDA,
        title   = {Immiscible Diffusion: Accelerating Diffusion Training with Noise Assignment},
        author  = {Yiheng Li and Heyang Jiang and Akio Kodaira and Masayoshi Tomizuka and Kurt Keutzer and Chenfeng Xu},
        journal = {ArXiv},
        year    = {2024},
        volume  = {abs/2406.12303},
        url     = {https://api.semanticscholar.org/CorpusID:270562607}
    }

    @inproceedings{Sadat2024EliminatingOA,
        title   = {Eliminating Oversaturation and Artifacts of High Guidance Scales in Diffusion Models},
        author  = {Seyedmorteza Sadat and Otmar Hilliges and Romann M. Weber},
        year    = {2024},
        url     = {https://api.semanticscholar.org/CorpusID:273098845}
    }

    @article{Bulatov2022RecurrentMT,
        title   = {Recurrent Memory Transformer},
        author  = {Aydar Bulatov and Yuri Kuratov and Mikhail S. Burtsev},
        journal = {ArXiv},
        year    = {2022},
        volume  = {abs/2207.06881},
        url     = {https://api.semanticscholar.org/CorpusID:250526424}
    }

    @inproceedings{Bessonov2023RecurrentAT,
        title   = {Recurrent Action Transformer with Memory},
        author  = {A. B. Bessonov and Alexey Staroverov and Huzhenyu Zhang and Alexey K. Kovalev and D. Yudin and Aleksandr I. Panov},
        year    = {2023},
        url     = {https://api.semanticscholar.org/CorpusID:259188030}
    }

    @article{Zhu2024HyperConnections,
        title   = {Hyper-Connections},
        author  = {Defa Zhu and Hongzhi Huang and Zihao Huang and Yutao Zeng and Yunyao Mao and Banggu Wu and Qiyang Min and Xun Zhou},
        journal = {ArXiv},
        year    = {2024},
        volume  = {abs/2409.19606},
        url     = {https://api.semanticscholar.org/CorpusID:272987528}
    }

Dear Alice