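This is the repo for our TMLR survey Unifying the Perspectives of NLP and Software Engineering: A Survey on Language Models for Code - a comprehensive review of LLM research for code. Works in each category are ordered chronologically. If you have a basic understanding of machine learning but are new to NLP, we also provide a list of recommended readings in Section 9.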

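[2024/11/28] Featured papers:

Preference Optimization for Reasoning with Pseudo Feedback from Nanyang Technological University.

ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data from Scribe.

Planning-Driven Programming: A Large Language Model Programming Workflow from The University of Melbourne.

Repository-level Code Translation Benchmark Targeting Rust from Sun Yat-sen University.

Leveraging Prior Experience: An Expandable Auxiliary Knowledge Base for Text-to-SQL from University of Science and Technology of China.

CodeXEmbed: A Generalist Embedding Model Family for Multilingual and Multi-task Code Retrieval from Salesforce AI Research.

ProSec: Fortifying Code LLMs with Proactive Security Alignment from Purdue University.

[2024/10/22] We have compiled 70 papers from September and October 2024 in one WeChat article.

[2024/09/06] Our survey has been accepted for publication by Transactions on Machine Learning Research (TMLR).

[2024/09/14] We have compiled 57 papers (including 48 presented at ACL 2024) in one WeChat article.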

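If you find a paper to be missing from this repository, misplaced in a category, or lacking a reference to its journal/conference information, please do not hesitate to create an issue. If you find this repository helpful, please cite our survey: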

@article{zhang2024unifying,
  title={Unifying the Perspectives of {NLP} and Software Engineering: A Survey on Language Models for Code},
  author={Ziyin Zhang and Chaoyu Chen and Bingchang Liu and Cong Liao and Zi Gong and Hang Yu and Jianguo Li and Rui Wang},
  journal={Transactions on Machine Learning Research},
  issn={2835-8856},
  year={2024},
  url={https://openreview.net/forum?id=hkNnGqZnpa},
  note={}
}

1. Surveys

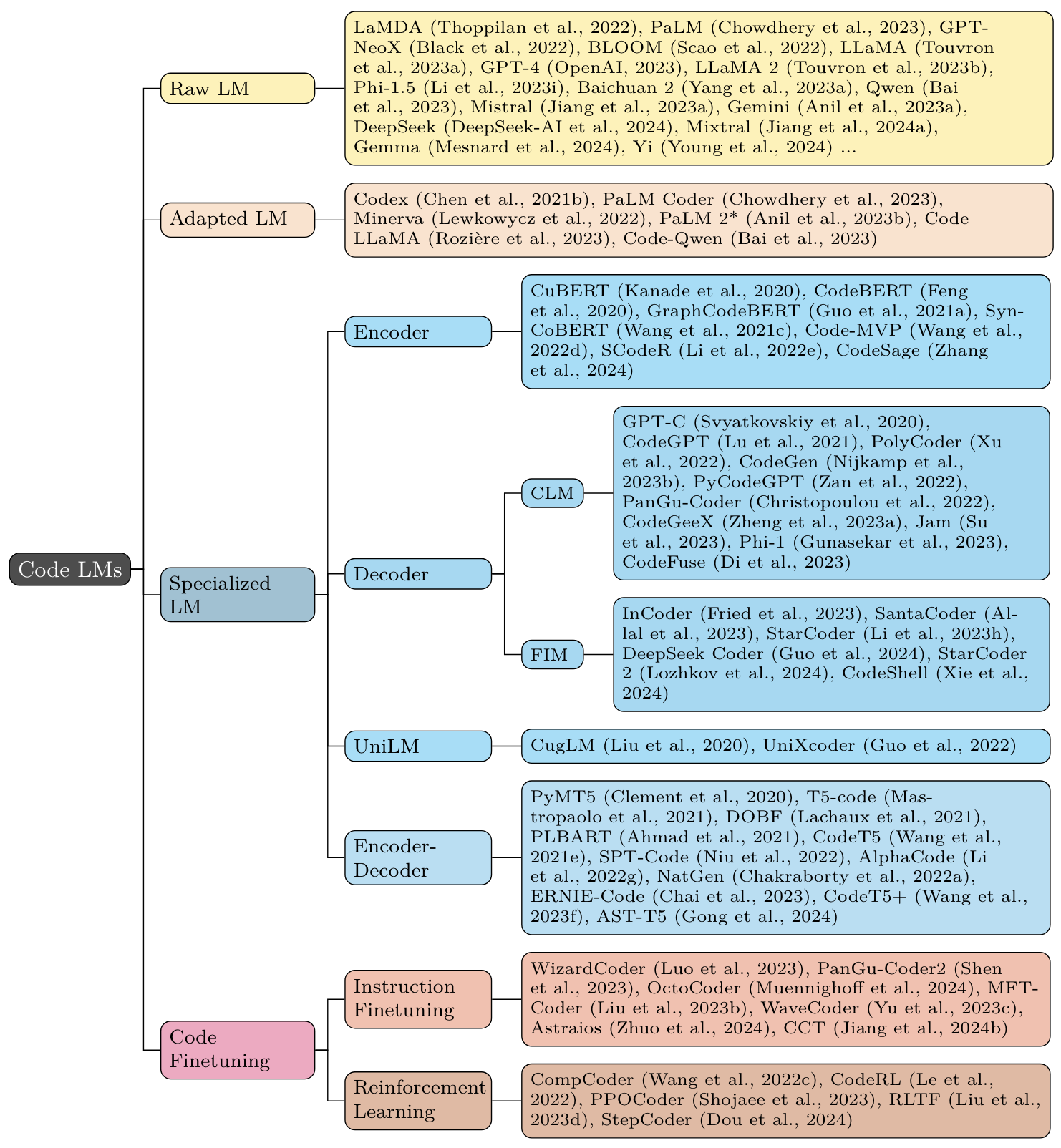

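2. Models

2.1 Base LLMs and Pretraining Strategies

2.2 Existing LLM Adapted to Code

2.3 General Pretraining on Code

2.4 (Instruction) Fine-Tuning on Code

2.5 Reinforcement Learning on Code

3. When Coding Meets Reasoning

3.1 Coding for Reasoning

3.2 Code Simulation

3.3 Code Agents

3.4 Interactive Coding

3.5 Frontend Navigation

4. Code LLM for Low-Resource, Low-Level, and Domain-Specific Languages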

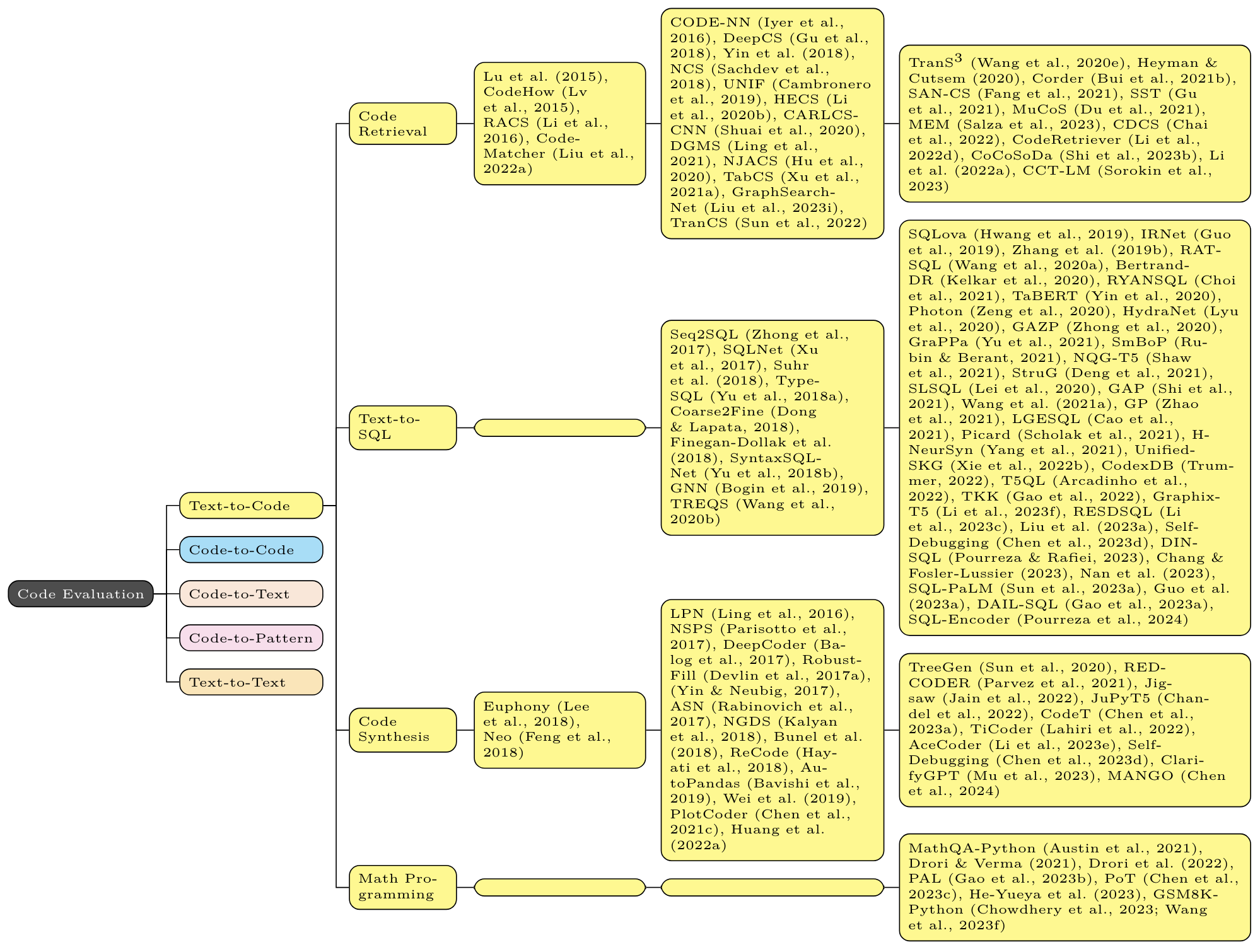

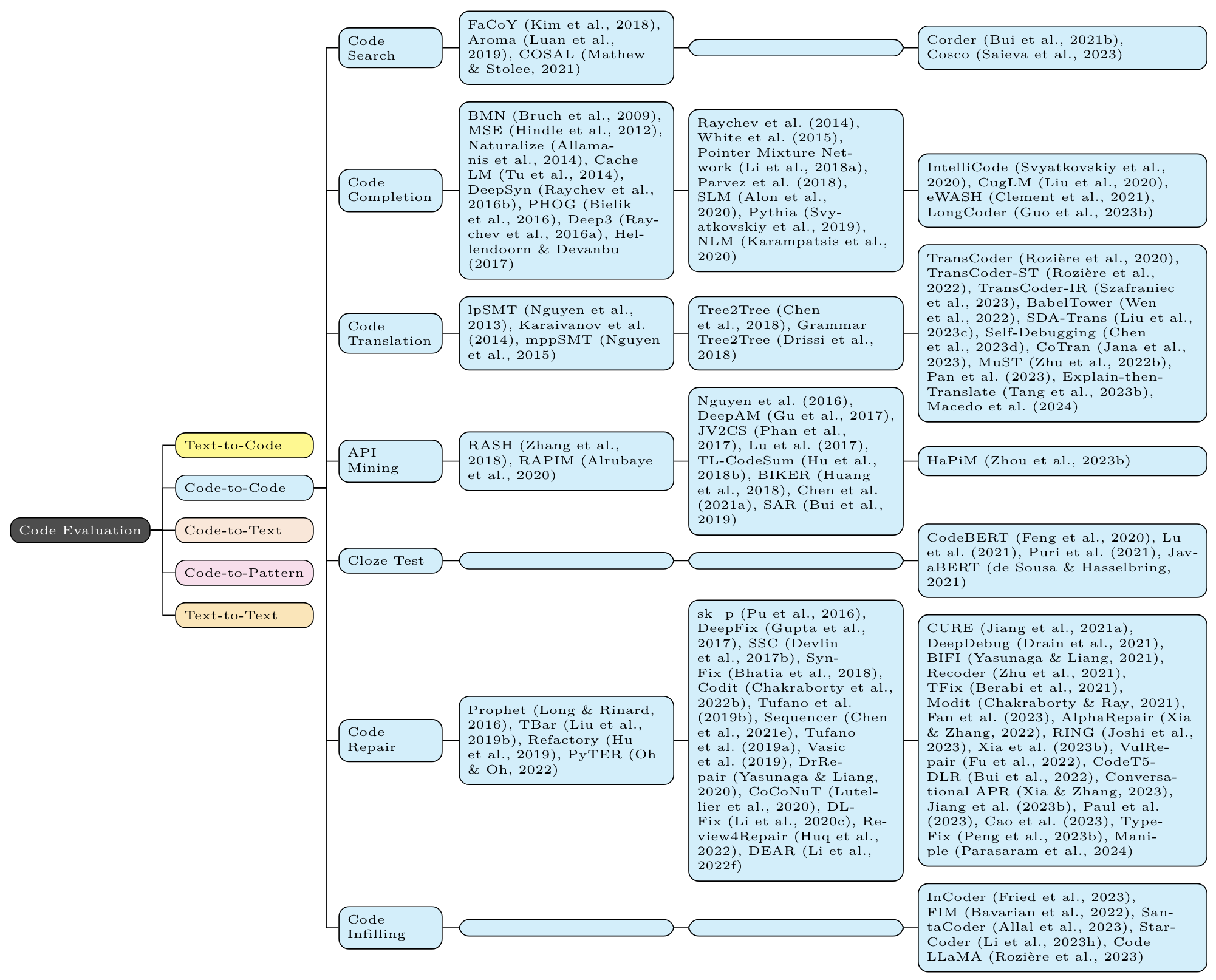

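5. Methods/Models for Downstream Tasks

Programming

Testing and Deployment

DevOps

Requirement

6. Analysis of AI-Generated Code

7. Human-LLM Interaction

8. Datasets

8.1 Pretraining

8.2 Benchmarks

9. Recommended Readings

10. Citation

11. Star History

12. Join Us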

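We list several recent surveys on similar topics. While they are all about language models for code, 1-2 focus on the NLP side; 3-6 focus on the SE side; 7-11 were released after ours.

"Large Language Models Meet NL2Code: A Survey" [2022-12] [ACL 2023] [paper]

"A Survey on Pretrained Language Models for Neural Code Intelligence" [2022-12] [paper]

"An Empirical Comparison of Pre-Trained Models of Source Code" [2023-02] [ICSE 2023] [paper]

"Large Language Models for Software Engineering: A Systematic Literature Review" [2023-08] [paper]

"Towards an Understanding of Large Language Models in Software Engineering Tasks" [2023-08] [paper]

"Pitfalls in Language Models for Code Intelligence: A Taxonomy and Survey" [2023-10] [paper]

"A Survey on Large Language Models for Software Engineering" [2023-12] [paper]

"Deep Learning for Code Intelligence: Survey, Benchmark and Toolkit" [2023-12] [paper]

"A Survey of Neural Code Intelligence: Paradigms, Advances and Beyond" [2024-03] [paper]

"Tasks People Prompt: A Taxonomy of LLM Downstream Tasks in Software Verification and Falsification Approaches" [2024-04] [paper]

"Automatic Programming: Large Language Models and Beyond" [2024-05] [paper]

"Software Engineering and Foundation Models: Insights from Industry Blogs Using a Jury of Foundation Models" [2024-10] [paper]

"Deep Learning-based Software Engineering: Progress, Challenges, and Opportunities" [2024-10] [paper]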

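These LLMs are not specifically trained for code, but have demonstrated varying coding capability.

LaMDA: "LaMDA: Language Models for Dialog Applications" [2022-01] [paper]

PaLM: "PaLM: Scaling Language Modeling with Pathways" [2022-04] [JMLR] [paper]

GPT-NeoX: "GPT-NeoX-20B: An Open-Source Autoregressive Language Model" [2022-04] [ACL 2022 Workshop on Challenges & Perspectives in Creating LLMs] [paper] [repo]

BLOOM: "BLOOM: A 176B-Parameter Open-Access Multilingual Language Model" [2022-11] [paper] [model]

LLaMA: "LLaMA: Open and Efficient Foundation Language Models" [2023-02] [paper]

GPT-4: "GPT-4 Technical Report" [2023-03] [paper]

LLaMA 2: "Llama 2: Open Foundation and Fine-Tuned Chat Models" [2023-07] [paper] [repo]

Phi-1.5: "Textbooks Are All You Need II: phi-1.5 technical report" [2023-09] [paper] [model]

Baichuan 2: "Baichuan 2: Open Large-scale Language Models" [2023-09] [paper] [repo]

Qwen: "Qwen Technical Report" [2023-09] [paper] [repo]

Mistral: "Mistral 7B" [2023-10] [paper] [repo]

Gemini: "Gemini: A Family of Highly Capable Multimodal Models" [2023-12] [paper]

Phi-2: "Phi-2: The surprising power of small language models" [2023-12] [blog]

YAYI2: "YAYI 2: Multilingual Open-Source Large Language Models" [2023-12] [paper] [repo]

DeepSeek: "DeepSeek LLM: Scaling Open-Source Language Models with Longtermism" [2024-01] [paper] [repo]

Mixtral: "Mixtral of Experts" [2024-01] [paper] [blog]

DeepSeekMoE: "DeepSeekMoE: Towards Ultimate Expert Specialization in Mixture-of-Experts Language Models" [2024-01] [paper] [repo]

Orion: "Orion-14B: Open-source Multilingual Large Language Models" [2024-01] [paper] [repo]

OLMo: "OLMo: Accelerating the Science of Language Models" [2024-02] [paper] [repo]

Gemma: "Gemma: Open Models Based on Gemini Research and Technology" [2024-02] [paper] [blog]

Claude 3: "The Claude 3 Model Family: Opus, Sonnet, Haiku" [2024-03] [paper] [blog]

Yi: "Yi: Open Foundation Models by 01.AI" [2024-03] [paper] [repo]

Poro: "Poro 34B and the Blessing of Multilinguality" [2024-04] [paper] [model]

JetMoE: "JetMoE: Reaching Llama2 Performance with 0.1M Dollars" [2024-04] [paper] [repo]

LLaMA 3: "The Llama 3 Herd of Models" [2024-04] [blog] [repo] [paper]

Reka Core: "Reka Core, Flash, and Edge: A Series of Powerful Multimodal Language Models" [2024-04] [paper]

Phi-3: "Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone" [2024-04] [paper]

OpenELM: "OpenELM: An Efficient Language Model Family with Open-source Training and Inference Framework" [2024-04] [paper] [repo]

Tele-FLM: "Tele-FLM Technical Report" [2024-04] [paper] [model]

DeepSeek-V2: "DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model" [2024-05] [paper] [repo]

GECKO: "GECKO: Generative Language Model for English, Code and Korean" [2024-05] [paper] [model]

MAP-Neo: "MAP-Neo: Highly Capable and Transparent Bilingual Large Language Model Series" [2024-05] [paper] [repo]

Skywork-MoE: "Skywork-MoE: A Deep Dive into Training Techniques for Mixture-of-Experts Language Models" [2024-06] [paper]

Xmodel-LM: "Xmodel-LM Technical Report" [2024-06] [paper]

GEB: "GEB-1.3B: Open Lightweight Large Language Model" [2024-06] [paper]

HARE: "HARE: HumAn pRiors, a key to small language model Efficiency" [2024-06] [paper]

DCLM: "DataComp-LM: In search of the next generation of training sets for language models" [2024-06] [paper]

Nemotron-4: "Nemotron-4 340B Technical Report" [2024-06] [paper]

ChatGLM: "ChatGLM: A Family of Large Language Models from GLM-130B to GLM-4 All Tools" [2024-06] [paper]

YuLan: "YuLan: An Open-source Large Language Model" [2024-06] [paper]

Gemma 2: "Gemma 2: Improving Open Language Models at a Practical Size" [2024-06] [paper]

H2O-Danube3: "H2O-Danube3 Technical Report" [2024-07] [paper]

Qwen2: "Qwen2 Technical Report" [2024-07] [paper]

ALLaM: "ALLaM: Large Language Models for Arabic and English" [2024-07] [paper]

SeaLLMs 3: "SeaLLMs 3: Open Foundation and Chat Multilingual Large Language Models for Southeast Asian Languages" [2024-07] [paper]

AFM: "Apple Intelligence Foundation Language Models" [2024-07] [paper]

"To Code, or Not To Code? Exploring Impact of Code in Pre-training" [2024-08] [paper]

OLMoE: "OLMoE: Open Mixture-of-Experts Language Models" [2024-09] [paper]

"How Does Code Pretraining Affect Language Model Task Performance?" [2024-09] [paper]

EuroLLM: "EuroLLM: Multilingual Language Models for Europe" [2024-09] [paper]

"Which Programming Language and What Features at Pre-training Stage Affect Downstream Logical Inference Performance?" [2024-10] [paper]

GPT-4o: "GPT-4o System Card" [2024-10] [paper]

Hunyuan-Large: "Hunyuan-Large: An Open-Source MoE Model with 52 Billion Activated Parameters by Tencent" [2024-11] [paper]

Crystal: "Crystal: Illuminating LLM Abilities on Language and Code" [2024-11] [paper]

Xmodel-1.5: "Xmodel-1.5: An 1B-scale Multilingual LLM" [2024-11] [paper]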

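These models are general-purpose LLMs further pretrained on code-related data.

Codex (GPT-3): "Evaluating Large Language Models Trained on Code" [2021-07] [paper]

PaLM Coder (PaLM): "PaLM: Scaling Language Modeling with Pathways" [2022-04] [JMLR] [paper]

Minerva (PaLM): "Solving Quantitative Reasoning Problems with Language Models" [2022-06] [paper]

PaLM 2 * (PaLM 2): "PaLM 2 Technical Report" [2023-05] [paper]

Code LLaMA (LLaMA 2): "Code Llama: Open Foundation Models for Code" [2023-08] [paper] [repo]

Lemur (LLaMA 2): "Lemur: Harmonizing Natural Language and Code for Language Agents" [2023-10] [ICLR 2024 Spotlight] [paper]

BTX (LLaMA 2): "Branch-Train-MiX: Mixing Expert LLMs into a Mixture-of-Experts LLM" [2024-03] [paper]

HiRoPE: "HiRoPE: Length Extrapolation for Code Models Using Hierarchical Position" [2024-03] [ACL 2024] [paper]

"Mastering Text, Code and Math Simultaneously via Fusing Highly Specialized Language Models" [2024-03] [paper]

CodeGemma: "CodeGemma: Open Code Models Based on Gemma" [2024-04] [paper] [model]

DeepSeek-Coder-V2: "DeepSeek-Coder-V2: Breaking the Barrier of Closed-Source Models in Code Intelligence" [2024-06] [paper]

"Promise and Peril of Collaborative Code Generation Models: Balancing Effectiveness and Memorization" [2024-09] [paper]

Qwen2.5-Coder: "Qwen2.5-Coder Technical Report" [2024-09] [paper]

Lingma SWE-GPT: "Lingma SWE-GPT: An Open Development-Process-Centric Language Model for Automated Software Improvement" [2024-11] [paper]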

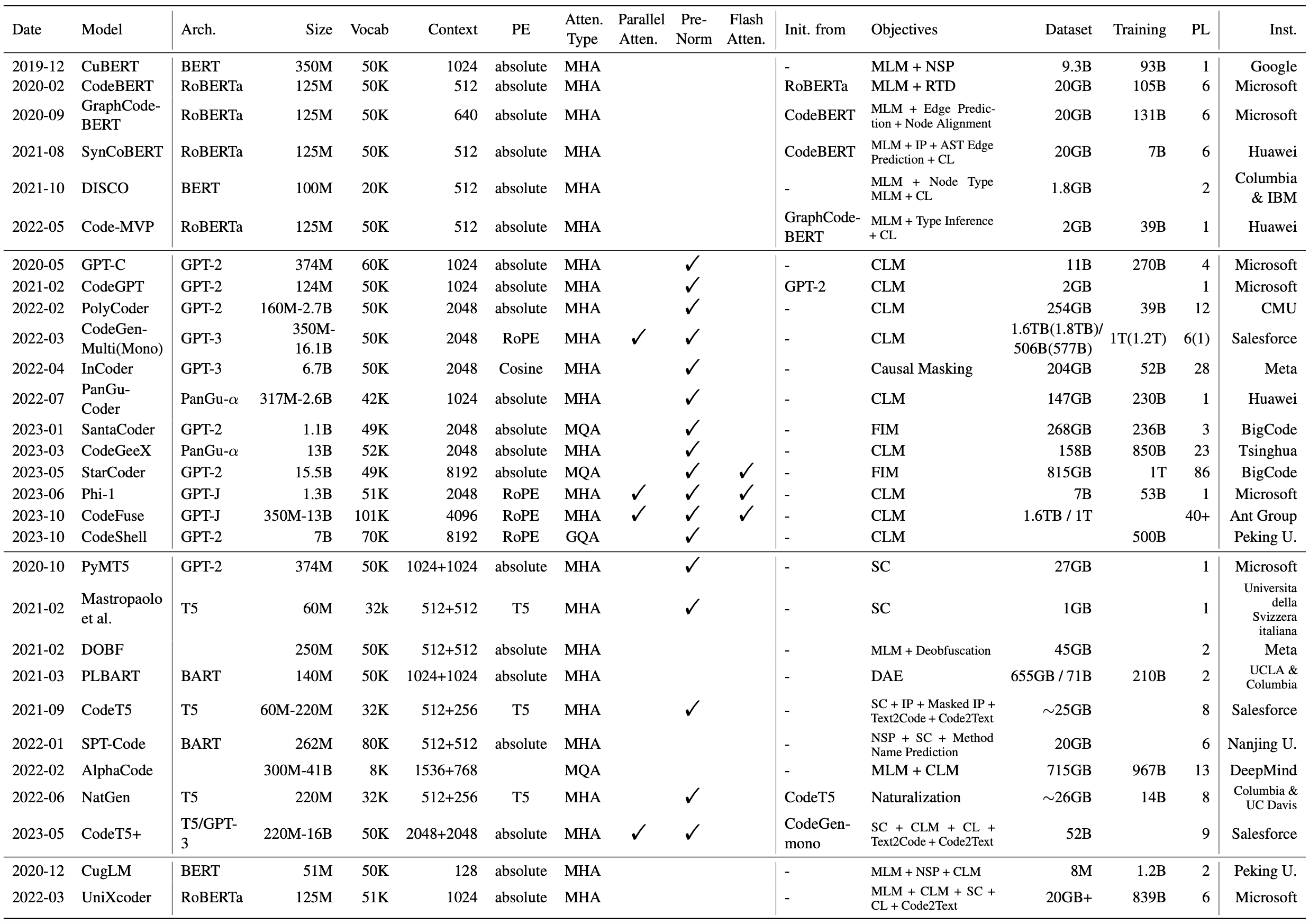

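These models are Transformer encoders, decoders, and encoder-decoders pretrained from scratch using existing objectives for general language modeling.

CuBERT (MLM + NSP): "Learning and Evaluating Contextual Embedding of Source Code" [2019-12] [ICML 2020] [paper] [repo]

CodeBERT (MLM + RTD): "CodeBERT: A Pre-Trained Model for Programming and Natural Languages" [2020-02] [EMNLP 2020 findings] [paper] [repo]

GraphCodeBERT (MLM + DFG Edge Prediction + DFG Node Alignment): "GraphCodeBERT: Pre-training Code Representations with Data Flow" [2020-09] [ICLR 2021] [paper] [repo]

SynCoBERT (MLM + Identifier Prediction + AST Edge Prediction + Contrastive Learning): "SynCoBERT: Syntax-Guided Multi-Modal Contrastive Pre-Training for Code Representation" [2021-08] [paper]

DISCO (MLM + Node Type MLM + Contrastive Learning): "Towards Learning (Dis)-Similarity of Source Code from Program Contrasts" [2021-10] [ACL 2022] [paper]

Code-MVP (MLM + Type Inference + Contrastive Learning): "CODE-MVP: Learning to Represent Source Code from Multiple Views with Contrastive Pre-Training" [2022-05] [NAACL 2022 Technical Track] [paper]

CodeSage (MLM + Deobfuscation + Contrastive Learning): "Code Representation Learning At Scale" [2024-02] [ICLR 2024] [paper]

CoLSBERT (MLM): "Scaling Laws Behind Code Understanding Model" [2024-02] [paper]

GPT-C (CLM): "IntelliCode Compose: Code Generation Using Transformer" [2020-05] [ESEC/FSE 2020] [paper]

CodeGPT (CLM): "CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation" [2021-02] [NeurIPS Datasets and Benchmarks 2021] [paper] [repo]

CodeParrot (CLM) [2021-12] [blog]

PolyCoder (CLM): "A Systematic Evaluation of Large Language Models of Code" [2022-02] [DL4C@ICLR 2022] [paper] [repo]

CodeGen (CLM): "CodeGen: An Open Large Language Model for Code with Multi-Turn Program Synthesis" [2022-03] [ICLR 2023] [paper] [repo]

InCoder (Causal Masking): "InCoder: A Generative Model for Code Infilling and Synthesis" [2022-04] [ICLR 2023] [paper] [repo]

PyCodeGPT (CLM): "CERT: Continual Pre-Training on Sketches for Library-Oriented Code Generation" [2022-06] [IJCAI-ECAI 2022] [paper] [repo]

PanGu-Coder (CLM): "PanGu-Coder: Program Synthesis with Function-Level Language Modeling" [2022-07] [paper]

SantaCoder (FIM): "SantaCoder: don't reach for the stars!" [2023-01] [paper] [model]

CodeGeeX (CLM): "CodeGeeX: A Pre-Trained Model for Code Generation with Multilingual Evaluations on HumanEval-X" [2023-03] [paper] [repo]

StarCoder (FIM): "StarCoder: may the source be with you!" [2023-05] [paper] [model]

phi-1 (CLM): "Textbooks Are All You Need" [2023-06] [paper] [model]

CodeFuse (CLM): "CodeFuse-13B: A Pretrained Multi-lingual Code Large Language Model" [2023-10] [paper] [model]

DeepSeek Coder (CLM+FIM): "DeepSeek-Coder: When the Large Language Model Meets Programming -- The Rise of Code Intelligence" [2024-01] [paper] [repo]

StarCoder2 (CLM+FIM): "StarCoder 2 and The Stack v2: The Next Generation" [2024-02] [paper] [repo]

CodeShell (CLM+FIM): "CodeShell Technical Report" [2024-03] [paper] [repo]

CodeQwen1.5 [2024-04] [blog]

Granite: "Granite Code Models: A Family of Open Foundation Models for Code Intelligence" [2024-05] [paper] "Scaling Granite Code Models to 128K Context" [2024-07] [paper]

NT-Java: "Narrow Transformer: Starcoder-Based Java-LM For Desktop" [2024-07] [paper]

Arctic-SnowCoder: "Arctic-SnowCoder: Demystifying High-Quality Data in Code Pretraining" [2024-09] [paper]

aiXcoder: "aiXcoder-7B: A Lightweight and Effective Large Language Model for Code Completion" [2024-10] [paper]

OpenCoder: "OpenCoder: The Open Cookbook for Top-Tier Code Large Language Models" [2024-11] [paper]

PyMT5 (Span Corruption): "PyMT5: multi-mode translation of natural language and Python code with transformers" [2020-10] [EMNLP 2020] [paper]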

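Mastropaolo et al. (Span Corruption): "Studying the Usage of Text-To-Text Transfer Transformer to Support Code-Related Tasks" [2021-02] [ICSE 2021] [paper] [repo]

DOBF (MLM + Deobfuscation): "DOBF: A Deobfuscation Pre-Training Objective for Programming Languages" [2021-02] [NeurIPS 2021] [paper] [repo]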

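PLBART (DAE): "Unified Pre-training for Program Understanding and Generation" [2021-03] [NAACL 2021] [paper] [repo]

CodeT5 (Span Corruption + Identifier Tagging + Masked Identifier Prediction + Text2Code + Code2Text): "CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation" [2021-09]

SPT-Code (Span Corruption + NSP + Method Name Prediction): "SPT-Code: Sequence-to-Sequence Pre-Training for Learning Source Code Representations" [2022-01] [ICSE 2022 Technical Track] [paper]

AlphaCode (MLM + CLM): "Competition-Level Code Generation with AlphaCode" [2022-02] [Science] [paper] [blog]

NatGen (Code Naturalization): "NatGen: Generative pre-training by "Naturalizing" source code" [2022-06] [ESEC/FSE 2022] [paper] [repo]

ERNIE-Code (Span Corruption + Pivot-based Translation LM): "ERNIE-Code: Beyond English-Centric Cross-lingual Pretraining for Programming Languages" [2022-12] [ACL23 (Findings)] [paper] [repo]

CodeT5+ (Span Corruption + CLM + Text-Code Contrastive Learning + Text-Code Translation): "CodeT5+: Open Code Large Language Models for Code Understanding and Generation" [2023-05] [EMNLP 2023] [paper] [repo]

AST-T5 (Span Corruption): "AST-T5: Structure-Aware Pretraining for Code Generation and Understanding" [2024-01] [ICML 2024] [paper]

CugLM (MLM + NSP + CLM): "Multi-task Learning based Pre-trained Language Model for Code Completion" [2020-12] [ASE 2020] [paper]

UniXcoder (MLM + NSP + CLM + Span Corruption + Contrastive Learning + Code2Text): "UniXcoder: Unified Cross-Modal Pre-training for Code Representation" [2022-03] [ACL 2022] [paper] [repo]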

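These models apply instruction fine-tuning techniques to enhance the capabilities of Code LLMs.

WizardCoder (StarCoder + Evol-Instruct): "WizardCoder: Empowering Code Large Language Models with Evol-Instruct" [2023-06] [ICLR 2024] [paper] [repo]

PanGu-Coder 2 (StarCoder + Evol-Instruct + RRTF): "PanGu-Coder2: Boosting Large Language Models for Code with Ranking Feedback" [2023-07] [paper]

OctoCoder (StarCoder) / OctoGeeX (CodeGeeX2): "OctoPack: Instruction Tuning Code Large Language Models" [2023-08] [ICLR 2024 Spotlight] [paper] [repo]

"At Which Training Stage Does Code Data Help LLMs Reasoning" [2023-09] [ICLR 2024 Spotlight] [paper]

InstructCoder: "InstructCoder: Instruction Tuning Large Language Models for Code Editing" [paper] [repo]

MFTCoder: "MFTCoder: Boosting Code LLMs with Multitask Fine-Tuning" [2023-11] [KDD 2024] [paper] [repo]

"LLM-Assisted Code Cleaning For Training Accurate Code Generators" [2023-11] [ICLR 2024] [paper]

Magicoder: "Magicoder: Empowering Code Generation with OSS-Instruct" [2023-12] [ICML 2024] [paper]

WaveCoder: "WaveCoder: Widespread And Versatile Enhancement For Code Large Language Models By Instruction Tuning" [2023-12] [ACL 2024] [paper]

Astraios: "Astraios: Parameter-Efficient Instruction Tuning Code Large Language Models" [2024-01] [paper]

DolphCoder: "DolphCoder: Echo-Locating Code Large Language Models with Diverse and Multi-Objective Instruction Tuning" [2024-02] [ACL 2024] [paper]

SafeCoder: "Instruction Tuning for Secure Code Generation" [2024-02] [ICML 2024] [paper]

"Code Needs Comments: Enhancing Code LLMs with Comment Augmentation" [ACL 2024 Findings] [paper]

CCT: "Code Comparison Tuning for Code Large Language Models" [2024-03] [paper]

SAT: "Structure-aware Fine-tuning for Code Pre-trained Models" [2024-04] [paper]

CodeFort: "CodeFort: Robust Training for Code Generation Models" [2024-04] [paper]

XFT: "XFT: Unlocking the Power of Code Instruction Tuning by Simply Merging Upcycled Mixture-of-Experts" [2024-04] [ACL 2024] [paper] [repo]

AIEV-Instruct: "AutoCoder: Enhancing Code Large Language Model with AIEV-Instruct" [2024-05] [paper]

AlchemistCoder: "AlchemistCoder: Harmonizing and Eliciting Code Capability by Hindsight Tuning on Multi-source Data" [2024-05] [paper]

"From Symbolic Tasks to Code Generation: Diversification Yields Better Task Performers" [2024-05] [paper]

"Unveiling the Impact of Coding Data Instruction Fine-Tuning on Large Language Models Reasoning" [2024-05] [paper]

PLUM: "PLUM: Preference Learning Plus Test Cases Yields Better Code Language Models" [2024-06] [paper]

mCoder: "McEval: Massively Multilingual Code Evaluation" [2024-06] [paper]

"Unlock the Correlation between Supervised Fine-Tuning and Reinforcement Learning in Training Code Large Language Models" [2024-06] [paper]

Code-Optimise: "Code-Optimise: Self-Generated Preference Data for Correctness and Efficiency" [2024-06] [paper]

UniCoder: "UniCoder: Scaling Code Large Language Model via Universal Code" [2024-06] [ACL 2024] [paper]

"Brevity is the soul of wit: Pruning long files for code generation" [2024-06] [paper]

"Code Less, Align More: Efficient LLM Fine-tuning for Code Generation with Data Pruning" [2024-07] [paper]

InverseCoder: "InverseCoder: Unleashing the Power of Instruction-Tuned Code LLMs with Inverse-Instruct" [2024-07] [paper]

"Curriculum Learning for Small Code Language Models" [2024-07] [paper]

Genetic-Instruct: "Genetic Instruct: Scaling up Synthetic Generation of Coding Instructions for Large Language Models" [2024-07] [paper]

DataScope: "API-guided Dataset Synthesis to Finetune Large Code Models" [2024-08] [paper]

XCoder: "How Do Your Code LLMs Perform? Empowering Code Instruction Tuning with High-Quality Data" [2024-09] [paper]

GALLa: "GALLa: Graph Aligned Large Language Models for Improved Source Code Understanding" [2024-09] [paper]

HexaCoder: "HexaCoder: Secure Code Generation via Oracle-Guided Synthetic Training Data" [2024-09] [paper]

AMR-Evol: "AMR-Evol: Adaptive Modular Response Evolution Elicits Better Knowledge Distillation for Large Language Models in Code Generation" [2024-10] [paper]

LintSeq: "Training Language Models on Synthetic Edit Sequences Improves Code Synthesis" [2024-10] [paper]

CoBa: "CoBa: Convergence Balancer for Multitask Finetuning of Large Language Models" [2024-10] [EMNLP 2024] [paper]

CursorCore: "CursorCore: Assist Programming through Aligning Anything" [2024-10] [paper]

SelfCodeAlign: "SelfCodeAlign: Self-Alignment for Code Generation" [2024-10] [paper]

"Mastering the Craft of Data Synthesis for CodeLLMs" [2024-10] [paper]

CodeLutra: "CodeLutra: Boosting LLM Code Generation via Preference-Guided Refinement" [2024-11] [paper]

DSTC: "DSTC: Direct Preference Learning with Only Self-Generated Tests and Code to Improve Code LMs" [2024-11] [paper]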

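CompCoder: "Compilable Neural Code Generation with Compiler Feedback" [2022-03] [ACL 2022] [paper]

CodeRL: "CodeRL: Mastering Code Generation through Pretrained Models and Deep Reinforcement Learning" [2022-07] [NeurIPS 2022] [paper] [repo]

PPOCoder: "Execution-based Code Generation using Deep Reinforcement Learning" [2023-01] [TMLR 2023] [paper] [repo]

RLTF: "RLTF: Reinforcement Learning from Unit Test Feedback" [2023-07] [paper] [repo]

B-Coder: "B-Coder: Value-Based Deep Reinforcement Learning for Program Synthesis" [2023-10] [ICLR 2024] [paper]

IRCoCo: "IRCoCo: Immediate Rewards-Guided Deep Reinforcement Learning for Code Completion" [2024-01] [FSE 2024] [paper]

StepCoder: "StepCoder: Improve Code Generation with Reinforcement Learning from Compiler Feedback" [2024-02] [ACL 2024] [paper]

RLPF & DPA: "Performance-Aligned LLMs for Generating Fast Code" [2024-04] [paper]

"Measuring memorization in RLHF for code completion" [2024-06] [paper]

"Applying RLAIF for Code Generation with API-usage in Lightweight LLMs" [2024-06] [paper]

RLCoder: "RLCoder: Reinforcement Learning for Repository-Level Code Completion" [2024-07] [paper]

PF-PPO: "Policy Filtration in RLHF to Fine-Tune LLM for Code Generation" [2024-09] [paper]

CoffeeGym: "CoffeeGym: An Environment for Evaluating and Improving Natural Language Feedback on Erroneous Code" [2024-09] [paper]

RLEF: "RLEF: Grounding Code LLMs in Execution Feedback with Reinforcement Learning" [2024-10] [paper]

CodePMP: "CodePMP: Scalable Preference Model Pretraining for Large Language Model Reasoning" [2024-10] [paper]

CodeDPO: "CodeDPO: Aligning Code Models with Self Generated and Verified Source Code" [2024-10] [paper]

"Process Supervision-Guided Policy Optimization for Code Generation" [2024-10] [paper]

"Aligning CodeLLMs with Direct Preference Optimization" [2024-10] [paper]

FALCON: "FALCON: Feedback-driven Adaptive Long/short-term memory reinforced Coding Optimization system" [2024-10] [paper]

PFPO: "Preference Optimization for Reasoning with Pseudo Feedback" [2024-11] [paper]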

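PAL: "PAL: Program-aided Language Models" [2022-11] [ICML 2023] [paper] [repo]

PoT: "Program of Thoughts Prompting: Disentangling Computation from Reasoning for Numerical Reasoning Tasks" [2022-11] [TMLR 2023] [paper] [repo]

PaD: "PaD: Program-aided Distillation Can Teach Small Models Reasoning Better than Chain-of-thought Fine-tuning" [2023-05] [NAACL 2024] [paper]

CSV: "Solving Challenging Math Word Problems Using GPT-4 Code Interpreter with Code-based Self-Verification" [2023-08] [ICLR 2024] [paper]

MathCoder: "MathCoder: Seamless Code Integration in LLMs for Enhanced Mathematical Reasoning" [2023-10] [ICLR 2024] [paper]

CoC: "Chain of Code: Reasoning with a Language Model-Augmented Code Emulator" [2023-12] [ICML 2024] [paper]

MARIO: "MARIO: MAth Reasoning with code Interpreter Output -- A Reproducible Pipeline" [2024-01] [ACL 2024 Findings] [paper]

ReGAL: "ReGAL: Refactoring Programs to Discover Generalizable Abstractions" [2024-01] [ICML 2024] [paper]

"Executable Code Actions Elicit Better LLM Agents" [2024-02] [ICML 2024] [paper]

HProPro: "Exploring Hybrid Question Answering via Program-based Prompting" [2024-02] [ACL 2024] [paper]

xSTREET: "Eliciting Better Multilingual Structured Reasoning from LLMs through Code" [2024-03] [ACL 2024] [paper]

FlowMind: "FlowMind: Automatic Workflow Generation with LLMs" [2024-03] [paper]

Think-and-Execute: "Language Models as Compilers: Simulating Pseudocode Execution Improves Algorithmic Reasoning in Language Models" [2024-04] [paper]

CoRE: "CoRE: LLM as Interpreter for Natural Language Programming, Pseudo-Code Programming, and Flow Programming of AI Agents" [2024-05] [paper]

MuMath-Code: "MuMath-Code: Combining Tool-Use Large Language Models with Multi-perspective Data Augmentation for Mathematical Reasoning" [2024-05] [paper]

COGEX: "Learning to Reason via Program Generation, Emulation, and Search" [2024-05] [paper]

"Arithmetic Reasoning with LLM: Prolog Generation & Permutation" [2024-05] [paper]

"Can LLMs Reason in the Wild with Programs?" [2024-06] [paper]

DotaMath: "DotaMath: Decomposition of Thought with Code Assistance and Self-correction for Mathematical Reasoning" [2024-07] [paper]

CIBench: "CIBench: Evaluating Your LLMs with a Code Interpreter Plugin" [2024-07] [paper]

PyBench: "PyBench: Evaluating LLM Agent on various real-world coding tasks" [2024-07] [paper]

AdaCoder: "AdaCoder: Adaptive Prompt Compression for Programmatic Visual Question Answering" [2024-07] [paper]

PyramidCoder: "Pyramid Coder: Hierarchical Code Generator for Compositional Visual Question Answering" [2024-07] [paper]

CodeGraph: "CodeGraph: Enhancing Graph Reasoning of LLMs with Code" [2024-08] [paper]

SIAM: "SIAM: Self-Improving Code-Assisted Mathematical Reasoning of Large Language Models" [2024-08] [paper]

CodePlan: "CodePlan: Unlocking Reasoning Potential in Large Language Models by Scaling Code-form Planning" [2024-09] [paper]

POT: "Proof of Thought: Neurosymbolic Program Synthesis allows Robust and Interpretable Reasoning" [2024-09] [paper]

MetaMath: "MetaMath: Integrating Natural Language and Code for Enhanced Mathematical Reasoning in Large Language Models" [2024-09] [paper]

"BabelBench: An Omni Benchmark for Code-Driven Analysis of Multimodal and Multistructured Data" [2024-10] [paper]

CodeSteer: "Steering Large Language Models between Code Execution and Textual Reasoning" [2024-10] [paper]

MathCoder2: "MathCoder2: Better Math Reasoning from Continued Pretraining on Model-translated Mathematical Code" [2024-10] [paper]

LLMFP: "Planning Anything with Rigor: General-Purpose Zero-Shot Planning with LLM-based Formalized Programming" [2024-10] [paper]

PROVE: "Not All Votes Count! Programs as Verifiers Improve Self-Consistency of Language Models for Math Reasoning" [2024-10] [paper]

PROVE: "Trust but Verify: Programmatic VLM Evaluation in the Wild" [2024-10] [paper]

GeoCoder: "GeoCoder: Solving Geometry Problems by Generating Modular Code through Vision-Language Models" [2024-10] [paper]

ReasonAgain: "ReasonAgain: Using Extractable Symbolic Programs to Evaluate Mathematical Reasoning" [2024-10] [paper]

GFP: "Gap-Filling Prompting Enhances Code-Assisted Mathematical Reasoning" [2024-11] [paper]

UTMath: "UTMath: Math Evaluation with Unit Test via Reasoning-to-Coding Thoughts" [2024-11] [paper]

CoCoP: "CoCoP: Enhancing Text Classification with LLM through Code Completion Prompt" [2024-11] [paper]

REPL-Plan: "Interactive and Expressive Code-Augmented Planning with Large Language Models" [2024-11] [paper]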

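"Code Simulation Challenges for Large Language Models" [2024-01] [paper]

"CodeMind: A Framework for Challenging Large Language Models for Code Reasoning" [2024-02] [paper]

"Executing Natural Language-Described Algorithms with Large Language Models: An Investigation" [2024-02] [paper]

"Can Language Models Pretend Solvers? Logic Code Simulation with LLMs" [2024-03] [paper]

"Evaluating Large Language Models with Runtime Behavior of Program Execution" [2024-03] [paper]

"NExT: Teaching Large Language Models to Reason about Code Execution" [2024-04] [ICML 2024] [paper]

"SelfPiCo: Self-Guided Partial Code Execution with LLMs" [2024-07] [paper]

"Large Language Models as Code Executors: An Exploratory Study" [2024-10] [paper]

"VisualCoder: Guiding Large Language Models in Code Execution with Fine-grained Multimodal Chain-of-Thought Reasoning" [2024-10] [paper]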

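Self-collaboration: "Self-collaboration Code Generation via ChatGPT" [2023-04] [paper]

ChatDev: "Communicative Agents for Software Development" [2023-07] [paper] [repo]

MetaGPT: "MetaGPT: Meta Programming for A Multi-Agent Collaborative Framework" [2023-08] [paper] [repo]

CodeChain: "CodeChain: Towards Modular Code Generation Through Chain of Self-revisions with Representative Sub-modules" [2023-10] [ICLR 2024] [paper]

CodeAgent: "CodeAgent: Enhancing Code Generation with Tool-Integrated Agent Systems for Real-World Repo-level Coding Challenges" [2024-01] [ACL 2024] [paper]

CONLINE: "CONLINE: Complex Code Generation and Refinement with Online Searching and Correctness Testing" [2024-03] [paper]

LCG: "When LLM-based Code Generation Meets the Software Development Process" [2024-03] [paper]

RepairAgent: "RepairAgent: An Autonomous, LLM-Based Agent for Program Repair" [2024-03] [paper]

MAGIS: "MAGIS: LLM-Based Multi-Agent Framework for GitHub Issue ReSolution" [2024-03] [paper]

SoA: "Self-Organized Agents: A LLM Multi-Agent Framework toward Ultra Large-Scale Code Generation and Optimization" [2024-04] [paper]

AutoCodeRover: "AutoCodeRover: Autonomous Program Improvement" [2024-04] [paper]

SWE-agent: "SWE-agent: Agent-Computer Interfaces Enable Automated Software Engineering" [2024-05] [paper]

MapCoder: "MapCoder: Multi-Agent Code Generation for Competitive Problem Solving" [2024-05] [ACL 2024] [paper]

"Fight Fire with Fire: How Much Can We Trust ChatGPT on Source Code-Related Tasks?" [2024-05] [paper]

FunCoder: "Divide-and-Conquer Meets Consensus: Unleashing the Power of Functions in Code Generation" [2024-05] [paper]

CTC: "Multi-Agent Software Development through Cross-Team Collaboration" [2024-06] [paper]

MASAI: "MASAI: Modular Architecture for Software-engineering AI Agents" [2024-06] [paper]

AgileCoder: "AgileCoder: Dynamic Collaborative Agents for Software Development based on Agile Methodology" [2024-06] [paper]

CodeNav: "CodeNav: Beyond tool-use to using real-world codebases with LLM agents" [2024-06] [paper]

INDICT: "INDICT: Code Generation with Internal Dialogues of Critiques for Both Security and Helpfulness" [2024-06] [paper]

AppWorld: "AppWorld: A Controllable World of Apps and People for Benchmarking Interactive Coding Agents" [2024-07] [paper]

CortexCompile: "CortexCompile: Harnessing Cortical-Inspired Architectures for Enhanced Multi-Agent NLP Code Synthesis" [2024-08] [paper]

Survey: "Large Language Model-Based Agents for Software Engineering: A Survey" [2024-09] [paper]

AutoSafeCoder: "AutoSafeCoder: A Multi-Agent Framework for Securing LLM Code Generation through Static Analysis and Fuzz Testing" [2024-09] [paper]

SuperCoder2.0: "SuperCoder2.0: Technical Report on Exploring the feasibility of LLMs as Autonomous Programmer" [2024-09] [paper]

Survey: "Agents in Software Engineering: Survey, Landscape, and Vision" [2024-09] [paper]

MOSS: "MOSS: Enabling Code-Driven Evolution and Context Management for AI Agents" [2024-09] [paper]

HyperAgent: "HyperAgent: Generalist Software Engineering Agents to Solve Coding Tasks at Scale" [2024-09] [paper]

"Compositional Hardness of Code in Large Language Models -- A Probabilistic Perspective" [2024-09] [paper]

RGD: "RGD: Multi-LLM Based Agent Debugger via Refinement and Generation Guidance" [2024-10] [paper]

AutoML-Agent: "AutoML-Agent: A Multi-Agent LLM Framework for Full-Pipeline AutoML" [2024-10] [paper]

Seeker: "Seeker: Enhancing Exception Handling in Code with LLM-based Multi-Agent Approach" [2024-10] [paper]

REDO: "REDO: Execution-Free Runtime Error Detection for COding Agents" [2024-10] [paper]

"Evaluating Software Development Agents: Patch Patterns, Code Quality, and Issue Complexity in Real-World GitHub Scenarios" [2024-10] [paper]

EvoMAC: "Self-Evolving Multi-Agent Collaboration Networks for Software Development" [2024-10] [paper]

VisionCoder: "VisionCoder: Empowering Multi-Agent Auto-Programming for Image Processing with Hybrid LLMs" [2024-10] [paper]

AutoKaggle: "AutoKaggle: A Multi-Agent Framework for Autonomous Data Science Competitions" [2024-10] [paper]

Watson: "Watson: A Cognitive Observability Framework for the Reasoning of Foundation Model-Powered Agents" [2024-11] [paper]

CodeTree: "CodeTree: Agent-guided Tree Search for Code Generation with Large Language Models" [2024-11] [paper]

EvoCoder: "LLMs as Continuous Learners: Improving the Reproduction of Defective Code in Software Issues" [2024-11] [paper]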

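"Interactive Program Synthesis" [2017-03] [paper]

"Question selection for interactive program synthesis" [2020-06] [PLDI 2020] [paper]

"Interactive Code Generation via Test-Driven User-Intent Formalization" [2022-08] [paper]

"Improving Code Generation by Training with Natural Language Feedback" [2023-03] [TMLR] [paper]

"Self-Refine: Iterative Refinement with Self-Feedback" [2023-03] [NeurIPS 2023] [paper]

"Teaching Large Language Models to Self-Debug" [2023-04] [paper]

"Self-Edit: Fault-Aware Code Editor for Code Generation" [2023-05] [ACL 2023] [paper]

"LeTI: Learning to Generate from Textual Interactions" [2023-05] [paper]

"Is Self-Repair a Silver Bullet for Code Generation?" [2023-06] [ICLR 2024] [paper]

"InterCode: Standardizing and Benchmarking Interactive Coding with Execution Feedback" [2023-06] [NeurIPS 2023] [paper]

"INTERVENOR: Prompting the Coding Ability of Large Language Models with the Interactive Chain of Repair" [2023-11] [ACL 2024 Findings] [paper]

"OpenCodeInterpreter: Integrating Code Generation with Execution and Refinement" [2024-02] [ACL 2024 Findings] [paper]

"Iterative Refinement of Project-Level Code Context for Precise Code Generation with Compiler Feedback" [2024-03] [ACL 2024 Findings] [paper]

"CYCLE: Learning to Self-Refine the Code Generation" [2024-03] [paper]

"LLM-based Test-driven Interactive Code Generation: User Study and Empirical Evaluation" [2024-04] [paper]

"SOAP: Enhancing Efficiency of Generated Code via Self-Optimization" [2024-05] [paper]

"Code Repair with LLMs gives an Exploration-Exploitation Tradeoff" [2024-05] [paper]

"ReflectionCoder: Learning from Reflection Sequence for Enhanced One-off Code Generation" [2024-05] [paper]

"Training LLMs to Better Self-Debug and Explain Code" [2024-05] [paper]

"Requirements are All You Need: From Requirements to Code with LLMs" [2024-06] [paper]

"I Need Help! Evaluating LLM's Ability to Ask for Users' Support: A Case Study on Text-to-SQL Generation" [2024-07] [paper]

"An Empirical Study on Self-correcting Large Language Models for Data Science Code Generation" [2024-08] [paper]

"RethinkMCTS: Refining Erroneous Thoughts in Monte Carlo Tree Search for Code Generation" [2024-09] [paper]

"From Code to Correctness: Closing the Last Mile of Code Generation with Hierarchical Debugging" [2024-10] [paper] [repo]

"What Makes Large Language Models Reason in (Multi-Turn) Code Generation?" [2024-10] [paper]

"The First Prompt Counts the Most! An Evaluation of Large Language Models on Iterative Example-based Code Generation" [2024-11] [paper]

"Planning-Driven Programming: A Large Language Model Programming Workflow" [2024-11] [paper]

"ConAIR: Consistency-Augmented Iterative Interaction Framework to Enhance the Reliability of Code Generation" [2024-11] [paper]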

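"MarkupLM: Pre-training of Text and Markup Language for Visually-rich Document Understanding" [2021-10] [ACL 2022] [paper]

"WebKE: Knowledge Extraction from Semi-structured Web with Pre-trained Markup Language Model" [2021-10] [CIKM 2021] [paper]

"WebGPT: Browser-assisted question-answering with human feedback" [2021-12] [paper]

"CM3: A Causal Masked Multimodal Model of the Internet" [2022-01] [paper]

"DOM-LM: Learning Generalizable Representations for HTML Documents" [2022-01] [paper]

"WebFormer: The Web-page Transformer for Structure Information Extraction" [2022-02] [WWW 2022] [paper]

"A Dataset for Interactive Vision-Language Navigation with Unknown Command Feasibility" [2022-02] [ECCV 2022] [paper]

"WebShop: Towards Scalable Real-World Web Interaction with Grounded Language Agents" [2022-07] [NeurIPS 2022] [paper]

"Pix2Struct: Screenshot Parsing as Pretraining for Visual Language Understanding" [2022-10] [ICML 2023] [paper]

"Understanding HTML with Large Language Models" [2022-10] [EMNLP 2023 findings] [paper]

"WebUI: A Dataset for Enhancing Visual UI Understanding with Web Semantics" [2023-01] [CHI 2023] [paper]

"Mind2Web: Towards a Generalist Agent for the Web" [2023-06] [NeurIPS 2023] [paper]

"A Real-World WebAgent with Planning, Long Context Understanding, and Program Synthesis" [2023-07] [ICLR 2024] [paper]

"WebArena: A Realistic Web Environment for Building Autonomous Agents" [2023-07] [paper]

"CogAgent: A Visual Language Model for GUI Agents" [2023-12] [paper]

"GPT-4V(ision) is a Generalist Web Agent, if Grounded" [2024-01] [paper]

"WebVoyager: Building an End-to-End Web Agent with Large Multimodal Models" [2024-01] [paper]

"WebLINX: Real-World Website Navigation with Multi-Turn Dialogue" [2024-02] [paper]

"OmniACT: A Dataset and Benchmark for Enabling Multimodal Generalist Autonomous Agents for Desktop and Web" [2024-02] [paper]

"AutoWebGLM: Bootstrap And Reinforce A Large Language Model-based Web Navigating Agent" [2024-04] [paper]

"WILBUR: Adaptive In-Context Learning for Robust and Accurate Web Agents" [2024-04] [paper]

"AutoCrawler: A Progressive Understanding Web Agent for Web Crawler Generation" [2024-04] [paper]

"GUICourse: From General Vision Language Models to Versatile GUI Agents" [2024-06] [paper]

"NaviQAte: Functionality-Guided Web Application Navigation" [2024-09] [paper]

"MobileVLM: A Vision-Language Model for Better Intra- and Inter-UI Understanding" [2024-09] [paper]

"Multimodal Auto Validation For Self-Refinement in Web Agents" [2024-10] [paper]

"Navigating the Digital World as Humans Do: Universal Visual Grounding for GUI Agents" [2024-10] [paper]

"Web Agents with World Models: Learning and Leveraging Environment Dynamics in Web Navigation" [2024-10] [paper]

"Harnessing Webpage UIs for Text-Rich Visual Understanding" [2024-10] [paper]

"AgentOccam: A Simple Yet Strong Baseline for LLM-Based Web Agents" [2024-10] [paper]

"Beyond Browsing: API-Based Web Agents" [2024-10] [paper]

"Large Language Models Empowered Personalized Web Agents" [2024-10] [paper]

"AdvWeb: Controllable Black-box Attacks on VLM-powered Web Agents" [2024-10] [paper]

"Auto-Intent: Automated Intent Discovery and Self-Exploration for Large Language Model Web Agents" [2024-10] [paper]

"OS-ATLAS: A Foundation Action Model for Generalist GUI Agents" [2024-10] [paper]

"From Context to Action: Analysis of the Impact of State Representation and Context on the Generalization of Multi-Turn Web Navigation Agents" [2024-10] [paper]

"AutoGLM: Autonomous Foundation Agents for GUIs" [2024-10] [paper]

"WebRL: Training LLM Web Agents via Self-Evolving Online Curriculum Reinforcement Learning" [2024-11] [paper]

"The Dawn of GUI Agent: A Preliminary Case Study with Claude 3.5 Computer Use" [2024-11] [paper]

"ScribeAgent: Towards Specialized Web Agents Using Production-Scale Workflow Data" [2024-11] [paper]

"ShowUI: One Vision-Language-Action Model for GUI Visual Agent" [2024-11] [paper]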

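[Ruby] "On the Transferability of Pre-trained Language Models for Low-Resource Programming Languages" [2022-04] [ICPC 2022] [paper]

[Verilog] "Benchmarking Large Language Models for Automated Verilog RTL Code Generation" [2022-12] [DATE 2023] [paper]

[OCL] "On Codex Prompt Engineering for OCL Generation: An Empirical Study" [2023-03] [MSR 2023] [paper]

[Ansible-YAML] "Automated Code generation for Information Technology Tasks in YAML through Large Language Models" [2023-05] [DAC 2023] [paper]

[Hansl] "The potential of LLMs for coding with low-resource and domain-specific programming languages" [2023-07] [paper]

[Verilog] "VeriGen: A Large Language Model for Verilog Code Generation" [2023-07] [paper]

[Verilog] "RTLLM: An Open-Source Benchmark for Design RTL Generation with Large Language Model" [2023-08] [paper]

[Racket, OCaml, Lua, R, Julia] "Knowledge Transfer from High-Resource to Low-Resource Programming Languages for Code LLMs" [2023-08] [paper]

[Verilog] "VerilogEval: Evaluating Large Language Models for Verilog Code Generation" [2023-09] [ICCAD 2023] [paper]

[Verilog] "RTLFixer: Automatically Fixing RTL Syntax Errors with Large Language Models" [2023-11] [paper]

[Verilog] "Advanced Large Language Model (LLM)-Driven Verilog Development: Enhancing Power, Performance, and Area Optimization in Code Synthesis" [2023-12] [paper]

[Verilog] "RTLCoder: Outperforming GPT-3.5 in Design RTL Generation with Our Open-Source Dataset and Lightweight Solution" [2023-12] [paper]

[Verilog] "BetterV: Controlled Verilog Generation with Discriminative Guidance" [2024-02] [ICML 2024] [paper]

[R] "Empirical Studies of Parameter Efficient Methods for Large Language Models of Code and Knowledge Transfer to R" [2024-03] [paper]

[Haskell] "Investigating the Performance of Language Models for Completing Code in Functional Programming Languages: a Haskell Case Study" [2024-03] [paper]

[Verilog] "A Multi-Expert Large Language Model Architecture for Verilog Code Generation" [2024-04] [paper]

[Verilog] "CreativEval: Evaluating Creativity of LLM-Based Hardware Code Generation" [2024-04] [paper]

[Alloy] "An Empirical Evaluation of Pre-trained Large Language Models for Repairing Declarative Formal Specifications" [2024-04] [paper]

[Verilog] "Evaluating LLMs for Hardware Design and Test" [2024-04] [paper]

[Kotlin, Swift, and Rust] "Software Vulnerability Prediction in Low-Resource Languages: An Empirical Study of CodeBERT and ChatGPT" [2024-04] [paper]

[Verilog] "MEIC: Re-thinking RTL Debug Automation using LLMs" [2024-05] [paper]

[Bash] "Tackling Execution-Based Evaluation for NL2Bash" [2024-05] [paper]

[Fortran, Julia, Matlab, R, Rust] "Evaluating AI-generated code for C++, Fortran, Go, Java, Julia, Matlab, Python, R, and Rust" [2024-05] [paper]

[OpenAPI] "Optimizing Large Language Models for OpenAPI Code Completion" [2024-05] [paper]

[Kotlin] "Kotlin ML Pack: Technical Report" [2024-05] [paper]

[Verilog] "VerilogReader: LLM-Aided Hardware Test Generation" [2024-06] [paper]

"Benchmarking Generative Models on Computational Thinking Tests in Elementary Visual Programming" [2024-06] [paper]

[Logo] "Program Synthesis Benchmark for Visual Programming in XLogoOnline Environment" [2024-06] [paper]

[Ansible YAML, Bash] "DocCGen: Document-based Controlled Code Generation" [2024-06] [paper]

[Qiskit] "Qiskit HumanEval: An Evaluation Benchmark For Quantum Code Generative Models" [2024-06] [paper]

[Perl, Golang, Swift] "DistiLRR: Transferring Code Repair for Low-Resource Programming Languages" [2024-06] [paper]

[Verilog] "AssertionBench: A Benchmark to Evaluate Large-Language Models for Assertion Generation" [2024-06] [paper]

"A Comparative Study of DSL Code Generation: Fine-Tuning vs. Optimized Retrieval Augmentation" [2024-07] [paper]

[JSON, XML, YAML] "ConCodeEval: Evaluating Large Language Models for Code Constraints in Domain-Specific Languages" [2024-07] [paper]

[Verilog] "AutoBench: Automatic Testbench Generation and Evaluation Using LLMs for HDL Design" [2024-07] [paper]

[Verilog] "CodeV: Empowering LLMs for Verilog Generation through Multi-Level Summarization" [2024-07] [paper]

[Verilog] "ITERTL: An Iterative Framework for Fine-tuning LLMs for RTL Code Generation" [2024-07] [paper]

[Verilog] "OriGen: Enhancing RTL Code Generation with Code-to-Code Augmentation and Self-Reflection" [2024-07] [paper]

[Verilog] "Large Language Model for Verilog Generation with Golden Code Feedback" [2024-07] [paper]

[Verilog] "AutoVCoder: A Systematic Framework for Automated Verilog Code Generation using LLMs" [2024-07] [paper]

[RPA] "Plan with Code: Comparing approaches for robust NL to DSL generation" [2024-08] [paper]

[Verilog] "VerilogCoder: Autonomous Verilog Coding Agents with Graph-based Planning and Abstract Syntax Tree (AST)-based Waveform Tracing Tool" [2024-08] [paper]

[Verilog] "Revisiting VerilogEval: Newer LLMs, In-Context Learning, and Specification-to-RTL Tasks" [2024-08] [paper]

[MaxMSP, Web Audio] "Benchmarking LLM Code Generation for Audio Programming with Visual Dataflow Languages" [2024-09] [paper]

[Verilog] "RTLRewriter: Methodologies for Large Models aided RTL Code Optimization" [2024-09] [paper]

[Verilog] "CraftRTL: High-quality Synthetic Data Generation for Verilog Code Models with Correct-by-Construction Non-Textual Representations and Targeted Code Repair" [2024-09] [paper]

[Bash] "ScriptSmith: A Unified LLM Framework for Enhancing IT Operations via Automated Bash Script Generation, Assessment, and Refinement" [2024-09] [paper]

[Survey] "Survey on Code Generation for Low resource and Domain Specific Programming Languages" [2024-10] [paper]

[R] "Do Current Language Models Support Code Intelligence for R Programming Language?" [2024-10] [paper]

"Can Large Language Models Generate Geospatial Code?" [2024-10] [paper]

[PLC] "Agents4PLC: Automating Closed-loop PLC Code Generation and Verification in Industrial Control Systems using LLM-based Agents" [2024-10] [paper]

[Lua] "Evaluating Quantized Large Language Models for Code Generation on Low-Resource Language Benchmarks" [2024-10] [paper]

"Improving Parallel Program Performance Through DSL-Driven Code Generation with LLM Optimizers" [2024-10] [paper]

"GeoCode-GPT: A Large Language Model for Geospatial Code Generation Tasks" [2024-10] [paper]

[R, D, Racket, Bash]: "Bridge-Coder: Unlocking LLMs' Potential to Overcome Language Gaps in Low-Resource Code" [2024-10] [paper]

[SPICE]: "SPICEPilot: Navigating SPICE Code Generation and Simulation with AI Guidance" [2024-10] [paper]

[IEC 61131-3 ST]: "Training LLMs for Generating IEC 61131-3 Structured Text with Online Feedback" [2024-10] [paper]

[Verilog] "MetRex: A Benchmark for Verilog Code Metric Reasoning Using LLMs" [2024-11] [paper]

[Verilog] "CorrectBench: Automatic Testbench Generation with Functional Self-Correction using LLMs for HDL Design" [2024-11] [paper]

[MUMPS, ALC] "Leveraging LLMs for Legacy Code Modernization: Challenges and Opportunities for LLM-Generated Documentation" [2024-11] [paper]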

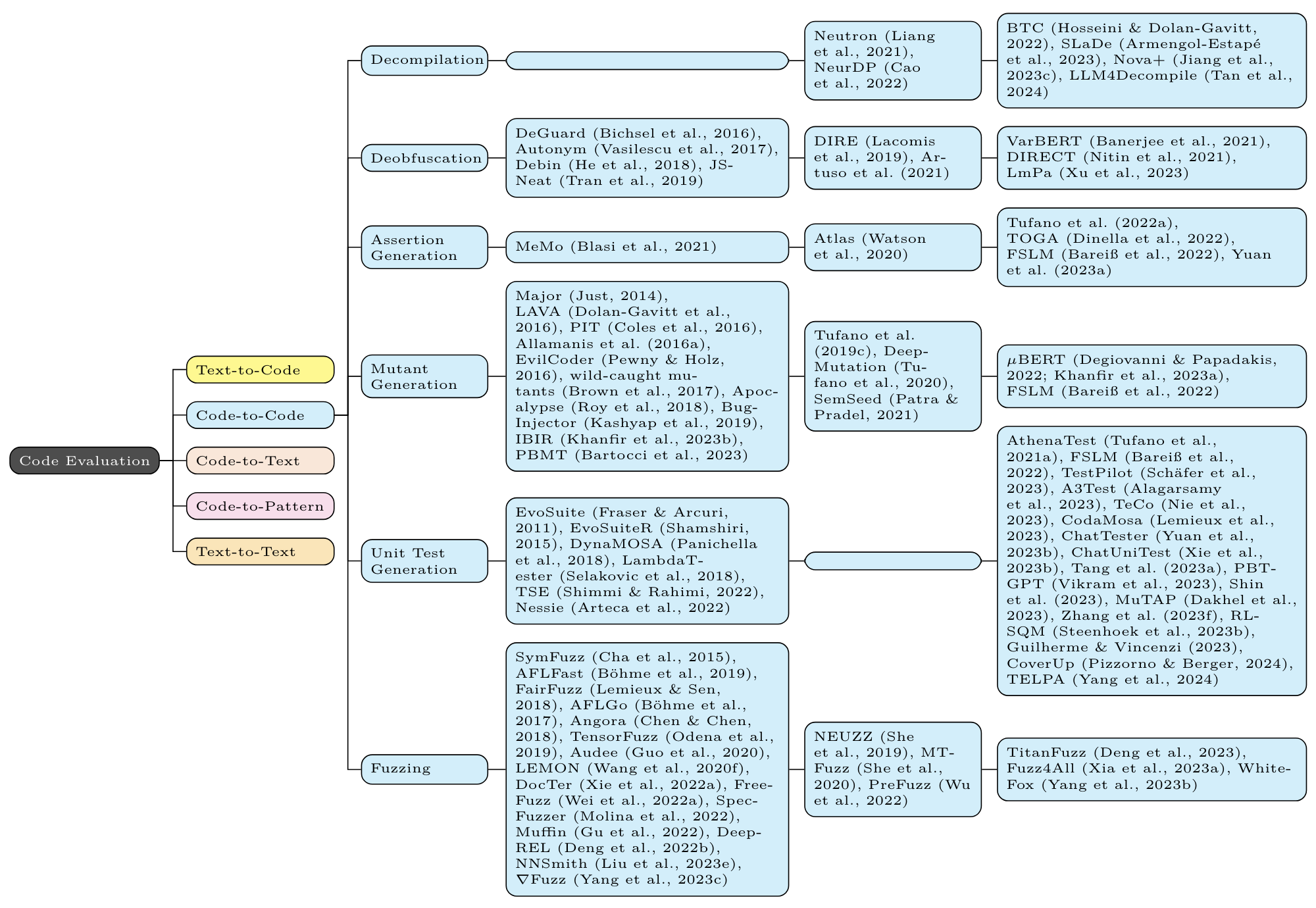

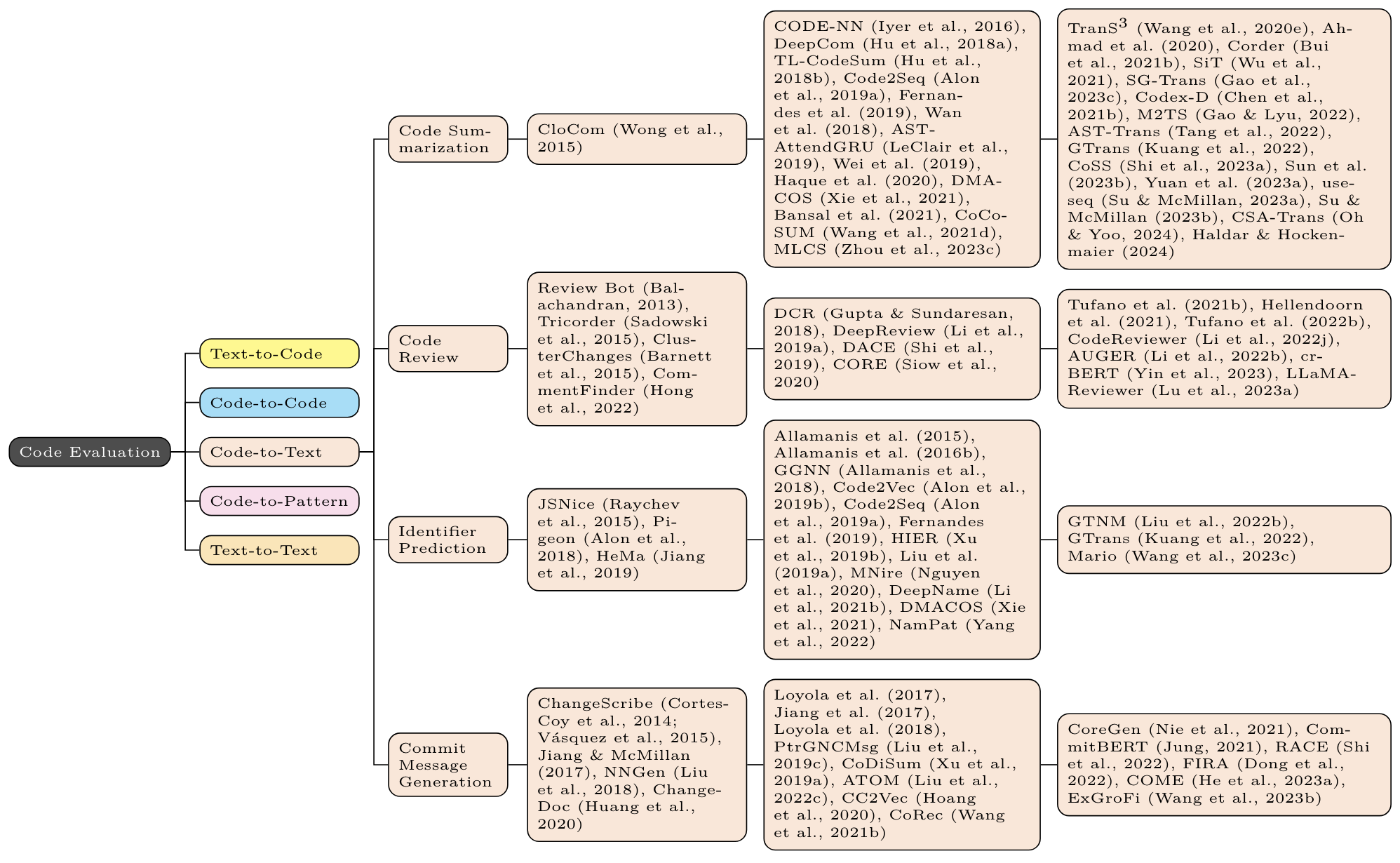

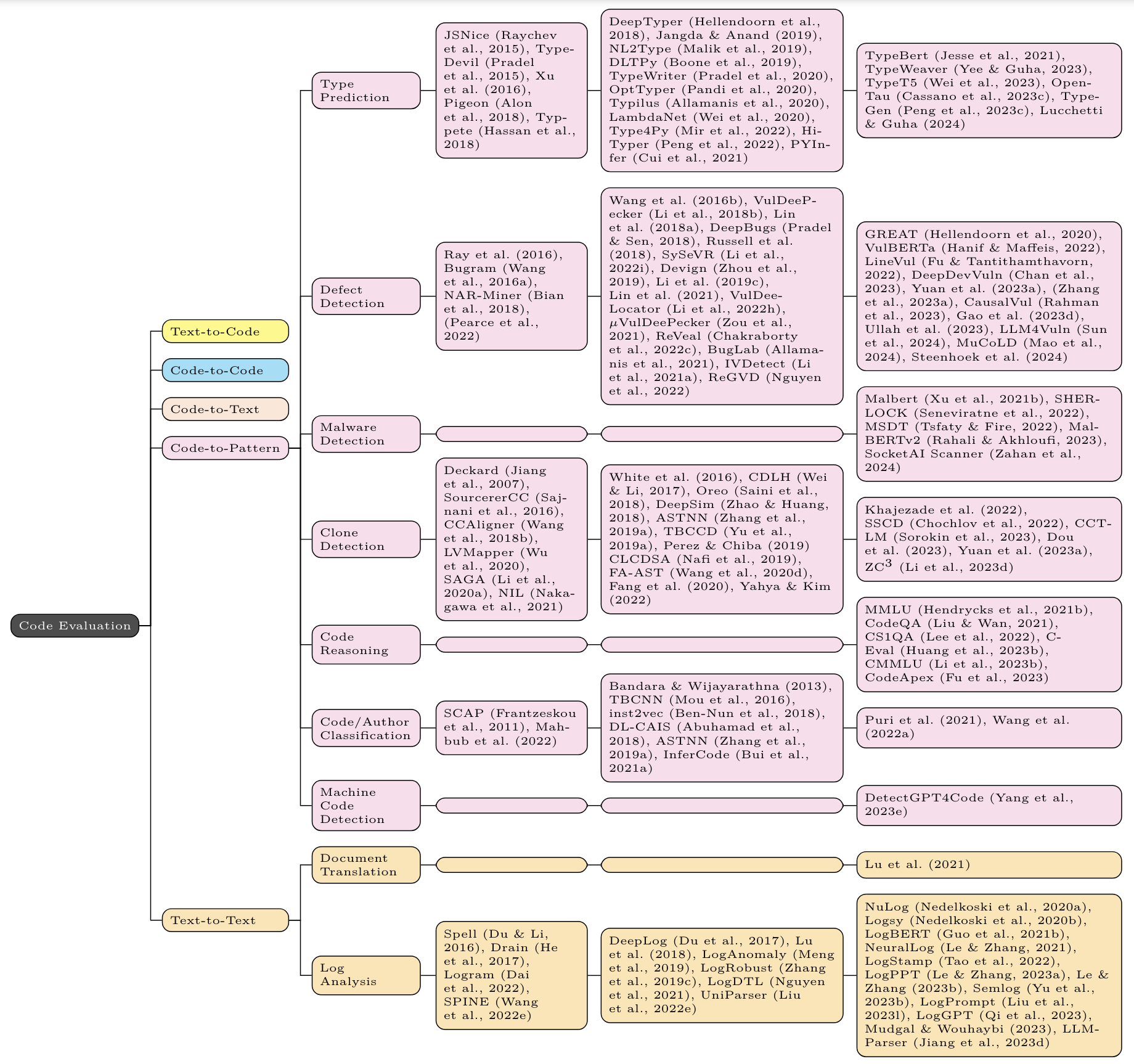

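For each task, the first column contains non-neural methods (e.g. n-gram, TF-IDF, and (occasionally) static program analysis); the second column contains non-Transformer neural methods (e.g. LSTM, CNN, GNN); the third column contains Transformer-based methods (e.g. BERT, GPT, T5).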

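"Enhancing Large Language Models in Coding Through Multi-Perspective Self-Consistency" [2023-09] [ACL 2024] [paper]

"Self-Infilling Code Generation" [2023-11] [ICML 2024] [paper]

"JumpCoder: Go Beyond Autoregressive Coder via Online Modification" [2024-01] [ACL 2024] [paper]

"Unsupervised Evaluation of Code LLMs with Round-Trip Correctness" [2024-02] [ICML 2024] [paper]

"The Larger the Better? Improved LLM Code-Generation via Budget Reallocation" [2024-03] [paper]

"Quantifying Contamination in Evaluating Code Generation Capabilities of Language Models" [2024-03] [ACL 2024] [paper]

"Comments as Natural Logic Pivots: Improve Code Generation via Comment Perspective" [2024-04] [ACL 2024 Findings] [paper]

"Distilling Algorithmic Reasoning from LLMs via Explaining Solution Programs" [2024-04] [paper]

"Quality Assessment of Prompts Used in Code Generation" [2024-04] [paper]

"Assessing GPT-4-Vision's Capabilities in UML-Based Code Generation" [2024-04] [paper]

"Large Language Models Synergize with Automated Machine Learning" [2024-05] [paper]

"Model Cascading for Code: Reducing Inference Costs with Model Cascading for LLM Based Code Generation" [2024-05] [paper]

"A Survey on Large Language Models for Code Generation" [2024-06] [paper]

"Is Programming by Example solved by LLMs?" [2024-06] [paper]

"Benchmarks and Metrics for Evaluations of Code Generation: A Critical Review" [2024-06] [paper]

"MPCODER: Multi-user Personalized Code Generator with Explicit and Implicit Style Representation Learning" [2024-06] [ACL 2024] [paper]

"Revisiting the Impact of Pursuing Modularity for Code Generation" [2024-07] [paper]

"Evaluating Long Range Dependency Handling in Code Generation Models using Multi-Step Key Retrieval" [2024-07] [paper]

"When to Stop? Towards Efficient Code Generation in LLMs with Excess Token Prevention" [2024-07] [paper]

"Assessing Programming Task Difficulty for Efficient Evaluation of Large Language Models" [2024-07] [paper]

"ArchCode: Incorporating Software Requirements in Code Generation with Large Language Models" [2024-08] [ACL 2024] [paper]

"Fine-tuning Language Models for Joint Rewriting and Completion of Code with Potential Bugs" [2024-08] [ACL 2024 Findings] [paper]

"Selective Prompt Anchoring for Code Generation" [2024-08] [paper]

"Bridging the Language Gap: Enhancing Multilingual Prompt-Based Code Generation in LLMs via Zero-Shot Cross-Lingual Transfer" [2024-08] [paper]

"Optimizing Large Language Model Hyperparameters for Code Generation" [2024-08] [paper]

"EPiC: Cost-effective Search-based Prompt Engineering of LLMs for Code Generation" [2024-08] [paper]

"CodeRefine: A Pipeline for Enhancing LLM-Generated Code Implementations of Research Papers" [2024-08] [paper]

"No Man is an Island: Towards Fully Automatic Programming by Code Search, Code Generation and Program Repair" [2024-09] [paper]

"Planning In Natural Language Improves LLM Search For Code Generation" [2024-09] [paper]

"Multi-Programming Language Ensemble for Code Generation in Large Language Model" [2024-09] [paper]

"A Pair Programming Framework for Code Generation via Multi-Plan Exploration and Feedback-Driven Refinement" [2024-09] [paper]

"USCD: Improving Code Generation of LLMs by Uncertainty-Aware Selective Contrastive Decoding" [2024-09] [paper]

"Eliciting Instruction-tuned Code Language Models' Capabilities to Utilize Auxiliary Function for Code Generation" [2024-09] [paper]

"Selection of Prompt Engineering Techniques for Code Generation through Predicting Code Complexity" [2024-09] [paper]

"Horizon-Length Prediction: Advancing Fill-in-the-Middle Capabilities for Code Generation with Lookahead Planning" [2024-10] [paper]

"Showing LLM-Generated Code Selectively Based on Confidence of LLMs" [2024-10] [paper]

"AutoFeedback: An LLM-based Framework for Efficient and Accurate API Request Generation" [2024-10] [paper]

"Enhancing LLM Agents for Code Generation with Possibility and Pass-rate Prioritized Experience Replay" [2024-10] [paper]

"From Solitary Directives to Interactive Encouragement! LLM Secure Code Generation by Natural Language Prompting" [2024-10] [paper]

"Self-Explained Keywords Empower Large Language Models for Code Generation" [2024-10] [paper]

"Context-Augmented Code Generation Using Programming Knowledge Graphs" [2024-10] [paper]

"In-Context Code-Text Learning for Bimodal Software Engineering" [2024-10] [paper]

"Combining LLM Code Generation with Formal Specifications and Reactive Program Synthesis" [2024-10] [paper]

"Less is More: DocString Compression in Code Generation" [2024-10] [paper]

"Multi-Programming Language Sandbox for LLMs" [2024-10] [paper]

"Personality-Guided Code Generation Using Large Language Models" [2024-10] [paper]

"Do Advanced Language Models Eliminate the Need for Prompt Engineering in Software Engineering?" [2024-11] [paper]

"Scattered Forest Search: Smarter Code Space Exploration with LLMs" [2024-11] [paper]

"Anchor Attention, Small Cache: Code Generation with Large Language Models" [2024-11] [paper]

"ROCODE: Integrating Backtracking Mechanism and Program Analysis in Large Language Models for Code Generation" [2024-11] [paper]

"SRA-MCTS: Self-driven Reasoning Augmentation with Monte Carlo Tree Search for Enhanced Code Generation" [2024-11] [paper]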

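"CodeGRAG: Extracting Composed Syntax Graphs for Retrieval Augmented Cross-Lingual Code Generation" [2024-05] [paper]

"Prompt-based Code Completion via Multi-Retrieval Augmented Generation" [2024-05] [paper]

"A Lightweight Framework for Adaptive Retrieval In Code Completion With Critique Model" [2024-06] [paper]

"Preference-Guided Refactored Tuning for Retrieval Augmented Code Generation" [2024-09] [paper]

"Building A Coding Assistant via the Retrieval-Augmented Language Model" [2024-10] [paper]

"DroidCoder: Enhanced Android Code Completion with Context-Enriched Retrieval-Augmented Generation" [2024-10] [ASE 2024] [paper]

"Assessing the Answerability of Queries in Retrieval-Augmented Code Generation" [2024-11] [paper]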

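"Fault-Aware Neural Code Rankers" [2022-06] [NeurIPS 2022] [paper]

"Functional Overlap Reranking for Neural Code Generation" [2023-10] [ACL 2024 Findings] [paper]

"Top Pass: Improve Code Generation by Pass@k-Maximized Code Ranking" [2024-08] [paper]

"DOCE: Finding the Sweet Spot for Execution-Based Code Generation" [2024-08] [paper]

"Sifting through the Chaff: On Utilizing Execution Feedback for Ranking the Generated Code Candidates" [2024-08] [paper]

"B4: Towards Optimal Assessment of Plausible Code Solutions with Plausible Tests" [2024-09] [paper]

"Learning Code Preference via Synthetic Evolution" [2024-10] [paper]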

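"Tree-to-tree Neural Networks for Program Translation" [2018-02] [NeurIPS 2018] [paper]

"Program Language Translation Using a Grammar-Driven Tree-to-Tree Model" [2018-07] [paper]

"Unsupervised Translation of Programming Languages" [2020-06] [NeurIPS 2020] [paper]

"Leveraging Automated Unit Tests for Unsupervised Code Translation" [2021-10] [ICLR 2022] [paper]

"Code Translation with Compiler Representations" [2022-06] [ICLR 2023] [paper]

"Multilingual Code Snippets Training for Program Translation" [2022-06] [AAAI 2022] [paper]

"BabelTower: Learning to Auto-parallelized Program Translation" [2022-07] [ICML 2022] [paper]

"Syntax and Domain Aware Model for Unsupervised Program Translation" [2023-02] [ICSE 2023] [paper]

"CoTran: An LLM-based Code Translator using Reinforcement Learning with Feedback from Compiler and Symbolic Execution" [2023-06] [paper]

"Lost in Translation: A Study of Bugs Introduced by Large Language Models while Translating Code" [2023-08] [ICSE 2024] [paper]

"On the Evaluation of Neural Code Translation: Taxonomy and Benchmark" [2023-08] [ASE 2023] [paper]

"Program Translation via Code Distillation" [2023-10] [EMNLP 2023] [paper]

"Explain-then-Translate: An Analysis on Improving Program Translation with Self-generated Explanations" [2023-11] [EMNLP 2023 Findings] [paper]

"Exploring the Impact of the Output Format on the Evaluation of Large Language Models for Code Translation" [2024-03] [paper]

"Exploring and Unleashing the Power of Large Language Models in Automated Code Translation" [2024-04] [paper]

"VERT: Verified Equivalent Rust Transpilation with Few-Shot Learning" [2024-04] [paper]

"Towards Translating Real-World Code with LLMs: A Study of Translating to Rust" [2024-05] [paper]

"An interpretable error correction method for enhancing code-to-code translation" [2024-05] [ICLR 2024] [paper]

"LASSI: An LLM-based Automated Self-Correcting Pipeline for Translating Parallel Scientific Codes" [2024-06] [paper]

"Rectifier: Code Translation with Corrector via LLMs" [2024-07] [paper]

"Enhancing Code Translation in Language Models with Few-Shot Learning via Retrieval-Augmented Generation" [2024-07] [paper]

"A Joint Learning Model with Variational Interaction for Multilingual Program Translation" [2024-08] [paper]

"Automated Library Migration Using Large Language Models: First Results" [2024-08] [paper]

"Context-aware Code Segmentation for C-to-Rust Translation using Large Language Models" [2024-09] [paper]

"TRANSAGENT: An LLM-Based Multi-Agent System for Code Translation" [2024-10] [paper]

"Unraveling the Potential of Large Language Models in Code Translation: How Far Are We?" [2024-10] [paper]

"CodeRosetta: Pushing the Boundaries of Unsupervised Code Translation for Parallel Programming" [2024-10] [paper]

"A test-free semantic mistakes localization framework in Neural Code Translation" [2024-10] [paper]

"Repository-level Compositional Code Translation and Validation" [2024-10] [paper]

"Leveraging Large Language Models for Code Translation and Software Development in Scientific Computing" [2024-10] [paper]

"InterTrans: Leveraging Transitive Intermediate Translations to Enhance LLM-based Code Translation" [2024-11] [paper]

"Translating C To Rust: Lessons from a User Study" [2024-11] [paper]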

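"A Transformer-based Approach for Source Code Summarization" [2020-05] [ACL 2020] [paper]

"Code Summarization with Structure-induced Transformer" [2020-12] [ACL 2021 Findings] [paper]

"Code Structure Guided Transformer for Source Code Summarization" [2021-04] [ACM TSEM] [paper]

"M2TS: Multi-Scale Multi-Modal Approach Based on Transformer for Source Code Summarization" [2022-03] [ICPC 2022] [paper]

"AST-trans: Code Summarization with Efficient Tree-Structured Attention" [2022-05] [ICSE 2022] [paper]

"CoSS: Leveraging Statement Semantics for Code Summarization" [2023-03] [IEEE TSE] [paper]

"Automatic Code Summarization via ChatGPT: How Far Are We?" [2023-05] [paper]

"Semantic Similarity Loss for Neural Source Code Summarization" [2023-08] [paper]

"Distilled GPT for Source Code Summarization" [2023-08] [ASE] [paper]

"CSA-Trans: Code Structure Aware Transformer for AST" [2024-04] [paper]

"Analyzing the Performance of Large Language Models on Code Summarization" [2024-04] [paper]

"Enhancing Trust in LLM-Generated Code Summaries with Calibrated Confidence Scores" [2024-04] [paper]

"DocuMint: Docstring Generation for Python using Small Language Models" [2024-05] [paper] [repo]

"Natural Is The Best: Model-Agnostic Code Simplification for Pre-trained Large Language Models" [2024-05] [paper]

"Large Language Models for Code Summarization" [2024-05] [paper]

"Exploring the Efficacy of Large Language Models (GPT-4) in Binary Reverse Engineering" [2024-06] [paper]

"Identifying Inaccurate Descriptions in LLM-generated Code Comments via Test Execution" [2024-06] [paper]

"MALSIGHT: Exploring Malicious Source Code and Benign Pseudocode for Iterative Binary Malware Summarization" [2024-06] [paper]

"ESALE: Enhancing Code-Summary Alignment Learning for Source Code Summarization" [2024-07] [paper]

"Source Code Summarization in the Era of Large Language Models" [2024-07] [paper]

"Natural Language Outlines for Code: Literate Programming in the LLM Era" [2024-08] [paper]

"Context-aware Code Summary Generation" [2024-08] [paper]

"AUTOGENICS: Automated Generation of Context-Aware Inline Comments for Code Snippets on Programming Q&A Sites Using LLM" [2024-08] [paper]

"LLMs as Evaluators: A Novel Approach to Evaluate Bug Report Summarization" [2024-09] [paper]

"Evaluating the Quality of Code Comments Generated by Large Language Models for Novice Programmers" [2024-09] [paper]

"Generating Equivalent Representations of Code By A Self-Reflection Approach" [2024-10] [paper]

"A Review of Automatic Source Code Summarization" [2024-10] [Empirical Software Engineering] [paper]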

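"DeepDebug: Fixing Python Bugs Using Stack Traces, Backtranslation, and Code Skeletons" [2021-05] [paper]

"Break-It-Fix-It: Unsupervised Learning for Program Repair" [2021-06] [ICML 2021] [paper]

"TFix: Learning to Fix Coding Errors with a Text-to-Text Transformer" [2021-07] [ICML 2021] [paper]

"Automated Repair of Programs from Large Language Models" [2022-05] [ICSE 2023] [paper]

"Less Training, More Repairing Please: Revisiting Automated Program Repair via Zero-shot Learning" [2022-07] [ESEC/FSE 2022] [paper]

"Repair Is Nearly Generation: Multilingual Program Repair with LLMs" [2022-08] [AAAI 2023] [paper]

"Practical Program Repair in the Era of Large Pre-trained Language Models" [2022-10] [paper]

"VulRepair: A T5-Based Automated Software Vulnerability Repair" [2022-11] [ESEC/FSE 2022] [paper]

"Conversational Automated Program Repair" [2023-01] [paper]

"Impact of Code Language Models on Automated Program Repair" [2023-02] [ICSE 2023] [paper]

"InferFix: End-to-End Program Repair with LLMs" [2023-03] [ESEC/FSE 2023] [paper]

"Enhancing Automated Program Repair through Fine-tuning and Prompt Engineering" [2023-04] [paper]

"A study on Prompt Design, Advantages and Limitations of ChatGPT for Deep Learning Program Repair" [2023-04] [paper]

"Domain Knowledge Matters: Improving Prompts with Fix Templates for Repairing Python Type Errors" [2023-06] [ICSE 2024] [paper]

"RepairLLaMA: Efficient Representations and Fine-Tuned Adapters for Program Repair" [2023-12] [paper]

"The Fact Selection Problem in LLM-Based Program Repair" [2024-04] [paper]

"Aligning LLMs for FL-free Program Repair" [2024-04] [paper]

"A Deep Dive into Large Language Models for Automated Bug Localization and Repair" [2024-04] [paper]

"Multi-Objective Fine-Tuning for Enhanced Program Repair with LLMs" [2024-04] [paper]

"How Far Can We Go with Practical Function-Level Program Repair?" [2024-04] [paper]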

"Revisiting Unnaturalness for Automated Program Repair in the Era of Large Language Models" [2024-04] [paper]

"A Unified Debugging Approach via LLM-Based Multi-Agent Synergy" [2024-04] [paper]

"A Systematic Literature Review on Large Language Models for Automated Program Repair" [2024-05] [paper]

"NAVRepair: Node-type Aware C/C++ Code Vulnerability Repair" [2024-05] [paper]

"Automated Program Repair: Emerging trends pose and expose problems for benchmarks" [2024-05] [paper]

"Automated Repair of AI Code with Large Language Models and Formal Verification" [2024-05] [paper]

"A Case Study of LLM for Automated Vulnerability Repair: Assessing Impact of Reasoning and Patch Validation Feedback" [2024-05] [paper]

"CREF: An LLM-based Conversational Software Repair Framework for Programming Tutors" [2024-06] [paper]

"Towards Practical and Useful Automated Program Repair for Debugging" [2024-07] [paper]

"ThinkRepair: Self-Directed Automated Program Repair" [2024-07] [paper]

"MergeRepair: An Exploratory Study on Merging Task-Specific Adapters in Code LLMs for Automated Program Repair" [2024-08] [paper]

"RePair: Automated Program Repair with Process-based Feedback" [2024-08] [ACL 2024 Findings] [paper]

"Enhancing LLM-Based Automated Program Repair with Design Rationales" [2024-08] [paper]

"Automated Software Vulnerability Patching using Large Language Models" [2024-08] [paper]

"Enhancing Source Code Security with LLMs: Demystifying The Challenges and Generating Reliable Repairs" [2024-09] [paper]

"MarsCode Agent: AI-native Automated Bug Fixing" [2024-09] [paper]

"Co-Learning: Code Learning for Multi-Agent Reinforcement Collaborative Framework with Conversational Natural Language Interfaces" [2024-09] [paper]

"Debugging with Open-Source Large Language Models: An Evaluation" [2024-09] [paper]

"VulnLLMEval: A Framework for Evaluating Large Language Models in Software Vulnerability Detection and Patching" [2024-09] [paper]

"ContractTinker: LLM-Empowered Vulnerability Repair for Real-World Smart Contracts" [2024-09] [paper]

"Can GPT-O1 Kill All Bugs? An Evaluation of GPT-Family LLMs on QuixBugs" [2024-09] [paper]

"Exploring and Lifting the Robustness of LLM-powered Automated Program Repair with Metamorphic Testing" [2024-10] [paper]

"LecPrompt: A Prompt-based Approach for Logical Error Correction with CodeBERT" [2024-10] [paper]

"Semantic-guided Search for Efficient Program Repair with Large Language Models" [2024-10] [paper]

"A Comprehensive Survey of AI-Driven Advancements and Techniques in Automated Program Repair and Code Generation" [2024-11] [paper]

"Self-Supervised Contrastive Learning for Code Retrieval and Summarization via Semantic-Preserving Transformations" [2020-09] [SIGIR 2021] [paper]

"REINFOREST: Reinforcing Semantic Code Similarity for Cross-Lingual Code Search Models" [2023-05] [paper]

"Rewriting the Code: A Simple Method for Large Language Model Augmented Code Search" [2024-01] [ACL 2024] [paper]

"Revisiting Code Similarity Evaluation with Abstract Syntax Tree Edit Distance" [2024-04] [ACL 2024 short] [paper]

"Is Next Token Prediction Sufficient for GPT? Exploration on Code Logic Comprehension" [2024-04] [paper]

"Refining Joint Text and Source Code Embeddings for Retrieval Task with Parameter-Efficient Fine-Tuning" [2024-05] [paper]

"Typhon: Automatic Recommendation of Relevant Code Cells in Jupyter Notebooks" [2024-05] [paper]

"Toward Exploring the Code Understanding Capabilities of Pre-trained Code Generation Models" [2024-06] [paper]

"Aligning Programming Language and Natural Language: Exploring Design Choices in Multi-Modal Transformer-Based Embedding for Bug Localization" [2024-06] [paper]

"Assessing the Code Clone Detection Capability of Large Language Models" [2024-07] [paper]

"CodeCSE: A Simple Multilingual Model for Code and Comment Sentence Embeddings" [2024-07] [paper]

"Large Language Models for cross-language code clone detection" [2024-08] [paper]

"Coding-PTMs: How to Find Optimal Code Pre-trained Models for Code Embedding in Vulnerability Detection?" [2024-08] [paper]

"You Augment Me: Exploring ChatGPT-based Data Augmentation for Semantic Code Search" [2024-08] [paper]

"Improving Source Code Similarity Detection Through GraphCodeBERT and Integration of Additional Features" [2024-08] [paper]

"LLM Agents Improve Semantic Code Search" [2024-08] [paper]

"zsLLMCode: An Effective Approach for Functional Code Embedding via LLM with Zero-Shot Learning" [2024-09] [paper]

"Exploring Demonstration Retrievers in RAG for Coding Tasks: Yeas and Nays!" [2024-10] [paper]

"Instructive Code Retriever: Learn from Large Language Model's Feedback for Code Intelligence Tasks" [2024-10] [paper]

"Binary Code Similarity Detection via Graph Contrastive Learning on Intermediate Representations" [2024-10] [paper]

"Are Decoder-Only Large Language Models the Silver Bullet for Code Search?" [2024-10] [paper]

"CodeXEmbed: A Generalist Embedding Model Family for Multiligual and Multi-task Code Retrieval" [2024-11] [paper]

"CodeSAM: Source Code Representation Learning by Infusing Self-Attention with Multi-Code-View Graphs" [2024-11] [paper]

"EnStack: An Ensemble Stacking Framework of Large Language Models for Enhanced Vulnerability Detection in Source Code" [2024-11] [paper]

"Isotropy Matters: Soft-ZCA Whitening of Embeddings for Semantic Code Search" [2024-11] [paper]

"An Empirical Study on the Code Refactoring Capability of Large Language Models" [2024-11] [paper]

"Automated Update of Android Deprecated API Usages with Large Language Models" [2024-11] [paper]

"An Empirical Study on the Potential of LLMs in Automated Software Refactoring" [2024-11] [paper]

"CODECLEANER: Elevating Standards with A Robust Data Contamination Mitigation Toolkit" [2024-11] [paper]

"Instruct or Interact? Exploring and Eliciting LLMs' Capability in Code Snippet Adaptation Through Prompt Engineering" [2024-11] [paper]

"Learning type annotation: is big data enough?" [2021-08] [ESEC/FSE 2021] [paper]

"Do Machine Learning Models Produce TypeScript Types That Type Check?" [2023-02] [ECOOP 2023] [paper]

"TypeT5: Seq2seq Type Inference using Static Analysis" [2023-03] [ICLR 2023] [paper]

"Type Prediction With Program Decomposition and Fill-in-the-Type Training" [2023-05] [paper]

"Generative Type Inference for Python" [2023-07] [ASE 2023] [paper]

"Activation Steering for Robust Type Prediction in CodeLLMs" [2024-04] [paper]

"An Empirical Study of Large Language Models for Type and Call Graph Analysis" [2024-10] [paper]

"Repository-Level Prompt Generation for Large Language Models of Code" [2022-06] [ICML 2023] [paper]

"CoCoMIC: Code Completion By Jointly Modeling In-file and Cross-file Context" [2022-12] [paper]

"RepoCoder: Repository-Level Code Completion Through Iterative Retrieval and Generation" [2023-03] [EMNLP 2023] [paper]

"Coeditor: Leveraging Repo-level Diffs for Code Auto-editing" [2023-05] [ICLR 2024 Spotlight] [paper]

"RepoBench: Benchmarking Repository-Level Code Auto-Completion Systems" [2023-06] [ICLR 2024] [paper]

"Guiding Language Models of Code with Global Context using Monitors" [2023-06] [paper]

"RepoFusion: Training Code Models to Understand Your Repository" [2023-06] [paper]

"CodePlan: Repository-level Coding using LLMs and Planning" [2023-09] [paper]

"SWE-bench: Can Language Models Resolve Real-World GitHub Issues?" [2023-10] [ICLR 2024] [paper]

"CrossCodeEval: A Diverse and Multilingual Benchmark for Cross-File Code Completion" [2023-10] [NeurIPS 2023] [paper]

"A^3-CodGen: A Repository-Level Code Generation Framework for Code Reuse with Local-Aware, Global-Aware, and Third-Party-Library-Aware" [2023-12] [paper]

"Teaching Code LLMs to Use Autocompletion Tools in Repository-Level Code Generation" [2024-01] [paper]

"RepoHyper: Better Context Retrieval Is All You Need for Repository-Level Code Completion" [2024-03] [paper]

"Repoformer: Selective Retrieval for Repository-Level Code Completion" [2024-03] [ICML 2024] [paper]

"CodeS: Natural Language to Code Repository via Multi-Layer Sketch" [2024-03] [paper]

"Class-Level Code Generation from Natural Language Using Iterative, Tool-Enhanced Reasoning over Repository" [2024-04] [paper]

"Contextual API Completion for Unseen Repositories Using LLMs" [2024-05] [paper]

"Dataflow-Guided Retrieval Augmentation for Repository-Level Code Completion" [2024-05] [ACL 2024] [paper]

"How to Understand Whole Software Repository?" [2024-06] [paper]

"R2C2-Coder: Enhancing and Benchmarking Real-world Repository-level Code Completion Abilities of Code Large Language Models" [2024-06] [paper]

"CodeR: Issue Resolving with Multi-Agent and Task Graphs" [2024-06] [paper]

"Enhancing Repository-Level Code Generation with Integrated Contextual Information" [2024-06] [paper]

"On The Importance of Reasoning for Context Retrieval in Repository-Level Code Editing" [2024-06] [paper]

"GraphCoder: Enhancing Repository-Level Code Completion via Code Context Graph-based Retrieval and Language Model" [2024-06] [ASE 2024] [paper]

"STALL+: Boosting LLM-based Repository-level Code Completion with Static Analysis" [2024-06] [paper]

"Hierarchical Context Pruning: Optimizing Real-World Code Completion with Repository-Level Pretrained Code LLMs" [2024-06] [paper]

"Agentless: Demystifying LLM-based Software Engineering Agents" [2024-07] [paper]

"RLCoder: Reinforcement Learning for Repository-Level Code Completion" [2024-07] [paper]

"CoEdPilot: Recommending Code Edits with Learned Prior Edit Relevance, Project-wise Awareness, and Interactive Nature" [2024-08] [paper] [repo]

"RAMBO: Enhancing RAG-based Repository-Level Method Body Completion" [2024-09] [paper]

"Exploring the Potential of Conversational Test Suite Based Program Repair on SWE-bench" [2024-10] [paper]

"RepoGraph: Enhancing AI Software Engineering with Repository-level Code Graph" [2024-10] [paper]

"See-Saw Generative Mechanism for Scalable Recursive Code Generation with Generative AI" [2024-11] [paper]

"Seeking the user interface" [2014-09] [ASE 2014] [paper]

"pix2code: Generating Code from a Graphical User Interface Screenshot" [2017-05] [EICS 2018] [paper]

"Machine Learning-Based Prototyping of Graphical User Interfaces for Mobile Apps" [2018-02] [TSE 2020] [paper]

"Automatic HTML Code Generation from Mock-Up Images Using Machine Learning Techniques" [2019-04] [EBBT 2019] [paper]

"Sketch2code: Generating a website from a paper mockup" [2019-05] [paper]

"HTLM: Hyper-Text Pre-Training and Prompting of Language Models" [2021-07] [ICLR 2022] [paper]

"Learning UI-to-Code Reverse Generator Using Visual Critic Without Rendering" [2023-05] [paper]

"Design2Code: How Far Are We From Automating Front-End Engineering?" [2024-03] [paper]

"Unlocking the conversion of Web Screenshots into HTML Code with the WebSight Dataset" [2024-03] [paper]

"VISION2UI: A Real-World Dataset with Layout for Code Generation from UI Designs" [2024-04] [paper]

"LogoMotion: Visually Grounded Code Generation for Content-Aware Animation" [2024-05] [paper]

"PosterLLaVa: Constructing a Unified Multi-modal Layout Generator with LLM" [2024-06] [paper]

"UICoder: Finetuning Large Language Models to Generate User Interface Code through Automated Feedback" [2024-06] [paper]

"On AI-Inspired UI-Design" [2024-06] [paper]

"Identifying User Goals from UI Trajectories" [2024-06] [paper]

"Automatically Generating UI Code from Screenshot: A Divide-and-Conquer-Based Approach" [2024-06] [paper]

"Web2Code: A Large-scale Webpage-to-Code Dataset and Evaluation Framework for Multimodal LLMs" [2024-06] [paper]

"Vision-driven Automated Mobile GUI Testing via Multimodal Large Language Model" [2024-07] [paper]

"AUITestAgent: Automatic Requirements Oriented GUI Function Testing" [2024-07] [paper]

"LLM-based Abstraction and Concretization for GUI Test Migration" [2024-09] [paper]

"Enabling Cost-Effective UI Automation Testing with Retrieval-Based LLMs: A Case Study in WeChat" [2024-09] [paper]

"Self-Elicitation of Requirements with Automated GUI Prototyping" [2024-09] [paper]

"Infering Alt-text For UI Icons With Large Language Models During App Development" [2024-09] [paper]

"Leveraging Large Vision Language Model For Better Automatic Web GUI Testing" [2024-10] [paper]

"Sketch2Code: Evaluating Vision-Language Models for Interactive Web Design Prototyping" [2024-10] [paper]

"WAFFLE: Multi-Modal Model for Automated Front-End Development" [2024-10] [paper]

"DesignRepair: Dual-Stream Design Guideline-Aware Frontend Repair with Large Language Models" [2024-11] [paper]

"Interaction2Code: How Far Are We From Automatic Interactive Webpage Generation?" [2024-11] [paper]

"A Multi-Agent Approach for REST API Testing with Semantic Graphs and LLM-Driven Inputs" [2024-11] [paper]

"PICARD: Parsing Incrementally for Constrained Auto-Regressive Decoding from Language Models" [2021-09] [EMNLP 2021] [paper]

"CodexDB: Generating Code for Processing SQL Queries using GPT-3 Codex" [2022-04] [paper]

"T5QL: Taming language models for SQL generation" [2022-09] [paper]

"Towards Generalizable and Robust Text-to-SQL Parsing" [2022-10] [EMNLP 2022 Findings] [paper]

"XRICL: Cross-lingual Retrieval-Augmented In-Context Learning for Cross-lingual Text-to-SQL Semantic Parsing" [2022-10] [EMNLP 2022 Findings] [paper]

"A comprehensive evaluation of ChatGPT's zero-shot Text-to-SQL capability" [2023-03] [paper]

"DIN-SQL: Decomposed In-Context Learning of Text-to-SQL with Self-Correction" [2023-04] [NeurIPS 2023] [paper]

"How to Prompt LLMs for Text-to-SQL: A Study in Zero-shot, Single-domain, and Cross-domain Settings" [2023-05] [paper]

"Enhancing Few-shot Text-to-SQL Capabilities of Large Language Models: A Study on Prompt Design Strategies" [2023-05] [paper]

"SQL-PaLM: Improved Large Language Model Adaptation for Text-to-SQL" [2023-05] [paper]

"Retrieval-augmented GPT-3.5-based Text-to-SQL Framework with Sample-aware Prompting and Dynamic Revision Chain" [2023-07] [ICONIP 2023] [paper]

"Text-to-SQL Empowered by Large Language Models: A Benchmark Evaluation" [2023-08] [paper]

"MAC-SQL: A Multi-Agent Collaborative Framework for Text-to-SQL" [2023-12] [paper]

"Investigating the Impact of Data Contamination of Large Language Models in Text-to-SQL Translation" [2024-02] [ACL 2024 Findings] [paper]

"Decomposition for Enhancing Attention: Improving LLM-based Text-to-SQL through Workflow Paradigm" [2024-02] [ACL 2024 Findings] [paper]

"Knowledge-to-SQL: Enhancing SQL Generation with Data Expert LLM" [2024-02] [ACL 2024 Findings] [paper]

"Understanding the Effects of Noise in Text-to-SQL: An Examination of the BIRD-Bench Benchmark" [2024-02] [ACL 2024 short] [paper]

"SQL-Encoder: Improving NL2SQL In-Context Learning Through a Context-Aware Encoder" [2024-03] [paper]

"LLM-R2: A Large Language Model Enhanced Rule-based Rewrite System for Boosting Query Efficiency" [2024-04] [paper]

"Dubo-SQL: Diverse Retrieval-Augmented Generation and Fine Tuning for Text-to-SQL" [2024-04] [paper]

"EPI-SQL: Enhancing Text-to-SQL Translation with Error-Prevention Instructions" [2024-04] [paper]

"ProbGate at EHRSQL 2024: Enhancing SQL Query Generation Accuracy through Probabilistic Threshold Filtering and Error Handling" [2024-04] [paper]

"CoE-SQL: In-Context Learning for Multi-Turn Text-to-SQL with Chain-of-Editions" [2024-05] [paper]

"Open-SQL Framework: Enhancing Text-to-SQL on Open-source Large Language Models" [2024-05] [paper]

"MCS-SQL: Leveraging Multiple Prompts and Multiple-Choice Selection For Text-to-SQL Generation" [2024-05] [paper]

"PromptMind Team at EHRSQL-2024: Improving Reliability of SQL Generation using Ensemble LLMs" [2024-05] [paper]

"LG AI Research & KAIST at EHRSQL 2024: Self-Training Large Language Models with Pseudo-Labeled Unanswerable Questions for a Reliable Text-to-SQL System on EHRs" [2024-05] [paper]

"Before Generation, Align it! A Novel and Effective Strategy for Mitigating Hallucinations in Text-to-SQL Generation" [2024-05] [ACL 2024 Findings] [paper]

"CHESS: Contextual Harnessing for Efficient SQL Synthesis" [2024-05] [paper]

"DeTriever: Decoder-representation-based Retriever for Improving NL2SQL In-Context Learning" [2024-06] [paper]

"Next-Generation Database Interfaces: A Survey of LLM-based Text-to-SQL" [2024-06] [paper]

"RH-SQL: Refined Schema and Hardness Prompt for Text-to-SQL" [2024-06] [paper]

"QDA-SQL: Questions Enhanced Dialogue Augmentation for Multi-Turn Text-to-SQL" [2024-06] [paper]

"End-to-end Text-to-SQL Generation within an Analytics Insight Engine" [2024-06] [paper]

"MAGIC: Generating Self-Correction Guideline for In-Context Text-to-SQL" [2024-06] [paper]

"SQLFixAgent: Towards Semantic-Accurate SQL Generation via Multi-Agent Collaboration" [2024-06] [paper]

"Unmasking Database Vulnerabilities: Zero-Knowledge Schema Inference Attacks in Text-to-SQL Systems" [2024-06] [paper]

"Lucy: Think and Reason to Solve Text-to-SQL" [2024-07] [paper]

"ESM+: Modern Insights into Perspective on Text-to-SQL Evaluation in the Age of Large Language Models" [2024-07] [paper]

"RB-SQL: A Retrieval-based LLM Framework for Text-to-SQL" [2024-07] [paper]

"AI-Assisted SQL Authoring at Industry Scale" [2024-07] [paper]

"SQLfuse: Enhancing Text-to-SQL Performance through Comprehensive LLM Synergy" [2024-07] [paper]

"A Survey on Employing Large Language Models for Text-to-SQL Tasks" [2024-07] [paper]

"Towards Automated Data Sciences with Natural Language and SageCopilot: Practices and Lessons Learned" [2024-07] [paper]

"Evaluating LLMs for Text-to-SQL Generation With Complex SQL Workload" [2024-07] [paper]

"Synthesizing Text-to-SQL Data from Weak and Strong LLMs" [2024-08] [ACL 2024] [paper]

"Improving Relational Database Interactions with Large Language Models: Column Descriptions and Their Impact on Text-to-SQL Performance" [2024-08] [paper]

"The Death of Schema Linking? Text-to-SQL in the Age of Well-Reasoned Language Models" [2024-08] [paper]

"MAG-SQL: Multi-Agent Generative Approach with Soft Schema Linking and Iterative Sub-SQL Refinement for Text-to-SQL" [2024-08] [paper]

"Enhancing Text-to-SQL Parsing through Question Rewriting and Execution-Guided Refinement" [2024-08] [ACL 2024 Findings] [paper]

"DAC: Decomposed Automation Correction for Text-to-SQL" [2024-08] [paper]

"Interactive-T2S: Multi-Turn Interactions for Text-to-SQL with Large Language Models" [2024-08] [paper]

"SQL-GEN: Bridging the Dialect Gap for Text-to-SQL Via Synthetic Data And Model Merging" [2024-08] [paper]

"Enhancing SQL Query Generation with Neurosymbolic Reasoning" [2024-08] [paper]

"Text2SQL is Not Enough: Unifying AI and Databases with TAG" [2024-08] [paper]

"Tool-Assisted Agent on SQL Inspection and Refinement in Real-World Scenarios" [2024-08] [paper]

"SelECT-SQL: Self-correcting ensemble Chain-of-Thought for Text-to-SQL" [2024-09] [paper]

"You Only Read Once (YORO): Learning to Internalize Database Knowledge for Text-to-SQL" [2024-09] [paper]

"PTD-SQL: Partitioning and Targeted Drilling with LLMs in Text-to-SQL" [2024-09] [paper]

"Enhancing Text-to-SQL Capabilities of Large Language Models via Domain Database Knowledge Injection" [2024-09] [paper]

"DataGpt-SQL-7B: An Open-Source Language Model for Text-to-SQL" [2024-09] [paper]

"E-SQL: Direct Schema Linking via Question Enrichment in Text-to-SQL" [2024-09] [paper]

"FLEX: Expert-level False-Less EXecution Metric for Reliable Text-to-SQL Benchmark" [2024-09] [paper]

"Enhancing LLM Fine-tuning for Text-to-SQLs by SQL Quality Measurement" [2024-10] [paper]

"From Natural Language to SQL: Review of LLM-based Text-to-SQL Systems" [2024-10] [paper]

"CHASE-SQL: Multi-Path Reasoning and Preference Optimized Candidate Selection in Text-to-SQL" [2024-10] [paper]

"Context-Aware SQL Error Correction Using Few-Shot Learning -- A Novel Approach Based on NLQ, Error, and SQL Similarity" [2024-10] [paper]

"Learning from Imperfect Data: Towards Efficient Knowledge Distillation of Autoregressive Language Models for Text-to-SQL" [2024-10] [paper]

"LR-SQL: A Supervised Fine-Tuning Method for Text2SQL Tasks under Low-Resource Scenarios" [2024-10] [paper]

"MSc-SQL: Multi-Sample Critiquing Small Language Models For Text-To-SQL Translation" [2024-10] [paper]

"Learning Metadata-Agnostic Representations for Text-to-SQL In-Context Example Selection" [2024-10] [paper]

"An Actor-Critic Approach to Boosting Text-to-SQL Large Language Model" [2024-10] [paper]

"RSL-SQL: Robust Schema Linking in Text-to-SQL Generation" [2024-10] [paper]

"KeyInst: Keyword Instruction for Improving SQL Formulation in Text-to-SQL" [2024-10] [paper]

"Grounding Natural Language to SQL Translation with Data-Based Self-Explanations" [2024-11] [paper]

"PDC & DM-SFT: A Road for LLM SQL Bug-Fix Enhancing" [2024-11] [paper]

"XiYan-SQL: A Multi-Generator Ensemble Framework for Text-to-SQL" [2024-11] [paper]

"Leveraging Prior Experience: An Expandable Auxiliary Knowledge Base for Text-to-SQL" [2024-11] [paper]

"Text-to-SQL Calibration: No Need to Ask -- Just Rescale Model Probabilities" [2024-11] [paper]

"Baldur: Whole-Proof Generation and Repair with Large Language Models" [2023-03] [FSE 2023] [paper]

"An In-Context Learning Agent for Formal Theorem-Proving" [2023-10] [paper]

"Towards AI-Assisted Synthesis of Verified Dafny Methods" [2024-02] [FSE 2024] [paper]

"Towards Neural Synthesis for SMT-Assisted Proof-Oriented Programming" [2024-05] [paper]

"Laurel: Generating Dafny Assertions Using Large Language Models" [2024-05] [paper]

"AutoVerus: Automated Proof Generation for Rust Code" [2024-09] [paper]

"Proof Automation with Large Language Models" [2024-09] [paper]

"Automated Proof Generation for Rust Code via Self-Evolution" [2024-10] [paper]

"CoqPilot, a plugin for LLM-based generation of proofs" [2024-10] [paper]

"dafny-annotator: AI-Assisted Verification of Dafny Programs" [2024-11] [paper]

"Unit Test Case Generation with Transformers and Focal Context" [2020-09] [AST@ICSE 2022] [paper]

"An Empirical Evaluation of Using Large Language Models for Automated Unit Test Generation" [2023-02] [IEEE TSE] [paper]

"A3Test: Assertion-Augmented Automated Test Case Generation" [2023-02] [paper]

"Learning Deep Semantics for Test Completion" [2023-02] [ICSE 2023] [paper]

"Using Large Language Models to Generate JUnit Tests: An Empirical Study" [2023-04] [EASE 2024] [paper]

"CodaMosa: Escaping Coverage Plateaus in Test Generation with Pre-Trained Large Language Models" [2023-05] [ICSE 2023] [paper]

"No More Manual Tests? Evaluating and Improving ChatGPT for Unit Test Generation" [2023-05] [paper]

"ChatUniTest: a ChatGPT-based automated unit test generation tool" [2023-05] [paper]

"ChatGPT vs SBST: A Comparative Assessment of Unit Test Suite Generation" [2023-07] [paper]

"Can Large Language Models Write Good Property-Based Tests?" [2023-07] [paper]

"Domain Adaptation for Deep Unit Test Case Generation" [2023-08] [paper]

"Effective Test Generation Using Pre-trained Large Language Models and Mutation Testing" [2023-08] [paper]

"How well does LLM generate security tests?" [2023-10] [paper]

"Reinforcement Learning from Automatic Feedback for High-Quality Unit Test Generation" [2023-10] [paper]

"An initial investigation of ChatGPT unit test generation capability" [2023-10] [SAST 2023] [paper]

"CoverUp: Coverage-Guided LLM-Based Test Generation" [2024-03] [paper]

"Enhancing LLM-based Test Generation for Hard-to-Cover Branches via Program Analysis" [2024-04] [paper]

"Large Language Models for Mobile GUI Text Input Generation: An Empirical Study" [2024-04] [paper]

"Test Code Generation for Telecom Software Systems using Two-Stage Generative Model" [2024-04] [paper]

"LLM-Powered Test Case Generation for Detecting Tricky Bugs" [2024-04] [paper]

"Generating Test Scenarios from NL Requirements using Retrieval-Augmented LLMs: An Industrial Study" [2024-04] [paper]

"Large Language Models as Test Case Generators: Performance Evaluation and Enhancement" [2024-04] [paper]

"Leveraging Large Language Models for Automated Web-Form-Test Generation: An Empirical Study" [2024-05] [paper]

"DLLens: Testing Deep Learning Libraries via LLM-aided Synthesis" [2024-06] [paper]

"Exploring Fuzzing as Data Augmentation for Neural Test Generation" [2024-06] [paper]

"Mokav: Execution-driven Differential Testing with LLMs" [2024-06] [paper]

"Code Agents are State of the Art Software Testers" [2024-06] [paper]

"CasModaTest: A Cascaded and Model-agnostic Self-directed Framework for Unit Test Generation" [2024-06] [paper]

"An Empirical Study of Unit Test Generation with Large Language Models" [2024-06] [paper]

"Large-scale, Independent and Comprehensive study of the power of LLMs for test case generation" [2024-06] [paper]

"Augmenting LLMs to Repair Obsolete Test Cases with Static Collector and Neural Reranker" [2024-07] [paper]

"Harnessing the Power of LLMs: Automating Unit Test Generation for High-Performance Computing" [2024-07] [paper]

"An LLM-based Readability Measurement for Unit Tests' Context-aware Inputs" [2024-07] [paper]

"A System for Automated Unit Test Generation Using Large Language Models and Assessment of Generated Test Suites" [2024-08] [paper]

"Leveraging Large Language Models for Enhancing the Understandability of Generated Unit Tests" [2024-08] [paper]

"Multi-language Unit Test Generation using LLMs" [2024-09] [paper]

"Exploring the Integration of Large Language Models in Industrial Test Maintenance Processes" [2024-09] [paper]

"Python Symbolic Execution with LLM-powered Code Generation" [2024-09] [paper]

"Rethinking the Influence of Source Code on Test Case Generation" [2024-09] [paper]

"On the Effectiveness of LLMs for Manual Test Verifications" [2024-09] [paper]

"Retrieval-Augmented Test Generation: How Far Are We?" [2024-09] [paper]

"Context-Enhanced LLM-Based Framework for Automatic Test Refactoring" [2024-09] [paper]

"TestBench: Evaluating Class-Level Test Case Generation Capability of Large Language Models" [2024-09] [paper]

"Advancing Bug Detection in Fastjson2 with Large Language Models Driven Unit Test Generation" [2024-10] [paper]

"Test smells in LLM-Generated Unit Tests" [2024-10] [paper]

"LLM-based Unit Test Generation via Property Retrieval" [2024-10] [paper]

"Disrupting Test Development with AI Assistants" [2024-11] [paper]

"Parameter-Efficient Fine-Tuning of Large Language Models for Unit Test Generation: An Empirical Study" [2024-11] [paper]

"VALTEST: Automated Validation of Language Model Generated Test Cases" [2024-11] [paper]

"REACCEPT: Automated Co-evolution of Production and Test Code Based on Dynamic Validation and Large Language Models" [2024-11] [paper]

"Generating Accurate Assert Statements for Unit Test Cases using Pretrained Transformers" [2020-09] [paper]

"TOGA: A Neural Method for Test Oracle Generation" [2021-09] [ICSE 2022] [paper]

"TOGLL: Correct and Strong Test Oracle Generation with LLMs" [2024-05] [paper]

"Test Oracle Automation in the era of LLMs" [2024-05] [paper]

"Beyond Code Generation: Assessing Code LLM Maturity with Postconditions" [2024-07] [paper]

"Chat-like Asserts Prediction with the Support of Large Language Model" [2024-07] [paper]

"Do LLMs generate test oracles that capture the actual or the expected program behaviour?" [2024-10] [paper]

"Generating executable oracles to check conformance of client code to requirements of JDK Javadocs using LLMs" [2024-11] [paper]

"Automatically Write Code Checker: An LLM-based Approach with Logic-guided API Retrieval and Case by Case Iteration" [2024-11] [paper]

"ASSERTIFY: Utilizing Large Language Models to Generate Assertions for Production Code" [2024-11] [paper]

"μBERT: Mutation Testing using Pre-Trained Language Models" [2022-03] [paper]

"Efficient Mutation Testing via Pre-Trained Language Models" [2023-01] [paper]

"LLMorpheus: Mutation Testing using Large Language Models" [2024-04] [paper]

"An Exploratory Study on Using Large Language Models for Mutation Testing" [2024-06] [paper]

"Fine-Tuning LLMs for Code Mutation: A New Era of Cyber Threats" [2024-10] [paper]

"Large Language Models are Zero-Shot Fuzzers: Fuzzing Deep-Learning Libraries via Large Language Models" [2022-12] [paper]

"Fuzz4All: Universal Fuzzing with Large Language Models" [2023-08] [paper]

"WhiteFox: White-Box Compiler Fuzzing Empowered by Large Language Models" [2023-10] [paper]

"LLAMAFUZZ: Large Language Model Enhanced Greybox Fuzzing" [2024-06] [paper]

"FuzzCoder: Byte-level Fuzzing Test via Large Language Model" [2024-09] [paper]

"ISC4DGF: Enhancing Directed Grey-box Fuzzing with LLM-Driven Initial Seed Corpus Generation" [2024-09] [paper]

"Large Language Models Based JSON Parser Fuzzing for Bug Discovery and Behavioral Analysis" [2024-10] [paper]

"Fixing Security Vulnerabilities with AI in OSS-Fuzz" [2024-11] [paper]

"A Code Knowledge Graph-Enhanced System for LLM-Based Fuzz Driver Generation" [2024-11] [paper]

"VulDeePecker: A Deep Learning-Based System for Vulnerability Detection" [2018-01] [NDSS 2018] [paper]

"DeepBugs: A Learning Approach to Name-based Bug Detection" [2018-04] [Proc. ACM Program. Lang.] [paper]

"Automated Vulnerability Detection in Source Code Using Deep Representation Learning" [2018-07] [ICMLA 2018] [paper]

"SySeVR: A Framework for Using Deep Learning to Detect Software Vulnerabilities" [2018-07] [IEEE TDSC] [paper]

"Devign: Effective Vulnerability Identification by Learning Comprehensive Program Semantics via Graph Neural Networks" [2019-09] [NeurIPS 2019] [paper]

"Improving bug detection via context-based code representation learning and attention-based neural networks" [2019-10] [Proc. ACM Program. Lang.] [paper]

"Global Relational Models of Source Code" [2019-12] [ICLR 2020] [paper]

"VulDeeLocator: A Deep Learning-based Fine-grained Vulnerability Detector" [2020-01] [IEEE TDSC] [paper]

"Deep Learning based Vulnerability Detection: Are We There Yet?" [2020-09] [IEEE TSE] [paper]

"Security Vulnerability Detection Using Deep Learning Natural Language Processing" [2021-05] [INFOCOM Workshops 2021] [paper]

"Self-Supervised Bug Detection and Repair" [2021-05] [NeurIPS 2021] [paper]

"Vulnerability Detection with Fine-grained Interpretations" [2021-06] [ESEC/SIGSOFT FSE 2021] [paper]

"ReGVD: Revisiting Graph Neural Networks for Vulnerability Detection" [2021-10] [ICSE Companion 2022] [paper]

"VUDENC: Vulnerability Detection with Deep Learning on a Natural Codebase for Python" [2022-01] [Inf. Softw. Technol.] [paper]

"Transformer-Based Language Models for Software Vulnerability Detection" [2022-04] [ACSAC 2022] [paper]

"LineVul: A Transformer-based Line-Level Vulnerability Prediction" [2022-05] [MSR 2022] [paper]

"VulBERTa: Simplified Source Code Pre-Training for Vulnerability Detection" [2022-05] [IJCNN 2022] [paper]

"Open Science in Software Engineering: A Study on Deep Learning-Based Vulnerability Detection" [2022-09] [IEEE TSE] [paper]

"An Empirical Study of Deep Learning Models for Vulnerability Detection" [2022-12] [ICSE 2023] [paper]

"CSGVD: A deep learning approach combining sequence and graph embedding for source code vulnerability detection" [2023-01] [J. Syst. Softw.] [paper]

"Benchmarking Software Vulnerability Detection Techniques: A Survey" [2023-03] [paper]

"Transformer-based Vulnerability Detection in Code at EditTime: Zero-shot, Few-shot, or Fine-tuning?" [2023-05] [paper]

"A Survey on Automated Software Vulnerability Detection Using Machine Learning and Deep Learning" [2023-06] [paper]

"Limits of Machine Learning for Automatic Vulnerability Detection" [2023-06] [paper]

"Evaluating Instruction-Tuned Large Language Models on Code Comprehension and Generation" [2023-08] [paper]

"Prompt-Enhanced Software Vulnerability Detection Using ChatGPT" [2023-08] [paper]

"Towards Causal Deep Learning for Vulnerability Detection" [2023-10] [paper]

"Understanding the Effectiveness of Large Language Models in Detecting Security Vulnerabilities" [2023-11] [paper]

"How Far Have We Gone in Vulnerability Detection Using Large Language Models" [2023-11] [paper]

"Can Large Language Models Identify And Reason About Security Vulnerabilities? Not Yet" [2023-12] [paper]

"LLM4Vuln: A Unified Evaluation Framework for Decoupling and Enhancing LLMs' Vulnerability Reasoning" [2024-01] [paper]

"Security Code Review by LLMs: A Deep Dive into Responses" [2024-01] [paper]

"Chain-of-Thought Prompting of Large Language Models for Discovering and Fixing Software Vulnerabilities" [2024-02] [paper]

"Multi-role Consensus through LLMs Discussions for Vulnerability Detection" [2024-03] [paper]

"A Comprehensive Study of the Capabilities of Large Language Models for Vulnerability Detection" [2024-03] [paper]

"Vulnerability Detection with Code Language Models: How Far Are We?" [2024-03] [paper]

"Multitask-based Evaluation of Open-Source LLM on Software Vulnerability" [2024-04] [paper]

"Large Language Model for Vulnerability Detection and Repair: Literature Review and Roadmap" [2024-04] [paper]

"Pros and Cons! Evaluating ChatGPT on Software Vulnerability" [2024-04] [paper]

"VulEval: Towards Repository-Level Evaluation of Software Vulnerability Detection" [2024-04] [paper]

"DLAP: A Deep Learning Augmented Large Language Model Prompting Framework for Software Vulnerability Detection" [2024-05] [paper]

"Bridging the Gap: A Study of AI-based Vulnerability Management between Industry and Academia" [2024-05] [paper]

"Bridge and Hint: Extending Pre-trained Language Models for Long-Range Code" [2024-05] [paper]

"Harnessing Large Language Models for Software Vulnerability Detection: A Comprehensive Benchmarking Study" [2024-05] [paper]

"LLM-Assisted Static Analysis for Detecting Security Vulnerabilities" [2024-05] [paper]

"Generalization-Enhanced Code Vulnerability Detection via Multi-Task Instruction Fine-Tuning" [2024-06] [ACL 2024 Findings] [paper]

"Security Vulnerability Detection with Multitask Self-Instructed Fine-Tuning of Large Language Models" [2024-06] [paper]

"M2CVD: Multi-Model Collaboration for Code Vulnerability Detection" [2024-06] [paper]

"Towards Effectively Detecting and Explaining Vulnerabilities Using Large Language Models" [2024-06] [paper]

"Vul-RAG: Enhancing LLM-based Vulnerability Detection via Knowledge-level RAG" [2024-06] [paper]

"Bug In the Code Stack: Can LLMs Find Bugs in Large Python Code Stacks" [2024-06] [paper]

"Supporting Cross-language Cross-project Bug Localization Using Pre-trained Language Models" [2024-07] [paper]

"ALPINE: An adaptive language-agnostic pruning method for language models for code" [2024-07] [paper]

"SCoPE: Evaluating LLMs for Software Vulnerability Detection" [2024-07] [paper]

"Comparison of Static Application Security Testing Tools and Large Language Models for Repo-level Vulnerability Detection" [2024-07] [paper]

"Code Structure-Aware through Line-level Semantic Learning for Code Vulnerability Detection" [2024-07] [paper]

"A Study of Using Multimodal LLMs for Non-Crash Functional Bug Detection in Android Apps" [2024-07] [paper]

"EaTVul: ChatGPT-based Evasion Attack Against Software Vulnerability Detection" [2024-07] [paper]

"Evaluating Large Language Models in Detecting Test Smells" [2024-07] [paper]

"Automated Software Vulnerability Static Code Analysis Using Generative Pre-Trained Transformer Models" [2024-07] [paper]

"A Qualitative Study on Using ChatGPT for Software Security: Perception vs. Practicality" [2024-08] [paper]

"Large Language Models for Secure Code Assessment: A Multi-Language Empirical Study" [2024-08] [paper]

"VulCatch: Enhancing Binary Vulnerability Detection through CodeT5 Decompilation and KAN Advanced Feature Extraction" [2024-08] [paper]

"Impact of Large Language Models of Code on Fault Localization" [2024-08] [paper]

"Better Debugging: Combining Static Analysis and LLMs for Explainable Crashing Fault Localization" [2024-08] [paper]

"Beyond ChatGPT: Enhancing Software Quality Assurance Tasks with Diverse LLMs and Validation Techniques" [2024-09] [paper]

"CLNX: Bridging Code and Natural Language for C/C++ Vulnerability-Contributing Commits Identification" [2024-09] [paper]

"Code Vulnerability Detection: A Comparative Analysis of Emerging Large Language Models" [2024-09] [paper]

"Program Slicing in the Era of Large Language Models" [2024-09] [paper]

"Generating API Parameter Security Rules with LLM for API Misuse Detection" [2024-09] [paper]

"Enhancing Fault Localization Through Ordered Code Analysis with LLM Agents and Self-Reflection" [2024-09] [paper]

"Comparing Unidirectional, Bidirectional, and Word2vec Models for Discovering Vulnerabilities in Compiled Lifted Code" [2024-09] [paper]

"Enhancing Pre-Trained Language Models for Vulnerability Detection via Semantic-Preserving Data Augmentation" [2024-10] [paper]

"StagedVulBERT: Multi-Granular Vulnerability Detection with a Novel Pre-trained Code Model" [2024-10] [paper]

"Understanding the AI-powered Binary Code Similarity Detection" [2024-10] [paper]

"RealVul: Can We Detect Vulnerabilities in Web Applications with LLM?" [2024-10] [paper]

"Just-In-Time Software Defect Prediction via Bi-modal Change Representation Learning" [2024-10] [paper]

"DFEPT: Data Flow Embedding for Enhancing Pre-Trained Model Based Vulnerability Detection" [2024-10] [paper]

"Utilizing Precise and Complete Code Context to Guide LLM in Automatic False Positive Mitigation" [2024-11] [paper]

"Smart-LLaMA: Two-Stage Post-Training of Large Language Models for Smart Contract Vulnerability Detection and Explanation" [2024-11] [paper]

"FlexFL: Flexible and Effective Fault Localization with Open-Source Large Language Models" [2024-11] [paper]

"Breaking the Cycle of Recurring Failures: Applying Generative AI to Root Cause Analysis in Legacy Banking Systems" [2024-11] [paper]

"Are Large Language Models Memorizing Bug Benchmarks?" [2024-11] [paper]

"An Empirical Study of Vulnerability Detection using Federated Learning" [2024-11] [paper]

"Fault Localization from the Semantic Code Search Perspective" [2024-11] [paper]

"Deep Android Malware Detection" [2017-03] [CODASPY 2017] [paper]

"A Multimodal Deep Learning Method for Android Malware Detection Using Various Features" [2018-08] [IEEE Trans. Inf. Forensics Secur. 2019] [paper]

"Portable, Data-Driven Malware Detection using Language Processing and Machine Learning Techniques on Behavioral Analysis Reports" [2018-12] [Digit. Investig. 2019] [paper]

"I-MAD: Interpretable Malware Detector Using Galaxy Transformer" [2019-09] [Comput. Secur. 2021] [paper]

"Droidetec: Android Malware Detection and Malicious Code Localization through Deep Learning" [2020-02] [paper]

"Malicious Code Detection: Run Trace Output Analysis by LSTM" [2021-01] [IEEE Access 2021] [paper]

"Intelligent malware detection based on graph convolutional network" [2021-08] [J. Supercomput. 2021] [paper]

"Malbert: A novel pre-training method for malware detection" [2021-09] [Comput. Secur. 2021] [paper]

"Single-Shot Black-Box Adversarial Attacks Against Malware Detectors: A Causal Language Model Approach" [2021-12] [ISI 2021] [paper]

"M2VMapper: Malware-to-Vulnerability mapping for Android using text processing" [2021-12] [Expert Syst. Appl. 2022] [paper]

"Malware Detection and Prevention using Artificial Intelligence Techniques" [2021-12] [IEEE BigData 2021] [paper]

"An Ensemble of Pre-trained Transformer Models For Imbalanced Multiclass Malware Classification" [2021-12] [Comput. Secur. 2022] [paper]

"EfficientNet convolutional neural networks-based Android malware detection" [2022-01] [Comput. Secur. 2022] [paper]

"Static Malware Detection Using Stacked BiLSTM and GPT-2" [2022-05] [IEEE Access 2022] [paper]

"APT Malicious Sample Organization Traceability Based on Text Transformer Model" [2022-07] [PRML 2022] [paper]

"Self-Supervised Vision Transformers for Malware Detection" [2022-08] [IEEE Access 2022] [paper]

"A Survey of Recent Advances in Deep Learning Models for Detecting Malware in Desktop and Mobile Platforms" [2022-09] [ACM Computing Surveys] [paper]

"Malicious Source Code Detection Using Transformer" [2022-09] [paper]

"Flexible Android Malware Detection Model based on Generative Adversarial Networks with Code Tensor" [2022-10] [CyberC 2022] [paper]

"MalBERTv2: Code Aware BERT-Based Model for Malware Identification" [2023-03] [Big Data Cogn. Comput. 2023] [paper]

"GPThreats-3: Is Automatic Malware Generation a Threat?" [2023-05] [SPW 2023] [paper]

"GitHub Copilot: A Threat to High School Security? Exploring GitHub Copilot's Proficiency in Generating Malware from Simple User Prompts" [2023-08] [ETNCC 2023] [paper]

"An Attacker's Dream? Exploring the Capabilities of ChatGPT for Developing Malware" [2023-08] [CSET 2023] [paper]

"Malicious code detection in android: the role of sequence characteristics and disassembling methods" [2023-12] [Int. J. Inf. Secur. 2023] [paper]

"Prompt Engineering-assisted Malware Dynamic Analysis Using GPT-4" [2023-12] [paper]

"Shifting the Lens: Detecting Malware in npm Ecosystem with Large Language Models" [2024-03] [paper]

"AppPoet: Large Language Model based Android malware detection via multi-view prompt engineering" [2024-04] [paper]

"Tactics, Techniques, and Procedures (TTPs) in Interpreted Malware: A Zero-Shot Generation with Large Language Models" [2024-07] [paper]

"DetectBERT: Towards Full App-Level Representation Learning to Detect Android Malware" [2024-08] [paper]

"PackageIntel: Leveraging Large Language Models for Automated Intelligence Extraction in Package Ecosystems" [2024-09] [paper]

"Learning Performance-Improving Code Edits" [2023-06] [ICLR 2024 Spotlight] [paper]

"Large Language Models for Compiler Optimization" [2023-09] [paper]

"Refining Decompiled C Code with Large Language Models" [2023-10] [paper]

"Priority Sampling of Large Language Models for Compilers" [2024-02] [paper]

"Should AI Optimize Your Code? A Comparative Study of Current Large Language Models Versus Classical Optimizing Compilers" [2024-06] [paper]

"Iterative or Innovative? A Problem-Oriented Perspective for Code Optimization" [2024-06] [paper]

"Meta Large Language Model Compiler: Foundation Models of Compiler Optimization" [2024-06] [paper]

"ViC: Virtual Compiler Is All You Need For Assembly Code Search" [2024-08] [paper]

"Search-Based LLMs for Code Optimization" [2024-08] [paper]

"E-code: Mastering Efficient Code Generation through Pretrained Models and Expert Encoder Group" [2024-08] [paper]

"Large Language Models for Energy-Efficient Code: Emerging Results and Future Directions" [2024-10] [paper]

"Using recurrent neural networks for decompilation" [2018-03] [SANER 2018] [paper]

"Evolving Exact Decompilation" [2018] [paper]

"Towards Neural Decompilation" [2019-05] [paper]

"Coda: An End-to-End Neural Program Decompiler" [2019-06] [NeurIPS 2019] [paper]

"N-Bref: A High-fidelity Decompiler Exploiting Programming Structures" [2020-09] [paper]

"Neutron: an attention-based neural decompiler" [2021-03] [Cybersecurity 2021] [paper]

"Beyond the C: Retargetable Decompilation using Neural Machine Translation" [2022-12] [paper]

"Boosting Neural Networks to Decompile Optimized Binaries" [2023-01] [ACSAC 2022] [paper]

"SLaDe: A Portable Small Language Model Decompiler for Optimized Assembly" [2023-05] [paper]

"Nova+: Generative Language Models for Binaries" [2023-11] [paper]

"CodeArt: Better Code Models by Attention Regularization When Symbols Are Lacking" [2024-11] [paper]

"LLM4Decompile: Decompiling Binary Code with Large Language Models" [2024-03] [paper]