Implementasi transformator wajah berpelukan dari Multi-modal Retriever Interaksi Akhir yang Berbutir Halus.

Implementasi resminya ada di sini.

Detail model dan pos pemeriksaan dapat ditemukan di sini.

Detail untuk mereproduksi kumpulan data dan evaluasi dalam makalah dapat ditemukan di sini.

| Model | WIT Ingat @10 | IGLUE Ingat@1 | Penarikan KVQA@5 | Penarikan MSMARCO@5 | OVEN Ingat@5 | Penarikan LLaVA@1 | Penarikan EVQA@5 | Penarikan Semu EVQA@5 | OKVQA Penarikan@5 | Penarikan Semu OKVQA@5 | Penarikan Infoseek@5 | Infoseek Penarikan Semu@5 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| LinWeizheDragon/PreFLMR_ViT-G? | 0,619 | 0,718 | 0,419 | 0,783 | 0,643 | 0,726 | 0,625 | 0,721 | 0,302 | 0,674 | 0,392 | 0,577 |

| LinWeizheDragon/PreFLMR_ViT-L? | 0,605 | 0,699 | 0,440 | 0,779 | 0,608 | 0,729 | 0,609 | 0,708 | 0,314 | 0,690 | 0,374 | 0,578 |

| LinWeizheDragon/PreFLMR_ViT-B? | 0,427 | 0,574 | 0,294 | 0,786 | 0,468 | 0,673 | 0,550 | 0,663 | 0,272 | 0,658 | 0,260 | 0,496 |

Catatan: Kami mengonversi pos pemeriksaan dari PyTorch ke Huggingface-transformator, yang hasil benchmarknya sedikit berbeda dari angka yang dilaporkan di makalah asli. Anda dapat mereproduksi hasilnya pada makalah di atas dengan mengacu pada petunjuk dalam dokumen ini.

Buat virtualenv:

conda create -n FLMR python=3.10 -y

conda activate FLMR

Instal Pytorch:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

Instal faiss

conda install -c pytorch -c nvidia faiss-gpu=1.7.4 mkl=2021 blas=1.0=mkl

Uji apakah faiss menghasilkan kesalahan

python -c "import faiss"

Instal FLMR

git clone https://github.com/LinWeizheDragon/FLMR.git

cd FLMR

pip install -e .

Pasang mesin ColBERT

cd third_party/ColBERT

pip install -e .

Instal dependensi lainnya

pip install ujson gitpython easydict ninja datasets transformers

Memuat model terlatih

import os

import torch

import pandas as pd

import numpy as np

from torchvision . transforms import ToPILImage

from transformers import AutoImageProcessor

from flmr import index_custom_collection

from flmr import FLMRQueryEncoderTokenizer , FLMRContextEncoderTokenizer , FLMRModelForRetrieval

# load models

checkpoint_path = "LinWeizheDragon/PreFLMR_ViT-G"

image_processor_name = "laion/CLIP-ViT-bigG-14-laion2B-39B-b160k"

query_tokenizer = FLMRQueryEncoderTokenizer . from_pretrained ( checkpoint_path , subfolder = "query_tokenizer" )

context_tokenizer = FLMRContextEncoderTokenizer . from_pretrained (

checkpoint_path , subfolder = "context_tokenizer"

)

model = FLMRModelForRetrieval . from_pretrained (

checkpoint_path ,

query_tokenizer = query_tokenizer ,

context_tokenizer = context_tokenizer ,

)

image_processor = AutoImageProcessor . from_pretrained ( image_processor_name )Buat koleksi dokumen

num_items = 100

feature_dim = 1664

passage_contents = [ f"This is test sentence { i } " for i in range ( num_items )]

# Option 1. text-only documents

custom_collection = passage_contents

# Option 2. multi-modal documents with pre-extracted image features

# passage_image_features = np.random.rand(num_items, feature_dim)

# custom_collection = [

# (passage_content, passage_image_feature, None) for passage_content, passage_image_feature in zip(passage_contents, passage_image_features)

# ]

# Option 3. multi-modal documents with images

# random_images = torch.randn(num_items, 3, 224, 224)

# to_img = ToPILImage()

# if not os.path.exists("./test_images"):

# os.makedirs("./test_images")

# for i, image in enumerate(random_images):

# image = to_img(image)

# image.save(os.path.join("./test_images", "{}.jpg".format(i)))

# image_paths = [os.path.join("./test_images", "{}.jpg".format(i)) for i in range(num_items)]

# custom_collection = [

# (passage_content, None, image_path)

# for passage_content, image_path in zip(passage_contents, image_paths)

# ]Jalankan pengindeksan pada koleksi khusus

index_custom_collection (

custom_collection = custom_collection ,

model = model ,

index_root_path = "." ,

index_experiment_name = "test_experiment" ,

index_name = "test_index" ,

nbits = 8 , # number of bits in compression

doc_maxlen = 512 , # maximum allowed document length

overwrite = True , # whether to overwrite existing indices

use_gpu = False , # whether to enable GPU indexing

indexing_batch_size = 64 ,

model_temp_folder = "tmp" ,

nranks = 1 , # number of GPUs used in indexing

)Buat data kueri mainan

num_queries = 2

query_instructions = [ f"instruction { i } " for i in range ( num_queries )]

query_texts = [ f" { query_instructions [ i ] } : query { i } " for i in range ( num_queries )]

query_images = torch . zeros ( num_queries , 3 , 224 , 224 )

query_encoding = query_tokenizer ( query_texts )

query_pixel_values = image_processor ( query_images , return_tensors = "pt" )[ 'pixel_values' ]Dapatkan penyematan kueri dengan model

inputs = dict (

input_ids = query_encoding [ 'input_ids' ],

attention_mask = query_encoding [ 'attention_mask' ],

pixel_values = query_pixel_values ,

)

# Run model query encoding

res = model . query ( ** inputs )

queries = { i : query_texts [ i ] for i in range ( num_queries )}

query_embeddings = res . late_interaction_outputCari koleksinya

from flmr import search_custom_collection , create_searcher

# initiate a searcher

searcher = create_searcher (

index_root_path = "." ,

index_experiment_name = "test_experiment" ,

index_name = "test_index" ,

nbits = 8 , # number of bits in compression

use_gpu = True , # whether to enable GPU searching

)

# Search the custom collection

ranking = search_custom_collection (

searcher = searcher ,

queries = queries ,

query_embeddings = query_embeddings ,

num_document_to_retrieve = 5 , # how many documents to retrieve for each query

)

# Analyse retrieved documents

ranking_dict = ranking . todict ()

for i in range ( num_queries ):

print ( f"Query { i } retrieved documents:" )

retrieved_docs = ranking_dict [ i ]

retrieved_docs_indices = [ doc [ 0 ] for doc in retrieved_docs ]

retrieved_doc_scores = [ doc [ 2 ] for doc in retrieved_docs ]

retrieved_doc_texts = [ passage_contents [ doc_idx ] for doc_idx in retrieved_docs_indices ]

data = {

"Confidence" : retrieved_doc_scores ,

"Content" : retrieved_doc_texts ,

}

df = pd . DataFrame . from_dict ( data )

print ( df ) import torch

from flmr import FLMRQueryEncoderTokenizer , FLMRContextEncoderTokenizer , FLMRModelForRetrieval

checkpoint_path = "LinWeizheDragon/PreFLMR_ViT-L"

image_processor_name = "openai/clip-vit-large-patch14"

query_tokenizer = FLMRQueryEncoderTokenizer . from_pretrained ( checkpoint_path , subfolder = "query_tokenizer" )

context_tokenizer = FLMRContextEncoderTokenizer . from_pretrained ( checkpoint_path , subfolder = "context_tokenizer" )

model = FLMRModelForRetrieval . from_pretrained ( checkpoint_path ,

query_tokenizer = query_tokenizer ,

context_tokenizer = context_tokenizer ,

)

Q_encoding = query_tokenizer ([ "Using the provided image, obtain documents that address the subsequent question: What is the capital of France?" , "Extract documents linked to the question provided in conjunction with the image: What is the capital of China?" ])

D_encoding = context_tokenizer ([ "Paris is the capital of France." , "Beijing is the capital of China." ,

"Paris is the capital of France." , "Beijing is the capital of China." ])

Q_pixel_values = torch . zeros ( 2 , 3 , 224 , 224 )

inputs = dict (

query_input_ids = Q_encoding [ 'input_ids' ],

query_attention_mask = Q_encoding [ 'attention_mask' ],

query_pixel_values = Q_pixel_values ,

context_input_ids = D_encoding [ 'input_ids' ],

context_attention_mask = D_encoding [ 'attention_mask' ],

use_in_batch_negatives = True ,

)

res = model . forward ( ** inputs )

print ( res )Perhatikan bahwa contoh dalam blok kode ini hanya untuk tujuan demonstrasi. Mereka menunjukkan bahwa model terlatih memberikan skor lebih tinggi untuk mengoreksi dokumen. Dalam pelatihan nyata, Anda selalu harus memasukkan dokumen dalam urutan "dokumen positif untuk kueri1, dokumen negatif1 untuk kueri1, dokumen negatif2 untuk kueri1, ..., dokumen positif untuk kueri2, dokumen negatif1 untuk kueri2, dokumen2 negatif untuk kueri2, ... ". Anda mungkin ingin membaca bagian selanjutnya yang memberikan contoh skrip penyempurnaan.

pip install transformers

from transformers import AutoConfig , AutoModel , AutoImageProcessor , AutoTokenizer

import torch

checkpoint_path = "LinWeizheDragon/PreFLMR_ViT-L"

image_processor_name = "openai/clip-vit-large-patch14"

query_tokenizer = AutoTokenizer . from_pretrained ( checkpoint_path , subfolder = "query_tokenizer" , trust_remote_code = True )

context_tokenizer = AutoTokenizer . from_pretrained ( checkpoint_path , subfolder = "context_tokenizer" , trust_remote_code = True )

model = AutoModel . from_pretrained ( checkpoint_path ,

query_tokenizer = query_tokenizer ,

context_tokenizer = context_tokenizer ,

trust_remote_code = True ,

)

image_processor = AutoImageProcessor . from_pretrained ( image_processor_name )Kami menyediakan dua skrip untuk menunjukkan bagaimana model yang telah dilatih sebelumnya dapat digunakan dalam evaluasi:

examples/example_use_flmr.py : contoh skrip untuk mengevaluasi FLMR (dengan 10 ROI) pada OK-VQA.examples/example_use_preflmr.py : contoh skrip untuk mengevaluasi PreFLMR pada E-VQA. cd examples/ Unduh KBVQA_data dari sini dan unzip folder gambar. Hasil ROI/captioning/deteksi objek telah disertakan.

Jalankan perintah berikut (hapus --run_indexing jika Anda sudah menjalankan pengindeksan satu kali):

python example_use_flmr.py

--use_gpu --run_indexing

--index_root_path " . "

--index_name OKVQA_GS

--experiment_name OKVQA_GS

--indexing_batch_size 64

--image_root_dir /path/to/KBVQA_data/ok-vqa/

--dataset_path BByrneLab/OKVQA_FLMR_preprocessed_data

--passage_dataset_path BByrneLab/OKVQA_FLMR_preprocessed_GoogleSearch_passages

--use_split test

--nbits 8

--Ks 1 5 10 20 50 100

--checkpoint_path LinWeizheDragon/FLMR

--image_processor_name openai/clip-vit-base-patch32

--query_batch_size 8

--num_ROIs 9 Anda dapat mengunduh gambar E-VQA dari https://github.com/google-research/google-research/tree/master/encyclopedic_vqa. Kami akan segera menambahkan tautan kumpulan data di sini.

cd examples/ Jalankan perintah berikut (hapus --run_indexing jika Anda sudah menjalankan pengindeksan satu kali):

python example_use_preflmr.py

--use_gpu --run_indexing

--index_root_path " . "

--index_name EVQA_PreFLMR_ViT-G

--experiment_name EVQA

--indexing_batch_size 64

--image_root_dir /rds/project/rds-hirYTW1FQIw/shared_space/vqa_data/KBVQA_data/EVQA/eval_image/

--dataset_hf_path BByrneLab/multi_task_multi_modal_knowledge_retrieval_benchmark_M2KR

--dataset EVQA

--use_split test

--nbits 8

--Ks 1 5 10 20 50 100 500

--checkpoint_path LinWeizheDragon/PreFLMR_ViT-G

--image_processor_name laion/CLIP-ViT-bigG-14-laion2B-39B-b160k

--query_batch_size 8

--compute_pseudo_recall Di sini, kami mengunggah semua dataset M2KR ke dalam satu dataset HF BByrneLab/multi_task_multi_modal_knowledge_retrieval_benchmark_M2KR dengan dataset berbeda sebagai subset. Untuk mereproduksi hasil kumpulan data lain di makalah, Anda dapat mengubah --dataset menjadi OKVQA , KVQA , LLaVA , OVEN , Infoseek , WIT , IGLUE dan EVQA .

Pembaruan :

--compute_pseudo_recall untuk menghitung penarikan semu untuk kumpulan data seperti EVQA/OKVQA/Infoseek--Ks 1 5 10 20 50 100 500 : max(Ks) harus 500 agar sesuai dengan kinerja yang dilaporkan dalam makalah PreFLMR. Ubah jalur root gambar di examples/evaluate_all.sh dan jalankan:

cd examples

bash evaluate_all.shDapatkan laporannya dengan cara:

python report.pyAnda perlu menginstal pytorch-lightning:

pip install pytorch-lightning==2.1.0

python example_finetune_preflmr.py

--image_root_dir /path/to/EVQA/images/

--dataset_hf_path BByrneLab/multi_task_multi_modal_knowledge_retrieval_benchmark_M2KR

--dataset EVQA

--freeze_vit

--log_with_wandb

--model_save_path saved_models

--checkpoint_path LinWeizheDragon/PreFLMR_ViT-G

--image_processor_name laion/CLIP-ViT-bigG-14-laion2B-39B-b160k

--batch_size 8

--accumulate_grad_batches 8

--valid_batch_size 16

--test_batch_size 64

--mode train

--max_epochs 99999999

--learning_rate 0.000005

--warmup_steps 100

--accelerator auto

--devices auto

--strategy ddp_find_unused_parameters_true

--num_sanity_val_steps 2

--precision bf16

--val_check_interval 2000

--save_top_k -1 python example_use_preflmr.py

--use_gpu --run_indexing

--index_root_path " . "

--index_name EVQA_PreFLMR_ViT-G_finetuned_model_step_10156

--experiment_name EVQA

--indexing_batch_size 64

--image_root_dir /path/to/EVQA/images/

--dataset_hf_path BByrneLab/multi_task_multi_modal_knowledge_retrieval_benchmark_M2KR

--dataset EVQA

--use_split test

--nbits 8

--num_gpus 1

--Ks 1 5 10 20 50 100 500

--checkpoint_path saved_models/model_step_10156

--image_processor_name laion/CLIP-ViT-bigG-14-laion2B-39B-b160k

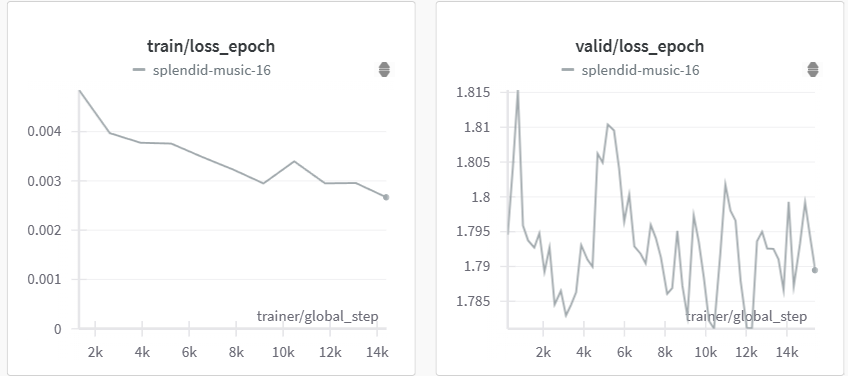

--query_batch_size 8 Dengan menjalankan skrip di atas, kita dapat memperoleh performa finetuning berikut:

| Melangkah | Penarikan Semu@5 di EVQA |

|---|---|

| 2500 | 73.6 |

| 10.000 | 73,55 |

| 12000 | 74.21 |

| 14000 | 73.73 |

(Pos pemeriksaan dengan kerugian validasi rendah dipilih dan diuji, dijalankan pada 2 GPU A100)

Model FLMR diimplementasikan mengikuti gaya dokumentasi transformers . Anda dapat menemukan dokumentasi terperinci di file pemodelan.

Jika pekerjaan kami membantu penelitian Anda, silakan mengutip makalah kami untuk FLMR dan PreFLMR.

@inproceedings{

lin2023finegrained,

title={Fine-grained Late-interaction Multi-modal Retrieval for Retrieval Augmented Visual Question Answering},

author={Weizhe Lin and Jinghong Chen and Jingbiao Mei and Alexandru Coca and Bill Byrne},

booktitle={Thirty-seventh Conference on Neural Information Processing Systems},

year={2023},

url={https://openreview.net/forum?id=IWWWulAX7g}

}

@inproceedings{lin-etal-2024-preflmr,

title = "{P}re{FLMR}: Scaling Up Fine-Grained Late-Interaction Multi-modal Retrievers",

author = "Lin, Weizhe and

Mei, Jingbiao and

Chen, Jinghong and

Byrne, Bill",

editor = "Ku, Lun-Wei and

Martins, Andre and

Srikumar, Vivek",

booktitle = "Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = aug,

year = "2024",

address = "Bangkok, Thailand",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2024.acl-long.289",

pages = "5294--5316",

abstract = "Large Multimodal Models (LMMs) excel in natural language and visual understanding but are challenged by exacting tasks such as Knowledge-based Visual Question Answering (KB-VQA) which involve the retrieval of relevant information from document collections to use in shaping answers to questions. We present an extensive training and evaluation framework, M2KR, for KB-VQA. M2KR contains a collection of vision and language tasks which we have incorporated into a single suite of benchmark tasks for training and evaluating general-purpose multi-modal retrievers. We use M2KR to develop PreFLMR, a pre-trained version of the recently developed Fine-grained Late-interaction Multi-modal Retriever (FLMR) approach to KB-VQA, and we report new state-of-the-art results across a range of tasks. We also present investigations into the scaling behaviors of PreFLMR intended to be useful in future developments in general-purpose multi-modal retrievers.",

}