DALE

1.0.0

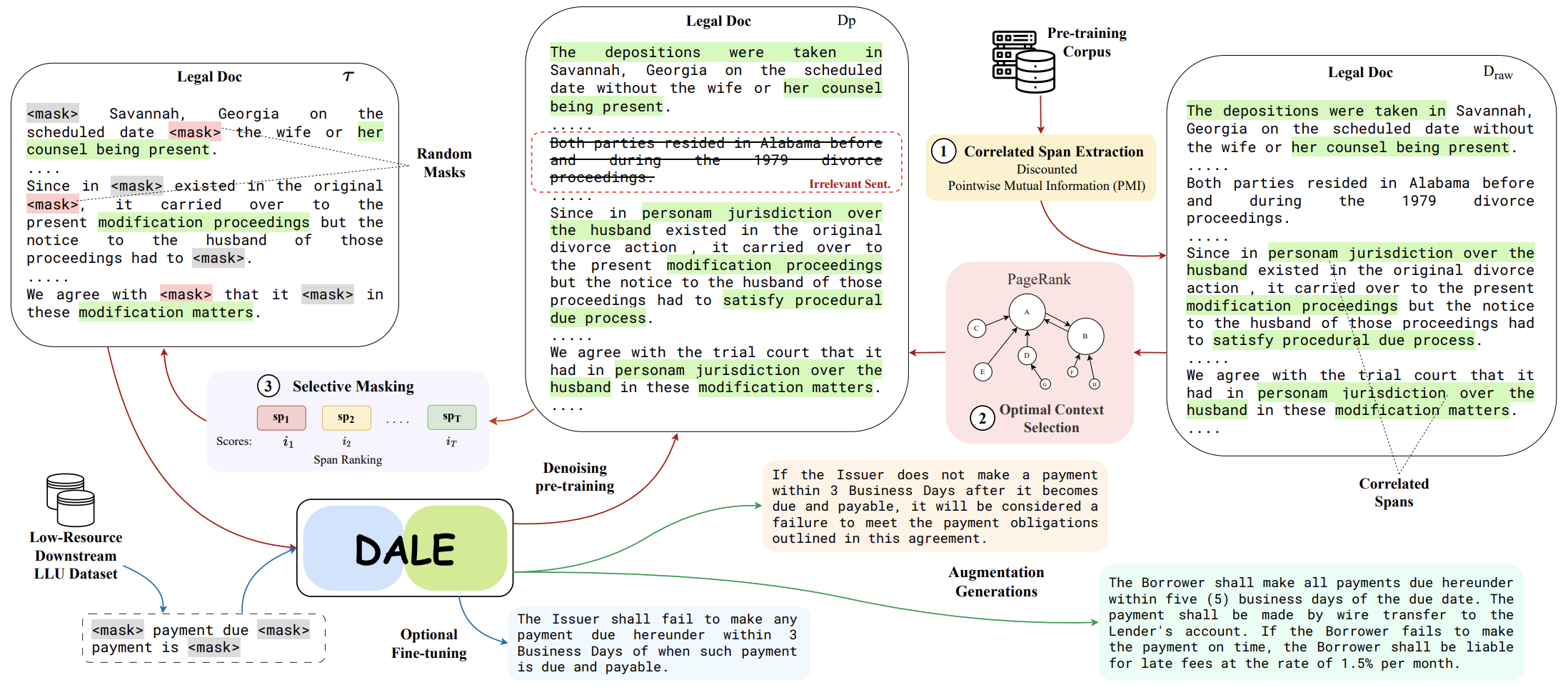

EMNLP 2023 논문 구현: DALE: 저자원 법률 NLP를 위한 생성 데이터 확대.

DALE 사전 훈련된 bart-large 모델은 여기에서 찾을 수 있습니다. 훈련 전 데이터는 여기에서 확인할 수 있습니다.

단계:

다음을 사용하여 종속성을 설치합니다.

pip install -r requirements.txt

필요한 파일을 실행하세요.

PMI 마스킹의 경우:

cd pmi/

sh pmi.sh <config_name> <dataset_path> <output_path> <n_gram_value> <pmi_cut_off>

sh pmi.sh unfair_tos ./unfair_tos ./output_path 3 95

BART 사전 교육의 경우:

cd bart_pretrain/

python pretrain.py --ckpt_path ./ckpt_path

--dataset_path ./dataset_path>

--max_input_length 1024

--max_target_length 1024

--batch_size 4

--num_train_epochs 10

--logging_steps 100

--save_steps 1000

--output_dir ./output_path

BART 생성의 경우:

cd bart_generation/

python bart_ctx_augs.py --dataset_name "scotus"

--path ./dataset_path

--dest_path ./dest_path

--n_augs 5

--batch_size 4

--model_path ./model_path

bart_ctx_augs.py -> BART generation for multi-class data generation.

bart_ctx_augs_multi.py -> BART generation for multi-label data generation.

bart_ctx_augs_ch.py -> BART generation for casehold dataset.

우리 문서/코드/데모가 유용하다고 생각되면 우리 문서를 인용해 주세요.

@inproceedings{ghosh-etal-2023-dale,

title = "DALE: Generative Data Augmentation for Low-Resource Legal NLP",

author = "Sreyan Ghosh and

Chandra Kiran Evuru and

Sonal Kumar and

S Ramaneswaran and

S Sakshi and

Utkarsh Tyagi and

Dinesh Manocha",

booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2023",

address = "Sentosa, Singapore",

abstract = "We present DALE, a novel and effective generative Data Augmentation framework for lowresource LEgal NLP. DALE addresses the challenges existing frameworks pose in generating effective data augmentations of legal documents - legal language, with its specialized vocabulary and complex semantics, morphology, and syntax, does not benefit from data augmentations that merely rephrase the source sentence. To address this, DALE, built on an EncoderDecoder Language Model, is pre-trained on a novel unsupervised text denoising objective based on selective masking - our masking strategy exploits the domain-specific language characteristics of templatized legal documents to mask collocated spans of text. Denoising these spans help DALE acquire knowledge about legal concepts, principles, and language usage. Consequently, it develops the ability to generate coherent and diverse augmentations with novel contexts. Finally, DALE performs conditional generation to generate synthetic augmentations for low-resource Legal NLP tasks. We demonstrate the effectiveness of DALE on 13 datasets spanning 6 tasks and 4 low-resource settings. DALE outperforms all our baselines, including LLMs, qualitatively and quantitatively, with improvements of 1%-50%."

}