toolformer pytorch

0.0.30

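An implementation of Toolformer, MetaAI's language model that can use tools

Thanks to Stability.ai for the generous sponsorship to work on and open-source cutting-edge artificial intelligence research.

Thanks to Enrico for getting the ball rolling with the initial commit of different tools!

Thanks to ChatGPT for writing all the regular expressions in this repository that parse the functions and parameters of the API calls. I am terrible at regular expressions, so this was an enormous help from the AI (it worked perfectly, without a hitch).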

$ pip install toolformer-pytorch

Example usage, giving a language model awareness of the current date and time.

import torch
from toolformer_pytorch import Toolformer, PaLM

# simple calendar api call - function that returns a string

def Calendar():
    import datetime
    from calendar import day_name, month_name
    now = datetime.datetime.now()
    return f'Today is {day_name[now.weekday()]}, {month_name[now.month]} {now.day}, {now.year}.'

# prompt for teaching it to use the Calendar function from above

prompt = f"""
Your task is to add calls to a Calendar API to a piece of text.
The API calls should help you get information required to complete the text.
You can call the API by writing "[Calendar()]"
Here are some examples of API calls:
Input: Today is the first Friday of the year.
Output: Today is the first [Calendar()] Friday of the year.
Input: The president of the United States is Joe Biden.
Output: The president of the United States is [Calendar()] Joe Biden.
Input: [input]
Output:
"""

data = [
    "The store is never open on the weekend, so today it is closed.",
    "The number of days from now until Christmas is 30",
    "The current day of the week is Wednesday."
]

# model - here using PaLM, but any nn.Module that returns logits in the shape (batch, seq, num_tokens) is fine

model = PaLM(
    dim = 512,
    depth = 2,
    heads = 8,
    dim_head = 64
).cuda()

# toolformer

toolformer = Toolformer(
    model = model,
    model_seq_len = 256,
    teach_tool_prompt = prompt,
    tool_id = 'Calendar',
    tool = Calendar,
    finetune = True
)

# invoking this will
# (1) prompt the model with your inputs (data), inserted into [input] tag
# (2) with the sampled outputs, filter out the ones that made proper API calls
# (3) execute the API calls with the `tool` given
# (4) filter with the specialized filter function (which can be used independently as shown in the next section)
# (5) fine-tune on the filtered results

filtered_stats = toolformer(data)

# then, once you see the 'finetune complete' message

response = toolformer.sample_model_with_api_calls("How many days until the next new years?")

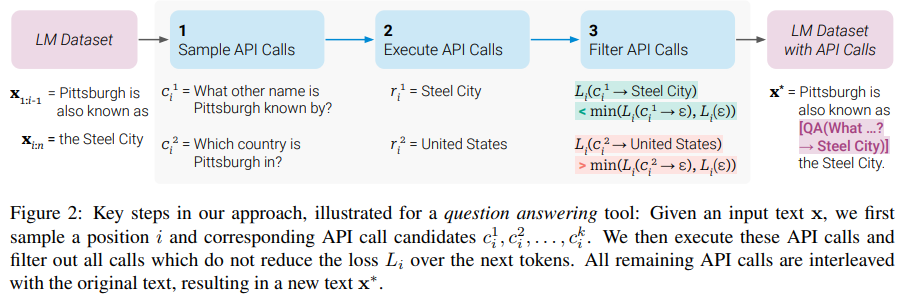

# hopefully you see it invoke the calendar and utilize the response of the api call...이 논문의 주요 참신함은 API 호출을 삽입하도록 지시된 변환기의 출력에 대한 적합성 점수를 정의하는 것입니다. 점수는 변환기를 미세 조정하여 뒤따르는 텍스트의 복잡성을 줄이는 API 호출을 만들기 위해 샘플링된 출력을 필터링하는 데 사용됩니다.
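The scoring idea can be sketched in plain Python, independently of the library. This is a minimal illustration of the criterion as described in the Toolformer paper, not the code in this repository: an API call is kept when the weighted loss on the following tokens, given the call and its response, is lower by at least a threshold than the better of two baselines (no call at all, or the call without its response). The function names, the toy probabilities, and the decay value of 0.2 are assumptions made for the example.

```python
import math

def position_weights(n, decay=0.2):
    """Weights proportional to max(0, 1 - decay * t), normalised to sum to 1.

    Tokens right after the API call position count most (assumed schedule).
    """
    if n <= 0:
        raise ValueError("need at least one following token")
    raw = [max(0.0, 1.0 - decay * t) for t in range(n)]
    total = sum(raw)
    return [r / total for r in raw]

def weighted_nll(token_probs, decay=0.2):
    """Weighted negative log-likelihood of the tokens that follow the call position."""
    weights = position_weights(len(token_probs), decay)
    return -sum(w * math.log(p) for w, p in zip(weights, token_probs))

def keep_api_call(probs_plain, probs_call_only, probs_call_with_response, threshold=1.0):
    """Keep the call only if seeing its response lowers the loss by at least `threshold`."""
    loss_plus = weighted_nll(probs_call_with_response)
    loss_minus = min(weighted_nll(probs_plain), weighted_nll(probs_call_only))
    return (loss_minus - loss_plus) >= threshold

# Toy probabilities the model assigns to the next three tokens (made-up numbers).
plain = [0.05, 0.10, 0.20]        # no API call in the context
call_only = [0.05, 0.10, 0.20]    # API call present, response withheld
helpful = [0.60, 0.70, 0.80]      # API call and response present, tokens much likelier
unhelpful = [0.06, 0.10, 0.20]    # response barely changes anything

print(keep_api_call(plain, call_only, helpful))    # True
print(keep_api_call(plain, call_only, unhelpful))  # False
```

The three probability lists play roughly the role of `tokens`, `tokens_without_api_response`, and `tokens_with_api_response` in the library example that follows, and `threshold` the role of `filter_threshold`; that correspondence is an interpretation rather than something the source states.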

import torch

from toolformer_pytorch import (
    Toolformer,
    PaLM,
    filter_tokens_with_api_response
)

# model

palm = PaLM(
    dim = 512,
    num_tokens = 20000,
    depth = 2,
    heads = 8,
    dim_head = 64
).cuda()

# mock some tokens

mock_start_pos = 512
mock_api_call_length = 10
mock_api_start_id = 19998
mock_api_stop_id = 19999

tokens = torch.randint(0, 20000, (10, 1024)).cuda()
tokens_with_api_response = torch.randint(0, 20000, (10, 1024)).cuda()
tokens_without_api_response = torch.randint(0, 20000, (10, 1024)).cuda()

tokens_with_api_response[:, mock_start_pos] = mock_api_start_id
tokens_with_api_response[:, mock_start_pos + mock_api_call_length] = mock_api_stop_id

tokens_without_api_response[:, mock_start_pos] = mock_api_start_id
tokens_without_api_response[:, mock_start_pos + mock_api_call_length] = mock_api_stop_id

# filter

filtered_results = filter_tokens_with_api_response(
    model = palm,
    tokens = tokens,
    tokens_with_api_response = tokens_with_api_response,
    tokens_without_api_response = tokens_without_api_response,
    filter_threshold = 1.,
    api_start_token_id = mock_api_start_id,
    api_end_token_id = mock_api_stop_id
)

To invoke the tools on a string generated by the language model, use invoke_tools.
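Before the library example, here is a rough picture of what invoking tools from text involves: find bracketed calls, look up the function by name, run it, and write the result back next to the call. The sketch below is a hypothetical stand-in, not the regular expressions used in this repository; the pattern, the `invoke_tools_sketch` name, and the integer-only argument parsing are all assumptions made to keep it short.

```python
import re

# Assumed call syntax: "[name(args)]", with args a comma-separated list of integers.
CALL_PATTERN = re.compile(r"\[(\w+)\(([^()\[\]]*)\)\]")

def invoke_tools_sketch(registry, text, delimiter="→"):
    """Rewrite each registered [fn(args)] as [fn(args) → result]; leave everything else alone."""
    def replace(match):
        name, raw_args = match.group(1), match.group(2)
        fn = registry.get(name)
        if fn is None:
            return match.group(0)  # unknown tool: keep the original text
        try:
            args = [int(a) for a in raw_args.split(",") if a.strip()]
            result = fn(*args)
        except Exception:
            return match.group(0)  # malformed arguments or failing tool: keep the original text
        return f"[{name}({raw_args}) {delimiter} {result}]"
    return CALL_PATTERN.sub(replace, text)

registry = {"inc": lambda i: i + 1, "dec": lambda i: i - 1}
text = "make the following api calls: [inc(1)] and [dec(2)] and [ignored(3)]"
result = invoke_tools_sketch(registry, text)
print(result)
# make the following api calls: [inc(1) → 2] and [dec(2) → 1] and [ignored(3)]
```

Leaving unregistered or failing calls untouched, rather than raising, mirrors the behaviour visible in the library example's output, where `[ignored(3)]` passes through unchanged.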

from toolformer_pytorch import invoke_tools

def inc(i):
    return i + 1

def dec(i):
    return i - 1

function_registry = dict(
    inc = inc,
    dec = dec
)

text = 'make the following api calls: [inc(1)] and [dec(2)] and [ignored(3)]'

invoke_tools(function_registry, text)

# make the following api calls: [inc(1) → 2] and [dec(2) → 1] and [ignored(3)]

Still to do: run end-to-end training in Toolformer, and make sure the final model, trained on many Toolformer instances, can be invoked with multiple tools, starting with a batch size of 1 and working up.

@inproceedings{Schick2023ToolformerLM,
    title   = {Toolformer: Language Models Can Teach Themselves to Use Tools},
    author  = {Timo Schick and Jane Dwivedi-Yu and Roberto Dessi and Roberta Raileanu and Maria Lomeli and Luke Zettlemoyer and Nicola Cancedda and Thomas Scialom},
    year    = {2023}
}

@article{Gao2022PALPL,
    title   = {PAL: Program-aided Language Models},
    author  = {Luyu Gao and Aman Madaan and Shuyan Zhou and Uri Alon and Pengfei Liu and Yiming Yang and Jamie Callan and Graham Neubig},
    journal = {ArXiv},
    year    = {2022},
    volume  = {abs/2211.10435}
}

Reality is that which, when you stop believing in it, doesn't go away. – Philip K. Dick