Pixel-level Contrastive Learning

0.1.1

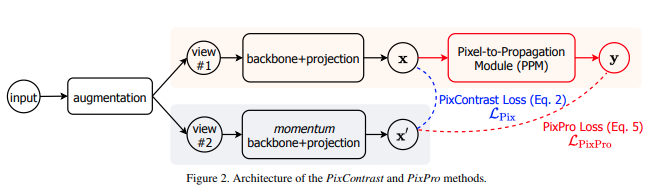

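Implementation of Pixel-level Contrastive Learning, proposed in the paper "Propagate Yourself", in PyTorch. In addition to doing contrastive learning at the pixel level, the online network also passes the pixel-level representations to a Pixel Propagation Module and enforces a similarity loss against the target network. The authors report that this outperforms all previous unsupervised and supervised methods on segmentation tasks.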
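The paragraph above compresses three pieces: deciding which pixels of the two augmented views form positive pairs, the pixel propagation module (PPM), and the similarity (PixPro) loss against the target network. The following is a minimal PyTorch sketch of those pieces as the paper describes them, not the `pixel_level_contrastive_learning` package's own code. All names (`PixelPropagationModule`, `pixel_centers`, `positive_pair_mask`, `pixpro_loss`) are hypothetical. It assumes each view is described by a crop box in original-image coordinates, normalizes pixel distances by the larger of the two views' feature-bin diagonals (the paper's exact normalization is unclear), and omits the projection heads, the momentum-updated target encoder and the instance-level loss.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


class PixelPropagationModule(nn.Module):
    """Hypothetical PPM sketch: y_i = sum_j s(x_i, x_j) * g(x_j)."""

    def __init__(self, dim: int, gamma: float = 2.0):
        super().__init__()
        self.gamma = gamma  # sharpness of the similarity, like ppm_gamma
        # g: a single per-pixel linear layer (1x1 conv), mirroring ppm_num_layers = 1
        self.transform = nn.Conv2d(dim, dim, kernel_size=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, h, w = x.shape                               # x: online pixel features
        flat = F.normalize(x.flatten(2), dim=1)            # (b, c, h*w), unit norm per pixel
        sim = torch.einsum('bci,bcj->bij', flat, flat)     # cosine similarity of every pixel pair
        sim = sim.clamp(min=0) ** self.gamma               # s = max(cos, 0) ** gamma
        g = self.transform(x).flatten(2)                   # (b, c, h*w)
        y = torch.einsum('bij,bcj->bci', sim, g)           # similarity-weighted sum over j
        return y.reshape(b, c, h, w)


def pixel_centers(box, h, w):
    """Centers of an h x w feature grid laid over box = (x0, y0, x1, y1) in original-image coords."""
    x0, y0, x1, y1 = box
    ys = y0 + (torch.arange(h) + 0.5) * (y1 - y0) / h
    xs = x0 + (torch.arange(w) + 0.5) * (x1 - x0) / w
    grid_y, grid_x = torch.meshgrid(ys, xs, indexing='ij')
    return torch.stack((grid_x, grid_y), dim=-1).reshape(-1, 2)  # (h*w, 2)


def positive_pair_mask(box1, box2, h, w, thres=0.7):
    """mask[i, j] is True when pixel i of view 1 and pixel j of view 2 are closer than thres."""
    c1, c2 = pixel_centers(box1, h, w), pixel_centers(box2, h, w)
    dist = (c1[:, None, :] - c2[None, :, :]).norm(dim=-1)       # (h*w, h*w) distances
    # assumption: normalize by the larger of the two views' feature-bin diagonals
    diag1 = math.hypot((box1[2] - box1[0]) / w, (box1[3] - box1[1]) / h)
    diag2 = math.hypot((box2[2] - box2[0]) / w, (box2[3] - box2[1]) / h)
    return dist / max(diag1, diag2) < thres


def pixpro_loss(y1, y2, t1, t2, mask):
    """-cos(y_i, x'_j) - cos(y_j, x'_i), averaged over positive pairs (i in view 1, j in view 2).

    y1, y2: online PPM outputs; t1, t2: target features; all shaped (b, c, h*w).
    """
    y1, y2 = F.normalize(y1, dim=1), F.normalize(y2, dim=1)
    t1, t2 = F.normalize(t1.detach(), dim=1), F.normalize(t2.detach(), dim=1)  # no grad to target
    m = mask.float()
    num_pos = m.sum().clamp(min=1)                 # avoid division by zero when no pairs exist
    cos_12 = torch.einsum('bci,bcj->bij', y1, t2)  # cos(y_i, x'_j)
    cos_21 = torch.einsum('bci,bcj->bij', t1, y2)  # cos(x'_i, y_j)
    loss = -((cos_12 * m).sum(dim=(1, 2)) + (cos_21 * m).sum(dim=(1, 2))) / num_pos
    return loss.mean()


if __name__ == "__main__":
    torch.manual_seed(0)
    ppm = PixelPropagationModule(dim=16)
    on1, on2 = torch.randn(2, 16, 8, 8), torch.randn(2, 16, 8, 8)  # stand-ins for online features
    tg1, tg2 = torch.randn(2, 16, 8, 8), torch.randn(2, 16, 8, 8)  # stand-ins for target features
    mask = positive_pair_mask((0, 0, 200, 200), (56, 56, 256, 256), 8, 8)
    loss = pixpro_loss(ppm(on1).flatten(2), ppm(on2).flatten(2), tg1.flatten(2), tg2.flatten(2), mask)
    print(int(mask.sum()), loss.item())
```

With a threshold of 0.7 and identical views, horizontally or vertically adjacent pixels sit about 0.707 bin diagonals apart, so only each pixel and its exact counterpart count as positive; overlapping but shifted crops produce a sparser, offset set of pairs, and disjoint crops produce none.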

```bash
$ pip install pixel-level-contrastive-learning
```

Below is an example of how you would use the framework to self-supervise training of a ResNet, taking the output of layer 4 (8 x 8 "pixels").

```python
import torch
from pixel_level_contrastive_learning import PixelCL
from torchvision import models
from tqdm import tqdm

resnet = models.resnet50(pretrained = True)

learner = PixelCL(
    resnet,
    image_size = 256,
    hidden_layer_pixel = 'layer4',   # leads to output of 8x8 feature map for pixel-level learning
    hidden_layer_instance = -2,      # leads to output for instance-level learning
    projection_size = 256,           # size of projection output, 256 was used in the paper
    projection_hidden_size = 2048,   # size of projection hidden dimension, paper used 2048
    moving_average_decay = 0.99,     # exponential moving average decay of target encoder
    ppm_num_layers = 1,              # number of layers for transform function in the pixel propagation module, 1 was optimal
    ppm_gamma = 2,                   # sharpness of the similarity in the pixel propagation module, already at optimal value of 2
    distance_thres = 0.7,            # ideal value is 0.7, as indicated in the paper, which assumes each feature map pixel's diagonal distance is 1 (still unclear)
    similarity_temperature = 0.3,    # temperature for the cosine similarity for the pixel contrastive loss
    alpha = 1.,                      # weight of the pixel propagation loss (pixpro) vs pixel CL loss
    use_pixpro = True,               # use the pixel propagation loss instead of the pixel contrastive loss; defaults to pixpro, since it performed best
    cutout_ratio_range = (0.6, 0.8)  # a random ratio is selected from this range for the random cutout
).cuda()

opt = torch.optim.Adam(learner.parameters(), lr = 1e-4)

def sample_batch_images():
    # placeholder: random tensors stand in for a real image loader
    return torch.randn(10, 3, 256, 256).cuda()

for _ in tqdm(range(100000)):
    images = sample_batch_images()
    loss = learner(images)  # if there are no positive pixel pairs, the loss equals the instance-level loss
    opt.zero_grad()
    loss.backward()
    print(loss.item())
    opt.step()
    learner.update_moving_average()  # update moving average of target encoder

# after much training, save the improved model for testing on downstream task
torch.save(resnet, 'improved-resnet.pt')
```

You can also return the number of positive pixel pairs on `forward`, for logging or other purposes.

```python
loss, positive_pairs = learner(images, return_positive_pairs = True)
```

```bibtex
@misc{xie2020propagate,
    title         = {Propagate Yourself: Exploring Pixel-Level Consistency for Unsupervised Visual Representation Learning},
    author        = {Zhenda Xie and Yutong Lin and Zheng Zhang and Yue Cao and Stephen Lin and Han Hu},
    year          = {2020},
    eprint        = {2011.10043},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}
```