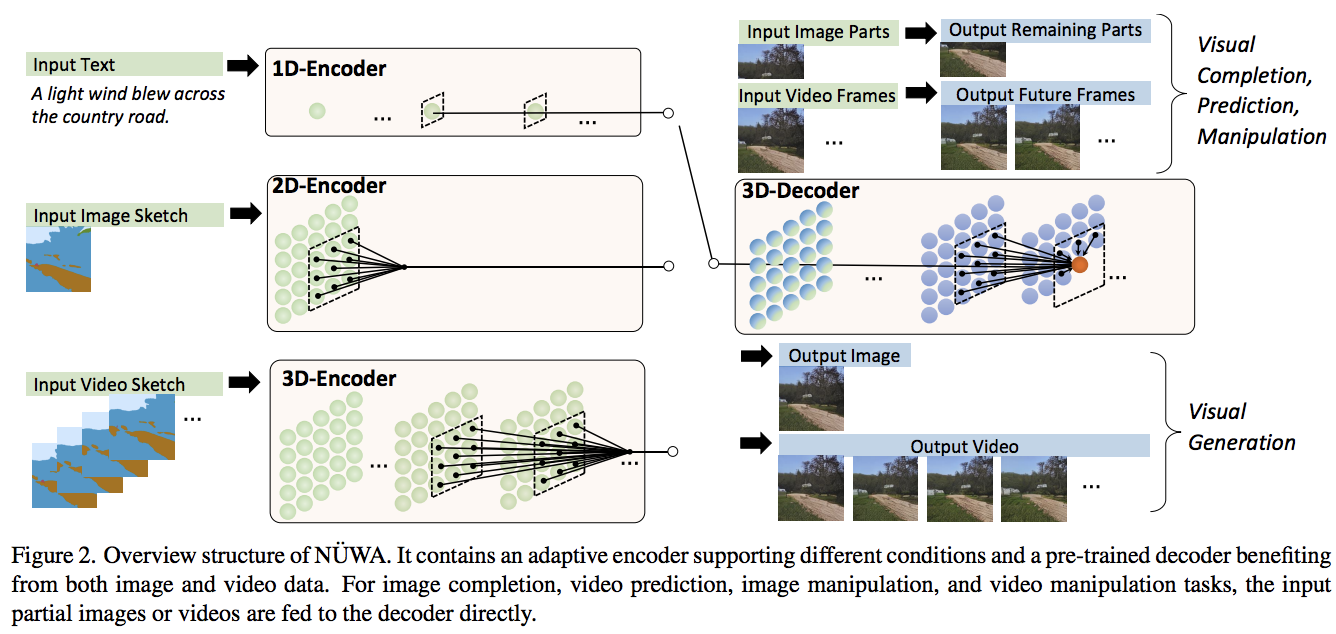

Implementation of NÜWA, a state-of-the-art attention network for text-to-video synthesis, in Pytorch. It also contains an extension into video and audio generation, using a dual decoder approach.

Yannic Kilcher

DeepReader

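March 2022 - Seeing signs of life with a difficult version of Moving MNIST.

April 2022 - It seems a diffusion-based method has taken the new throne for SOTA. However, I will continue with NUWA, extending it to use multi-headed codes + a hierarchical causal transformer. I think that direction is still untapped for improving on this line of work.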

```bash
$ pip install nuwa-pytorch
```

First, train the VAE:

```python
import torch
from nuwa_pytorch import VQGanVAE

vae = VQGanVAE(
    dim = 512,
    channels = 3,               # default is 3, but can be changed to any value for the training of the segmentation masks (sketches)
    image_size = 256,           # image size
    num_layers = 4,             # number of downsampling layers
    num_resnet_blocks = 2,      # number of resnet blocks
    vq_codebook_size = 8192,    # codebook size
    vq_decay = 0.8              # codebook exponential decay
)

imgs = torch.randn(10, 3, 256, 256)

# alternate learning for autoencoder ...

loss = vae(imgs, return_loss = True)
loss.backward()

# and the discriminator ...

discr_loss = vae(imgs, return_discr_loss = True)
discr_loss.backward()

# do above for many steps

# return reconstructed images and make sure they look ok

recon_imgs = vae(imgs)
```

Then, with your learned VAE:

```python
import torch
from nuwa_pytorch import NUWA, VQGanVAE

# autoencoder

vae = VQGanVAE(
    dim = 64,
    num_layers = 4,
    image_size = 256,
    num_resnet_blocks = 2,
    vq_codebook_size = 8192
)

# NUWA transformer

nuwa = NUWA(
    vae = vae,
    dim = 512,
    text_num_tokens = 20000,                # number of text tokens
    text_enc_depth = 12,                    # text encoder depth
    text_enc_heads = 8,                     # number of attention heads for encoder
    text_max_seq_len = 256,                 # max sequence length of text conditioning tokens (keep at 256 as in paper, or shorter, if your text is not that long)
    max_video_frames = 10,                  # number of video frames
    image_size = 256,                       # size of each frame of video
    dec_depth = 64,                         # video decoder depth
    dec_heads = 8,                          # number of attention heads in decoder
    dec_reversible = True,                  # reversible networks - from reformer, decoupling memory usage from depth
    enc_reversible = True,                  # reversible encoders, if you need it
    attn_dropout = 0.05,                    # dropout for attention
    ff_dropout = 0.05,                      # dropout for feedforward
    sparse_3dna_kernel_size = (5, 3, 3),    # kernel size of the sparse 3dna attention. can be a single value for frame, height, width, or different values (to simulate axial attention, etc)
    sparse_3dna_dilation = (1, 2, 4),       # cycle dilation of 3d conv attention in decoder, for more range
    shift_video_tokens = True               # cheap relative positions for sparse 3dna transformer, by shifting along spatial dimensions by one
).cuda()

# data

text = torch.randint(0, 20000, (1, 256)).cuda()
video = torch.randn(1, 10, 3, 256, 256).cuda()  # (batch, frames, channels, height, width)

loss = nuwa(
    text = text,
    video = video,
    return_loss = True  # set this to True, only for training, to return cross entropy loss
)

loss.backward()

# do above with as much data as possible

# then you can generate a video from text

video = nuwa.generate(text = text, num_frames = 5)  # (1, 5, 3, 256, 256)
```

In the paper, they also present a way to condition the video generation on segmentation masks. You can do this easily, given you train a VQGanVAE on the sketches beforehand.
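As the `channels` comment on `VQGanVAE` notes, a sketch VAE is trained on segmentation masks with one channel per class. If your masks are stored as integer class ids, they need converting first. Below is a minimal, dependency-free sketch of that conversion, assuming a one-hot encoding and that every mask frame is a `height x width` grid of ids in `[0, num_classes)`; `mask_to_one_hot` and `sketch_clip_to_one_hot` are hypothetical helpers, not part of nuwa-pytorch.

```python
from typing import List

Mask = List[List[int]]             # (height, width) grid of integer class ids
OneHot = List[List[List[float]]]   # (classes, height, width) binary channels


def mask_to_one_hot(mask: Mask, num_classes: int) -> OneHot:
    """Convert one integer class-id mask into one binary channel per class."""
    height, width = len(mask), len(mask[0])
    channels = [[[0.0] * width for _ in range(height)] for _ in range(num_classes)]
    for y, row in enumerate(mask):
        for x, class_id in enumerate(row):
            if not 0 <= class_id < num_classes:
                raise ValueError(f"class id {class_id} is outside [0, {num_classes})")
            channels[class_id][y][x] = 1.0
    return channels


def sketch_clip_to_one_hot(frames: List[Mask], num_classes: int) -> List[OneHot]:
    """(frames, height, width) class ids -> (frames, classes, height, width) one-hot."""
    return [mask_to_one_hot(frame, num_classes) for frame in frames]


# usage: a 2-frame clip of 2x2 masks with 5 classes (one channel per class)
clip = [[[0, 1], [4, 4]], [[2, 2], [3, 0]]]
one_hot_clip = sketch_clip_to_one_hot(clip, num_classes=5)
```

For batched tensors, the same `(batch, frames, classes, height, width)` layout should be obtainable in PyTorch with `torch.nn.functional.one_hot(mask, num_classes).permute(0, 1, 4, 2, 3).float()` on an int64 mask of shape `(batch, frames, height, width)`.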

Then, you will use NUWASketch instead of NUWA, which can accept the sketch VAE as a reference.

Ex.

```python
import torch
from nuwa_pytorch import NUWASketch, VQGanVAE

# autoencoder, one for main video, the other for the sketch

vae = VQGanVAE(
    dim = 64,
    num_layers = 4,
    image_size = 256,
    num_resnet_blocks = 2,
    vq_codebook_size = 8192
)

sketch_vae = VQGanVAE(
    dim = 512,
    channels = 5,               # say the sketch has 5 classes
    num_layers = 4,
    image_size = 256,
    num_resnet_blocks = 2,
    vq_codebook_size = 8192
)

# NUWA transformer for conditioning with sketches

nuwa = NUWASketch(
    vae = vae,
    sketch_vae = sketch_vae,
    dim = 512,                              # model dimensions
    sketch_enc_depth = 12,                  # sketch encoder depth
    sketch_enc_heads = 8,                   # number of attention heads for sketch encoder
    sketch_max_video_frames = 3,            # max number of frames for sketches
    sketch_enc_use_sparse_3dna = True,      # whether to use 3d-nearby attention (or full attention if False) for sketch encoding transformer
    max_video_frames = 10,                  # number of video frames
    image_size = 256,                       # size of each frame of video
    dec_depth = 64,                         # video decoder depth
    dec_heads = 8,                          # number of attention heads in decoder
    dec_reversible = True,                  # reversible networks - from reformer, decoupling memory usage from depth
    enc_reversible = True,                  # reversible encoders, if you need it
    attn_dropout = 0.05,                    # dropout for attention
    ff_dropout = 0.05,                      # dropout for feedforward
    sparse_3dna_kernel_size = (5, 3, 3),    # kernel size of the sparse 3dna attention. can be a single value for frame, height, width, or different values (to simulate axial attention, etc)
    sparse_3dna_dilation = (1, 2, 4),       # cycle dilation of 3d conv attention in decoder, for more range
    cross_2dna_kernel_size = 5,             # 2d kernel size of spatial grouping of attention from video frames to sketches
    cross_2dna_dilation = 1,                # 2d dilation of spatial attention from video frames to sketches
    shift_video_tokens = True               # cheap relative positions for sparse 3dna transformer, by shifting along spatial dimensions by one
).cuda()

# data

sketch = torch.randn(2, 2, 5, 256, 256).cuda()  # (batch, frames, segmentation classes, height, width)
sketch_mask = torch.ones(2, 2).bool().cuda()    # (batch, frames) [Optional]
video = torch.randn(2, 10, 3, 256, 256).cuda()  # (batch, frames, channels, height, width)

loss = nuwa(
    sketch = sketch,
    sketch_mask = sketch_mask,
    video = video,
    return_loss = True  # set this to True, only for training, to return cross entropy loss
)

loss.backward()

# do above with as much data as possible

# then you can generate a video from sketch(es)

video = nuwa.generate(sketch = sketch, num_frames = 5)  # (2, 5, 3, 256, 256)
```

This repository also offers a variant of NUWA that can produce both video and audio. For now, the audio will need to be encoded manually.
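In the `NUWAVideoAudio` usage example, audio is passed as a flat sequence of discrete token ids that is `num_audio_tokens_per_video_frame * max_video_frames` long (`32 * 10`), with every id below `num_audio_tokens`; generating 5 frames returns `32 * 5 == 160` tokens. The sketch below shows one way to assemble and check such a sequence. It assumes you already have per-frame codes from an audio tokenizer of your own (none is provided here) and that tokens are ordered frame by frame; all function names are hypothetical.

```python
from typing import List, Sequence


def expected_audio_len(tokens_per_frame: int, num_frames: int) -> int:
    """Length of the flat audio token sequence that accompanies num_frames video frames."""
    return tokens_per_frame * num_frames


def flatten_audio_codes(per_frame: Sequence[Sequence[int]], tokens_per_frame: int, codebook_size: int) -> List[int]:
    """Concatenate per-frame audio codes frame by frame, validating count and id range."""
    flat: List[int] = []
    for i, codes in enumerate(per_frame):
        if len(codes) != tokens_per_frame:
            raise ValueError(f"frame {i}: expected {tokens_per_frame} codes, got {len(codes)}")
        for code in codes:
            if not 0 <= code < codebook_size:
                raise ValueError(f"frame {i}: code {code} is outside [0, {codebook_size})")
        flat.extend(codes)
    return flat


def split_audio_by_frame(flat: Sequence[int], tokens_per_frame: int) -> List[List[int]]:
    """Inverse of flatten_audio_codes: regroup a flat token sequence by video frame."""
    if len(flat) % tokens_per_frame != 0:
        raise ValueError(f"length {len(flat)} is not a multiple of {tokens_per_frame}")
    return [list(flat[i:i + tokens_per_frame]) for i in range(0, len(flat), tokens_per_frame)]


# usage with the numbers from the example: 32 tokens per frame
assert expected_audio_len(32, 10) == 320  # training sequence for 10 frames
assert expected_audio_len(32, 5) == 160   # length returned when generating 5 frames
```

Validating ids against the codebook size up front gives a clearer error than an out-of-range embedding lookup deep inside the model.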

```python
import torch
from nuwa_pytorch import NUWAVideoAudio, VQGanVAE

# autoencoder

vae = VQGanVAE(
    dim = 64,
    num_layers = 4,
    image_size = 256,
    num_resnet_blocks = 2,
    vq_codebook_size = 100
)

# NUWA transformer

nuwa = NUWAVideoAudio(
    vae = vae,
    dim = 512,
    num_audio_tokens = 2048,                # codebook size for audio tokens
    num_audio_tokens_per_video_frame = 32,  # number of audio tokens per video frame
    cross_modality_attn_every = 3,          # cross modality attention every N layers
    text_num_tokens = 20000,                # number of text tokens
    text_enc_depth = 1,                     # text encoder depth
    text_enc_heads = 8,                     # number of attention heads for encoder
    text_max_seq_len = 256,                 # max sequence length of text conditioning tokens (keep at 256 as in paper, or shorter, if your text is not that long)
    max_video_frames = 10,                  # number of video frames
    image_size = 256,                       # size of each frame of video
    dec_depth = 4,                          # video decoder depth
    dec_heads = 8,                          # number of attention heads in decoder
    enc_reversible = True,                  # reversible encoders, if you need it
    dec_reversible = True,                  # quad-branched reversible network, making memory usage of the twin video / audio decoder independent of network depth. recommended to be turned on unless you have a ton of memory at your disposal
    attn_dropout = 0.05,                    # dropout for attention
    ff_dropout = 0.05,                      # dropout for feedforward
    sparse_3dna_kernel_size = (5, 3, 3),    # kernel size of the sparse 3dna attention. can be a single value for frame, height, width, or different values (to simulate axial attention, etc)
    sparse_3dna_dilation = (1, 2, 4),       # cycle dilation of 3d conv attention in decoder, for more range
    shift_video_tokens = True               # cheap relative positions for sparse 3dna transformer, by shifting along spatial dimensions by one
).cuda()

# data

text = torch.randint(0, 20000, (1, 256)).cuda()
audio = torch.randint(0, 2048, (1, 32 * 10)).cuda()  # (batch, audio tokens per frame * max video frames)
video = torch.randn(1, 10, 3, 256, 256).cuda()       # (batch, frames, channels, height, width)

loss = nuwa(
    text = text,
    video = video,
    audio = audio,
    return_loss = True  # set this to True, only for training, to return cross entropy loss
)

loss.backward()

# do above with as much data as possible

# then you can generate a video (and its audio) from text

video, audio = nuwa.generate(text = text, num_frames = 5)  # (1, 5, 3, 256, 256), (1, 32 * 5 == 160)
```

This library provides some utilities to make training easier. For starters, you can use the `VQGanVAETrainer` class to take care of training the `VQGanVAE`. Simply wrap the model and pass in the image folder path along with the various training hyperparameters.

```python
import torch
from nuwa_pytorch import VQGanVAE, VQGanVAETrainer

vae = VQGanVAE(
    dim = 64,
    image_size = 256,
    num_layers = 5,
    vq_codebook_size = 1024,
    vq_use_cosine_sim = True,
    vq_codebook_dim = 32,
    vq_orthogonal_reg_weight = 10,
    vq_orthogonal_reg_max_codes = 128,
).cuda()

trainer = VQGanVAETrainer(
    vae,                         # VAE defined above
    folder = '/path/to/images',  # path to images
    lr = 3e-4,                   # learning rate
    num_train_steps = 100000,    # number of training steps
    batch_size = 8,              # batch size
    grad_accum_every = 4         # gradient accumulation (effective batch size is batch_size x grad_accum_every)
)

trainer.train()

# results and model checkpoints will be saved periodically to ./results
```

To train NUWA, you first need to organize a folder of `.gif` files, each with a corresponding `.txt` file containing its caption. For example:

```
video-and-text-data
┣ cat.gif
┣ cat.txt
┣ dog.gif
┣ dog.txt
┣ turtle.gif
┗ turtle.txt
```
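Before training, it can help to check that every `.gif` has a caption file with the same stem. The sketch below does that with `pathlib`; it assumes the pairing rule is simply matching file stems, as in the tree above, and `pair_gifs_with_captions` is a hypothetical helper, not the loader `GifVideoDataset` uses internally.

```python
from pathlib import Path
from typing import List, Tuple


def pair_gifs_with_captions(folder: str) -> Tuple[List[Tuple[Path, str]], List[Path]]:
    """Pair each .gif in folder with the caption in the .txt file sharing its stem.

    Returns (pairs, missing): pairs holds (gif_path, caption) tuples and
    missing lists .gif files that have no caption file.
    """
    pairs: List[Tuple[Path, str]] = []
    missing: List[Path] = []
    # pathlib's glob is case-sensitive on POSIX systems, so "*.GIF" files are skipped there
    for gif in sorted(Path(folder).glob("*.gif")):
        caption_file = gif.with_suffix(".txt")
        if caption_file.is_file():
            pairs.append((gif, caption_file.read_text(encoding="utf-8").strip()))
        else:
            missing.append(gif)
    return pairs, missing
```

Calling `pair_gifs_with_captions("./video-and-text-data")` on a folder laid out like the tree above should return three pairs and an empty `missing` list; any file that shows up in `missing` needs a caption before training.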

Then, load the previously trained VQGan-VAE and train NUWA with the `GifVideoDataset` and `NUWATrainer` classes.

```python
import torch
from nuwa_pytorch import NUWA, VQGanVAE
from nuwa_pytorch.train_nuwa import GifVideoDataset, NUWATrainer

# dataset

ds = GifVideoDataset(
    folder = './path/to/videos/',
    channels = 1
)

# autoencoder

vae = VQGanVAE(
    dim = 64,
    channels = 1,            # must match the dataset (and the checkpoint loaded below)
    image_size = 256,
    num_layers = 5,
    num_resnet_blocks = 2,
    vq_codebook_size = 512,
    attn_dropout = 0.1
)

vae.load_state_dict(torch.load('./path/to/trained/vae.pt'))

# NUWA transformer

nuwa = NUWA(
    vae = vae,
    dim = 512,
    text_enc_depth = 6,
    text_max_seq_len = 256,
    max_video_frames = 10,
    dec_depth = 12,
    dec_reversible = True,
    enc_reversible = True,
    attn_dropout = 0.05,
    ff_dropout = 0.05,
    sparse_3dna_kernel_size = (5, 3, 3),
    sparse_3dna_dilation = (1, 2, 4),
    shift_video_tokens = True
).cuda()

# trainer

trainer = NUWATrainer(
    nuwa = nuwa,                  # NUWA transformer
    dataset = ds,                 # video dataset defined above
    num_train_steps = 1000000,    # number of training steps
    lr = 3e-4,                    # learning rate
    wd = 0.01,                    # weight decay
    batch_size = 8,               # batch size
    grad_accum_every = 4,         # gradient accumulation
    max_grad_norm = 0.5,          # gradient clipping
    num_sampled_frames = 10,      # number of frames to sample
    results_folder = './results'  # folder to store checkpoints and samples
)

trainer.train()
```

This library depends on vector-quantize-pytorch, a vector quantization library that comes with a number of improvements (improved vqgan, orthogonal codebook regularization, etc). To use any of these improvements, you can configure the vector quantizer keyword parameters by prepending `vq_` to them when initializing `VQGanVAE`.
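The `vq_` convention amounts to routing every keyword argument with that prefix to the vector quantizer, with the prefix stripped. A minimal sketch of such routing follows; `split_prefixed_kwargs` is a hypothetical name for illustration, not nuwa-pytorch's internal helper.

```python
from typing import Any, Dict, Tuple


def split_prefixed_kwargs(prefix: str, kwargs: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Split kwargs into (prefixed keys with the prefix stripped, all other keys)."""
    matched: Dict[str, Any] = {}
    rest: Dict[str, Any] = {}
    for key, value in kwargs.items():
        if key.startswith(prefix):
            matched[key[len(prefix):]] = value
        else:
            rest[key] = value
    return matched, rest


# usage: arguments as they might be passed to VQGanVAE
vq_kwargs, other_kwargs = split_prefixed_kwargs(
    "vq_", {"dim": 64, "vq_use_cosine_sim": True, "vq_codebook_dim": 32}
)
# vq_kwargs    == {"use_cosine_sim": True, "codebook_dim": 32}
# other_kwargs == {"dim": 64}
```

The prefix keeps the quantizer's options from colliding with the autoencoder's own arguments, while still letting new quantizer features be switched on without changing the `VQGanVAE` signature.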

Ex. cosine similarity, as proposed in improved vqgan

```python
from nuwa_pytorch import VQGanVAE

vae = VQGanVAE(
    dim = 64,
    image_size = 256,
    num_layers = 4,
    vq_use_cosine_sim = True
    # VectorQuantize will be initialized with use_cosine_sim = True
    # https://github.com/lucidrains/vector-quantize-pytorch#cosine-similarity
).cuda()
```

```bibtex
@misc{wu2021nuwa,
    title         = {N{\"U}WA: Visual Synthesis Pre-training for Neural visUal World creAtion},
    author        = {Chenfei Wu and Jian Liang and Lei Ji and Fan Yang and Yuejian Fang and Daxin Jiang and Nan Duan},
    year          = {2021},
    eprint        = {2111.12417},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}

@misc{esser2021taming,
    title         = {Taming Transformers for High-Resolution Image Synthesis},
    author        = {Patrick Esser and Robin Rombach and Björn Ommer},
    year          = {2021},
    eprint        = {2012.09841},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}

@misc{iashin2021taming,
    title         = {Taming Visually Guided Sound Generation},
    author        = {Vladimir Iashin and Esa Rahtu},
    year          = {2021},
    eprint        = {2110.08791},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}

@misc{ding2021cogview,
    title         = {CogView: Mastering Text-to-Image Generation via Transformers},
    author        = {Ming Ding and Zhuoyi Yang and Wenyi Hong and Wendi Zheng and Chang Zhou and Da Yin and Junyang Lin and Xu Zou and Zhou Shao and Hongxia Yang and Jie Tang},
    year          = {2021},
    eprint        = {2105.13290},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}

@misc{kitaev2020reformer,
    title         = {Reformer: The Efficient Transformer},
    author        = {Nikita Kitaev and Łukasz Kaiser and Anselm Levskaya},
    year          = {2020},
    eprint        = {2001.04451},
    archivePrefix = {arXiv},
    primaryClass  = {cs.LG}
}

@misc{shazeer2020talkingheads,
    title         = {Talking-Heads Attention},
    author        = {Noam Shazeer and Zhenzhong Lan and Youlong Cheng and Nan Ding and Le Hou},
    year          = {2020},
    eprint        = {2003.02436},
    archivePrefix = {arXiv},
    primaryClass  = {cs.LG}
}

@misc{shazeer2020glu,
    title  = {GLU Variants Improve Transformer},
    author = {Noam Shazeer},
    year   = {2020},
    url    = {https://arxiv.org/abs/2002.05202}
}

@misc{su2021roformer,
    title         = {RoFormer: Enhanced Transformer with Rotary Position Embedding},
    author        = {Jianlin Su and Yu Lu and Shengfeng Pan and Bo Wen and Yunfeng Liu},
    year          = {2021},
    eprint        = {2104.09864},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CL}
}

@inproceedings{ho2021classifierfree,
    title     = {Classifier-Free Diffusion Guidance},
    author    = {Jonathan Ho and Tim Salimans},
    booktitle = {NeurIPS 2021 Workshop on Deep Generative Models and Downstream Applications},
    year      = {2021},
    url       = {https://openreview.net/forum?id=qw8AKxfYbI}
}

@misc{liu2021swin,
    title         = {Swin Transformer V2: Scaling Up Capacity and Resolution},
    author        = {Ze Liu and Han Hu and Yutong Lin and Zhuliang Yao and Zhenda Xie and Yixuan Wei and Jia Ning and Yue Cao and Zheng Zhang and Li Dong and Furu Wei and Baining Guo},
    year          = {2021},
    eprint        = {2111.09883},
    archivePrefix = {arXiv},
    primaryClass  = {cs.CV}
}

@misc{crowson2022,
    author = {Katherine Crowson},
    url    = {https://twitter.com/RiversHaveWings/status/1478093658716966912}
}
```

Attention is the rarest and purest form of generosity. - Simone Weil