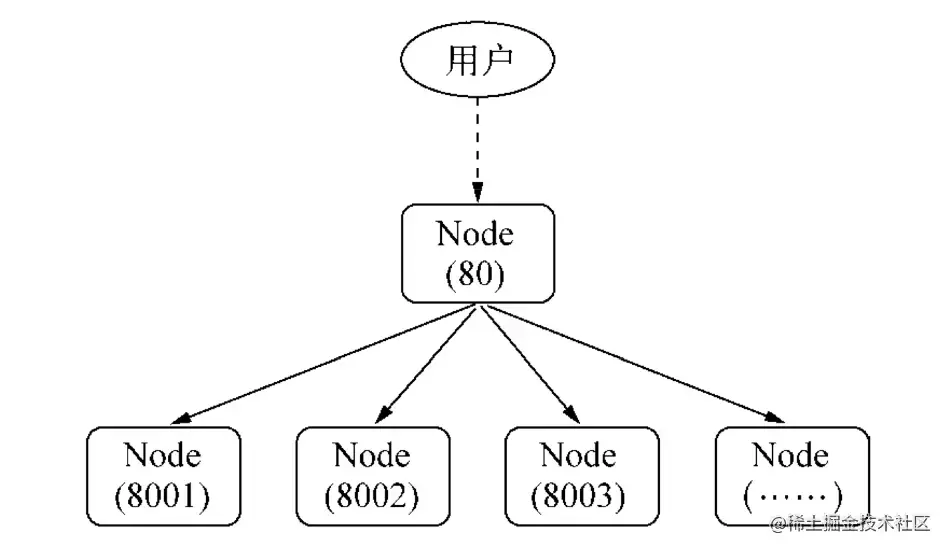

Anyone familiar with JavaScript knows that it is single-threaded. Node adopts a multi-process, single-threaded model: because of JavaScript's single-thread limitation, on multi-core servers we often need to start multiple processes to make full use of the hardware.

Node.js process clusters can be used to run multiple Node.js instances, which can distribute workload among their application threads. When process isolation is not required, use the worker_threads module instead, which allows multiple application threads to be run within a single Node.js instance.

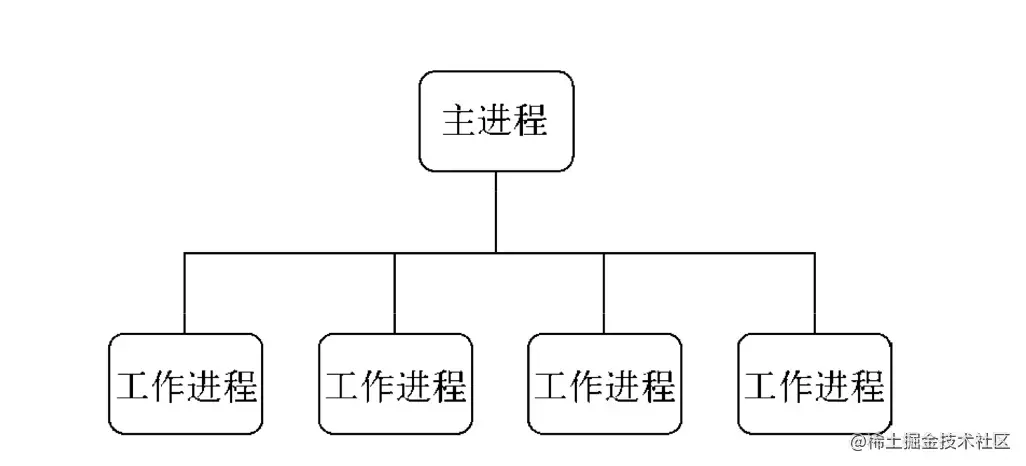

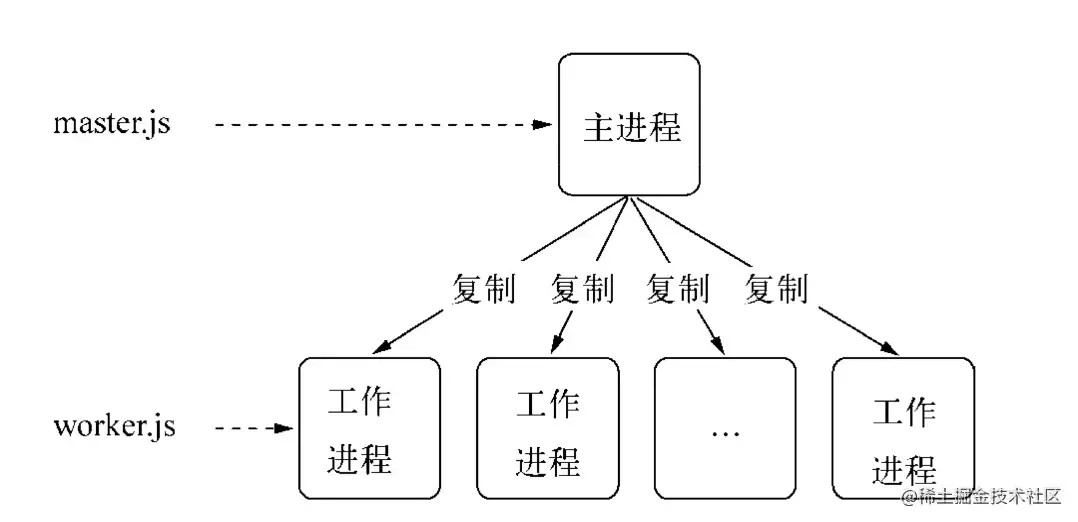

Node introduced the cluster module after version v0.8. It implements clustering by having one main process (master) manage multiple child processes (workers).

The cluster module makes it easy to create child processes that share server ports.

Under the hood, cluster is built on the child_process module. In addition to ordinary messages, it can also send underlying objects such as TCP and UDP handles. The cluster module is a combined application of the child_process module and the net module: when the cluster starts, a TCP server is started internally and the file descriptor of the TCP server socket is sent to the worker processes.

In a cluster module application, one main process can manage only one group of worker processes. This operating mode is less flexible than using the child_process module directly, but it is more stable:

const cluster = require('cluster')

Properties:

- `.isMaster` identifies the main process (Node < 16).
- `.isPrimary` identifies the main process (Node >= 16).
- `.isWorker` identifies a child (worker) process.
- `.worker` is a reference to the current worker object [in a worker process].
- `.workers` stores a hash of the active worker objects, keyed by the `id` field, which makes it easy to loop through all worker processes. It is only available in the main process: `cluster.workers[id] === worker` [in the main process].
- `.settings` is the read-only cluster configuration. After `.setupPrimary()` or `.fork()` is called, this object contains the settings, including default values; before that it is an empty object. It should not be changed or set manually.

cluster.settings configuration items in detail:

- `execArgv` <string[]> A list of string parameters passed to the Node.js executable. **Default:** `process.execArgv`.
- `exec` <string> File path to the worker process file. **Default:** `process.argv[1]`.
- `args` <string[]> String arguments passed to the worker process. **Default:** `process.argv.slice(2)`.
- `cwd` <string> The current working directory of the worker process. **Default:** `undefined` (inherited from the parent process).
- `serialization` <string> Specifies the serialization type used to send messages between processes. Possible values are `'json'` and `'advanced'`. **Default:** `false`.
- `silent` <boolean> Whether to send output to the parent process's stdio. **Default:** `false`.
- `stdio` <Array> Configures the stdio of the spawned process. Because the cluster module relies on IPC to run, this configuration must contain an `'ipc'` entry. When this option is provided, it overrides `silent`.
- `uid` <number> Sets the user ID of the process.
- `gid` <number> Sets the group ID of the process.
- `inspectPort` <number> | <Function> Sets the inspector port for the worker process. This can be a number or a function that takes no parameters and returns a number. By default, each worker process gets its own port, starting from the main process's `process.debugPort` and increasing.
- `windowsHide` <boolean> Hides the console window that would normally be created for the spawned process on Windows. **Default:** `false`.
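As a rough illustration of how these settings are populated, the sketch below calls the setup function once and then reads `cluster.settings` back. The `worker.js` file name and the `--mode demo` arguments are hypothetical placeholders; nothing is forked, so the file does not need to exist.

```javascript
const cluster = require('cluster');

// setupPrimary() exists on Node >= 16; older versions only have setupMaster().
const setup = (cluster.setupPrimary || cluster.setupMaster).bind(cluster);

// 'worker.js' and the '--mode demo' arguments are hypothetical placeholders.
setup({
  exec: 'worker.js',
  args: ['--mode', 'demo'],
  silent: true,
});

// The merged settings (including defaults such as execArgv) are now visible.
console.log(cluster.settings.exec, cluster.settings.args, cluster.settings.silent);
```

Any later `cluster.fork()` call would use these values; workers that are already running are not affected.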

Methods:

- `.fork([env])` spawns a new worker process [in the main process].
- `.setupPrimary([settings])` (Node >= 16) or `.setupMaster([settings])` (Node < 16) changes the default 'fork' behavior. After it is called, the settings appear in `cluster.settings`. Any change only affects future calls to `.fork()`, not worker processes that are already running. The default values above only apply to the first call [in the main process].
- `.disconnect([callback])` disconnects the workers; the callback is called once all worker processes have disconnected and their handles are closed [in the main process].

To make the cluster more stable and robust, the cluster module also exposes many events:

- 'message' event: emitted when the cluster main process receives a message from any worker process.
- 'exit' event: emitted by the cluster module when any worker process dies.

cluster.on('exit', (worker, code, signal) => {

console.log('worker %d died (%s). restarting...',

worker.process.pid, signal || code);

cluster.fork();

});

- 'listening' event: after a worker process calls listen() and the 'listening' event fires on its server, cluster in the main process also emits 'listening'.

cluster.on('listening', (worker, address) => {

console.log(

`A worker is now connected to ${address.address}:${address.port}`);

});

- 'fork' event: emitted by the cluster module when a new worker process is spawned.

cluster.on('fork', (worker) => {

timeouts[worker.id] = setTimeout(errorMsg, 2000);

});

- 'setup' event: emitted every time .setupPrimary() is called.
- 'disconnect' event: emitted after the IPC channel of a worker process has disconnected, which happens when the worker exits normally, is killed, or disconnects manually.

cluster.on('disconnect', (worker) => {

console.log(`The worker #${worker.id} has disconnected`);

});

The Worker object contains all public information and methods of a worker process. In the main process, it can be obtained through cluster.workers. In a worker process, it can be obtained through cluster.worker.

- `.id`: the worker's identifier. Each new worker process is given its own unique id, stored in `id`. While a worker process is alive, this is the key that indexes it in `cluster.workers`.
- `.process`: all worker processes are created using `child_process.fork()`, and the object returned by that function is stored as `.process`. In a worker process, the global `process` is stored.
- `.send(message[, sendHandle[, options]][, callback])`: sends a message to a worker process or to the main process, optionally with a handle. In the main process, this sends a message to a specific worker and is the same as `ChildProcess.send()`. In a worker process, this sends a message to the main process and is the same as `process.send()`.
- `.destroy()` / `.kill([signal])`: kills the worker process without waiting for a graceful disconnect. It behaves the same as `worker.process.kill()`. For backward compatibility, this method is aliased as `worker.destroy()`.
- `.disconnect([callback])`: in the main process, an internal message is sent to the worker process, causing it to call its own `.disconnect()`, which shuts down all servers, waits for the 'close' event on those servers, and then disconnects the IPC channel.
- `.isConnected()`: returns true if the worker process is connected to its main process through its IPC channel, false otherwise. A worker process is connected to its main process after it is created.
- `.isDead()`: returns true if the worker process has terminated (because it exited or received a signal), false otherwise.

The Worker object also exposes events of its own:

- 'message' event: like the cluster 'message' event, but specific to this worker.

cluster.workers[id].on('message', messageHandler);

- 'exit' event: emitted on the worker object when that particular worker process dies.

if (cluster.isPrimary) {

const worker = cluster.fork();

worker.on('exit', (code, signal) => {

if (signal) {

console.log(`worker was killed by signal: ${signal}`);

} else if (code !== 0) {

console.log(`worker exited with error code: ${code}`);

} else {

console.log('worker success!');

}

});

}

- 'listening' event: emitted on this worker object after the worker process calls listen().

cluster.fork().on('listening', (address) => {

// The worker process is listening

});

- 'disconnect' event: emitted after the IPC channel of this worker process has disconnected, which happens when the worker exits normally, is killed, or disconnects manually.

cluster.fork().on('disconnect', () => {

// Only triggered on this worker object

});

In Node, inter-process communication (IPC) is used between the main process and its child processes. Processes send messages with the .send() method (a.send() means sending a message to a) and collect them by listening for 'message' events. The cluster module implements this by building on EventEmitter. Below is a simple inter-process communication example from the official documentation.

In a child process: process.on('message'), process.send()

In the parent process: child.on('message'), child.send()

APIs used in the example:

- cluster.isMaster
- cluster.fork()
- cluster.workers
- cluster.workers[id].on('message', messageHandler);
- cluster.workers[id].send();
- process.on('message', messageHandler);
- process.send();

const cluster = require('cluster');

const http = require('http');

// Main process

if (cluster.isMaster) {

// Keep track of http requests

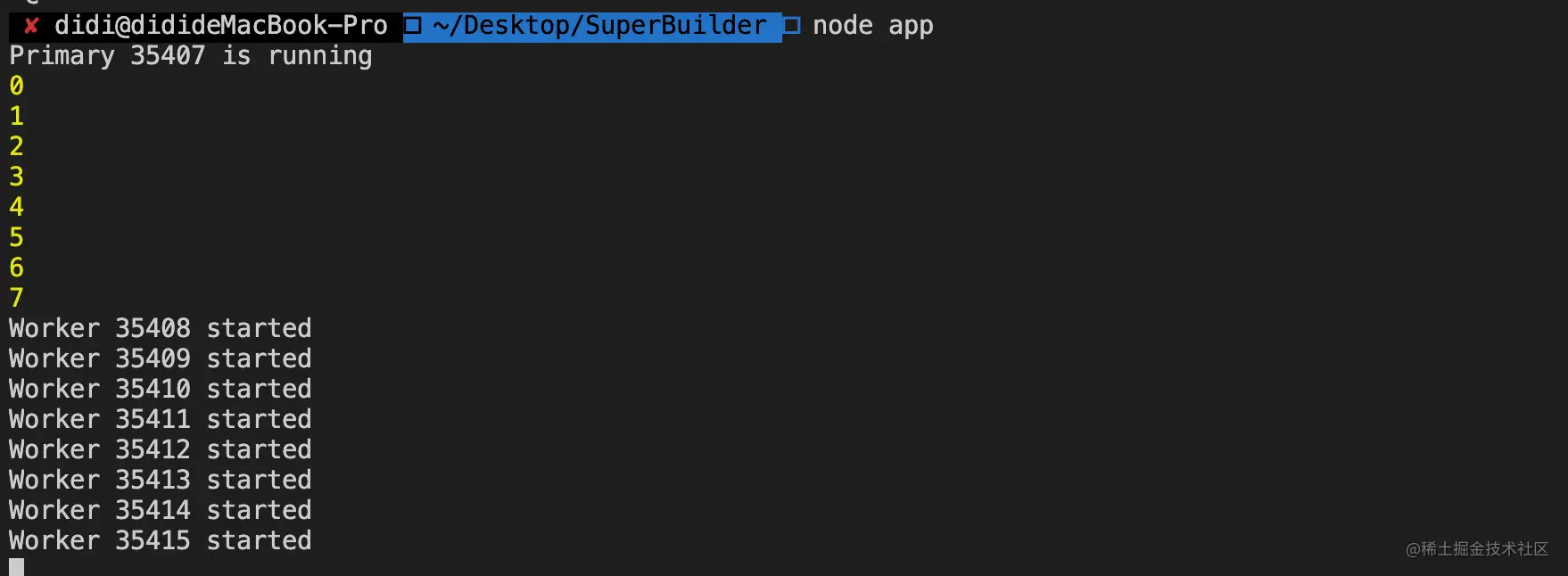

console.log(`Primary ${process.pid} is running`);

let numReqs = 0;

// Count requests

function messageHandler(msg) {

if (msg.cmd && msg.cmd === 'notifyRequest') {

numReqs += 1;

}

}

// Start workers and listen for messages containing notifyRequest

// Start multi-process (number of cpu cores)

// Spawn worker process.

const numCPUs = require('os').cpus().length;

for (let i = 0; i < numCPUs; i++) {

console.log(i)

cluster.fork();

}

// The main process communicates with its child processes

for (const id in cluster.workers) {

// Listen for messages from the child process

cluster.workers[id].on('message', messageHandler);

// Send a message to the child process

cluster.workers[id].send({

type: 'masterToWorker',

from: 'master',

data: {

number: Math.floor(Math.random() * 50)

}

});

}

cluster.on('exit', (worker, code, signal) => {

console.log(`worker ${worker.process.pid} died`);

});

} else {

// Child processes

// Worker processes can share any TCP connection.

// In this example, it is an HTTP server.

http.Server((req, res) => {

res.writeHead(200);

res.end('hello world\n');

// Notify the main process about the request

process.send({ cmd: 'notifyRequest' });

}).listen(8000);

// Listen for messages from the main process (registered once, not once per request)

process.on('message', function (message) {

// xxxxxxx

});

console.log(`Worker ${process.pid} started`);

}

Communication between Node.js processes only passes messages; it does not actually transfer objects.

Before sending, the send() method assembles the message into a handle and a message. The message is serialized with JSON.stringify, so even when a handle is passed, the entire object is not transferred. Everything that crosses the IPC channel is a string, which is restored to an object with JSON.parse on the other side.
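The effect of that serialization can be seen without any child process. The sketch below simply simulates the JSON round trip described above; `roundTrip` is a hypothetical helper, not part of Node's API.

```javascript
// Simulate what happens to a message sent over the default (JSON) IPC channel.
function roundTrip(message) {
  return JSON.parse(JSON.stringify(message));
}

const original = {
  id: 1,
  createdAt: new Date(0),   // a Date becomes an ISO string
  greet() { return 'hi'; }, // functions are dropped entirely
};
const received = roundTrip(original);

console.log(typeof received.createdAt); // string
console.log('greet' in received);       // false
console.log(received === original);     // false: a copy, not the same object
```

This is why the receiving side gets plain data rather than the sender's object, and why methods have to be re-attached after a message arrives.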

The code calls app.listen(port). After forking, why can multiple processes listen on the same port?

The reason is that the main process sends the handle of a server object that belongs to the main process to the child processes through send(). After restoring the handle, every child process ends up with the same server object. When a network request reaches the server, the processes serve it preemptively, so listening on the same port does not raise an exception.

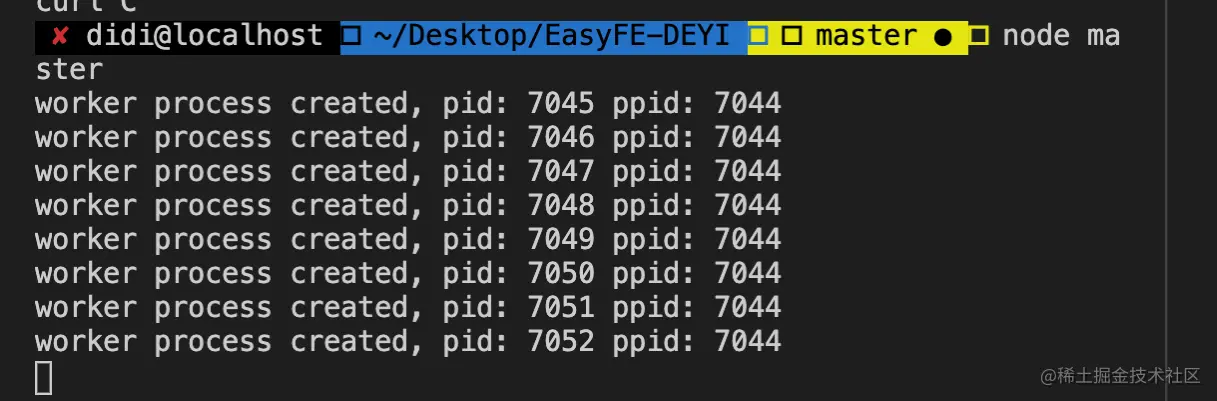

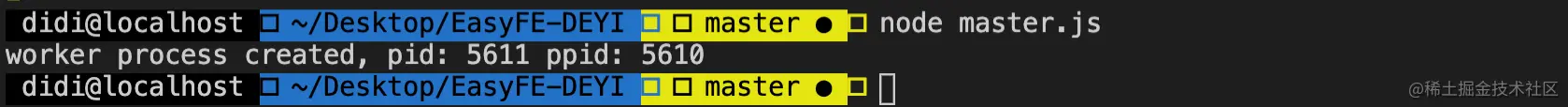

# master.js

const fork = require('child_process').fork;

const cpus = require('os').cpus();

for (let i=0; i<cpus.length; i++) {

const worker = fork('worker.js');

console.log('worker process created, pid: %s ppid: %s', worker.pid, process.pid);

}

# worker.js

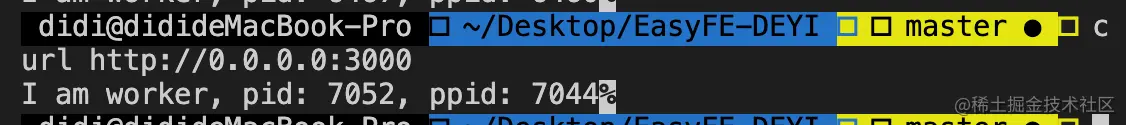

const http = require('http');

http.createServer((req, res) => {

res.end('I am worker, pid: ' + process.pid + ', ppid: ' + process.ppid);

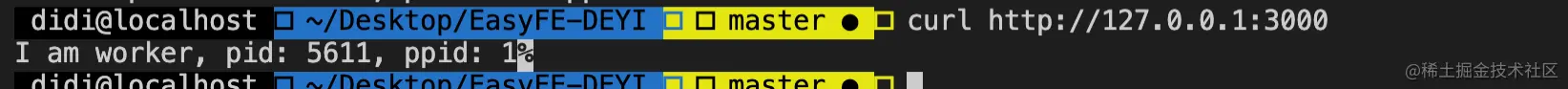

}).listen(3000);

In the code above, when node master.js is run from the console, only one worker can listen on port 3000; the rest throw an Error: listen EADDRINUSE :::3000 error.

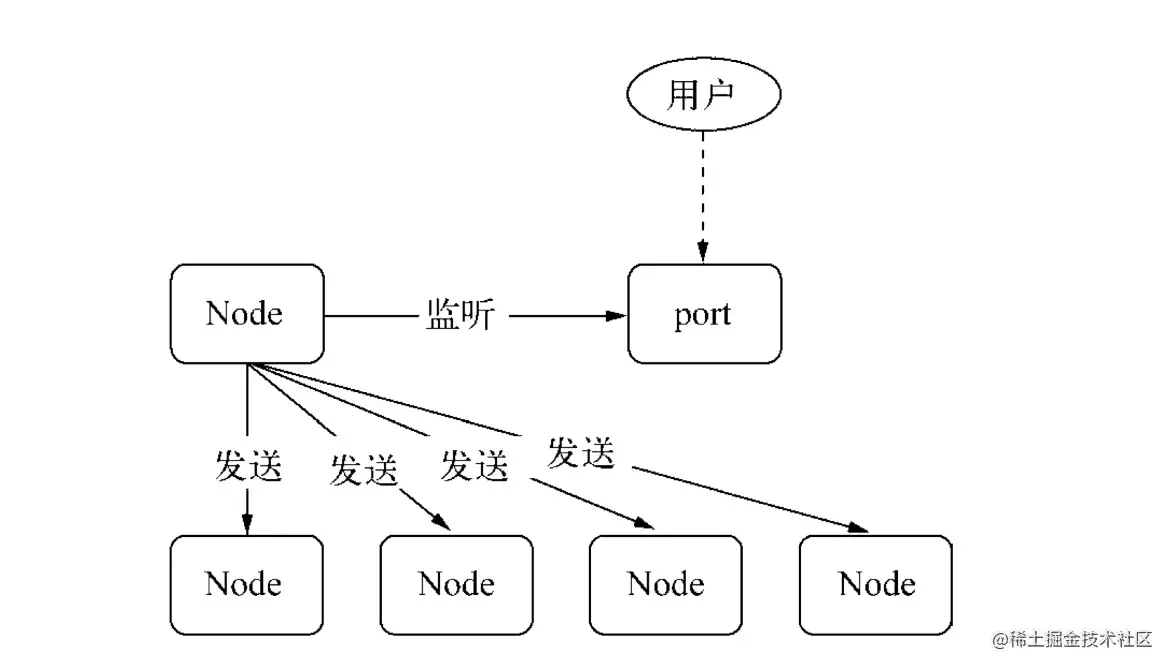

Since v0.5.9, handles can be sent between processes:

/** * http://nodejs.cn/api/child_process.html#child_process_subprocess_send_message_sendhandle_options_callback * message * sendHandle */

subprocess.send(message, sendHandle)

After the IPC channel is established between the parent and child processes, messages are sent through the send method of the subprocess object. The second parameter, sendHandle, is the handle, which can be a TCP socket, a TCP server, a UDP socket, and so on. To solve the port-in-use problem above, we pass the main process's socket to the child processes.

# master.js

const fork = require('child_process').fork;

const cpus = require('os').cpus();

const server = require('net').createServer();

server.listen(3000);

process.title = 'node-master'

for (let i=0; i<cpus.length; i++) {

const worker = fork('worker.js');

// Pass the handle

worker.send('server', server);

console.log('worker process created, pid: %s ppid: %s', worker.pid, process.pid);

}

# worker.js

let worker;

process.title = 'node-worker'

process.on('message', function (message, sendHandle) {

if (message === 'server') {

worker = sendHandle;

worker.on('connection', function (socket) {

console.log('I am worker, pid: ' + process.pid + ', ppid: ' + process.ppid)

});

}

});

To verify, run node master.js from the console.

If you understand cluster, you know that child processes are created through cluster.fork(). Linux natively provides a fork method, so why does Node implement its own cluster module instead of using the system's native method directly? There are two main reasons:

- Forked processes listening on the same port cause port-in-use errors.
- There is no load balancing between forked processes, which can easily lead to the thundering herd phenomenon.

For the first problem, the cluster module checks whether the current process is the master process. If it is, it listens on the port; if not, it is a forked worker process and does not listen on the port.

For the second problem, the cluster module has built-in load balancing. The master process listens on the port to receive requests and assigns them to worker processes through a scheduling algorithm. The default is round-robin (on every platform except Windows), and the algorithm can be changed through the environment variable NODE_CLUSTER_SCHED_POLICY.
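To make the idea of round-robin assignment concrete, here is a minimal sketch of the technique. It is a simplification for illustration, not Node's internal scheduler; `createRoundRobin` and the worker names are hypothetical.

```javascript
// Returns a function that hands out workers in turn, wrapping around at the end.
function createRoundRobin(workers) {
  let next = 0;
  return function pick() {
    const worker = workers[next];
    next = (next + 1) % workers.length;
    return worker;
  };
}

const pick = createRoundRobin(['worker-1', 'worker-2', 'worker-3']);
const assigned = [pick(), pick(), pick(), pick()];
console.log(assigned); // [ 'worker-1', 'worker-2', 'worker-3', 'worker-1' ]
```

Each incoming connection goes to the next worker in the list, so over time every worker receives roughly the same number of connections regardless of how quickly the operating system would have woken it up.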

When code throws an exception that is not caught, the process exits. Node.js provides the process.on('uncaughtException', handler) interface to catch it, but once a Worker process has hit an uncaught exception it is already in an uncertain state, so we should let the process exit gracefully:

The flow between the crashing Worker, the Master and the replacement Worker is:

- The Worker hits an uncaughtException and handles it.
- The Worker sends disconnect to the Master, and the Master forks a new Worker.
- The old Worker waits, then exits and dies.

When a process crashes because of an exception, or is killed by the system because of OOM, the situation is different from an uncaught exception, where we still have a chance to let the process keep running for a while. Here we can only let the current process exit directly, and the Master immediately forks a new Worker.
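The graceful-exit path for an uncaught exception could be sketched roughly as follows. This is an illustration under assumptions, not a production implementation: `createCrashHandler` is a hypothetical helper, the `disconnect` and `exit` functions are injected by the caller, and the 5-second deadline is an arbitrary choice.

```javascript
// Build an 'uncaughtException' handler for a worker process.
// disconnect and exit are injected so the sketch can run without a real cluster.
function createCrashHandler({ disconnect, exit, timeoutMs = 5000 }) {
  let shuttingDown = false;
  return function onUncaughtException(err) {
    if (shuttingDown) return false; // ignore follow-up errors during shutdown
    shuttingDown = true;
    console.error('uncaught exception, shutting down:', err.message);

    // Hard deadline in case open connections never finish.
    // unref() keeps this timer from holding the process open by itself.
    const timer = setTimeout(() => exit(1), timeoutMs);
    timer.unref();

    // Tell the main process this worker is going away so it can fork a replacement.
    disconnect();
    return true;
  };
}

// Inside a cluster worker this could be wired up as:
// process.on('uncaughtException', createCrashHandler({
//   disconnect: () => require('cluster').worker.disconnect(),
//   exit: process.exit,
// }));
```

Using `worker.disconnect()` here relies on the behavior described earlier: it shuts down the servers, waits for their 'close' events, and then disconnects the IPC channel, which is what lets in-flight requests finish.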

The child_process module provides the ability to spawn child processes, which is essentially the ability to execute commands. By default, pipes for stdin, stdout and stderr are established between the parent Node.js process and the spawned child process. These pipes have limited (and platform-specific) capacity. If the child process writes more than this limit to stdout and the output is not captured, the child process blocks until the pipe buffer can accept more data. This is the same behavior as a pipe in the shell. If the output will not be consumed, use the { stdio: 'ignore' } option.

const cp = require('child_process');

A child process created through this API has no necessary connection with the parent process.

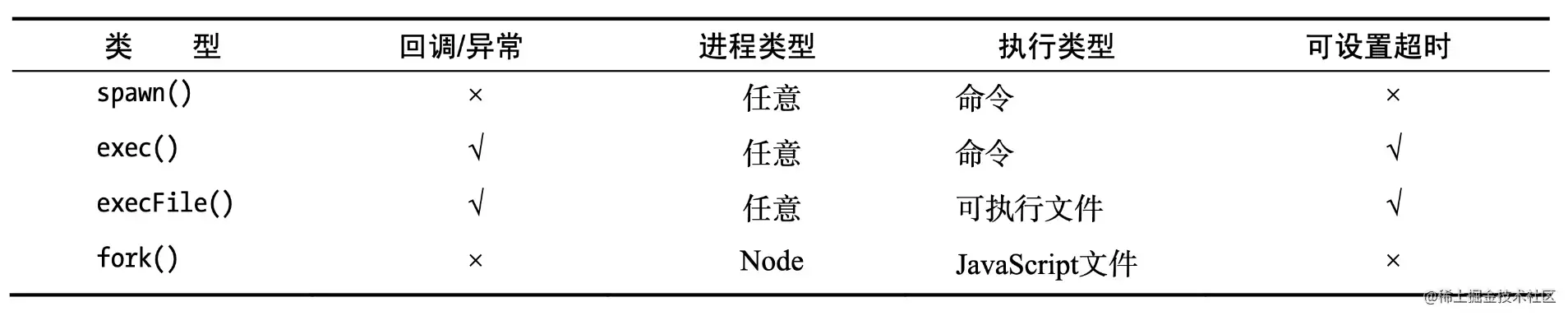

There are four asynchronous methods for creating child processes: fork, exec, execFile and spawn.

For Node programs:

- fork(modulePath, args): use when you want to run a Node program as an independent process, so that its computation and file descriptors are separate from the main Node process (copying out a child process).

For non-Node programs:

- spawn(command, args): use when the child process does a lot of I/O or produces a large amount of output.
- execFile(file, args[, callback]): use when you only need to execute an external program. It runs fast and is relatively safe when handling user input.
- exec(command, options): use when you want direct access to shell commands. Be careful with user input.

There are also three synchronous methods: execSync, execFileSync and spawnSync.

The other three asynchronous methods are extensions of spawn().

Remember that a spawned Node.js child process is independent of its parent process, except for the IPC channel established between the two. Each process has its own memory and its own V8 instance.
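The remark about user input can be illustrated with a small sketch. It uses execFileSync for brevity and runs the current Node binary as the "external program"; the `userInput` string is a made-up example of hostile input.

```javascript
const { execFileSync } = require('child_process');

// Pretend this string came from a user; the "; echo injected" part is the attack.
const userInput = 'hello; echo injected';

// execFile/execFileSync pass arguments as an array and do not start a shell,
// so the whole string reaches the program as one single argument.
const output = execFileSync(
  process.execPath, // the current node binary
  ['-e', 'console.log(process.argv[1])', userInput],
  { encoding: 'utf8' }
);

console.log(JSON.stringify(output.trim()));
```

Had the same string been interpolated into a command passed to exec, the shell would have treated `;` as a command separator and run the second command, which is why exec needs extra care with untrusted input.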

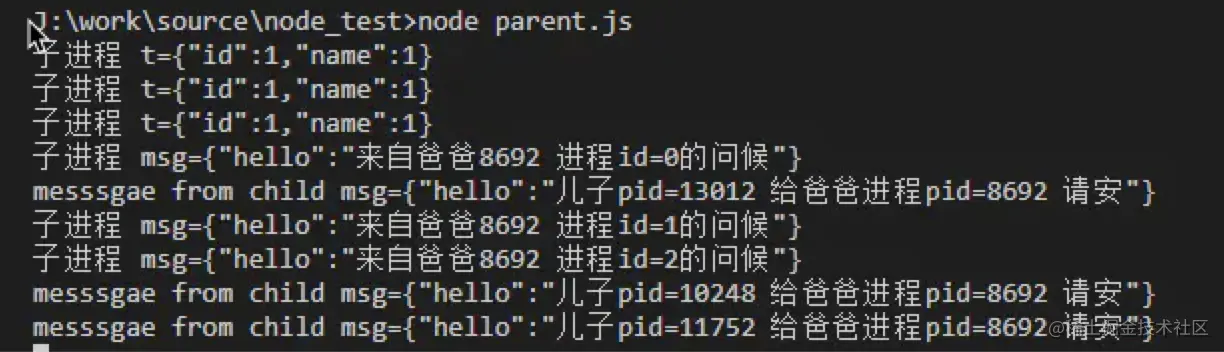

For example, create two files, child.js and parent.js, in a directory:

# child.js

const t = JSON.parse(process.argv[2]);

console.error(`child process t=${JSON.stringify(t)}`);

process.send({hello:`son pid=${process.pid} please give dad process pid=${process.ppid} hello`});

process.on('message', (msg)=>{

console.error(`child process msg=${JSON.stringify(msg)}`);

});

# parent.js

const {fork} = require('child_process');

for(let i = 0; i < 3; i++){

const p = fork('./child.js', [JSON.stringify({id:1,name:1})]);

p.on('message', (msg) => {

console.log(`message from child msg=${JSON.stringify(msg)}`);

});

p.send({hello:`Greetings from dad ${process.pid} process id=${i}`});

}

Start it with node parent.js, then check the processes with ps aux | grep child.js. This example starts three child processes; ideally, the number of processes equals the number of CPU cores, so that each process uses one core.

This is the classic Master-Worker mode (master-slave mode)

In fact, forking a process is expensive, and the purpose of copying a process is to make full use of CPU resources, so NodeJS uses an event-driven approach on a single thread to solve the problem of high concurrency.

Applicable scenarios: generally used for time-consuming tasks that are implemented in Node, such as downloading files.

fork can implement multi-process downloading: divide the file into multiple blocks, let each process download one part, and finally put the parts together.
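The "divide the file into blocks" step might look like the sketch below. `splitRanges` is a hypothetical helper; it only computes byte ranges and leaves the actual downloading and merging to the processes that receive them.

```javascript
// Split [0, totalSize) into at most `parts` contiguous byte ranges.
// Both ends are inclusive, in the style of HTTP Range headers.
function splitRanges(totalSize, parts) {
  const chunk = Math.ceil(totalSize / parts);
  const ranges = [];
  for (let start = 0; start < totalSize; start += chunk) {
    ranges.push({ start, end: Math.min(start + chunk, totalSize) - 1 });
  }
  return ranges;
}

console.log(splitRanges(10, 3));
```

Each range could then be handed to one forked process, for example as a command-line argument or through send(), and the parent would concatenate the parts in order once every child has reported back.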

const cp = require('child_process');

// The first parameter is the name or path of the executable file to be run. here is echo

cp.execFile('echo', ['hello', 'world'], (err, stdout, stderr) => {

if (err) { console.error(err); }

console.log('stdout: ', stdout);

console.log('stderr: ', stderr);

});

Applicable scenarios: better suited to low-overhead tasks where the result matters more, such as ls.

exec is mainly used to run a shell command. It still calls spawn internally, but it has a maximum buffer limit on the output.

const cp = require('child_process');

cp.exec(`cat ${__dirname}/messy.txt | sort | uniq`, (err, stdout, stderr) => {

console.log(stdout);

});

Applicable scenarios: better suited to low-overhead tasks where the result matters more, such as ls.

spawn, single task:

const cp = require('child_process');

const child = cp.spawn('echo', ['hello', 'world']);

child.on('error', console.error);

// The output is a stream; pipe it to the main process's stdout (the console)

child.stdout.pipe(process.stdout);

child.stderr.pipe(process.stderr);

Multiple tasks chained together:

const cp = require('child_process');

const path = require('path');

const cat = cp.spawn('cat', [path.resolve(__dirname, 'messy.txt')]);

const sort = cp.spawn('sort');

const uniq = cp.spawn('uniq');

// The output is a stream

cat.stdout.pipe(sort.stdin);

sort.stdout.pipe(uniq.stdin);

uniq.stdout.pipe(process.stdout);

Applicable scenarios: spawn is stream-based, so it suits long-running tasks, such as executing npm install and printing the install progress.

The 'close' event is emitted after the process has ended and the stdio streams of the child process have been closed. It is different from 'exit', because multiple processes can share the same stdio streams.

Parameters: code, signal.

Question: is code always present?

(Judging from the comments in the code, apparently not.) For example, if the child process is killed with kill, code is null and signal holds the name of the signal.

'exit' event parameters:

- code: if the child process exits on its own, this is the exit code; otherwise it is null.
- signal: if the child process is terminated by a signal, this is the signal that ended the process; otherwise it is null.

One of the two is always non-null.
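A handler can rely on that rule to tell the two cases apart. The helper below is a hypothetical sketch of such a check; `describeExit` is not part of the child_process API.

```javascript
// Turn the (code, signal) pair passed to an 'exit' listener into a readable message.
function describeExit(code, signal) {
  if (signal !== null) return `killed by signal ${signal}`;
  if (code === 0) return 'exited normally';
  return `exited with error code ${code}`;
}

console.log(describeExit(0, null));         // exited normally
console.log(describeExit(2, null));         // exited with error code 2
console.log(describeExit(null, 'SIGTERM')); // killed by signal SIGTERM

// With a real child process it could be used as:
// child.on('exit', (code, signal) => console.log(describeExit(code, signal)));
```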

Things to note:

When the 'exit' event is triggered, the stdio streams of the child process may still be open. (In what scenario?) In addition, Node.js installs handlers for the SIGINT and SIGTERM signals: when a Node.js process receives either of them, it does not exit immediately, but first performs some cleanup and then re-raises the signal. (Apparently this is also the point where JavaScript code can do its own cleanup, such as closing the database.)

SIGINT: interrupt, the program termination signal. It is usually issued when the user presses CTRL+C and tells the foreground process to terminate.

SIGTERM: terminate, the program end signal. This signal can be blocked and handled, and is usually used to ask a program to exit normally. The shell command kill sends this signal by default. If the process cannot be terminated with it, we fall back to SIGKILL (forced termination).
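Application-level cleanup on these signals could be wired up roughly like this. It is a sketch under assumptions: `installShutdownHandlers` is a hypothetical helper, the cleanup callback is assumed to be synchronous, and exiting with code 0 afterwards is a choice made here for simplicity.

```javascript
// Run a cleanup callback when the process is asked to stop, then exit explicitly.
// Registering a listener replaces the default behavior, so exit() must be called.
function installShutdownHandlers(proc, cleanup) {
  for (const signal of ['SIGINT', 'SIGTERM']) {
    proc.once(signal, () => {
      try {
        cleanup(signal); // e.g. close database connections (assumed synchronous)
      } finally {
        proc.exit(0);
      }
    });
  }
}

// In a real program: installShutdownHandlers(process, () => db.close());
// where db is whatever resource the application needs to release.
```

Passing the process object in as a parameter keeps the helper easy to exercise with a plain EventEmitter instead of real signals.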

The 'error' event is emitted when one of the following happens: the process could not be spawned, the process could not be killed, or sending a message to the child process failed. After 'error' fires, 'exit' may or may not fire. (Heartbreaking.)

The 'message' event is emitted when the child process uses process.send() to send a message.

Parameters:

- message: a JSON object or a primitive value.
- sendHandle: a net.Socket object or a net.Server object (anyone familiar with cluster will recognize this).

.connected: set to false after .disconnect() is called. It indicates whether messages can still be received from, or sent to, the child process.

.disconnect(): closes the IPC channel between the parent process and the child process. When this method is called, the 'disconnect' event fires. If the child process is a Node instance (created through child_process.fork()), process.disconnect() can also be called inside the child process to close the IPC channel.

To deal with the single-thread limitation, a multi-process approach is usually used to simulate multi-threading.

are occupied by the Node process.

the 7-thread

Node is the v8 engine. After the Node is started, an instance of v8 will be created. This instance is the

The execution of JavaScript is single-threaded, but the host environment of JavaScript, whether Node or the browser, is multi-threaded.

Why is JavaScript single-threaded?

This question starts with the browser. Consider DOM operations in the browser environment: if multiple threads operated on the same DOM, the result would be chaos. That means DOM operations can only be done on a single thread, to avoid DOM rendering conflicts. In the browser environment, the UI rendering thread and the JS execution engine are mutually exclusive: while one runs, the other is suspended. This is determined by the JS engine.

process.env.UV_THREADPOOL_SIZE = 64

Node provides the worker_threads module to offer real multi-threading capabilities.

const {

isMainThread,

parentPort,

workerData,

threadId,

MessageChannel,

MessagePort,

Worker

} = require('worker_threads');

function mainThread() {

for (let i = 0; i < 5; i++) {

const worker = new Worker(__filename, { workerData: i });

worker.on('exit', code => { console.log(`main: worker stopped with exit code ${code}`); });

worker.on('message', msg => {

console.log(`main: receive ${msg}`);

worker.postMessage(msg + 1);

});

}

}

function workerThread() {

console.log(`worker: workerData ${workerData}`);

parentPort.on('message', msg => {

console.log(`worker: receive ${msg}`);

});

parentPort.postMessage(workerData);

}

if (isMainThread) {

mainThread();

} else {

workerThread();

}

const assert = require('assert');

const {

Worker,

MessageChannel,

MessagePort,

isMainThread,

parentPort

} = require('worker_threads');

if (isMainThread) {

const worker = new Worker(__filename);

const subChannel = new MessageChannel();

worker.postMessage({ hereIsYourPort: subChannel.port1 }, [subChannel.port1]);

subChannel.port2.on('message', (value) => {

console.log('received:', value);

});

} else {

parentPort.once('message', (value) => {

assert(value.hereIsYourPort instanceof MessagePort);

value.hereIsYourPort.postMessage('the worker is sending this');

value.hereIsYourPort.close();

});

}

A process is the smallest unit of resource allocation, and a thread is the smallest unit of CPU scheduling.

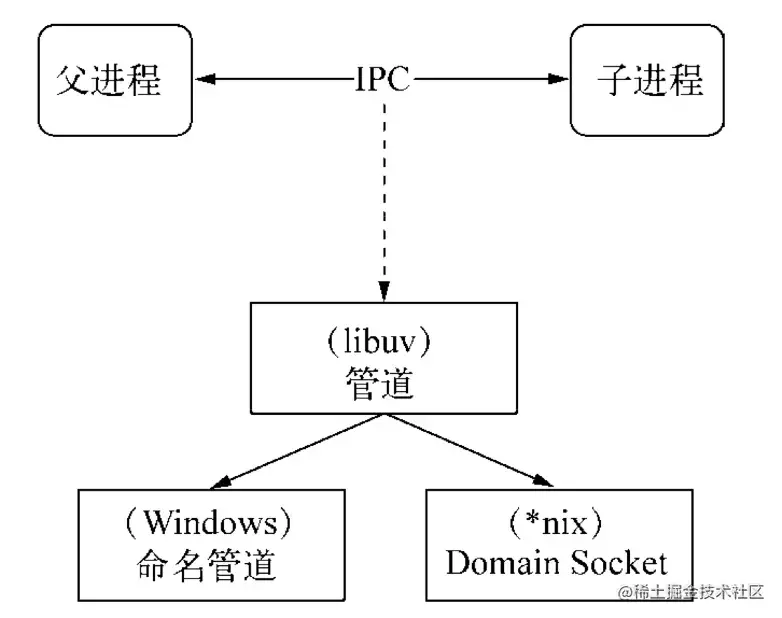

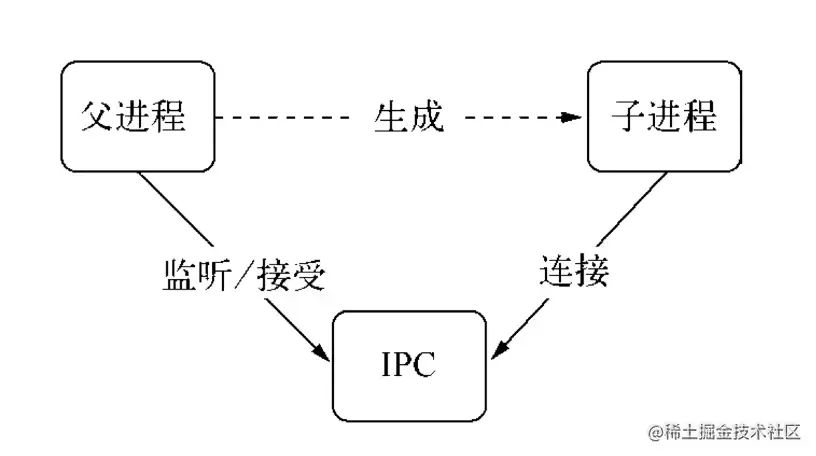

IPC stands for inter-process communication. Since each process has its own independent address space after creation, the purpose of IPC is to let processes share access to resources.

There are many ways to implement IPC: pipes, message queues, semaphores and domain sockets. Node.js implements it through pipes.

In practice, before creating the child process, the parent process first creates the IPC channel and listens on it, and then creates the child process. It tells the child process the file descriptor of the IPC channel through an environment variable (NODE_CHANNEL_FD). When the child process starts, it connects to the IPC channel using that file descriptor, which establishes the connection with the parent process.
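From inside a script, the practical way to tell whether such a channel was set up is to check for `process.send`, which only exists when the process was started with an IPC channel. The helpers below are a hypothetical sketch; `hasIpcChannel` and `reportToParent` are illustrative names.

```javascript
// True when this process was started with an IPC channel that is still connected.
function hasIpcChannel(proc = process) {
  return typeof proc.send === 'function' && proc.connected === true;
}

// Send a message to the parent if possible; report whether it was sent.
function reportToParent(message, proc = process) {
  if (!hasIpcChannel(proc)) return false;
  proc.send(message);
  return true;
}

console.log('IPC channel available:', hasIpcChannel());
```

A script written this way can run both standalone with node and as a forked child, without throwing when there is no parent to talk to.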

A handle is a reference that can be used to identify a resource. It contains the file resource descriptor pointing to the object.

Generally, when we want multiple processes to listen on one port, we may consider having the main process act as a proxy:

However, with this proxy approach every request uses two file descriptors, one for receiving the request and one for forwarding it, and the system's file descriptors are limited. This hurts the system's scalability.

So why use handles? In real applications, IPC communication may involve more complex data processing. A handle can be passed as the optional second parameter of the send() method, which means the resource identifier is transferred directly over IPC. This avoids the extra file descriptors consumed by the proxy forwarding described above.

The following are the handle types that support sending:

Orphan process: after a parent process creates child processes, the parent process exits while one or more of its child processes are still running. These child processes are adopted by the system's init process, their ppid becomes 1, and they are orphan processes. The following code example illustrates this.

# worker.js

const http = require('http');

const server = http.createServer((req, res) => {

res.end('I am worker, pid: ' + process.pid + ', ppid: ' + process.ppid);

//Record the current worker process pid and parent process ppid

});

let worker;

process.on('message', function (message, sendHandle) {

if (message === 'server') {

worker = sendHandle;

worker.on('connection', function(socket) {

server.emit('connection', socket);

});

}

});

# master.js

const fork = require('child_process').fork;

const server = require('net').createServer();

server.listen(3000);

const worker = fork('worker.js');

worker.send('server', server);

console.log('worker process created, pid: %s ppid: %s', worker.pid, process.pid);

process.exit(0);

// After creating the child process, the main process exits, so the worker process becomes an orphan process.

Test from the console to print the current worker process pid and parent process ppid.

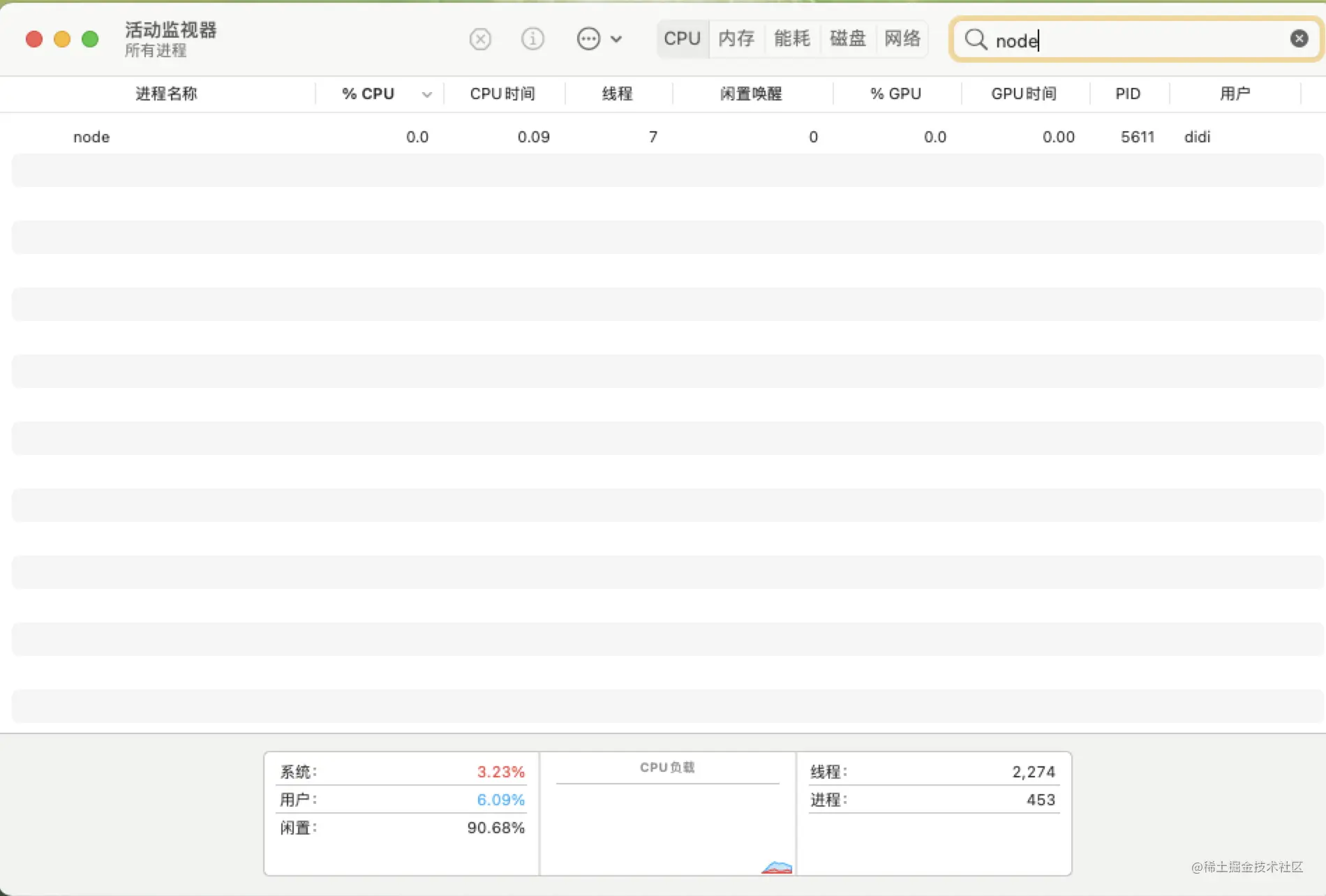

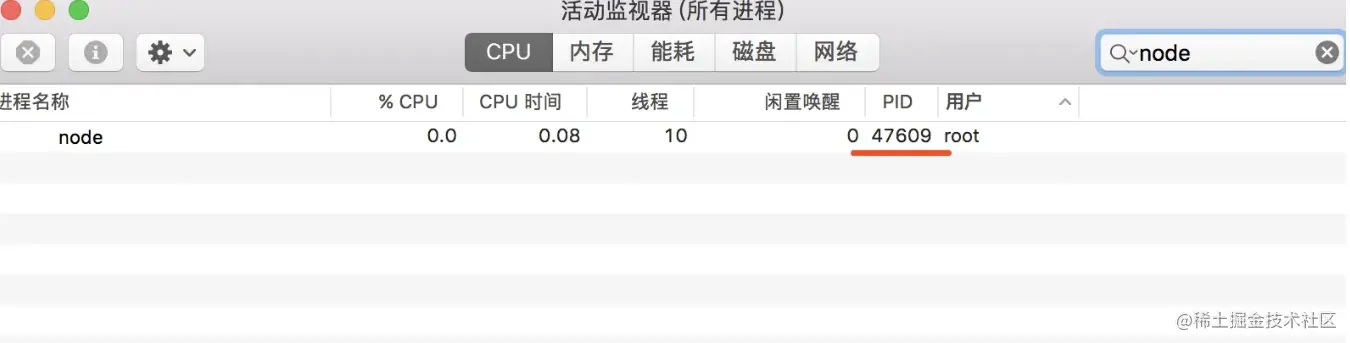

Since the parent process is exited in master.js, the activity monitor only shows the worker process.

Verify again by calling the endpoint from the console: the ppid of worker process 5611 is 1 (the init process), so it has become an orphan process.

A daemon process runs in the background and is not affected by the terminal. What does that mean?

Anyone who develops with Node.js will know this: when we open a terminal and run node app.js to start a service, the terminal stays occupied. If we close the terminal, the service stops. This is foreground mode.

With a daemon process, after running node app.js to start a service in a terminal, we can keep doing other things in that terminal without the two affecting each other.

1. Create a child process.
2. Create a new session in the child process (call the system function setsid).
3. Change the working directory of the child process (for example "/" or "/usr/").
4. Terminate the parent process.

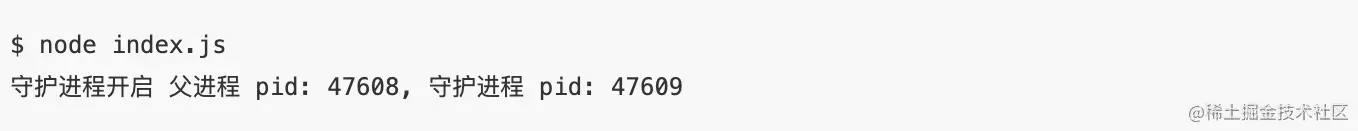

Setting options.detached to true lets the child process keep running after the parent process exits (the system layer calls setsid). This is the second step.

index.js

const spawn = require('child_process').spawn;

function startDaemon() {

const daemon = spawn('node', ['daemon.js'], {

cwd: '/usr',

detached : true,

stdio: 'ignore',

});

console.log('Daemon process starts parent process pid: %s, daemon process pid: %s', process.pid, daemon.pid);

daemon.unref();

}

startDaemon();

The logic in daemon.js starts a timer that fires every 10 seconds so that the process does not exit, and writes a log into the current working directory of the child process.

/usr/daemon.js

const fs = require('fs');

const { Console } = require('console');

// custom simple logger

const logger = new Console(fs.createWriteStream('./stdout.log'), fs.createWriteStream('./stderr.log'));

setInterval(function() {

logger.log('daemon pid: ', process.pid, ', ppid: ', process.ppid);

}, 1000 * 10);

Source code of this Node.js daemon implementation:

https://github.com/Q-Angelo/project-training/tree/master/nodejs/simple-daemon

In actual work we are no strangers to daemon processes, such as PM2 and Egg-Cluster. The above is only a simple demo to explain the idea. In practice, the robustness requirements for a daemon are very high: monitoring process exceptions, managing and scheduling worker processes, restarting a process after it hangs, and so on. These still need continued thought.

5.What is the

The current working directory of a process can be obtained with process.cwd(). By default it is the directory the process was started from. A child process inherits the directory of its parent process, and the directory can be reset with process.chdir(). For example, a child process created with spawn can specify the cwd option to set its working directory.

What is it used for?

For example, when reading a file through fs with a relative path, the file is looked up relative to the current process's directory. In some cases, third-party modules in the program also search relative to the directory the current process was started from.

// Example

process.chdir('/users/may/documens/tests/') // Set the current process directory

console.log(process.cwd()); // Get the current process directory
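The effect on relative paths can be checked with a short, self-contained sketch. It switches to the system temp directory, which is assumed to exist and be accessible, and `data.txt` is a hypothetical file name that is never actually opened.

```javascript
const os = require('os');
const path = require('path');

const before = process.cwd();

// Switch to the system temp directory.
process.chdir(os.tmpdir());
const tmpCwd = process.cwd();

// A relative path is resolved against the current working directory.
const resolved = path.resolve('data.txt');
console.log(path.dirname(resolved) === tmpCwd); // true

// Restore the original directory so the rest of the program is unaffected.
process.chdir(before);
```

Because the lookup depends on where the process happens to be running, code that must find files next to the script usually builds paths from __dirname instead of relying on the working directory.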