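This is an end-to-end project covering data ingestion, creation of instruction/answer pairs, fine-tuning, and evaluation of the results.

Start by installing the dependencies: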

pip install -r requirements.txt

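To find data for fine-tuning, we scraped arXiv for papers about LLMs published after the Llama 3 release date.

The Selenium scraping code is in llama3_8b_finetuning/arxiv_scraping/Arxiv_pdfs_download.py (a WebDriver must be downloaded before running this script).

The scraper takes the papers listed on the first arXiv page and downloads them into the llama3_8b_finetuning/data/pdfs folder.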
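As a rough illustration of the "published after the Llama 3 release" criterion, here is a minimal sketch of a date filter. It assumes each scraped paper is represented as a dict with hypothetical `title` and `published` fields, and it takes 18 April 2024 as the Llama 3 release date; the project's Selenium script may select papers differently.

```python
from datetime import date

# Assumption: Llama 3 was publicly released on 18 April 2024.
LLAMA3_RELEASE = date(2024, 4, 18)


def published_after_release(papers, cutoff=LLAMA3_RELEASE):
    """Keep only papers whose ISO-formatted 'published' date is strictly after the cutoff."""
    kept = []
    for paper in papers:
        published = date.fromisoformat(paper["published"])
        if published > cutoff:
            kept.append(paper)
    return kept


# Hypothetical records, for illustration only.
papers = [
    {"title": "Paper A", "published": "2024-03-30"},
    {"title": "Paper B", "published": "2024-05-02"},
]
recent = published_after_release(papers)
print([paper["title"] for paper in recent])
```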

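The code for creating the instruction dataset is in /llama3_8b_finetuning/creating_instruction_dataset.py.

The text of the downloaded papers is parsed with LangChain's PyPDFLoader. The text is then sent to the Llama 3 70B model through Groq, which was chosen for its speed and low cost. Note that the Llama 3 license only allows the model to be used to train or fine-tune Llama LLMs. We therefore cannot use Llama 3 to create instruction/answer pairs for other models, not even for open-source models or non-commercial use.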
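A parsed paper may be longer than is practical to send in a single request, so one common approach is to cut the text into overlapping pieces first. The sketch below shows that idea with plain character windows; `chunk_text`, `chunk_size`, and `overlap` are illustrative names and values, not taken from the project.

```python
def chunk_text(text, chunk_size=4000, overlap=200):
    """Split text into windows of chunk_size characters that overlap by `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")

    chunks = []
    step = chunk_size - overlap
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window already reaches the end of the text.
        if start + chunk_size >= len(text):
            break
        start += step
    return chunks


# Tiny example so the windows are easy to inspect.
pieces = chunk_text("abcdefghij", chunk_size=4, overlap=1)
print(pieces)
```

The overlap keeps a little shared context between neighbouring pieces, which can help when a sentence is cut at a window boundary.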

The prompt used to create the pairs is in the utils file and is also shown below:

'''

You are a highly intelligent and knowledgeable assistant tasked with generating triples of instruction, input, and output from academic papers related to Large Language Models (LLMs). Each triple should consist of:

Instruction: A clear and concise task description that can be performed by an LLM.

Input: A sample input that corresponds to the instruction.

Output: The expected result or answer when the LLM processes the input according to the instruction.

Below are some example triples:

Example 1:

Instruction: Summarize the following abstract.

Input: "In this paper, we present a new approach to training large language models by incorporating a multi-task learning framework. Our method improves the performance on a variety of downstream tasks."

Output: "A new multi-task learning framework improves the performance of large language models on various tasks."

Example 2:

Instruction: Provide a brief explanation of the benefits of using multi-task learning for large language models.

Input: "Multi-task learning allows a model to learn from multiple related tasks simultaneously, which can lead to better generalization and performance improvements across all tasks. This approach leverages shared representations and can reduce overfitting."

Output: "Multi-task learning helps large language models generalize better and improve performance by learning from multiple related tasks simultaneously."

Now, generate similar triples based on the provided text from academic papers related to LLMs:

Source Text

(Provide the text from the academic papers here)

Generated Triples

Triple 1:

Instruction:

Input:

Output:

Triple 2:

Instruction:

Input:

Output:

Triple 3:

Instruction:

Input:

Output:

'''

Finally, the generated instructions are saved to llama3_8b_finetuning/data/arxiv_instruction_dataset.json.
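The prompt asks the model to reply in a "Triple N: / Instruction: / Input: / Output:" layout, so the reply has to be parsed before it can be stored as JSON. The sketch below is one way this could be done with a regular expression. `parse_triples` and the sample reply are hypothetical, and the project's own parsing code may differ; the keys `Instruction`, `Input`, and `Output` match the ones the training code reads.

```python
import json
import re

# One triple: three labelled fields, ending at the next "Triple N:" header or at the end of the text.
TRIPLE_PATTERN = re.compile(
    r"Instruction:\s*(?P<Instruction>.*?)\s*"
    r"Input:\s*(?P<Input>.*?)\s*"
    r"Output:\s*(?P<Output>.*?)\s*(?=Triple \d+:|\Z)",
    re.DOTALL,
)


def parse_triples(reply):
    """Extract complete instruction/input/output triples from a model reply."""
    triples = []
    for match in TRIPLE_PATTERN.finditer(reply):
        triple = {key: value.strip().strip('"') for key, value in match.groupdict().items()}
        # Skip triples the model left partially empty.
        if all(triple.values()):
            triples.append(triple)
    return triples


# Hypothetical model reply, for illustration only.
sample_reply = """Triple 1:
Instruction: Summarize the following abstract.
Input: "We study LoRA adapters."
Output: "The paper studies LoRA adapters."

Triple 2:
Instruction: Name the method discussed.
Input: "We study LoRA adapters."
Output: "LoRA"
"""

triples = parse_triples(sample_reply)
print(json.dumps(triples, indent=2))
```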

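The code for the fine-tuning step is in /llama3_8b_finetuning/model_trainer.py.

First, we load the instruction/answer pairs, split them into training and test datasets, and format them into the prompt structure used for training.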

from datasets import DatasetDict, load_dataset


class DatasetHandler:
    def __init__(self, data_path):
        self.data_path = data_path

    def load_and_split_dataset(self):
        # A single JSON file loads as one 'train' split; hold out 20% of it for testing.
        dataset = load_dataset("json", data_files=self.data_path)
        train_test_split = dataset['train'].train_test_split(test_size=0.2)
        dataset_dict = DatasetDict({
            'train': train_test_split['train'],
            'test': train_test_split['test']
        })
        return dataset_dict['train'], dataset_dict['test']

    @staticmethod
    def format_instruction(sample):
        # Alpaca-style prompt built from the Instruction/Input/Output fields.
        return f"""
Below is an instruction that describes a task, paired with an input that provides further context.
Write a response that appropriately completes the request.

### Instruction:
{sample['Instruction']}

### Input:
{sample['Input']}

### Response:
{sample['Output']}
"""

Next, we define the class that loads the model and tokenizer from Hugging Face.

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig


class ModelManager:
    def __init__(self, model_id, use_flash_attention2, hf_token):
        self.model_id = model_id
        self.use_flash_attention2 = use_flash_attention2
        self.hf_token = hf_token
        # 4-bit NF4 quantization with double quantization (QLoRA-style setup).
        self.bnb_config = BitsAndBytesConfig(
            load_in_4bit=True,
            bnb_4bit_use_double_quant=True,
            bnb_4bit_quant_type="nf4",
            bnb_4bit_compute_dtype=torch.bfloat16 if use_flash_attention2 else torch.float16
        )

    def load_model_and_tokenizer(self):
        model = AutoModelForCausalLM.from_pretrained(
            self.model_id,
            quantization_config=self.bnb_config,
            use_cache=False,  # the KV cache is not needed for training
            device_map="auto",
            token=self.hf_token,
            attn_implementation="flash_attention_2" if self.use_flash_attention2 else "sdpa"
        )
        model.config.pretraining_tp = 1
        tokenizer = AutoTokenizer.from_pretrained(
            self.model_id,
            token=self.hf_token
        )
        # The tokenizer ships without a pad token, so reuse EOS for padding.
        tokenizer.pad_token = tokenizer.eos_token
        tokenizer.padding_side = "right"
        return model, tokenizer

We define the Trainer class and the training configuration:

from peft import get_peft_model
from transformers import TrainingArguments
from trl import SFTTrainer


class Trainer:
    def __init__(self, model, tokenizer, train_dataset, peft_config, use_flash_attention2, output_dir):
        self.model = model
        self.tokenizer = tokenizer
        self.train_dataset = train_dataset
        self.peft_config = peft_config
        # Effective batch size per device: 4 sequences x 4 accumulation steps = 16 per optimizer step.
        self.args = TrainingArguments(
            output_dir=output_dir,
            num_train_epochs=3,
            per_device_train_batch_size=4,
            gradient_accumulation_steps=4,
            gradient_checkpointing=True,
            optim="paged_adamw_8bit",
            logging_steps=10,
            save_strategy="epoch",
            learning_rate=2e-4,
            bf16=use_flash_attention2,
            fp16=not use_flash_attention2,
            tf32=use_flash_attention2,
            max_grad_norm=0.3,
            warmup_steps=5,
            lr_scheduler_type="linear",
            disable_tqdm=False,
            report_to="none"
        )
        # Wrap the base model with the PEFT (LoRA) adapters.
        self.model = get_peft_model(self.model, self.peft_config)

    def train_model(self, format_instruction_func):
        trainer = SFTTrainer(
            model=self.model,
            train_dataset=self.train_dataset,
            peft_config=self.peft_config,
            max_seq_length=2048,
            tokenizer=self.tokenizer,
            packing=True,
            formatting_func=format_instruction_func,
            args=self.args,
        )
        trainer.train()
        return trainer

Finally, we instantiate the classes and start training.

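Note that the Llama models are gated: to download them from Hugging Face you need an access token, which becomes usable once you have accepted the terms of use and Meta has approved access (approval is almost instant).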
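Because the training script reads the token from the `HF_TOKEN` environment variable, a small guard can turn a missing token into a clear error before any download starts. The helper below is only a sketch; `require_hf_token` is a hypothetical name and is not part of the project or of any Hugging Face library.

```python
import os


def require_hf_token(env=None):
    """Return the Hugging Face token from the environment, or fail with a clear message."""
    env = os.environ if env is None else env
    token = env.get("HF_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "HF_TOKEN is not set. Accept the model's terms of use on Hugging Face, "
            "create an access token, and export it as HF_TOKEN."
        )
    return token


# Illustration with an explicit mapping instead of the real environment.
print(require_hf_token({"HF_TOKEN": "hf_example_token"}))
```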

import os

from peft import LoraConfig

import utils  # project module that holds the paths and the prompt

dataset_handler = DatasetHandler(data_path=utils.Variables.INSTRUCTION_DATASET_JSON_PATH)
train_dataset, test_dataset = dataset_handler.load_and_split_dataset()

# Blank out the expected outputs so the test prompts end at "### Response:".
new_test_dataset = []
for dict_ in test_dataset:
    dict_['Output'] = ''
    new_test_dataset.append(dict_)

model_manager = ModelManager(
    model_id="meta-llama/Meta-Llama-3-8B",
    use_flash_attention2=True,
    hf_token=os.environ["HF_TOKEN"]
)
model, tokenizer = model_manager.load_model_and_tokenizer()
# save_model_and_tokenizer and prepare_for_training are ModelManager methods
# that are not shown in the excerpt above.
model_manager.save_model_and_tokenizer(model, tokenizer, save_directory=utils.Variables.BASE_MODEL_PATH)
model = model_manager.prepare_for_training(model)

# LoRA adapters on all attention and MLP projection layers.
peft_config = LoraConfig(
    lora_alpha=16,
    lora_dropout=0.1,
    r=64,
    bias="none",
    task_type="CAUSAL_LM",
    target_modules=[
        "q_proj", "k_proj", "v_proj", "o_proj", "gate_proj", "up_proj", "down_proj",
    ]
)

trainer = Trainer(
    model=model,
    tokenizer=tokenizer,
    train_dataset=train_dataset,
    peft_config=peft_config,
    use_flash_attention2=True,
    output_dir=utils.Variables.FINE_TUNED_MODEL_PATH
)
trained_model = trainer.train_model(format_instruction_func=dataset_handler.format_instruction)
trained_model.save_model()

To evaluate the fine-tuning results, we used the Recall-Oriented Understudy for Gisting Evaluation (ROUGE) score, which measures the overlap between two texts to quantify how similar they are.

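Specifically, we use the rouge_scorer module of the rouge_score library to compute ROUGE-1, ROUGE-2, and ROUGE-L, which measure the overlap of unigrams, of bigrams, and of the longest common subsequence between the texts, respectively.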
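To make the metric concrete, here is a minimal sketch that computes a ROUGE-1 style F-measure by hand from unigram counts. It uses plain whitespace tokenization without stemming, so its numbers will not exactly match those of rouge_score; `rouge1_f` is an illustrative name, not part of the library.

```python
from collections import Counter


def rouge1_f(reference, candidate):
    """F-measure of unigram overlap between a reference and a candidate text."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter intersection keeps, for each word, the smaller of the two counts.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


reference = "multi-task learning improves generalization"
candidate = "multi-task learning improves performance"
score = rouge1_f(reference, candidate)
print(round(score, 2))
```

Three of the four words match in each direction, so precision and recall are both 0.75 here. The project's own scoring function, which relies on rouge_score, follows.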

import pandas as pd
from rouge_score import rouge_scorer


def calculate_rouge_scores(generated_answers, ground_truth):
    scorer = rouge_scorer.RougeScorer(['rouge1', 'rouge2', 'rougeL'], use_stemmer=True)
    total_rouge1, total_rouge2, total_rougeL = 0, 0, 0
    for gen, ref in zip(generated_answers, ground_truth):
        # RougeScorer.score expects (target, prediction), i.e. the reference first.
        # The F-measure is symmetric, so the averages below do not depend on the order.
        scores = scorer.score(ref, gen)
        total_rouge1 += scores['rouge1'].fmeasure
        total_rouge2 += scores['rouge2'].fmeasure
        total_rougeL += scores['rougeL'].fmeasure
    average_rouge1 = total_rouge1 / len(generated_answers)
    average_rouge2 = total_rouge2 / len(generated_answers)
    average_rougeL = total_rougeL / len(generated_answers)
    return {'average_rouge1': average_rouge1,
            'average_rouge2': average_rouge2,
            'average_rougeL': average_rougeL}

To run this calculation, we take the instructions from the test dataset, pass them through both the base model and the fine-tuned model, and compare the outputs with the expected results from the instruction/answer dataset.
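The comparison described above can be sketched as a small loop. Everything in this example is illustrative: `evaluate`, the `generate_fn` callable that stands in for a model, and the exact-match scorer are assumptions chosen so that the example runs without a GPU. In the project, the scorer would be calculate_rouge_scores and the generator a loaded model.

```python
def evaluate(test_samples, generate_fn, score_fn):
    """Generate an answer for every test sample and score it against the reference."""
    generated, references = [], []
    for sample in test_samples:
        # Hide the expected output from the model, keeping the other fields.
        prompt_sample = dict(sample, Output="")
        generated.append(generate_fn(prompt_sample))
        references.append(sample["Output"])
    return score_fn(generated, references)


def exact_match(generated, references):
    """Toy scorer: fraction of answers identical to the reference."""
    hits = sum(g.strip() == r.strip() for g, r in zip(generated, references))
    return hits / len(references)


def echo_model(sample):
    """Hypothetical stand-in for a model: upper-cases the input text."""
    return sample["Input"].upper()


# Hypothetical test samples, for illustration only.
test_samples = [
    {"Instruction": "Uppercase the input.", "Input": "lora", "Output": "LORA"},
    {"Instruction": "Uppercase the input.", "Input": "rouge", "Output": "Rouge"},
]
accuracy = evaluate(test_samples, echo_model, exact_match)
print(accuracy)
```

Running the same loop once per model, with the same test samples and scorer, gives directly comparable numbers for the base and fine-tuned models.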

The evaluation code is in /llama3_8b_finetuning/model_evaluation.py.

import time

import torch
from peft import AutoPeftModelForCausalLM
from transformers import AutoModelForCausalLM, AutoTokenizer

import utils  # project module that holds the model paths
# format_instruction is defined elsewhere in the project (not shown here).


class ModelHandler:
    def __init__(self):
        pass

    def loading_model(self, model_chosen='fine_tuned_model'):
        if model_chosen == 'fine_tuned_model':
            # Loads the base weights together with the trained adapter.
            model_dir = utils.Variables.FINE_TUNED_MODEL_PATH
            self.model = AutoPeftModelForCausalLM.from_pretrained(
                model_dir,
                low_cpu_mem_usage=True,
                torch_dtype=torch.float16,
                load_in_4bit=True,
            )
        elif model_chosen == 'base_model':
            model_dir = utils.Variables.BASE_MODEL_PATH
            self.model = AutoModelForCausalLM.from_pretrained(
                model_dir,
                low_cpu_mem_usage=True,
                torch_dtype=torch.float16,
                load_in_4bit=True,
            )
        self.tokenizer = AutoTokenizer.from_pretrained(model_dir)

    def ask_question(self, instruction, temperature=0.5, max_new_tokens=1000):
        prompt = format_instruction(instruction)
        input_ids = self.tokenizer(prompt, return_tensors="pt", truncation=True).input_ids.cuda()
        start_time = time.time()
        with torch.inference_mode():
            outputs = self.model.generate(
                input_ids=input_ids,
                pad_token_id=self.tokenizer.eos_token_id,
                max_new_tokens=max_new_tokens,
                do_sample=True,
                top_p=0.5,
                temperature=temperature
            )
        end_time = time.time()
        # Wall-clock generation time and number of newly generated tokens (unused below).
        total_time = end_time - start_time
        output_length = len(outputs[0]) - len(input_ids[0])
        # The decoded text contains the prompt followed by the generated continuation.
        self.output = self.tokenizer.batch_decode(outputs.detach().cpu().numpy(), skip_special_tokens=True)[0]
        return self.output

The ROUGE scores are as follows:

Fine-tuned model:

{'average_rouge1': 0.39997816307812206, 'average_rouge2': 0.2213826792342886, 'average_rougeL': 0.33508922374837047}

Base model:

{'average_rouge1': 0.2524191394349585, 'average_rouge2': 0.13402054342344535, 'average_rougeL': 0.2115590931984475}

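The fine-tuned model therefore clearly outperforms the base model on the test dataset, with higher scores on all three ROUGE metrics (for example, a ROUGE-1 of about 0.40 versus 0.25).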
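To put the gap in relative terms, the short calculation below compares each fine-tuned score with the corresponding base score, using the numbers reported above rounded to four decimals. With that rounding, the fine-tuned model scores roughly 58% higher on ROUGE-1 and ROUGE-L and roughly 65% higher on ROUGE-2.

```python
# Scores reported above, rounded to four decimals.
fine_tuned = {"rouge1": 0.4000, "rouge2": 0.2214, "rougeL": 0.3351}
base = {"rouge1": 0.2524, "rouge2": 0.1340, "rougeL": 0.2116}


def relative_gain(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100


gains = {metric: relative_gain(fine_tuned[metric], base[metric]) for metric in fine_tuned}
for metric, gain in gains.items():
    print(f"{metric}: +{gain:.1f}%")
```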

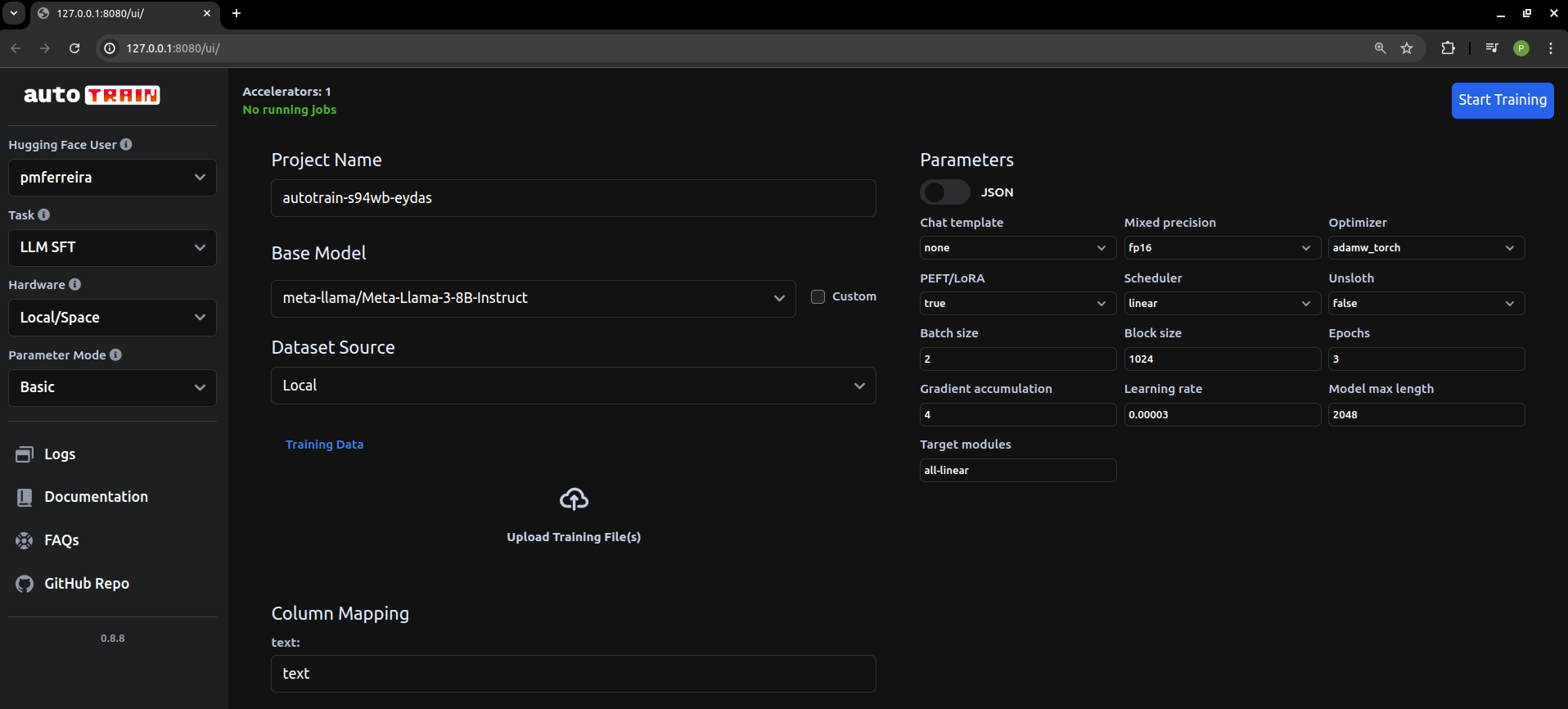

Writing this code and getting it to run took quite a while. It was good practice, but for day-to-day fine-tuning work, simply use Hugging Face AutoTrain hosted locally (https://github.com/huggingface/autotrain-advanced).