autoregressive diffusion pytorch

0.2.8

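Implementation of the architecture behind Autoregressive Image Generation without Vector Quantization, in Pytorch

The official repository has since been released

Alternative route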

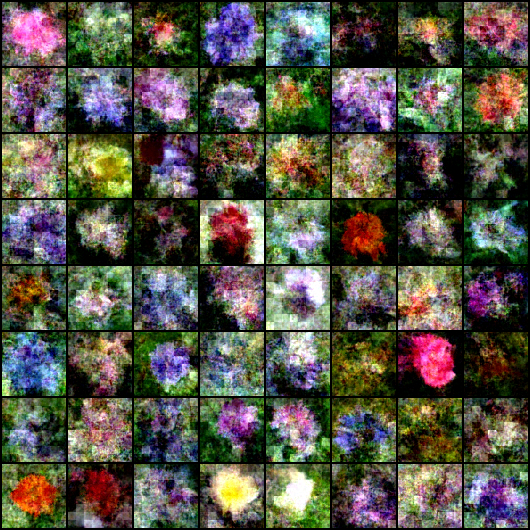

Oxford flowers at 96k steps

```bash
$ pip install autoregressive-diffusion-pytorch
```

```python
import torch
from autoregressive_diffusion_pytorch import AutoregressiveDiffusion

model = AutoregressiveDiffusion(
    dim_input = 512,
    dim = 1024,
    max_seq_len = 32,
    depth = 8,
    mlp_depth = 3,
    mlp_width = 1024
)

# a batch of 3 sequences, each with 32 continuous tokens of dimension 512
seq = torch.randn(3, 32, 512)

loss = model(seq)
loss.backward()

# sampled sequences have the same shape as the training batch
sampled = model.sample(batch_size = 3)

assert sampled.shape == seq.shape
```

For images treated as a sequence of tokens (as in the paper)
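To make "a sequence of tokens" concrete, here is a minimal framework-free sketch, assuming ViT-style non-overlapping square patches flattened in row-major order. The helpers `patch_sequence_shape` and `patchify` are hypothetical illustrations, not part of the library's API, and the library's actual patch layout may differ.

```python
def patch_sequence_shape(image_size, patch_size, channels=3):
    """Return (num_tokens, token_dim) for a square image cut into square patches."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    return patches_per_side ** 2, channels * patch_size ** 2


def patchify(image, patch_size):
    """Turn a nested-list image indexed [channel][row][col] into a list of flat patch tokens."""
    channels = len(image)
    height, width = len(image[0]), len(image[0][0])
    tokens = []
    # walk the patch grid left to right, top to bottom
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            tokens.append([
                image[c][top + dy][left + dx]
                for c in range(channels)
                for dy in range(patch_size)
                for dx in range(patch_size)
            ])
    return tokens


print(patch_sequence_shape(64, 8))    # (64, 192)
print(patch_sequence_shape(128, 16))  # (64, 768)

# a tiny 1-channel 4x4 image with 2x2 patches gives 4 tokens of 4 values each
tiny = [[[row * 4 + col for col in range(4)] for row in range(4)]]
tokens = patchify(tiny, patch_size=2)
```

Under these assumptions, a configuration with `image_size = 64` and `patch_size = 8` corresponds to 64 tokens per image, each holding 192 values.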

```python
import torch
from autoregressive_diffusion_pytorch import ImageAutoregressiveDiffusion

# images are treated as a sequence of patch tokens
model = ImageAutoregressiveDiffusion(
    model = dict(
        dim = 1024,
        depth = 12,
        heads = 12,
    ),
    image_size = 64,
    patch_size = 8
)

# a batch of 3 RGB images at 64 x 64
images = torch.randn(3, 3, 64, 64)

loss = model(images)
loss.backward()

# sampled images have the same shape as the training batch
sampled = model.sample(batch_size = 3)

assert sampled.shape == images.shape
```

An image trainer

```python
import torch

from autoregressive_diffusion_pytorch import (
    ImageDataset,
    ImageAutoregressiveDiffusion,
    ImageTrainer
)

# folder of training images, loaded at 128 x 128
dataset = ImageDataset(
    '/path/to/your/images',
    image_size = 128
)

model = ImageAutoregressiveDiffusion(
    model = dict(
        dim = 512
    ),
    image_size = 128,
    patch_size = 16
)

trainer = ImageTrainer(
    model = model,
    dataset = dataset
)

# calling the trainer starts training
trainer()
```

For an improvised version using flow matching, just import `ImageAutoregressiveFlow` and `AutoregressiveFlow` instead.

The rest is the same.
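For intuition about what the flow matching variant changes, below is a minimal framework-free sketch of the rectified flow idea (Liu et al., cited in this README). It assumes the common convention of noise at `t = 0` and data at `t = 1`. The function names are hypothetical, and this is not the library's implementation. A perfect "oracle" velocity stands in for a trained network.

```python
import random


def interpolate(noise, data, t):
    """Point on the straight path from noise (t=0) to data (t=1)."""
    return [(1.0 - t) * n + t * d for n, d in zip(noise, data)]


def target_velocity(noise, data):
    """Regression target for the velocity network, constant along the straight path."""
    return [d - n for n, d in zip(noise, data)]


def euler_sample(noise, velocity_fn, steps=10):
    """Integrate dx/dt = velocity_fn(x, t) from t=0 to t=1 with fixed Euler steps."""
    x = list(noise)
    dt = 1.0 / steps
    for i in range(steps):
        v = velocity_fn(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


random.seed(0)
data = [0.5, -1.0, 2.0]
noise = [random.gauss(0.0, 1.0) for _ in data]

# one training pair: a noised input at a random time and the velocity to regress onto
t = random.random()
x_t = interpolate(noise, data, t)
v_target = target_velocity(noise, data)

# assumption: a perfectly trained network would return exactly v_target
oracle = lambda x, t: v_target
recovered = euler_sample(noise, oracle, steps=10)
```

With the exact velocity, Euler integration from the noise sample lands back on the data point up to floating point error. A trained network only approximates this velocity, so real samples depend on model quality and step count.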

```python
import torch

from autoregressive_diffusion_pytorch import (
    ImageDataset,
    ImageTrainer,
    ImageAutoregressiveFlow,
)

dataset = ImageDataset(
    '/path/to/your/images',
    image_size = 128
)

# same setup as the image trainer, with the flow matching model swapped in
model = ImageAutoregressiveFlow(
    model = dict(
        dim = 512
    ),
    image_size = 128,
    patch_size = 16
)

trainer = ImageTrainer(
    model = model,
    dataset = dataset
)

trainer()
```

```bibtex
@article{Li2024AutoregressiveIG,
    title   = {Autoregressive Image Generation without Vector Quantization},
    author  = {Tianhong Li and Yonglong Tian and He Li and Mingyang Deng and Kaiming He},
    journal = {ArXiv},
    year    = {2024},
    volume  = {abs/2406.11838},
    url     = {https://api.semanticscholar.org/CorpusID:270560593}
}

@article{Wu2023ARDiffusionAD,
    title   = {AR-Diffusion: Auto-Regressive Diffusion Model for Text Generation},
    author  = {Tong Wu and Zhihao Fan and Xiao Liu and Yeyun Gong and Yelong Shen and Jian Jiao and Haitao Zheng and Juntao Li and Zhongyu Wei and Jian Guo and Nan Duan and Weizhu Chen},
    journal = {ArXiv},
    year    = {2023},
    volume  = {abs/2305.09515},
    url     = {https://api.semanticscholar.org/CorpusID:258714669}
}

@article{Karras2022ElucidatingTD,
    title   = {Elucidating the Design Space of Diffusion-Based Generative Models},
    author  = {Tero Karras and Miika Aittala and Timo Aila and Samuli Laine},
    journal = {ArXiv},
    year    = {2022},
    volume  = {abs/2206.00364},
    url     = {https://api.semanticscholar.org/CorpusID:249240415}
}

@article{Liu2022FlowSA,
    title   = {Flow Straight and Fast: Learning to Generate and Transfer Data with Rectified Flow},
    author  = {Xingchao Liu and Chengyue Gong and Qiang Liu},
    journal = {ArXiv},
    year    = {2022},
    volume  = {abs/2209.03003},
    url     = {https://api.semanticscholar.org/CorpusID:252111177}
}

@article{Esser2024ScalingRF,
    title   = {Scaling Rectified Flow Transformers for High-Resolution Image Synthesis},
    author  = {Patrick Esser and Sumith Kulal and A. Blattmann and Rahim Entezari and Jonas Muller and Harry Saini and Yam Levi and Dominik Lorenz and Axel Sauer and Frederic Boesel and Dustin Podell and Tim Dockhorn and Zion English and Kyle Lacey and Alex Goodwin and Yannik Marek and Robin Rombach},
    journal = {ArXiv},
    year    = {2024},
    volume  = {abs/2403.03206},
    url     = {https://api.semanticscholar.org/CorpusID:268247980}
}
```