本計畫提供了一個大規模中文對話資料集,並提供了在此資料集上的中文對話預訓練模型(中文GPT模型),更多資訊可參考我們的論文。

本專案程式碼修改自TransferTransfo,使用了HuggingFace Pytorch版的Transformers庫, 可用於預訓練與微調。

from datasets import load_dataset

dataset = load_dataset ( "lccc" , "base" ) # or "large"我們所提供的資料集LCCC(Large-scale Cleaned Chinese Conversation)主要包含兩部分: LCCC-base (百度網盤, Google Drive) 和LCCC-large (百度網盤, Google Drive). 我們設計了一套嚴格的資料過濾流程來確保該資料集中對話資料的品質。 此資料過濾流程中包含一系列手動規則以及若干基於機器學習演算法所建構的分類器。 我們所過濾掉的噪音包括:髒字髒詞、特殊字元、顏表情、語法不通的語句、上下文不相關的對話等。

此資料集的統計資訊如下表所示。 其中,我們將只包含兩個語句的對話稱為“單輪對話”,我們將包含兩個以上語句的對話稱為“多輪對話”。 統計詞表大小時使用Jieba 分詞。

| LCCC-base (百度網盤, Google Drive) | 單輪對話 | 多輪對話 |

|---|---|---|

| 總對話輪次 | 3,354,232 | 3,466,274 |

| 總對話語句 | 6,708,464 | 13,365,256 |

| 總字元數 | 68,559,367 | 163,690,569 |

| 詞表大小 | 372,063 | 666,931 |

| 話語句的平均詞數 | 6.79 | 8.32 |

| 每輪對話的平均語句數 | 2 | 3.86 |

請注意, LCCC-base 資料集的清洗過程比LCCC-large 更為嚴格,因此其規模也更小。

| LCCC-large (百度網盤, Google Drive) | 單輪對話 | 多輪對話 |

|---|---|---|

| 總對話輪次 | 7,273,804 | 4,733,955 |

| 總對話語句 | 14,547,608 | 18,341,167 |

| 總字元數 | 162,301,556 | 217,776,649 |

| 詞表大小 | 662,514 | 690,027 |

| 對話語句的評價詞數 | 7.45 | 8.14 |

| 每輪對話的平均語句數 | 2 | 3.87 |

LCCC-base 資料集中的原始對話資料來自於微博對話,LCCC-large 資料集中的原始對話資料在這些微博對話的基礎上融合了其他開源對話資料集:

| 數據集 | 總對話輪次 | 對話範例 |

|---|---|---|

| Weibo Corpus | 79M | Q:火鍋我在重慶成都吃了七八頓火鍋A: 哈哈哈哈!那我的嘴巴可能要爛掉! |

| PTT Gossiping Corpus | 0.4M | Q:為什麼鄉下人總是欺負國高中生呢QQ A:如果以為選好科係就會變成比爾蓋茲那不如退學吧 |

| Subtitle Corpus | 2.74M | Q:京戲裡頭的人都是不自由的A:他們讓人拿籠子給套起來了 |

| Xiaohuangji Corpus | 0.45M | Q:你談過戀愛麼A:談過,哎,別提了,傷心.. |

| Tieba Corpus | 2.32M | Q:前排,魯迷都起床了吧A:標題說助攻,但是看了那球,真是活生生的諷刺了 |

| Qingyun Corpus | 0.1M | Q:看來你很愛錢A:噢是嗎?那你也差不多了 |

| Douban Conversation Corpus | 0.5M | Q:看原版英文電影學純正英語A:大愛老友記反覆看了好多次了Q:一樣光盤都快被我看花了A:那你現在的英語應該不錯了 |

| E-commerical Conversation Corpus | 0.5M | Q:這個會不會聚划算A:暫時沒有哦Q:後期會不會有A:不一定哦親多多關注我們哦 |

| Chinese Chat Corpus | 0.5M | Q: 我今天腿都廢了,你們過節,我搬磚A: 辛苦啊,聖誕節還去賺大錢了加油Q: 畢竟是沒男朋友的人,什麼節都是一樣的 |

我們同時提供了一系列中文預訓練模型(中文GPT模型),這些模型的預訓練過程分為兩步,首先在一個中文小說資料上預先訓練,然後在LCCC資料集上預先訓練。

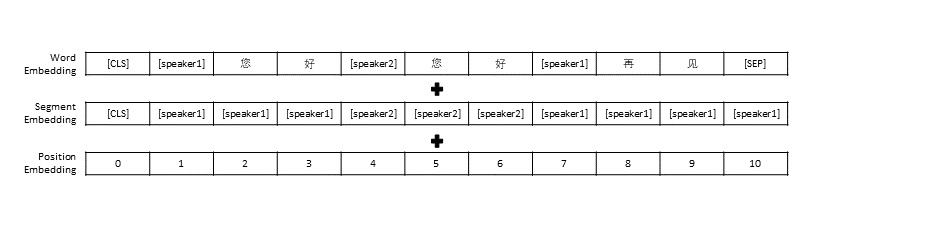

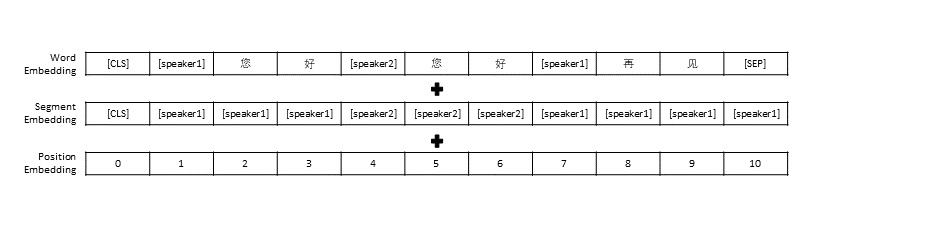

我們沿用了TransferTransfo 中的資料預處理設定,既將所有的對話歷史拼接為一個句子,然後使用這個句子作為模型的輸入,預測對話回應。我們模型的輸入除了各個單字的向量表示外,還包括發話者向量表示和位置向量表示。

| 預訓練模型 | 參數數量 | 預訓練所使用數據 | 描述 |

|---|---|---|---|

| GPT Novel | 95.5M | 中文小說數據 | 以中文小說資料建構中文預訓練GPT模型(此小說資料共包含1.3B個字) |

| CDial-GPT LCCC-base | 95.5M | LCCC-base | 在GPT Novel的基礎上,使用LCCC-base 訓練得到的中文預訓練GPT模型 |

| CDial-GPT2 LCCC-base | 95.5M | LCCC-base | 在GPT Novel的基礎上,使用LCCC-base 訓練得到的中文預訓練GPT2模型 |

| CDial-GPT LCCC-large | 95.5M | LCCC-large | 在GPT Novel的基礎上,使用LCCC-large 訓練得到的中文預訓練GPT模型 |

從原始碼直接安裝:

git clone https://github.com/thu-coai/CDial-GPT.git

cd CDial-GPT

pip install -r requirements.txt

Step 1: 準備預訓練模型和fine-tuning 所需使用的資料集(如STC dataset 或該項目目錄中的toy資料"data/toy_data.json", 請注意如資料中包含英文需按字母分割如: hello)

# 下载 STC 数据集 中的训练集和验证集 并将其解压至 "data_path" 目录 (如果微调所使用的数据集为 STC)

git lfs install

git clone https://huggingface.co/thu-coai/CDial-GPT_LCCC-large # 您可自行下载模型或者OpenAIGPTLMHeadModel.from_pretrained("thu-coai/CDial-GPT_LCCC-large")

ps:可以使用以下連結下載STC的訓練集和驗證集(百度網盤, Google Drive)

Step 2: 訓練模型

python train.py --pretrained --model_checkpoint thu-coai/CDial-GPT_LCCC-large --data_path data/STC.json --scheduler linear # 使用单个GPU进行训练

或者

python -m torch.distributed.launch --nproc_per_node=8 train.py --pretrained --model_checkpoint thu-coai/CDial-GPT_LCCC-large --data_path data/STC.json --scheduler linear # 以分布式的方式在8块GPU上训练

我們的訓練腳本中也提供了train_path參數,使用者可使用該參數以切片地形式讀取純文字檔案。如果您所使用的系統中記憶體有限,可以考慮使用此參數讀入訓練資料。 如果您使用train_path則需要將data_path置空。

Step 3: 生成文本

# YOUR_MODEL_PATH: 你要使用的模型的路径,每次微调后的模型目录保存在./runs/中

python infer.py --model_checkpoint YOUR_MODEL_PATH --datapath data/STC_test.json --out_path STC_result.txt # 在测试数据上生成回复

python interact.py --model_checkpoint YOUR_MODEL_PATH # 在命令行中与模型进行交互

ps:可以使用以下連結下載STC的測試集(百度網碟, Google Drive)

訓練腳本參數

| 參數 | 類型 | 預設值 | 描述 |

|---|---|---|---|

| model_checkpoint | str | "" | Path or URL of model files (Directory of pre-training model and config/vocab files) |

| pretrained | bool | False | If False, then train the model from scratch |

| data_path | str | "" | Path of the dataset |

| dataset_cache | str | default="dataset_cache" | Path or url of the dataset cache |

| train_path | str | "" | Path of the training set for distributed dataset |

| valid_path | str | "" | Path of the validation set for distributed dataset |

| log_file | str | "" | Output logs to a file under this path |

| num_workers | int | 1 | Number of subprocesses for data loading |

| n_epochs | int | 70 | Number of training epochs |

| train_batch_size | int | 8 | Batch size for training |

| valid_batch_size | int | 8 | Batch size for validation |

| max_history | int | 15 | Number of previous exchanges to keep in history |

| scheduler | str | "noam" | Method of optimizer |

| n_emd | int | 768 | Number of n_emd in config file (for noam) |

| eval_before_start | bool | False | If true, start evaluation before training |

| warmup_steps | int | 5000 | Warm up steps |

| valid_steps | int | 0 | Perform validation every X steps, if is not 0 |

| gradient_accumulation_steps | int | 64 | Accumulate gradients on several steps |

| max_norm | float | 1.0 | Clipping gradient norm |

| device | str | "cuda" if torch.cuda.is_available() else "cpu" | Device (cuda or cpu) |

| fp16 | str | "" | Set to O0, O1, O2 or O3 for fp16 training (see apex documentation) |

| local_rank | int | -1 | Local rank for distributed training (-1: not distributed) |

我們評測了使用STC資料集(訓練集/驗證集(百度網盤, Google Drive), 測試集(百度網盤, Google Drive)) 微調後的對話預訓練模型。 所有的回覆均使用Nucleus Sampling 的方法採樣得到(p=0.9, temperature=0.7)。

| 模型 | 模型大小 | PPL | BLEU-2 | BLEU-4 | Dist-1 | Dist-2 | Greedy Matching | Embedding Average |

|---|---|---|---|---|---|---|---|---|

| Attn-Seq2seq | 73M | 34.20 | 3.93 | 0.90 | 8.5 | 11.91 | 65.84 | 83.38 |

| Transformer | 113M | 22.10 | 6.72 | 3.14 | 8.8 | 13.97 | 66.06 | 83.55 |

| GPT2-chitchat | 88M | - | 2.28 | 0.54 | 10.3 | 16.25 | 61.54 | 78.94 |

| GPT Novel | 95.5M | 21.27 | 5.96 | 2.71 | 8.0 | 11.72 | 66.12 | 83.34 |

| GPT LCCC-base | 95.5M | 18.38 | 6.48 | 3.08 | 8.3 | 12.68 | 66.21 | 83.54 |

| GPT2 LCCC-base | 95.5M | 22.76 | 5.69 | 2.50 | 7.7 | 10.87 | 66.24 | 83.46 |

| GPT LCCC-large | 95.5M | 18.23 | 6.63 | 3.20 | 8.3 | 12.71 | 66.25 | 83.63 |

我們為每個模型採樣了200個回复,並在外包平台上邀請了3位標註員對這些回復進行人工評測,評測維度為對話流暢性、上下文相關性和回復多樣性。每個維度的評分範圍為2/1/0。更多細節請參考我們的論文。

| 模型 | +2 | +1 | +0 | Kappa |

|---|---|---|---|---|

| Attn-Seq2Seq | 27.1% | 21.4% | 51.4% | 0.4544 |

| Transformer | 42.4% | 23.6% | 34.0% | 0.4429 |

| GPT2-chitchat | 24.3% | 19,1% | 56.6% | 0.3941 |

| GPT LCCC-base | 46.7% | 21.8% | 31.5% | 0.3954 |

| GPT LCCC-large | 48.6% | 24.5% | 27.0% | 0.4203 |

我們隨機採樣了1000個對話回复,並統計了這些回復中的不良回复:

| 包含重複語句 | 包含髒字 | 其他不良回覆 |

|---|---|---|

| 23 | 9 | 2 |

我們在STC資料集上微調了GPT LCCC-large模型的參數,並使用此微調後的模型產生如下對話範例:

| Post | Response |

|---|---|

| 這居然是北京不是哈爾濱。 | 哈爾濱的天氣好像比北京好點, 我這裡就是小雨, 白天都是大風 |

| 經營者校園廣告大PK , 太歡樂了! 哈哈哈。 | 我以為是馬雲的廣告。 |

| D elsa S posa 糖果色系列婚紗, 粉紅藍紅紫, 俏皮又清新, 你喜歡嗎? | 最後一件太美了, 美的不像話! |

我們也使用上述微調後的模型得到如下交互作用結果:

| Roel | Response |

|---|---|

| User | 別愛我沒結果 |

| Bot | 沒結果的愛是多麼的痛。 |

| User | 但是愛過就可以了 |

| Bot | 有時候,愛情就是一場遊戲。 |

| User | 輸的總是我 |

| Bot | 我愛你, 就像我愛你一樣。 |

| User | 你可拉到吧 |

| Bot | 你可拉到吧。 |

| User | 別學我說話 |

| Bot | 我說話就是你不行。 |

| User | 逼我動手吧是吧 |

| Bot | 不, 是逼你動口是吧 |

Yida Wang, Pei Ke, Yinhe Zheng, Kaili Huang, Yong Jiang, Xiaoyan Zhu, Minlie Huang

Zuoxian Ye, Yao Wang, Yifan Pan

本計畫所提供的LCCC 資料集和預訓練對話模型僅限於科學研究用途。 LCCC資料集中的對話收集自不同的來源,雖然我們設計了一套嚴格的資料清洗流程,但我們並不保證所有不當內容均已被過濾。該數據中所包含的所有內容和意見與本項目作者無關。 本專案所提供的模型和程式碼僅為完整對話系統的一個組成部分,我們所提供的解碼腳本僅限研究用途,使用本專案中的模型和腳本所產生的一切對話內容與本專案作者無關。

如果您覺得我們的專案對您有幫助,請引用我們的論文:

@inproceedings{wang2020chinese,

title={A Large-Scale Chinese Short-Text Conversation Dataset},

author={Wang, Yida and Ke, Pei and Zheng, Yinhe and Huang, Kaili and Jiang, Yong and Zhu, Xiaoyan and Huang, Minlie},

booktitle={NLPCC},

year={2020},

url={https://arxiv.org/abs/2008.03946}

}

This project provides a large-scale cleaned Chinese conversation dataset and a Chinese GPT model pre-trained on this dataset. Please refer to our paper for more details.

Our code used for the pre-training is adapted from the TransferTransfo model based on the Transformers library. The codes used for both pre-training and fine-tuning are provided in this repository.

We present a Large-scale Cleaned Chinese Conversation corpus (LCCC) containing: LCCC-base (Baidu Netdisk, Google Drive) and LCCC-large (Baidu Netdisk, Google Drive). A rigorous data cleaning pipeline is designed to ensure the quality of the corpus. This pipeline involves a set of rules and several classifier-based filters. Noises such as offensive or sensitive words, special symbols, emojis, grammatically incorrect sentences, and incoherent conversations are filtered.

The statistic of our corpus is presented below. Dialogues with only two utterances are regarded as "Single-turn", and dialogues with more than three utterances are regarded as "Multi-turn". The vocabulary three is calabary Jieba is used to tokenize each utterance to words.

| LCCC-base (Baidu Netdisk, Google Drive) | Single-turn | Multi-turn |

|---|---|---|

| Sessions | 3,354,382 | 3,466,607 |

| Utterances | 6,708,554 | 13,365,268 |

| Characters | 68,559,727 | 163,690,614 |

| Vocabulary | 372,063 | 666,931 |

| Avg. 字 per utterance | 6.79 | 8.32 |

| Avg. utterances per session | 2 | 3.86 |

Note that LCCC-base is cleaned using more strict rules compared to LCCC-large.

| LCCC-large (Baidu Netdisk, Google Drive) | Single-turn | Multi-turn |

|---|---|---|

| Sessions | 7,273,804 | 4,733,955 |

| Utterances | 14,547,608 | 18,341,167 |

| Characters | 162,301,556 | 217,776,649 |

| Vocabulary | 662,514 | 690,027 |

| Avg. 字 per utterance | 7.45 | 8.14 |

| Avg. utterances per session | 2 | 3.87 |

The raw dialogues for LCCC-base originate from a Weibo Corpus that we crawled from Weibo, and the raw dialogues for LCCC-large is built by combining several conversation datasets in addition to the Weibo Corpus:

| Dataset | Sessions | Sample |

|---|---|---|

| Weibo Corpus | 79M | Q:火鍋我在重慶成都吃了七八頓火鍋A: 哈哈哈哈!那我的嘴巴可能要爛掉! |

| PTT Gossiping Corpus | 0.4M | Q:為什麼鄉下人總是欺負國高中生呢QQ A:如果以為選好科係就會變成比爾蓋茲那不如退學吧 |

| Subtitle Corpus | 2.74M | Q:京戲裡頭的人都是不自由的A:他們讓人拿籠子給套起來了 |

| Xiaohuangji Corpus | 0.45M | Q:你談過戀愛麼A:談過,哎,別提了,傷心.. |

| Tieba Corpus | 2.32M | Q:前排,魯迷都起床了吧A:標題說助攻,但是看了那球,真是活生生的諷刺了 |

| Qingyun Corpus | 0.1M | Q:看來你很愛錢A:噢是嗎?那你也差不多了 |

| Douban Conversation Corpus | 0.5M | Q:看原版英文電影學純正英語A:大愛老友記反覆看了好多次了Q:一樣光盤都快被我看花了A:那你現在的英語應該不錯了 |

| E-commerical Conversation Corpus | 0.5M | Q:這個會不會聚划算A:暫時沒有哦Q:後期會不會有A:不一定哦親多多關注我們哦 |

| Chinese Chat Corpus | 0.5M | Q: 我今天腿都廢了,你們過節,我搬磚A: 辛苦啊,聖誕節還去賺大錢了加油Q: 畢竟是沒男朋友的人,什麼節都是一樣的 |

We also present a series of Chinese GPT model that are first pre-trained on a Chinese novel dataset and then post-trained on our LCCC dataset.

Similar to TransferTransfo, we concatenate all dialogue histories into one context sentence, and use this sentence to predict the response. The input of our model consists of word embedding, speaker embedding, and positional embedding.

| Models | Parameter Size | Pre-training Dataset | Description |

|---|---|---|---|

| GPT Novel | 95.5M | Chinese Novel | A GPT model pre-trained on Chinese Novel dataset (1.3B words, note that we do not provide the detail of this model) |

| CDial-GPT LCCC-base | 95.5M | LCCC-base | A GPT model post-trained on LCCC-base dataset from GPT Novel |

| CDial-GPT2 LCCC-base | 95.5M | LCCC-base | A GPT2 model post-trained on LCCC-base dataset from GPT Novel |

| CDial-GPT LCCC-large | 95.5M | LCCC-large | A GPT model post-trained on LCCC-large dataset from GPT Novel |

Install from the source codes:

git clone https://github.com/thu-coai/CDial-GPT.git

cd CDial-GPT

pip install -r requirements.txt

Step 1: Prepare the data for fine-tuning (Eg, STC dataset or "data/toy_data.json" in our respository) and the pre-trianed model:

# Download the STC dataset and unzip into "data_path" dir (fine-tuning on STC)

git lfs install

git clone https://huggingface.co/thu-coai/CDial-GPT_LCCC-large # or OpenAIGPTLMHeadModel.from_pretrained("thu-coai/CDial-GPT_LCCC-large")

ps: You can download the train and valid split of STC from the following links: (Baidu Netdisk, Google Drive)

Step 2: Train the model

python train.py --pretrained --model_checkpoint thu-coai/CDial-GPT_LCCC-large --data_path data/STC.json --scheduler linear # Single GPU training

or

python -m torch.distributed.launch --nproc_per_node=8 train.py --pretrained --model_checkpoint thu-coai/CDial-GPT_LCCC-large --data_path data/STC.json --scheduler linear # Training on 8 GPUs

Note: We have also provided train_path argument in the training script to read dataset in plain text, which will be sliced and handled distributionally. You can consider to use this argument if the dataset is too large can consider to use this argument if the dataset is too large em. leave the data_path argument empty if you are using train_path ).

Step 3: Inference mode

# YOUR_MODEL_PATH: the model path used for generation

python infer.py --model_checkpoint YOUR_MODEL_PATH --datapath data/STC_test.json --out_path STC_result.txt # Do Inference on a corpus

python interact.py --model_checkpoint YOUR_MODEL_PATH # Interact on the terminal

ps: You can download the test split of STC from the following links: (Baidu Netdisk, Google Drive)

Training Arguments

| Arguments | Type | Default value | Description |

|---|---|---|---|

| model_checkpoint | str | "" | Path or URL of model files (Directory of pre-training model and config/vocab files) |

| pretrained | bool | False | If False, then train the model from scratch |

| data_path | str | "" | Path of the dataset |

| dataset_cache | str | default="dataset_cache" | Path or url of the dataset cache |

| train_path | str | "" | Path of the training set for distributed dataset |

| valid_path | str | "" | Path of the validation set for distributed dataset |

| log_file | str | "" | Output logs to a file under this path |

| num_workers | int | 1 | Number of subprocesses for data loading |

| n_epochs | int | 70 | Number of training epochs |

| train_batch_size | int | 8 | Batch size for training |

| valid_batch_size | int | 8 | Batch size for validation |

| max_history | int | 15 | Number of previous exchanges to keep in history |

| scheduler | str | "noam" | Method of optimizer |

| n_emd | int | 768 | Number of n_emd in config file (for noam) |

| eval_before_start | bool | False | If true, start evaluation before training |

| warmup_steps | int | 5000 | Warm up steps |

| valid_steps | int | 0 | Perform validation every X steps, if is not 0 |

| gradient_accumulation_steps | int | 64 | Accumulate gradients on several steps |

| max_norm | float | 1.0 | Clipping gradient norm |

| device | str | "cuda" if torch.cuda.is_available() else "cpu" | Device (cuda or cpu) |

| fp16 | str | "" | Set to O0, O1, O2 or O3 for fp16 training (see apex documentation) |

| local_rank | int | -1 | Local rank for distributed training (-1: not distributed) |

Evaluation is performed on results generated by models fine-tuned on

STC dataset (Train/Valid split (Baidu Netdisk, Google Drive), Test split (Baidu Netdisk, Google Drive)). All responses are generated using the Nucleus Sampling scheme with a threshold 0.9 and temperature 0.7.

| Models | Model Size | PPL | BLEU-2 | BLEU-4 | Dist-1 | Dist-2 | Greedy Matching | Embedding Average |

|---|---|---|---|---|---|---|---|---|

| Attn-Seq2seq | 73M | 34.20 | 3.93 | 0.90 | 8.5 | 11.91 | 65.84 | 83.38 |

| Transformer | 113M | 22.10 | 6.72 | 3.14 | 8.8 | 13.97 | 66.06 | 83.55 |

| GPT2-chitchat | 88M | - | 2.28 | 0.54 | 10.3 | 16.25 | 61.54 | 78.94 |

| GPT Novel | 95.5M | 21.27 | 5.96 | 2.71 | 8.0 | 11.72 | 66.12 | 83.34 |

| GPT LCCC-base | 95.5M | 18.38 | 6.48 | 3.08 | 8.3 | 12.68 | 66.21 | 83.54 |

| GPT2 LCCC-base | 95.5M | 22.76 | 5.69 | 2.50 | 7.7 | 10.87 | 66.24 | 83.46 |

| GPT LCCC-large | 95.5M | 18.23 | 6.63 | 3.20 | 8.3 | 12.71 | 66.25 | 83.63 |

We also employed 3 crowd-sourced annotators to evaluate 200 samples of each model about fluency, relevance, and diversity based on a 2/1/0 score schema. Refer to our paper for more details.

| Models | +2 | +1 | +0 | Kappa |

|---|---|---|---|---|

| Attn-Seq2Seq | 27.1% | 21.4% | 51.4% | 0.4544 |

| Transformer | 42.4% | 23.6% | 34.0% | 0.4429 |

| GPT2-chitchat | 24.3% | 19,1% | 56.6% | 0.3941 |

| GPT LCCC-base | 46.7% | 21.8% | 31.5% | 0.3954 |

| GPT LCCC-large | 48.6% | 24.5% | 27.0% | 0.4203 |

We randomly select 1000 generated samples, and make a statistic of toxic generation:

| Repetition | Dirty word | Other Bad Cases |

|---|---|---|

| 23 | 9 | 2 |

Single-turn generated examples from STC dataset (fine-tuned on STC):

| Post | Response |

|---|---|

| 這居然是北京不是哈爾濱。 | 哈爾濱的天氣好像比北京好點, 我這裡就是小雨, 白天都是大風 |

| 經營者校園廣告大PK , 太歡樂了! 哈哈哈。 | 我以為是馬雲的廣告。 |

| D elsa S posa 糖果色系列婚紗, 粉紅藍紅紫, 俏皮又清新, 你喜歡嗎? | 最後一件太美了, 美的不像話! |

Multi-turn generated examples using the interactive script:

| Roel | Response |

|---|---|

| User | 別愛我沒結果 |

| Bot | 沒結果的愛是多麼的痛。 |

| User | 但是愛過就可以了 |

| Bot | 有時候,愛情就是一場遊戲。 |

| User | 輸的總是我 |

| Bot | 我愛你, 就像我愛你一樣。 |

| User | 你可拉到吧 |

| Bot | 你可拉到吧。 |

| User | 別學我說話 |

| Bot | 我說話就是你不行。 |

| User | 逼我動手吧是吧 |

| Bot | 不, 是逼你動口是吧 |

Yida Wang, Pei Ke, Yinhe Zheng, Kaili Huang, Yong Jiang, Xiaoyan Zhu, Minlie Huang

Zuoxian Ye, Yao Wang, Yifan Pan

The LCCC dataset and the pre-trained models aim to facilitate the research for conversation generation. The LCCC dataset provided in this repository originates from various sources. Although a rigorous cleaning process has been carried outgure is isalat the noappate carried outguate is prival spriate carried outgue is been completely filtered out. All the contents contained in this dataset does not represent the authors' opinion. This repository contains only part of the modeling machinery needed to actually produce a dialogue model。 We are not responsible for any contents generated using our model.

Please kindly cite our paper if you use the datasets or models in your research:

@inproceedings{wang2020chinese,

title={A Large-Scale Chinese Short-Text Conversation Dataset},

author={Wang, Yida and Ke, Pei and Zheng, Yinhe and Huang, Kaili and Jiang, Yong and Zhu, Xiaoyan and Huang, Minlie},

booktitle={NLPCC},

year={2020},

url={https://arxiv.org/abs/2008.03946}

}