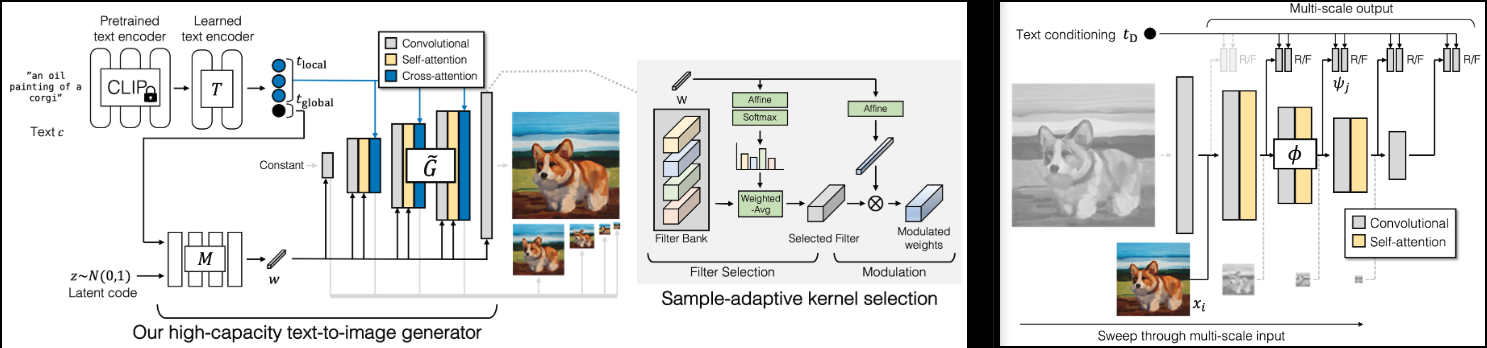

實施 GigaGAN(專案頁面),Adobe 推出的新 SOTA GAN。

我還將添加一些輕量級 gan 的發現,以實現更快的收斂(跳過層激勵)和更好的穩定性(鑑別器中的重建輔助損失)

它還包含 1k - 4k 上採樣器的程式碼,我認為這是本文的亮點。

如果您有興趣幫助 LAION 社區進行複製,請加入

穩定性人工智慧和?感謝慷慨的贊助,以及我的其他贊助商,感謝他們為我提供了開源人工智慧的獨立性。

? Huggingface 的加速程式庫

OpenClip 的所有維護者,感謝他們的 SOTA 開源對比學習文字圖像模型

Xavier 非常有幫助的程式碼審查,以及關於如何建立鑑別器中的尺度不變性的討論!

@CerebralSeed 請求生成器和上採樣器的初始採樣代碼!

Keerth 進行了程式碼審查並指出了與論文的一些差異!

$ pip install gigagan-pytorch簡單的無條件 GAN,適合初學者

import torch

from gigagan_pytorch import (

GigaGAN ,

ImageDataset

)

gan = GigaGAN (

generator = dict (

dim_capacity = 8 ,

style_network = dict (

dim = 64 ,

depth = 4

),

image_size = 256 ,

dim_max = 512 ,

num_skip_layers_excite = 4 ,

unconditional = True

),

discriminator = dict (

dim_capacity = 16 ,

dim_max = 512 ,

image_size = 256 ,

num_skip_layers_excite = 4 ,

unconditional = True

),

amp = True

). cuda ()

# dataset

dataset = ImageDataset (

folder = '/path/to/your/data' ,

image_size = 256

)

dataloader = dataset . get_dataloader ( batch_size = 1 )

# you must then set the dataloader for the GAN before training

gan . set_dataloader ( dataloader )

# training the discriminator and generator alternating

# for 100 steps in this example, batch size 1, gradient accumulated 8 times

gan (

steps = 100 ,

grad_accum_every = 8

)

# after much training

images = gan . generate ( batch_size = 4 ) # (4, 3, 256, 256)對於無條件 Unet 上採樣器

import torch

from gigagan_pytorch import (

GigaGAN ,

ImageDataset

)

gan = GigaGAN (

train_upsampler = True , # set this to True

generator = dict (

style_network = dict (

dim = 64 ,

depth = 4

),

dim = 32 ,

image_size = 256 ,

input_image_size = 64 ,

unconditional = True

),

discriminator = dict (

dim_capacity = 16 ,

dim_max = 512 ,

image_size = 256 ,

num_skip_layers_excite = 4 ,

multiscale_input_resolutions = ( 128 ,),

unconditional = True

),

amp = True

). cuda ()

dataset = ImageDataset (

folder = '/path/to/your/data' ,

image_size = 256

)

dataloader = dataset . get_dataloader ( batch_size = 1 )

gan . set_dataloader ( dataloader )

# training the discriminator and generator alternating

# for 100 steps in this example, batch size 1, gradient accumulated 8 times

gan (

steps = 100 ,

grad_accum_every = 8

)

# after much training

lowres = torch . randn ( 1 , 3 , 64 , 64 ). cuda ()

images = gan . generate ( lowres ) # (1, 3, 256, 256) G - 發電機MSG - 多尺度產生器D - 鑑別器MSD - 多尺度鑑別器GP梯度罰分SSL - 判別器中的輔助重建(來自輕量級 GAN)VD - 視覺輔助鑑別器VG - 視覺輔助產生器CL - 生成器比較損失MAL - 匹配感知損失健康的運作將使G 、 MSG 、 D 、 MSD值徘徊在0到10之間,並且通常保持相當恆定。如果在 1k 訓練步驟之後的任何時候這些值持續保持在三位數,則表示出現了問題。生成器和鑑別器的值偶爾會下降為負值是可以的,但它應該回升至上述範圍。

GP和SSL應推向0 。 GP偶爾會出現峰值;我喜歡將其想像為網路正在經歷某種頓悟

GigaGAN級現在配備了?加速器。您可以使用其accelerate CLI 輕鬆地透過兩步驟進行多 GPU 訓練

在訓練腳本所在的專案根目錄中,執行

$ accelerate config然後在同一個目錄下

$ accelerate launch train . py 確保可以無條件訓練

閱讀相關論文並消除所有 3 個輔助損失

unet上採樣器

對多尺度輸入和輸出進行程式碼審查,因為論文有點模糊

新增上採樣網路架構

使基礎產生器和上採樣器無條件工作

使文字條件訓練適用於基礎和上採樣器

透過隨機採樣補丁提高偵察效率

確保生成器和鑑別器也可以接受預先編碼的 CLIP 文字編碼

審查輔助損失

添加一些可微的增強,這是舊 GAN 時代經過驗證的技術

將所有調變投影移至自適應 conv2d 類別中

增加加速

剪輯對於所有模組來說都是可選的,並由GigaGAN管理,文字 -> 文字嵌入處理一次

加入從多尺度維度選擇隨機子集的能力,以提高效率

從輕量級|stylegan2-pytorch 透過 CLI 移植

連接文字影像的 laion 資料集

@misc { https://doi.org/10.48550/arxiv.2303.05511 ,

url = { https://arxiv.org/abs/2303.05511 } ,

author = { Kang, Minguk and Zhu, Jun-Yan and Zhang, Richard and Park, Jaesik and Shechtman, Eli and Paris, Sylvain and Park, Taesung } ,

title = { Scaling up GANs for Text-to-Image Synthesis } ,

publisher = { arXiv } ,

year = { 2023 } ,

copyright = { arXiv.org perpetual, non-exclusive license }

} @article { Liu2021TowardsFA ,

title = { Towards Faster and Stabilized GAN Training for High-fidelity Few-shot Image Synthesis } ,

author = { Bingchen Liu and Yizhe Zhu and Kunpeng Song and A. Elgammal } ,

journal = { ArXiv } ,

year = { 2021 } ,

volume = { abs/2101.04775 }

} @inproceedings { dao2022flashattention ,

title = { Flash{A}ttention: Fast and Memory-Efficient Exact Attention with {IO}-Awareness } ,

author = { Dao, Tri and Fu, Daniel Y. and Ermon, Stefano and Rudra, Atri and R{'e}, Christopher } ,

booktitle = { Advances in Neural Information Processing Systems } ,

year = { 2022 }

} @inproceedings { Karras2020ada ,

title = { Training Generative Adversarial Networks with Limited Data } ,

author = { Tero Karras and Miika Aittala and Janne Hellsten and Samuli Laine and Jaakko Lehtinen and Timo Aila } ,

booktitle = { Proc. NeurIPS } ,

year = { 2020 }

} @article { Xu2024VideoGigaGANTD ,

title = { VideoGigaGAN: Towards Detail-rich Video Super-Resolution } ,

author = { Yiran Xu and Taesung Park and Richard Zhang and Yang Zhou and Eli Shechtman and Feng Liu and Jia-Bin Huang and Difan Liu } ,

journal = { ArXiv } ,

year = { 2024 } ,

volume = { abs/2404.12388 } ,

url = { https://api.semanticscholar.org/CorpusID:269214195 }

}