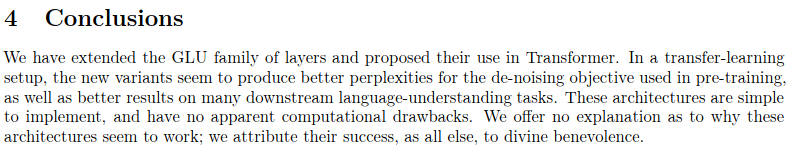

這是稀疏 Sinkhorn Attention 中概述的工作的複製品,並進行了額外的增強。

它包括一個參數化排序網絡,使用 Sinkhorn 標準化對排列矩陣進行採樣,該排列矩陣將最相關的鍵桶與查詢桶相匹配。

這項工作還引入了可逆網路和前饋分塊(從 Reformer 引入的概念),以進一步節省記憶體。

204k 代幣(演示目的)

$ pip install sinkhorn_transformer基於 Sinkhorn Transformer 的語言模型

import torch

from sinkhorn_transformer import SinkhornTransformerLM

model = SinkhornTransformerLM (

num_tokens = 20000 ,

dim = 1024 ,

heads = 8 ,

depth = 12 ,

max_seq_len = 8192 ,

bucket_size = 128 , # size of the buckets

causal = False , # auto-regressive or not

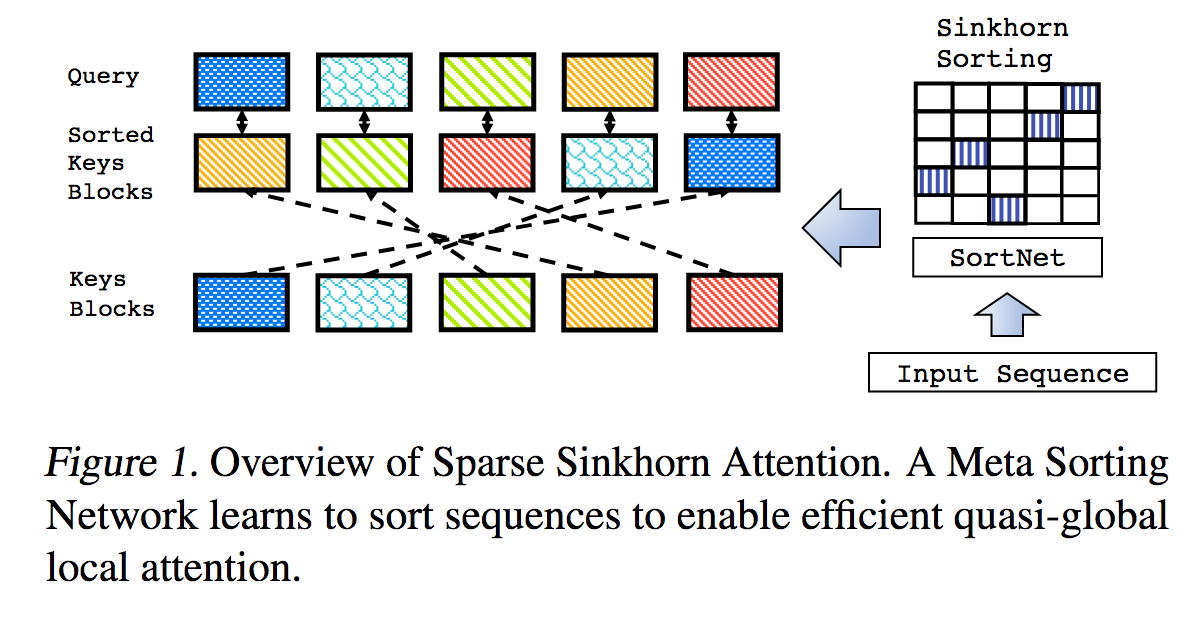

n_sortcut = 2 , # use sortcut to reduce memory complexity to linear

n_top_buckets = 2 , # sort specified number of key/value buckets to one query bucket. paper is at 1, defaults to 2

ff_chunks = 10 , # feedforward chunking, from Reformer paper

reversible = True , # make network reversible, from Reformer paper

emb_dropout = 0.1 , # embedding dropout

ff_dropout = 0.1 , # feedforward dropout

attn_dropout = 0.1 , # post attention dropout

attn_layer_dropout = 0.1 , # post attention layer dropout

layer_dropout = 0.1 , # add layer dropout, from 'Reducing Transformer Depth on Demand' paper

weight_tie = True , # tie layer parameters, from Albert paper

emb_dim = 128 , # embedding factorization, from Albert paper

dim_head = 64 , # be able to fix the dimension of each head, making it independent of the embedding dimension and the number of heads

ff_glu = True , # use GLU in feedforward, from paper 'GLU Variants Improve Transformer'

n_local_attn_heads = 2 , # replace N heads with local attention, suggested to work well from Routing Transformer paper

pkm_layers = ( 4 , 7 ), # specify layers to use product key memory. paper shows 1 or 2 modules near the middle of the transformer is best

pkm_num_keys = 128 , # defaults to 128, but can be increased to 256 or 512 as memory allows

)

x = torch . randint ( 0 , 20000 , ( 1 , 2048 ))

model ( x ) # (1, 2048, 20000)一個普通的Sinkhorn Transformer,層層的Sinkhorn注意力

import torch

from sinkhorn_transformer import SinkhornTransformer

model = SinkhornTransformer (

dim = 1024 ,

heads = 8 ,

depth = 12 ,

bucket_size = 128

)

x = torch . randn ( 1 , 2048 , 1024 )

model ( x ) # (1, 2048, 1024)Sinkhorn 編碼器/解碼器變壓器

import torch

from sinkhorn_transformer import SinkhornTransformerLM

DE_SEQ_LEN = 4096

EN_SEQ_LEN = 4096

enc = SinkhornTransformerLM (

num_tokens = 20000 ,

dim = 512 ,

depth = 6 ,

heads = 8 ,

bucket_size = 128 ,

max_seq_len = DE_SEQ_LEN ,

reversible = True ,

return_embeddings = True

). cuda ()

dec = SinkhornTransformerLM (

num_tokens = 20000 ,

dim = 512 ,

depth = 6 ,

causal = True ,

bucket_size = 128 ,

max_seq_len = EN_SEQ_LEN ,

receives_context = True ,

context_bucket_size = 128 , # context key / values can be bucketed differently

reversible = True

). cuda ()

x = torch . randint ( 0 , 20000 , ( 1 , DE_SEQ_LEN )). cuda ()

y = torch . randint ( 0 , 20000 , ( 1 , EN_SEQ_LEN )). cuda ()

x_mask = torch . ones_like ( x ). bool (). cuda ()

y_mask = torch . ones_like ( y ). bool (). cuda ()

context = enc ( x , input_mask = x_mask )

dec ( y , context = context , input_mask = y_mask , context_mask = x_mask ) # (1, 4096, 20000) 預設情況下,如果給定的輸入不是桶大小的倍數,模型會抱怨。為了避免每次都進行相同的填充計算,您可以使用幫助器Autopadder類別。如果給定的話,它也會為您處理input_mask 。也支援上下文鍵/值和遮罩。

import torch

from sinkhorn_transformer import SinkhornTransformerLM

from sinkhorn_transformer import Autopadder

model = SinkhornTransformerLM (

num_tokens = 20000 ,

dim = 1024 ,

heads = 8 ,

depth = 12 ,

max_seq_len = 2048 ,

bucket_size = 128 ,

causal = True

)

model = Autopadder ( model , pad_left = True ) # autopadder will fetch the bucket size and autopad input

x = torch . randint ( 0 , 20000 , ( 1 , 1117 )) # odd sequence length

model ( x ) # (1, 1117, 20000) 該存儲庫與論文有所不同,現在使用注意力機制代替原來的排序網+gumbel sinkhorn 採樣。我還沒有發現性能上有明顯的差異,新的方案允許我將網路推廣到靈活的序列長度。如果您想嘗試 Sinkhorn,請使用以下設置,該設置僅適用於非因果網路。

import torch

from sinkhorn_transformer import SinkhornTransformerLM

model = SinkhornTransformerLM (

num_tokens = 20000 ,

dim = 1024 ,

heads = 8 ,

depth = 12 ,

bucket_size = 128 ,

max_seq_len = 8192 ,

use_simple_sort_net = True , # turn off attention sort net

sinkhorn_iter = 7 , # number of sinkhorn iterations - default is set at reported best in paper

n_sortcut = 2 , # use sortcut to reduce complexity to linear time

temperature = 0.75 , # gumbel temperature - default is set at reported best in paper

non_permutative = False , # allow buckets of keys to be sorted to queries more than once

)

x = torch . randint ( 0 , 20000 , ( 1 , 8192 ))

model ( x ) # (1, 8192, 20000) 要看到使用 PKM 的好處,必須將值的學習率設定為高於其餘參數。 (建議為1e-2 )

您可以按照此處的說明正確設定 https://github.com/lucidrains/product-key-memory#learning-rates

Sinkhorn 在固定長度序列上進行訓練時,似乎無法從頭開始解碼序列,這主要是由於當桶部分填充填充令牌時排序網路難以泛化。

幸運的是,我想我已經找到了一個簡單的解決方案。在訓練過程中,對於因果網絡,隨機截斷序列並強制排序網絡進行泛化。我為AutoregressiveWrapper實例提供了一個標誌( randomly_truncate_sequence ),以簡化此操作。

import torch

from sinkhorn_transformer import SinkhornTransformerLM , AutoregressiveWrapper

model = SinkhornTransformerLM (

num_tokens = 20000 ,

dim = 1024 ,

heads = 8 ,

depth = 12 ,

bucket_size = 75 ,

max_seq_len = 8192 ,

causal = True

)

model = AutoregressiveWrapper ( model )

x = torch . randint ( 0 , 20000 , ( 1 , 8192 ))

loss = model ( x , return_loss = True , randomly_truncate_sequence = True ) # (1, 8192, 20000)如果有人找到更好的解決方案,我願意接受建議。

因果排序網路存在一個潛在的問題,其中將過去的哪些鍵/值儲存桶排序到儲存桶的決定僅取決於第一個令牌,而不依賴其餘令牌(由於分桶方案並防止未來的洩漏)過去的)。

我試圖通過將一半的頭向左旋轉桶大小 - 1 來緩解這個問題,從而將最後一個令牌提升為第一個。這也是AutoregressiveWrapper在訓練期間預設使用左填充的原因,以始終確保序列中的最後一個標記對要檢索的內容有發言權。

如果有人找到了更乾淨的解決方案,請在問題中告訴我。

@misc { tay2020sparse ,

title = { Sparse Sinkhorn Attention } ,

author = { Yi Tay and Dara Bahri and Liu Yang and Donald Metzler and Da-Cheng Juan } ,

year = { 2020 } ,

url. = { https://arxiv.org/abs/2002.11296 }

} @inproceedings { kitaev2020reformer ,

title = { Reformer: The Efficient Transformer } ,

author = { Nikita Kitaev and Lukasz Kaiser and Anselm Levskaya } ,

booktitle = { International Conference on Learning Representations } ,

year = { 2020 } ,

url = { https://openreview.net/forum?id=rkgNKkHtvB }

} @misc { lan2019albert ,

title = { ALBERT: A Lite BERT for Self-supervised Learning of Language Representations } ,

author = { Zhenzhong Lan and Mingda Chen and Sebastian Goodman and Kevin Gimpel and Piyush Sharma and Radu Soricut } ,

year = { 2019 } ,

url = { https://arxiv.org/abs/1909.11942 }

} @misc { shazeer2020glu ,

title = { GLU Variants Improve Transformer } ,

author = { Noam Shazeer } ,

year = { 2020 } ,

url = { https://arxiv.org/abs/2002.05202 }

} @misc { roy*2020efficient ,

title = { Efficient Content-Based Sparse Attention with Routing Transformers } ,

author = { Aurko Roy* and Mohammad Taghi Saffar* and David Grangier and Ashish Vaswani } ,

year = { 2020 } ,

url = { https://openreview.net/forum?id=B1gjs6EtDr }

} @inproceedings { fan2020reducing ,

title = { Reducing Transformer Depth on Demand with Structured Dropout } ,

author = { Angela Fan and Edouard Grave and Armand Joulin } ,

booktitle = { International Conference on Learning Representations } ,

year = { 2020 } ,

url = { https://openreview.net/forum?id=SylO2yStDr }

} @misc { lample2019large ,

title = { Large Memory Layers with Product Keys } ,

author = { Guillaume Lample and Alexandre Sablayrolles and Marc'Aurelio Ranzato and Ludovic Denoyer and Hervé Jégou } ,

year = { 2019 } ,

eprint = { 1907.05242 } ,

archivePrefix = { arXiv }

} @misc { bhojanapalli2020lowrank ,

title = { Low-Rank Bottleneck in Multi-head Attention Models } ,

author = { Srinadh Bhojanapalli and Chulhee Yun and Ankit Singh Rawat and Sashank J. Reddi and Sanjiv Kumar } ,

year = { 2020 } ,

eprint = { 2002.07028 }

}