glom pytorch

0.0.14

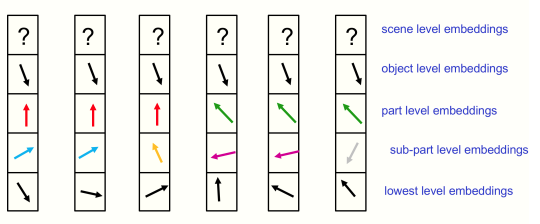

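An implementation of Glom, Geoffrey Hinton's new idea that integrates concepts from neural fields, top-down processing, and attention (consensus between columns) for learning emergent part-whole hierarchies from data.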

Yannic Kilcher's video was instrumental in helping me understand this paper.

Install:

    $ pip install glom-pytorch

Usage:

    import torch
    from glom_pytorch import Glom

    model = Glom(
        dim = 512,         # dimension
        levels = 6,        # number of levels
        image_size = 224,  # image size
        patch_size = 14    # patch size
    )

    img = torch.randn(1, 3, 224, 224)
    levels = model(img, iters = 12) # (1, 256, 6, 512) - (batch - patches - levels - dimension)

Pass the `return_all = True` keyword argument on forward, and you will be returned all the column and level states per iteration (including the initial state, so the number of iterations + 1). You can then use this to attach any loss to any level output at any time step.
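The shapes quoted in the code comments follow from the patch grid and the iteration count. As a rough sanity check, here is a small pure-Python sketch. `glom_output_shape` is a hypothetical helper written only for this illustration, not part of `glom_pytorch`, and it assumes square images split into non-overlapping square patches.

```python
def glom_output_shape(batch, image_size, patch_size, levels, dim, iters, return_all=False):
    """Expected output shape (hypothetical helper, not part of glom_pytorch).

    Assumes square images cut into non-overlapping square patches.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")

    patches_per_side = image_size // patch_size   # 224 // 14 = 16
    num_patches = patches_per_side ** 2           # 16 * 16 = 256
    shape = (batch, num_patches, levels, dim)

    if return_all:
        # the initial state is returned too, hence iters + 1 time steps
        return (iters + 1, *shape)
    return shape


print(glom_output_shape(1, 224, 14, 6, 512, iters=12))
# (1, 256, 6, 512)
print(glom_output_shape(1, 224, 14, 6, 512, iters=12, return_all=True))
# (13, 1, 256, 6, 512)
```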

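Returning all states also gives you access to the level data across every iteration for clustering, from which you can inspect for the theorized islands in the paper.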
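One simple way to look for such islands is to compare neighbouring patches at a given level: inside an island, adjacent columns should hold nearly identical vectors. The sketch below is only an illustration under stated assumptions. `neighbor_agreement` is a hypothetical helper, not part of `glom_pytorch`; cosine similarity is a measure chosen here for illustration rather than a procedure taken from the paper; and a random tensor (with a reduced dimension to keep it light) stands in for real `return_all = True` output.

```python
import torch
import torch.nn.functional as F


def neighbor_agreement(all_levels, t, level, grid_size):
    """Cosine similarity between each patch and its right / bottom neighbour.

    Hypothetical helper for illustration, not part of glom_pytorch.
    all_levels: (time, batch, patches, levels, dim), patches = grid_size ** 2,
                with patches laid out row by row over the image grid
    returns:    horizontal (batch, grid, grid - 1), vertical (batch, grid - 1, grid)
    """
    x = all_levels[t, :, :, level]                  # (batch, patches, dim)
    b, n, d = x.shape
    assert n == grid_size ** 2, "patches must form a square grid"

    x = x.reshape(b, grid_size, grid_size, d)       # (batch, rows, cols, dim)
    horizontal = F.cosine_similarity(x[:, :, :-1], x[:, :, 1:], dim=-1)
    vertical = F.cosine_similarity(x[:, :-1, :], x[:, 1:, :], dim=-1)
    return horizontal, vertical


# random stand-in for model(img, iters = 12, return_all = True)
all_levels = torch.randn(13, 1, 256, 6, 32)

# top level, final time step, 224 // 14 = 16 patches per side
horizontal, vertical = neighbor_agreement(all_levels, t=-1, level=-1, grid_size=16)
print(horizontal.shape, vertical.shape)
```

On a trained model, a contiguous region of high similarity bounded by lower similarity would be consistent with an island; the random stand-in data shows no such structure.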

    import torch
    from glom_pytorch import Glom

    model = Glom(
        dim = 512,         # dimension
        levels = 6,        # number of levels
        image_size = 224,  # image size
        patch_size = 14    # patch size
    )

    img = torch.randn(1, 3, 224, 224)
    all_levels = model(img, iters = 12, return_all = True) # (13, 1, 256, 6, 512) - (time, batch, patches, levels, dimension)

    # get the top level outputs after iteration 6
    top_level_output = all_levels[7, :, :, -1] # (1, 256, 512) - (batch, patches, dimension)

Denoising self-supervised learning for encouraging emergence, as described by Hinton:

import torch

import torch . nn . functional as F

from torch import nn

from einops . layers . torch import Rearrange

from glom_pytorch import Glom

model = Glom (

dim = 512 , # dimension

levels = 6 , # number of levels

image_size = 224 , # image size

patch_size = 14 # patch size

)

img = torch . randn ( 1 , 3 , 224 , 224 )

noised_img = img + torch . randn_like ( img )

all_levels = model ( noised_img , return_all = True )

patches_to_images = nn . Sequential (

nn . Linear ( 512 , 14 * 14 * 3 ),

Rearrange ( 'b (h w) (p1 p2 c) -> b c (h p1) (w p2)' , p1 = 14 , p2 = 14 , h = ( 224 // 14 ))

)

top_level = all_levels [ 7 , :, :, - 1 ] # get the top level embeddings after iteration 6

recon_img = patches_to_images ( top_level )

# do self-supervised learning by denoising

loss = F . mse_loss ( img , recon_img )

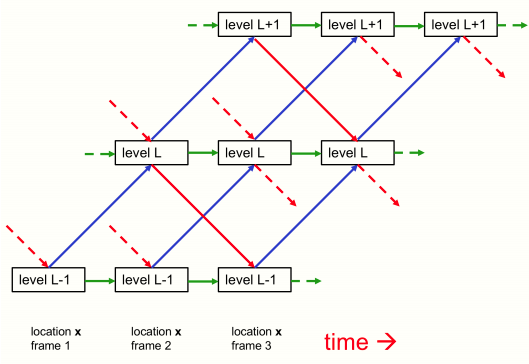

loss . backward ()您可以將列和級別的狀態傳回模型,以繼續您上次停下的地方(也許如果您正在處理慢速視訊的連續幀,如論文中所述)

    import torch
    from glom_pytorch import Glom

    model = Glom(
        dim = 512,
        levels = 6,
        image_size = 224,
        patch_size = 14
    )

    img1 = torch.randn(1, 3, 224, 224)
    img2 = torch.randn(1, 3, 224, 224)
    img3 = torch.randn(1, 3, 224, 224)

    levels1 = model(img1, iters = 12)                   # image 1 for 12 iterations
    levels2 = model(img2, levels = levels1, iters = 10) # image 2 for 10 iterations
    levels3 = model(img3, levels = levels2, iters = 6)  # image 3 for 6 iterations

Thanks go out to Cfoster0 for reviewing the code.

    @misc{hinton2021represent,
        title         = {How to represent part-whole hierarchies in a neural network},
        author        = {Geoffrey Hinton},
        year          = {2021},
        eprint        = {2102.12627},
        archivePrefix = {arXiv},
        primaryClass  = {cs.CV}
    }