self rewarding lm pytorch

0.2.12

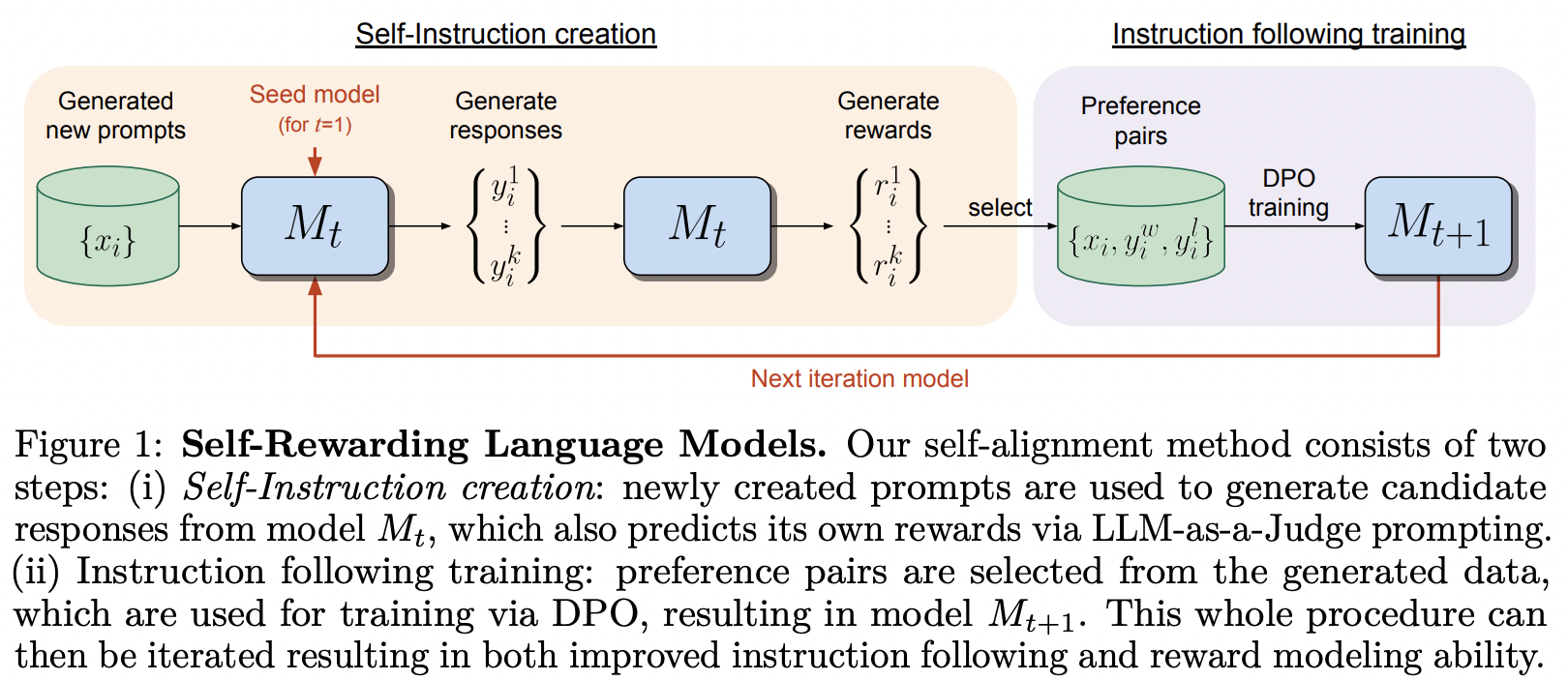

MetaAI 的自我獎勵語言模型中提出的培訓框架的實現

他們確實把 DPO 論文的標題牢記在心。

該庫還包含 SPIN 的實現,Nous Research 的 Teknium 對此表示樂觀。

$ pip install self-rewarding-lm-pytorch import torch

from torch import Tensor

from self_rewarding_lm_pytorch import (

SelfRewardingTrainer ,

create_mock_dataset

)

from x_transformers import TransformerWrapper , Decoder

transformer = TransformerWrapper (

num_tokens = 256 ,

max_seq_len = 1024 ,

attn_layers = Decoder (

dim = 512 ,

depth = 1 ,

heads = 8

)

)

sft_dataset = create_mock_dataset ( 100 , lambda : ( torch . randint ( 0 , 256 , ( 256 ,)), torch . tensor ( 1 )))

prompt_dataset = create_mock_dataset ( 100 , lambda : 'mock prompt' )

def decode_tokens ( tokens : Tensor ) -> str :

decode_token = lambda token : str ( chr ( max ( 32 , token )))

return '' . join ( list ( map ( decode_token , tokens )))

def encode_str ( seq_str : str ) -> Tensor :

return Tensor ( list ( map ( ord , seq_str )))

trainer = SelfRewardingTrainer (

transformer ,

finetune_configs = dict (

train_sft_dataset = sft_dataset ,

self_reward_prompt_dataset = prompt_dataset ,

dpo_num_train_steps = 1000

),

tokenizer_decode = decode_tokens ,

tokenizer_encode = encode_str ,

accelerate_kwargs = dict (

cpu = True

)

)

trainer ( overwrite_checkpoints = True )

# checkpoints after each finetuning stage will be saved to ./checkpointsSPIN 可以如下進行訓練 - 也可以將其添加到微調管道中,如自述文件中的最後一個範例所示。

import torch

from self_rewarding_lm_pytorch import (

SPINTrainer ,

create_mock_dataset

)

from x_transformers import TransformerWrapper , Decoder

transformer = TransformerWrapper (

num_tokens = 256 ,

max_seq_len = 1024 ,

attn_layers = Decoder (

dim = 512 ,

depth = 6 ,

heads = 8

)

)

sft_dataset = create_mock_dataset ( 100 , lambda : ( torch . randint ( 0 , 256 , ( 256 ,)), torch . tensor ( 1 )))

spin_trainer = SPINTrainer (

transformer ,

max_seq_len = 16 ,

train_sft_dataset = sft_dataset ,

checkpoint_every = 100 ,

spin_kwargs = dict (

λ = 0.1 ,

),

)

spin_trainer ()假設您想嘗試自己的獎勵提示(法學碩士法官除外)。首先,您需要匯入RewardConfig ,然後將其作為reward_prompt_config傳遞到訓練器中

# first import

from self_rewarding_lm_pytorch import RewardConfig

# then say you want to try asking the transformer nicely

# reward_regex_template is the string that will be looked for in the LLM response, for parsing out the reward where {{ reward }} is defined as a number

trainer = SelfRewardingTrainer (

transformer ,

...,

self_reward_prompt_config = RewardConfig (

prompt_template = """

Pretty please rate the following user prompt and response

User: {{ prompt }}

Response: {{ response }}

Format your score as follows:

Rating: <rating as integer from 0 - 10>

""" ,

reward_regex_template = """

Rating: {{ reward }}

"""

)

)最後,如果您想嘗試任意順序的微調,您也可以透過將FinetuneConfig實例作為列表傳遞到finetune_configs來獲得這種靈活性

前任。假設你想對交錯 SPIN、外在獎勵和自我獎勵進行研究

這個想法源自於 Teknium 的一個私人不和諧頻道。

# import the configs

from self_rewarding_lm_pytorch import (

SFTConfig ,

SelfRewardDPOConfig ,

ExternalRewardDPOConfig ,

SelfPlayConfig ,

)

trainer = SelfRewardingTrainer (

model ,

finetune_configs = [

SFTConfig (...),

SelfPlayConfig (...),

ExternalRewardDPOConfig (...),

SelfRewardDPOConfig (...),

SelfPlayConfig (...),

SelfRewardDPOConfig (...)

],

...

)

trainer ()

# checkpoints after each finetuning stage will be saved to ./checkpoints 概括抽樣,使其可以在批次中的不同位置進行,固定所有抽樣進行批次。還允許左填充序列,在這種情況下,有些人擁有具有相對位置的變壓器,允許這樣做

處理eos

顯示使用您自己的獎勵提示而不是預設 llm-as-judge 的範例

允許對對進行不同的採樣策略

早停者

sft、spin、自我獎勵 DPO、具有外部獎勵模型的 DPO 任意順序

允許對獎勵進行驗證功能(例如獎勵必須是整數、浮點數、在某個範圍內等)

弄清楚如何最好地處理 kv 快取的不同實現,現在就不用了

自動清除所有檢查點資料夾的環境標誌

@misc { yuan2024selfrewarding ,

title = { Self-Rewarding Language Models } ,

author = { Weizhe Yuan and Richard Yuanzhe Pang and Kyunghyun Cho and Sainbayar Sukhbaatar and Jing Xu and Jason Weston } ,

year = { 2024 } ,

eprint = { 2401.10020 } ,

archivePrefix = { arXiv } ,

primaryClass = { cs.CL }

} @article { Chen2024SelfPlayFC ,

title = { Self-Play Fine-Tuning Converts Weak Language Models to Strong Language Models } ,

author = { Zixiang Chen and Yihe Deng and Huizhuo Yuan and Kaixuan Ji and Quanquan Gu } ,

journal = { ArXiv } ,

year = { 2024 } ,

volume = { abs/2401.01335 } ,

url = { https://api.semanticscholar.org/CorpusID:266725672 }

} @article { Rafailov2023DirectPO ,

title = { Direct Preference Optimization: Your Language Model is Secretly a Reward Model } ,

author = { Rafael Rafailov and Archit Sharma and Eric Mitchell and Stefano Ermon and Christopher D. Manning and Chelsea Finn } ,

journal = { ArXiv } ,

year = { 2023 } ,

volume = { abs/2305.18290 } ,

url = { https://api.semanticscholar.org/CorpusID:258959321 }

} @inproceedings { Guo2024DirectLM ,

title = { Direct Language Model Alignment from Online AI Feedback } ,

author = { Shangmin Guo and Biao Zhang and Tianlin Liu and Tianqi Liu and Misha Khalman and Felipe Llinares and Alexandre Rame and Thomas Mesnard and Yao Zhao and Bilal Piot and Johan Ferret and Mathieu Blondel } ,

year = { 2024 } ,

url = { https://api.semanticscholar.org/CorpusID:267522951 }

}