lumiere pytorch

0.0.24

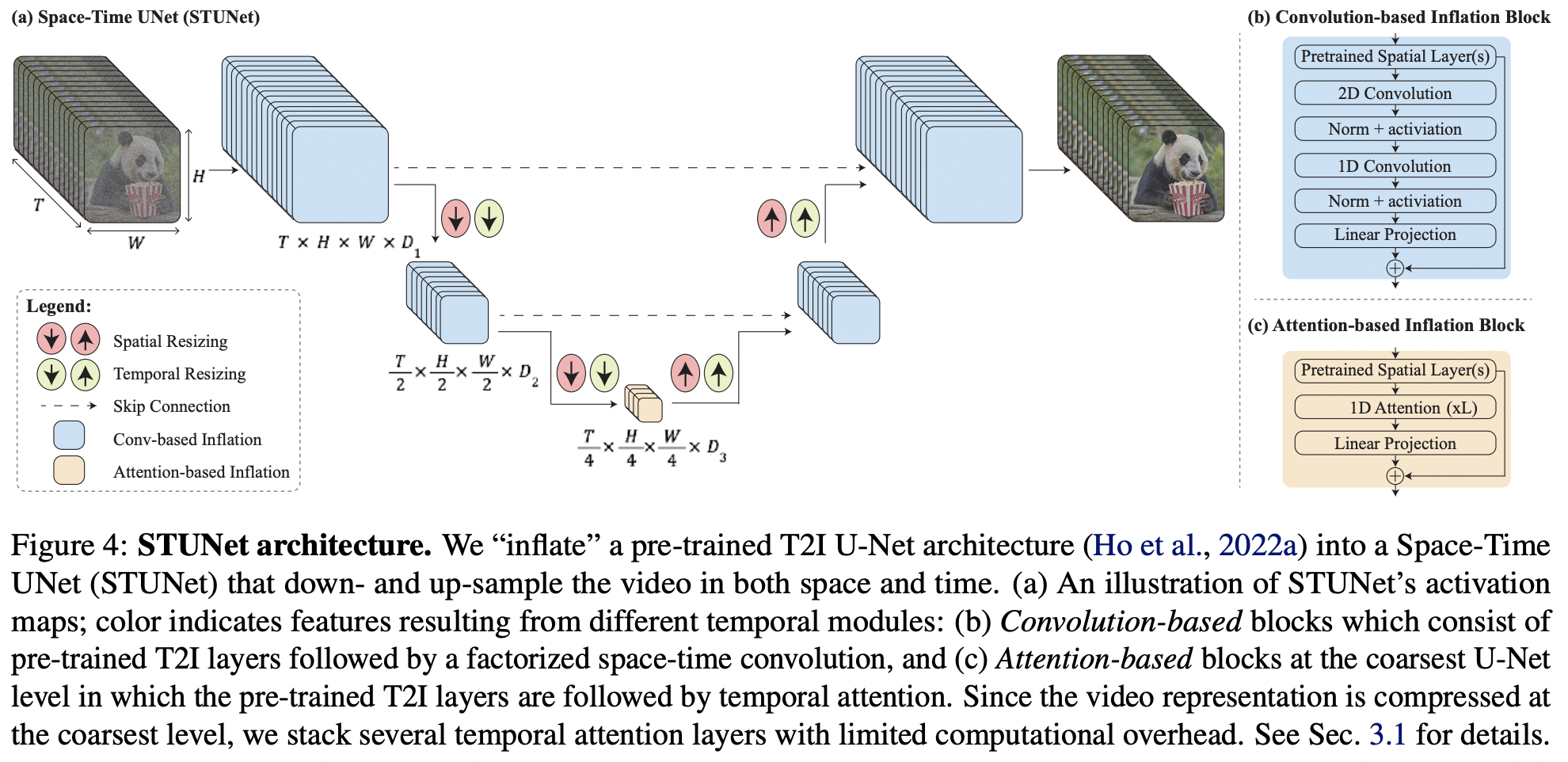

Implementation of Lumiere, SOTA text-to-video generation from Google Deepmind, in Pytorch

Yannic's paper review

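Since this paper is mostly just a few key ideas on top of a text-to-image model, this repository will take it a step further and extend the new Karras U-net to video.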
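As a rough illustration of what extending an image model to video can look like, the sketch below wraps a 2D convolution (standing in for a pretrained image layer) with a 1D convolution over the frame axis. `InflatedConv` and its arguments are hypothetical names for this example and are not part of `lumiere-pytorch`. The identity initialization is an assumption, chosen so the wrapper starts out reproducing the image layer frame by frame.

```python
import torch
from torch import nn

class InflatedConv(nn.Module):
    """Hypothetical wrapper: a 2D image conv followed by a 1D conv over frames."""

    def __init__(self, spatial_conv: nn.Conv2d, kernel_size: int = 3):
        super().__init__()
        self.spatial = spatial_conv
        dim = spatial_conv.out_channels
        self.temporal = nn.Conv1d(dim, dim, kernel_size, padding = kernel_size // 2)

        # assumption: start the temporal conv as an identity map,
        # so the output initially equals the per-frame image output
        nn.init.dirac_(self.temporal.weight)
        nn.init.zeros_(self.temporal.bias)

    def forward(self, video):
        b, c, f, h, w = video.shape

        # fold frames into the batch so the image layer sees (b * f, c, h, w)
        x = video.permute(0, 2, 1, 3, 4).reshape(b * f, c, h, w)
        x = self.spatial(x)
        c2, h2, w2 = x.shape[1:]

        # fold spatial positions into the batch so the temporal layer sees (b * h2 * w2, c2, f)
        x = x.reshape(b, f, c2, h2, w2).permute(0, 3, 4, 2, 1).reshape(b * h2 * w2, c2, f)
        x = self.temporal(x)

        # restore (batch, channels, frames, height, width)
        return x.reshape(b, h2, w2, c2, f).permute(0, 3, 4, 1, 2)

spatial = nn.Conv2d(3, 4, 3, padding = 1)
layer = InflatedConv(spatial)

video = torch.randn(2, 3, 5, 8, 8)
out = layer(video)
```

Because the temporal convolution begins as an identity, only the frame-axis weights need to move away from the image model's behaviour during training.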

```bash
$ pip install lumiere-pytorch
```

```python
import torch
from lumiere_pytorch import MPLumiere

from denoising_diffusion_pytorch import KarrasUnet

# the image U-net that will be extended to video
karras_unet = KarrasUnet(
    image_size = 256,
    dim = 8,
    channels = 3,
    dim_max = 768,
)

# wrap the U-net, naming the submodules that the temporal layers attach to
lumiere = MPLumiere(
    karras_unet,
    image_size = 256,
    unet_time_kwarg = 'time',
    conv_module_names = [
        'downs.1',
        'ups.1',
        'downs.2',
        'ups.2',
    ],
    attn_module_names = [
        'mids.0'
    ],
    upsample_module_names = [
        'ups.2',
        'ups.1',
    ],
    downsample_module_names = [
        'downs.1',
        'downs.2'
    ]
)

# (batch, channels, frames, height, width)
noised_video = torch.randn(2, 3, 8, 256, 256)
time = torch.ones(2,)

denoised_video = lumiere(noised_video, time = time)

assert noised_video.shape == denoised_video.shape
```

add all temporal layers

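only expose temporal parameters for learning, freeze everything else

figure out the best way to deal with the time conditioning after temporal downsampling - instead of a pytree transform at the beginning, will probably need to hook into all the modules and inspect the batch sizes

handle intermediate modules that may have an output shape of `(batch, seq, dim)`

following the conclusions of Tero Karras, improvise a variant of the 4 modules with magnitude preservation

test out on imagen-pytorch

look into multi-diffusion and see if it can be turned into some simple wrapper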
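For the item above about exposing only the temporal parameters for learning, a minimal sketch in plain PyTorch could look like the following. It is not taken from this repository: `freeze_all_but`, `ToyBlock`, and the convention that temporal layers carry `temporal` in their parameter names are all assumptions made for illustration.

```python
import torch
from torch import nn

def freeze_all_but(model: nn.Module, keyword: str = 'temporal'):
    """Hypothetical helper: keep gradients only for parameters whose name contains `keyword`."""
    trainable = []
    for name, param in model.named_parameters():
        param.requires_grad = keyword in name
        if param.requires_grad:
            trainable.append(param)
    return trainable

class ToyBlock(nn.Module):
    """Toy stand-in for a pretrained image layer plus a new temporal layer."""

    def __init__(self):
        super().__init__()
        self.spatial = nn.Conv2d(3, 3, 3, padding = 1)
        self.temporal = nn.Conv1d(3, 3, 3, padding = 1)

block = ToyBlock()
temporal_params = freeze_all_but(block)

# only the temporal weights and biases are handed to the optimizer
optimizer = torch.optim.Adam(temporal_params, lr = 1e-4)
```

Passing only the returned list to the optimizer keeps the pretrained image weights untouched even if a gradient were accidentally computed for them.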

```bibtex
@inproceedings{BarTal2024LumiereAS,
    title   = {Lumiere: A Space-Time Diffusion Model for Video Generation},
    author  = {Omer Bar-Tal and Hila Chefer and Omer Tov and Charles Herrmann and Roni Paiss and Shiran Zada and Ariel Ephrat and Junhwa Hur and Yuanzhen Li and Tomer Michaeli and Oliver Wang and Deqing Sun and Tali Dekel and Inbar Mosseri},
    year    = {2024},
    url     = {https://api.semanticscholar.org/CorpusID:267095113}
}
```

```bibtex
@article{Karras2023AnalyzingAI,
    title   = {Analyzing and Improving the Training Dynamics of Diffusion Models},
    author  = {Tero Karras and Miika Aittala and Jaakko Lehtinen and Janne Hellsten and Timo Aila and Samuli Laine},
    journal = {ArXiv},
    year    = {2023},
    volume  = {abs/2312.02696},
    url     = {https://api.semanticscholar.org/CorpusID:265659032}
}
```