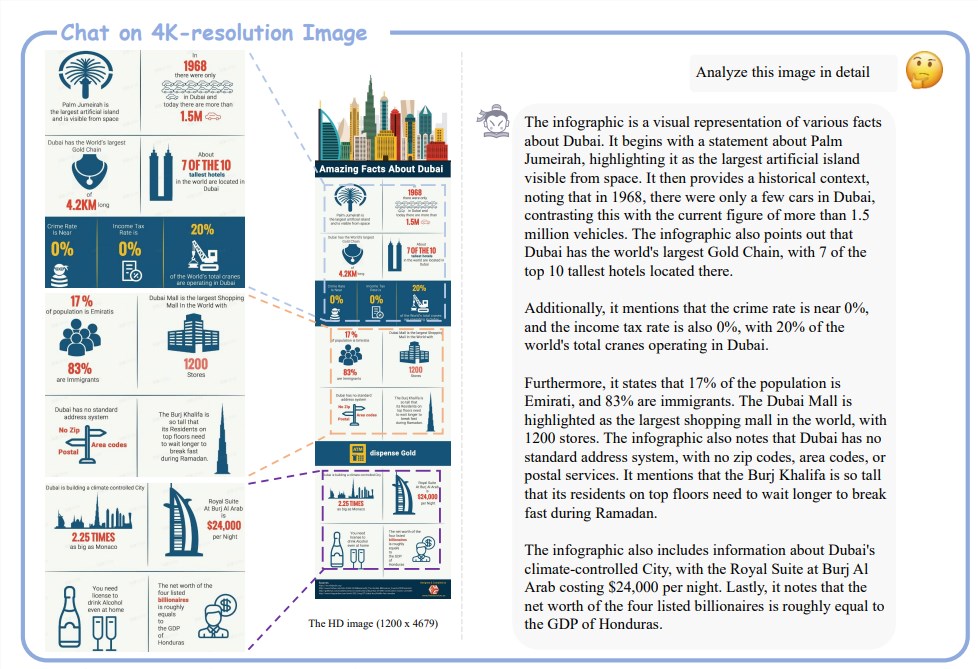

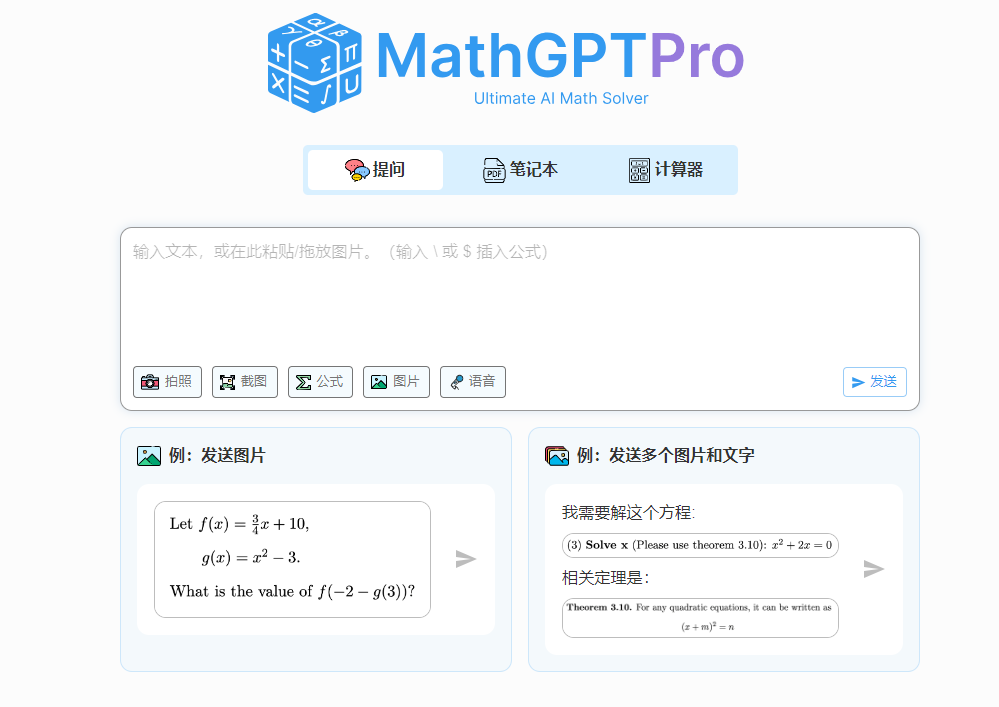

Shusheng·Puyu Lingbi (InternLM-XComposer) multimodal large model upgraded to version 2.5, with longer-context image and video understanding, taking aim at GPT-4V

Recently, the InternLM-XComposer multimodal large model, developed by the Shanghai Artificial Intelligence Laboratory, was upgraded to version 2.5. The new version improves text-image understanding through its strong long-context input and output capabilities.

2024-12-15