ConvNetSharp

ConvNetSharp v0.4.14

ConvNetSharp started as a C# port of ConvNetJS. You can use it to train and evaluate convolutional neural networks (CNNs).

Many thanks to the original author of ConvNetJS (Andrej Karpathy) and to all the contributors!

ConvNetSharp relies on the ManagedCuda library to access NVIDIA's CUDA.

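There are three ways to create a neural network:

| Core.Layers | Flow.Layers | Computation graph |
|---|---|---|
| No computation graph | Layers that create a computation graph behind the scenes | "Pure flow" |
| Network organised by stacking layers | Network organised by stacking layers | "Ops" connected to one another; can implement more complex networks |
| E.g. MnistDemo | E.g. MnistFlowGPUDemo or the Flow version of Classify2DDemo | E.g. ExampleCpuSingle |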

Here is a minimal example that defines a 2-layer neural network and trains it on a single data point:

    using System;
    using ConvNetSharp.Core;
    using ConvNetSharp.Core.Layers.Double;
    using ConvNetSharp.Core.Training.Double;
    using ConvNetSharp.Volume;
    using ConvNetSharp.Volume.Double;

    namespace MinimalExample
    {
        internal class Program
        {
            private static void Main()
            {
                // specifies a 2-layer neural network with one hidden layer of 20 neurons
                var net = new Net<double>();

                // input layer declares size of input. here: 2-D data
                // ConvNetJS works on 3-Dimensional volumes (width, height, depth), but if you're not dealing with images
                // then the first two dimensions (width, height) will always be kept at size 1
                net.AddLayer(new InputLayer(1, 1, 2));

                // declare 20 neurons
                net.AddLayer(new FullyConnLayer(20));

                // declare a ReLU (rectified linear unit non-linearity)
                net.AddLayer(new ReluLayer());

                // declare a fully connected layer that will be used by the softmax layer
                net.AddLayer(new FullyConnLayer(10));

                // declare the linear classifier on top of the previous hidden layer
                net.AddLayer(new SoftmaxLayer(10));

                // forward a random data point through the network
                var x = BuilderInstance.Volume.From(new[] { 0.3, -0.5 }, new Shape(2));

                var prob = net.Forward(x);

                // prob is a Volume. Volumes have a property Weights that stores the raw data, and WeightGradients that stores gradients
                Console.WriteLine("probability that x is class 0: " + prob.Get(0)); // prints e.g. 0.50101

                var trainer = new SgdTrainer(net) { LearningRate = 0.01, L2Decay = 0.001 };
                trainer.Train(x, BuilderInstance.Volume.From(new[] { 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0 }, new Shape(1, 1, 10, 1))); // train the network, specifying that x is class zero

                var prob2 = net.Forward(x);
                Console.WriteLine("probability that x is class 0: " + prob2.Get(0));
                // now prints 0.50374, slightly higher than previous 0.50101: the network's
                // weights have been adjusted by the Trainer to give a higher probability to
                // the class we trained the network with (zero)
            }
        }
    }
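The last layer of the network above is a `SoftmaxLayer`, which is why the values read with `prob.Get(i)` can be interpreted as class probabilities. As a rough illustration of what such a layer computes, here is a minimal softmax sketch in plain C# that does not depend on ConvNetSharp. The `SoftmaxSketch` class and the score values are hypothetical, and ConvNetSharp's own implementation may differ in detail.

```csharp
using System;
using System.Linq;

public static class SoftmaxSketch
{
    // Numerically stable softmax: subtract the largest score before exponentiating
    // so that Math.Exp never overflows.
    public static double[] Softmax(double[] scores)
    {
        var max = scores.Max();
        var exps = scores.Select(s => Math.Exp(s - max)).ToArray();
        var sum = exps.Sum();
        return exps.Select(e => e / sum).ToArray();
    }

    public static void Main()
    {
        // Hypothetical raw scores for 10 classes (not taken from the network above)
        var scores = new[] { 0.2, -0.1, 0.0, 0.4, -0.3, 0.1, 0.0, -0.2, 0.3, 0.05 };
        var prob = Softmax(scores);

        Console.WriteLine("sum of probabilities: " + prob.Sum());
        Console.WriteLine("most likely class: " + Array.IndexOf(prob, prob.Max()));
    }
}
```

The outputs are all positive and sum to one (up to floating-point rounding), and the largest score always receives the largest probability.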

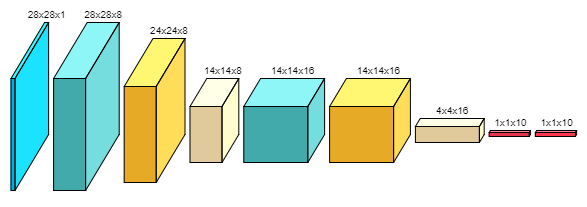

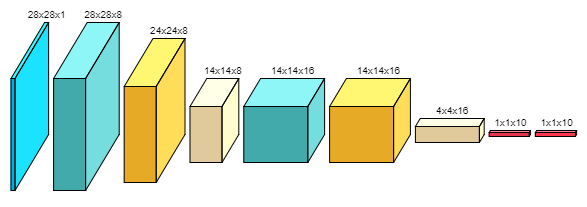

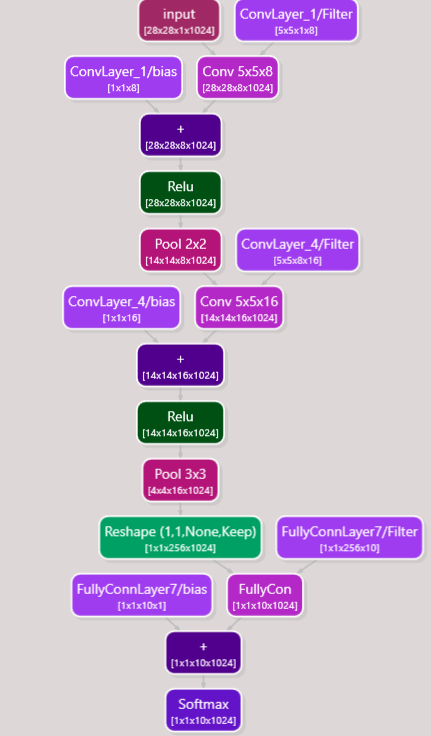
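Each convolution or pooling layer changes the spatial size of the volume it receives. The sketch below applies the usual output-size formula, floor((input + 2 * pad - field) / stride) + 1, to the layer parameters used in the fluent network definition that follows (24x24x1 input, 5x5 convolutions with stride 1 and pad 2, then 2x2 and 3x3 pooling). `ShapeSketch` and `OutputSize` are hypothetical helpers written for illustration; they are not part of ConvNetSharp, and the formula is the standard one rather than something taken from the library's source.

```csharp
using System;

public static class ShapeSketch
{
    // Output size along one spatial dimension.
    // Integer division equals floor here because all operands are non-negative.
    public static int OutputSize(int input, int field, int stride, int pad)
    {
        return (input + 2 * pad - field) / stride + 1;
    }

    public static void Main()
    {
        var size = 24; // input is 24x24x1

        size = OutputSize(size, 5, 1, 2); // 5x5 convolution, stride 1, pad 2
        Console.WriteLine("after conv 1: " + size + "x" + size + "x8");  // 24x24x8

        size = OutputSize(size, 2, 2, 0); // 2x2 pooling, stride 2, no padding assumed
        Console.WriteLine("after pool 1: " + size + "x" + size + "x8");  // 12x12x8

        size = OutputSize(size, 5, 1, 2); // 5x5 convolution, stride 1, pad 2
        Console.WriteLine("after conv 2: " + size + "x" + size + "x16"); // 12x12x16

        size = OutputSize(size, 3, 3, 0); // 3x3 pooling, stride 3, no padding assumed
        Console.WriteLine("after pool 2: " + size + "x" + size + "x16"); // 4x4x16
    }
}
```

With pad 2 and stride 1, a 5x5 convolution preserves width and height, so only the pooling layers shrink the volume before the fully connected layer.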

A network can also be defined with the fluent API:

    var net = FluentNet<double>.Create(24, 24, 1)
        .Conv(5, 5, 8).Stride(1).Pad(2)
        .Relu()
        .Pool(2, 2).Stride(2)
        .Conv(5, 5, 16).Stride(1).Pad(2)
        .Relu()
        .Pool(3, 3).Stride(3)
        .FullyConn(10)
        .Softmax(10)
        .Build();

To switch to GPU mode:

- Add `GPU` to the namespace: `using ConvNetSharp.Volume.GPU.Single;` or `using ConvNetSharp.Volume.GPU.Double;`
- Add `BuilderInstance<float>.Volume = new ConvNetSharp.Volume.GPU.Single.VolumeBuilder();` or `BuilderInstance<double>.Volume = new ConvNetSharp.Volume.GPU.Double.VolumeBuilder();` at the beginning of your code.

You must have CUDA version 10.0 and cuDNN v7.6.4 (September 27, 2019) for CUDA 10.0 installed. The cuDNN bin path must be referenced in the PATH environment variable.

An MNIST GPU demo is also available.
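To show where the two GPU steps fit in a program, here is a minimal single-precision sketch. It uses only the namespace and builder assignment quoted above plus `Net<float>`; the `GpuSketch` namespace is hypothetical, and the rest of the network is assumed to be declared exactly as in the CPU example with `float` in place of `double`. It needs the ConvNetSharp packages and a working CUDA/cuDNN installation to run.

```csharp
using ConvNetSharp.Core;
using ConvNetSharp.Volume;
using ConvNetSharp.Volume.GPU.Single; // step 1: 'GPU' in the namespace

namespace GpuSketch
{
    internal class Program
    {
        private static void Main()
        {
            // Step 2: select the GPU volume builder at the very beginning,
            // before any volume or layer is created.
            BuilderInstance<float>.Volume = new ConvNetSharp.Volume.GPU.Single.VolumeBuilder();

            // From here on the network is declared as usual, using float instead of double.
            var net = new Net<float>();

            // ... add layers and train as in the CPU example ...
        }
    }
}
```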

With ConvNetSharp.Core, a network can be serialized to JSON and restored from it:

    using ConvNetSharp.Core.Serialization;

    [...]

    // Serialize to json
    var json = net.ToJson();

    // Deserialize from json
    var deserialized = SerializationExtensions.FromJson<double>(json);

With ConvNetSharp.Flow, a network is saved to and loaded from files:

    using ConvNetSharp.Flow.Serialization;

    [...]

    // Serialize to two files: MyNetwork.graphml (graph structure) / MyNetwork.json (volume data)
    net.Save("MyNetwork");

    // Deserialize from files
    var deserialized = SerializationExtensions.Load<double>("MyNetwork", false)[0]; // first element is the network (second element is the cost if it was saved along)
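The Core JSON calls above return and accept a plain string, so persisting a network to disk only needs the standard `System.IO.File` helpers. The following is a minimal sketch under that assumption; the `SerializationSketch` class, the `SaveAndReload` helper and the file name `MyCoreNetwork.json` are hypothetical, and the small network is only there to have something to save.

```csharp
using System;
using System.IO;
using ConvNetSharp.Core;
using ConvNetSharp.Core.Layers.Double;
using ConvNetSharp.Core.Serialization;

public static class SerializationSketch
{
    // Writes a small network to 'path' as JSON, reads it back,
    // and reports whether a network object was restored.
    public static bool SaveAndReload(string path)
    {
        var net = new Net<double>();
        net.AddLayer(new InputLayer(1, 1, 2));
        net.AddLayer(new FullyConnLayer(10));
        net.AddLayer(new SoftmaxLayer(10));

        // Serialize to a JSON string and store it in a file
        File.WriteAllText(path, net.ToJson());

        // Read the file back and rebuild the network from the JSON string
        var restored = SerializationExtensions.FromJson<double>(File.ReadAllText(path));
        return restored != null;
    }

    public static void Main()
    {
        // Hypothetical file name, chosen for this sketch
        Console.WriteLine("restored: " + SaveAndReload("MyCoreNetwork.json"));
    }
}
```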