FLASH pytorch

0.1.9

線性時間內變壓器質量論文中提出的變壓器變體的實現

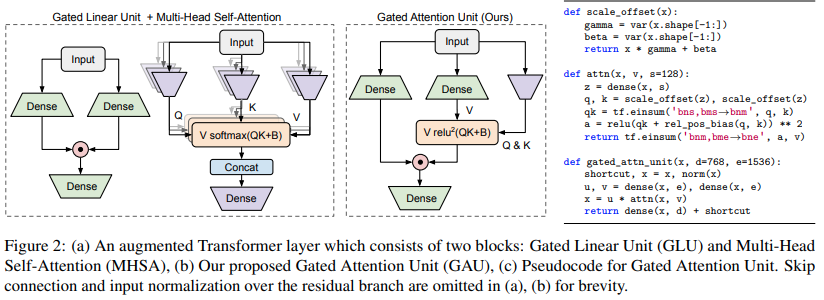

$ pip install FLASH-pytorch本文的主要新穎電路是“門控注意力單元”,他們聲稱它可以取代多頭注意力,同時將其減少到只有一個頭。

它使用 relu 平方活化取代了 softmax,其活化首次出現在 Primer 論文中,並在 ReLA Transformer 中使用了 ReLU。門控風格似乎主要受到 gMLP 的啟發。

import torch

from flash_pytorch import GAU

gau = GAU (

dim = 512 ,

query_key_dim = 128 , # query / key dimension

causal = True , # autoregressive or not

expansion_factor = 2 , # hidden dimension = dim * expansion_factor

laplace_attn_fn = True # new Mega paper claims this is more stable than relu squared as attention function

)

x = torch . randn ( 1 , 1024 , 512 )

out = gau ( x ) # (1, 1024, 512)然後,作者將GAU與 Katharopoulos 線性注意力相結合,使用序列分組來克服自回歸線性注意力的已知問題。

這種二次門控注意力單元與分組線性注意力的組合被他們命名為 FLASH

你也可以很容易地使用它

import torch

from flash_pytorch import FLASH

flash = FLASH (

dim = 512 ,

group_size = 256 , # group size

causal = True , # autoregressive or not

query_key_dim = 128 , # query / key dimension

expansion_factor = 2. , # hidden dimension = dim * expansion_factor

laplace_attn_fn = True # new Mega paper claims this is more stable than relu squared as attention function

)

x = torch . randn ( 1 , 1111 , 512 ) # sequence will be auto-padded to nearest group size

out = flash ( x ) # (1, 1111, 512)最後,您可以使用本文中提到的完整 FLASH 變壓器。這包含論文中提到的所有位置嵌入。絕對位置嵌入使用縮放正弦曲線。 GAU 二次注意力會得到單向 T5 相對位置偏差。最重要的是,GAU 注意力和線性注意力都將被旋轉嵌入(RoPE)。

import torch

from flash_pytorch import FLASHTransformer

model = FLASHTransformer (

num_tokens = 20000 , # number of tokens

dim = 512 , # model dimension

depth = 12 , # depth

causal = True , # autoregressive or not

group_size = 256 , # size of the groups

query_key_dim = 128 , # dimension of queries / keys

expansion_factor = 2. , # hidden dimension = dim * expansion_factor

norm_type = 'scalenorm' , # in the paper, they claimed scalenorm led to faster training at no performance hit. the other option is 'layernorm' (also default)

shift_tokens = True # discovered by an independent researcher in Shenzhen @BlinkDL, this simply shifts half of the feature space forward one step along the sequence dimension - greatly improved convergence even more in my local experiments

)

x = torch . randint ( 0 , 20000 , ( 1 , 1024 ))

logits = model ( x ) # (1, 1024, 20000) $ python train.py @article { Hua2022TransformerQI ,

title = { Transformer Quality in Linear Time } ,

author = { Weizhe Hua and Zihang Dai and Hanxiao Liu and Quoc V. Le } ,

journal = { ArXiv } ,

year = { 2022 } ,

volume = { abs/2202.10447 }

} @software { peng_bo_2021_5196578 ,

author = { PENG Bo } ,

title = { BlinkDL/RWKV-LM: 0.01 } ,

month = { aug } ,

year = { 2021 } ,

publisher = { Zenodo } ,

version = { 0.01 } ,

doi = { 10.5281/zenodo.5196578 } ,

url = { https://doi.org/10.5281/zenodo.5196578 }

} @inproceedings { Ma2022MegaMA ,

title = { Mega: Moving Average Equipped Gated Attention } ,

author = { Xuezhe Ma and Chunting Zhou and Xiang Kong and Junxian He and Liangke Gui and Graham Neubig and Jonathan May and Luke Zettlemoyer } ,

year = { 2022 }

}